Shai Shalev Shwartz

Research Program: Theory of Learning in Dynamical Systems

Dec 22, 2025

Abstract: Modern learning systems increasingly interact with data that evolve over time and depend on hidden internal state. We ask a basic question: when is such a dynamical system learnable from observations alone? This paper proposes a research program for understanding learnability in dynamical systems through the lens of next-token prediction. We argue that learnability in dynamical systems should be studied as a finite-sample question, and be based on the properties of the underlying dynamics rather than the statistical properties of the resulting sequence. To this end, we give a formulation of learnability for stochastic processes induced by dynamical systems, focusing on guarantees that hold uniformly at every time step after a finite burn-in period. This leads to a notion of dynamic learnability which captures how the structure of a system, such as stability, mixing, observability, and spectral properties, governs the number of observations required before reliable prediction becomes possible. We illustrate the framework in the case of linear dynamical systems, showing that accurate prediction can be achieved after finite observation without system identification, by leveraging improper methods based on spectral filtering. We survey the relationship between learning in dynamical systems and classical PAC, online, and universal prediction theories, and suggest directions for studying nonlinear and controlled systems.

More data speeds up training time in learning halfspaces over sparse vectors

Nov 10, 2013

Abstract: The increased availability of data in recent years has led several authors to ask whether it is possible to use data as a \emph{computational} resource. That is, if more data is available, beyond the sample complexity limit, is it possible to use the extra examples to speed up the computation time required to perform the learning task? We give the first positive answer to this question for a \emph{natural supervised learning problem}: we consider agnostic PAC learning of halfspaces over $3$-sparse vectors in $\{-1,1,0\}^n$. This class is inefficiently learnable using $O\left(n/\epsilon^2\right)$ examples. Our main contribution is a novel, non-cryptographic, methodology for establishing computational-statistical gaps, which allows us to show that, under a widely believed assumption that refuting random $\mathrm{3CNF}$ formulas is hard, it is impossible to efficiently learn this class using only $O\left(n/\epsilon^2\right)$ examples. We further show that under stronger hardness assumptions, even $O\left(n^{1.499}/\epsilon^2\right)$ examples do not suffice. On the other hand, we show a new algorithm that learns this class efficiently using $\tilde{\Omega}\left(n^2/\epsilon^2\right)$ examples. This formally establishes the tradeoff between sample and computational complexity for a natural supervised learning problem.

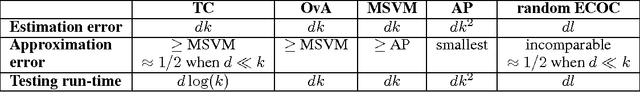

Multiclass Learning Approaches: A Theoretical Comparison with Implications

Jun 01, 2012

Abstract: We theoretically analyze and compare the following five popular multiclass classification methods: One vs. All, All Pairs, Tree-based classifiers, Error Correcting Output Codes (ECOC) with randomly generated code matrices, and Multiclass SVM. In the first four methods, the classification is based on a reduction to binary classification. We consider the case where the binary classifier comes from a class of VC dimension $d$, and in particular from the class of halfspaces over $\mathbb{R}^d$. We analyze both the estimation error and the approximation error of these methods. Our analysis reveals interesting conclusions of practical relevance, regarding the success of the different approaches under various conditions. Our proof technique employs tools from VC theory to analyze the \emph{approximation error} of hypothesis classes. This is in sharp contrast to most, if not all, previous uses of VC theory, which only deal with estimation error.
