Setareh Rahimi Taghanaki

Wearable-based Classification of Running Styles with Deep Learning

Sep 23, 2021

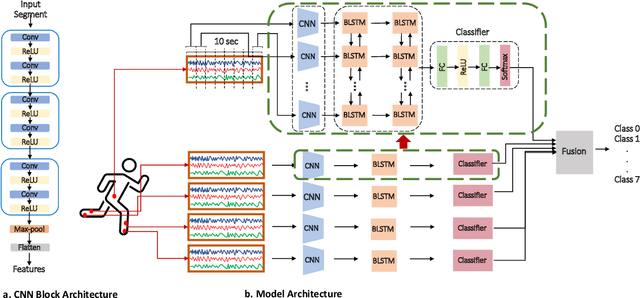

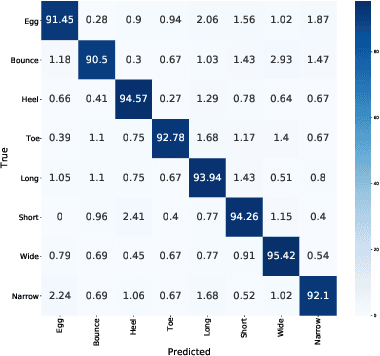

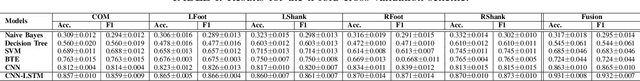

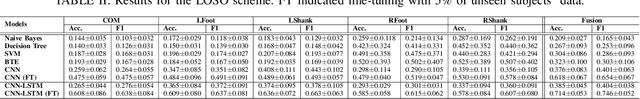

Abstract: Automatic classification of running styles can enable runners to obtain feedback with the aim of optimizing performance in terms of minimizing energy expenditure, fatigue, and risk of injury. To develop a system capable of classifying running styles using wearables, we collect a dataset from 10 healthy runners performing 8 different pre-defined running styles. Five wearable devices are used to record accelerometer data from different parts of the lower body, namely the left and right foot, the left and right medial tibia, and the lower back. Using the collected dataset, we develop a deep learning solution consisting of a Convolutional Neural Network and a Long Short-Term Memory network, which first automatically extracts effective features and then learns temporal relationships. Score-level fusion is used to aggregate the classification results from the different sensors. Experiments show that the proposed model is capable of automatically classifying different running styles in a subject-dependent manner, outperforming several classical machine learning methods (following manual feature extraction) and a convolutional neural network baseline. Moreover, our study finds that subject-independent classification of running styles is considerably more challenging than a subject-dependent scheme, indicating a high level of personalization in such running styles. Finally, we demonstrate that fine-tuning the model with as few as 5% of the subject-specific samples yields a considerable performance boost.
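
To make the score-level fusion step concrete, the following is a minimal sketch, not the authors' implementation. It assumes each of the five sensors yields a vector of class probabilities over the 8 running styles and that fusion is a plain average followed by an argmax; the abstract does not state the fusion rule, and the function name `fuse_scores` and the example scores are hypothetical.

```python
from typing import List, Sequence, Tuple


def fuse_scores(sensor_scores: Sequence[Sequence[float]]) -> Tuple[List[float], int]:
    """Average per-sensor class scores and pick the highest-scoring class.

    Averaging is an assumption; the abstract only says "score-level fusion".
    """
    n_sensors = len(sensor_scores)
    n_classes = len(sensor_scores[0])
    # Mean score per class across all sensors.
    fused = [
        sum(scores[c] for scores in sensor_scores) / n_sensors
        for c in range(n_classes)
    ]
    # Index of the class with the largest fused score.
    predicted = max(range(n_classes), key=lambda c: fused[c])
    return fused, predicted


# Hypothetical per-sensor probabilities over 8 running styles.
sensor_scores = [
    [0.10, 0.60, 0.05, 0.05, 0.05, 0.05, 0.05, 0.05],  # left foot
    [0.10, 0.50, 0.10, 0.10, 0.05, 0.05, 0.05, 0.05],  # right foot
    [0.40, 0.30, 0.05, 0.05, 0.05, 0.05, 0.05, 0.05],  # left medial tibia
    [0.20, 0.40, 0.10, 0.10, 0.05, 0.05, 0.05, 0.05],  # right medial tibia
    [0.30, 0.30, 0.10, 0.10, 0.05, 0.05, 0.05, 0.05],  # lower back
]

fused, predicted = fuse_scores(sensor_scores)
print(predicted)
```

In this example one sensor (left medial tibia) disagrees with the others, but the averaged scores still favour the class that most sensors support, which is the usual motivation for fusing at the score level rather than relying on a single sensor.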

Self-supervised Wearable-based Activity Recognition by Learning to Forecast Motion

Oct 21, 2020

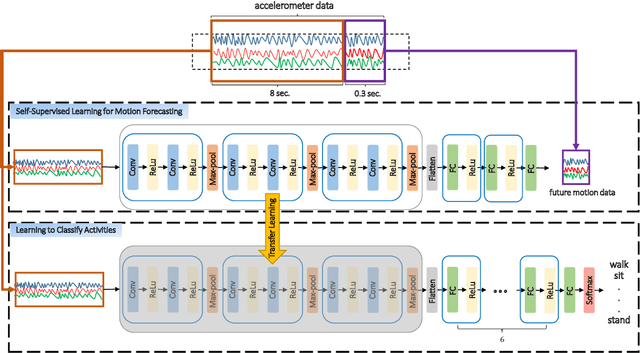

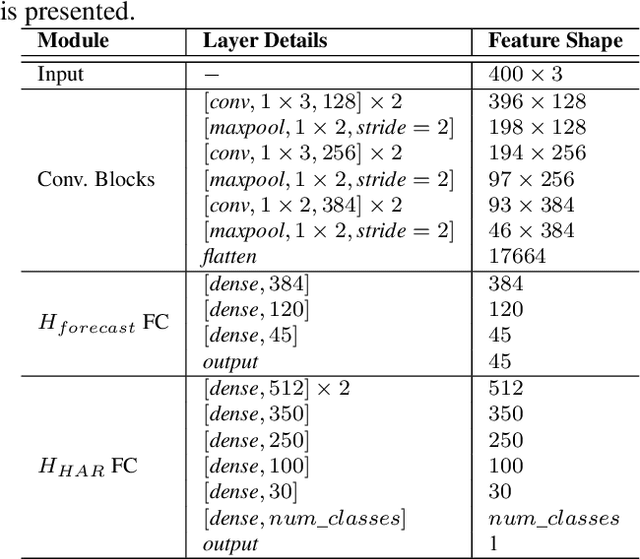

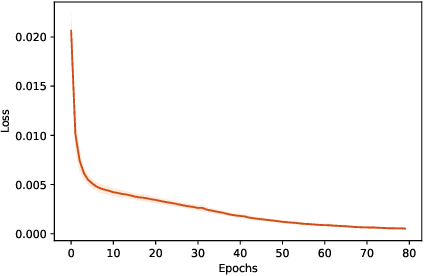

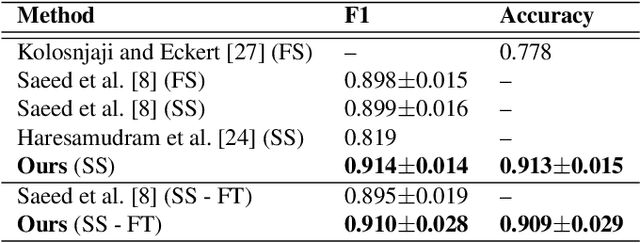

Abstract: We propose the use of self-supervised learning for human activity recognition. Our proposed solution consists of two steps. First, representations of unlabeled input signals are learned by training a deep convolutional neural network to predict the values of accelerometer signals at future time-steps. Then, we freeze the convolution blocks of this network and transfer their weights to a second network aimed at human activity recognition. For this task, we add a number of fully connected layers to the end of the frozen network and train only the added layers on labeled accelerometer signals to classify human activities. We evaluate the performance of our method on two publicly available human activity datasets: UCI HAR and MotionSense. The results show that our self-supervised approach outperforms existing supervised and self-supervised methods, setting new state-of-the-art results.
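
The forecasting pretext task in the first step needs no labels because the targets come from the signal itself. The sketch below illustrates one way such training pairs could be built from a single accelerometer channel; it is an assumption-laden illustration, not the authors' pipeline. The function name `make_forecast_pairs` and the `window`, `horizon`, and `stride` parameters are hypothetical, and the abstract does not specify window lengths or forecast horizons.

```python
from typing import List, Sequence, Tuple


def make_forecast_pairs(
    signal: Sequence[float], window: int, horizon: int, stride: int = 1
) -> List[Tuple[List[float], List[float]]]:
    """Slice an unlabeled signal into (past window, future values) pairs.

    Each pair serves as (input, target) for a forecasting network, so the
    supervision comes from the signal itself rather than activity labels.
    """
    pairs = []
    # Last start index that still leaves room for a full window plus horizon.
    last_start = len(signal) - window - horizon
    for start in range(0, last_start + 1, stride):
        past = list(signal[start:start + window])
        future = list(signal[start + window:start + window + horizon])
        pairs.append((past, future))
    return pairs


# Hypothetical single-axis accelerometer samples.
signal = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5]
pairs = make_forecast_pairs(signal, window=3, horizon=2)
for past, future in pairs:
    print(past, "->", future)
```

A network trained on such pairs would then have its convolutional blocks frozen and reused for the classification step, with only the newly added fully connected layers trained on labeled data.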
