Sebastian Köhler

SATversary: Adversarial Attacks on Satellite Fingerprinting

Jun 06, 2025

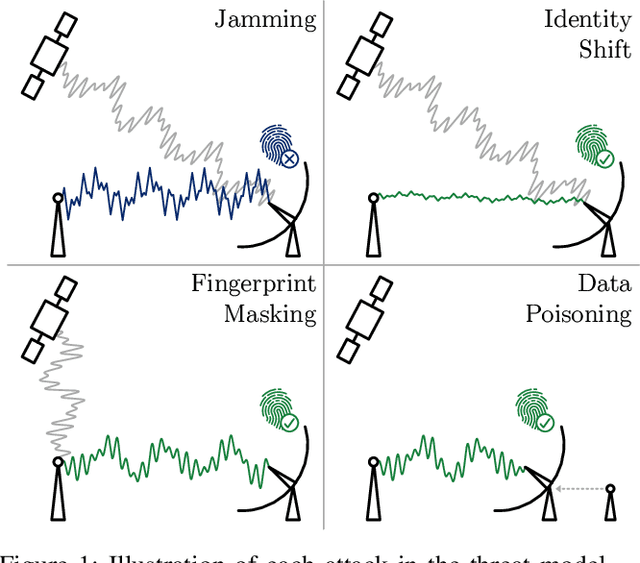

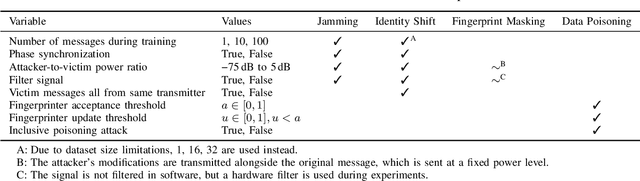

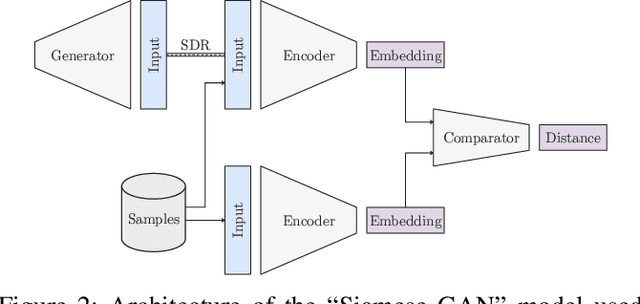

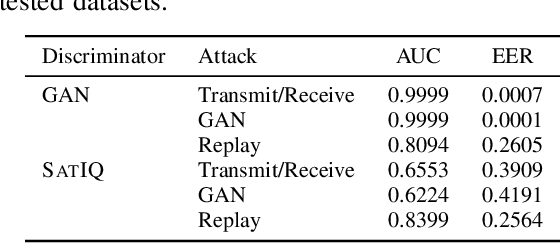

Abstract: As satellite systems become increasingly vulnerable to physical layer attacks via SDRs, novel countermeasures are being developed to protect critical systems, particularly those lacking cryptographic protection, or those which cannot be upgraded to support modern cryptography. Among these is transmitter fingerprinting, which provides mechanisms by which communication can be authenticated by looking at characteristics of the transmitter, expressed as impairments on the signal. Previous works show that fingerprinting can be used to classify satellite transmitters, or authenticate them against SDR-equipped attackers under simple replay scenarios. In this paper we build upon this by looking at attacks directly targeting the fingerprinting system, with an attacker optimizing for maximum impact in jamming, spoofing, and dataset poisoning attacks, and demonstrate these attacks on the SatIQ system designed to authenticate Iridium transmitters. We show that an optimized jamming signal can cause a 50% error rate with attacker-to-victim ratios as low as -30 dB (far less power than traditional jamming) and demonstrate successful identity forgery during spoofing attacks, with an attacker removing their own transmitter's fingerprint from messages. We also present a data poisoning attack, enabling persistent message spoofing by altering the data used to authenticate incoming messages to include the fingerprint of the attacker's transmitter. Finally, we show that our model trained to optimize spoofing attacks can also be used to detect spoofing and replay attacks, even when it has never seen the attacker's transmitter before. Furthermore, this technique works even when the training dataset includes only a single transmitter, enabling fingerprinting to be used to protect small constellations and even individual satellites, providing additional protection where it is needed the most.
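To put the -30 dB attacker-to-victim ratio quoted above in linear terms, the standard decibel conversion can be sketched as follows. This is only the textbook formula, not code from the paper; the function names are illustrative.

```python
def db_to_power_ratio(db: float) -> float:
    """Convert a decibel value to a linear power ratio: 10^(dB/10)."""
    return 10 ** (db / 10)


def db_to_amplitude_ratio(db: float) -> float:
    """Convert a decibel value to a linear amplitude ratio: 10^(dB/20)."""
    return 10 ** (db / 20)


# Attacker-to-victim ratio from the abstract, in dB.
ratio_db = -30.0

power = db_to_power_ratio(ratio_db)
amplitude = db_to_amplitude_ratio(ratio_db)

print(f"power ratio: {power:.4f}")          # attacker power relative to victim power
print(f"amplitude ratio: {amplitude:.4f}")  # attacker amplitude relative to victim amplitude
```

Under this conversion, -30 dB corresponds to the attacker's signal arriving with one thousandth of the victim signal's power.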

Signal Injection Attacks against CCD Image Sensors

Aug 19, 2021

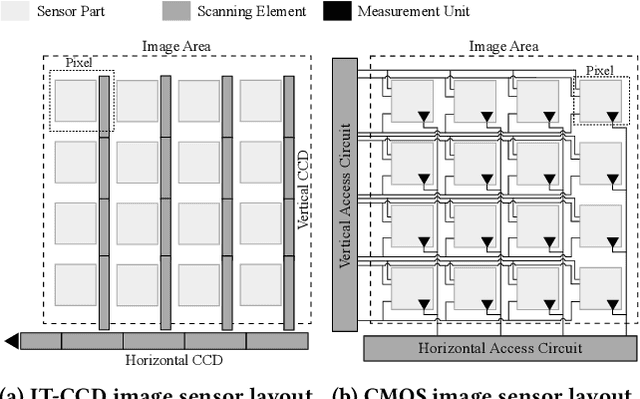

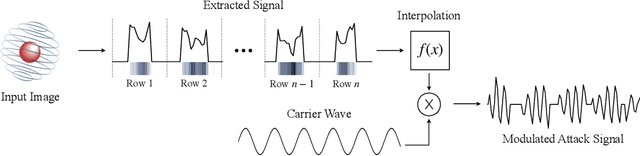

Abstract: Since cameras have become a crucial part of many safety-critical systems and applications, such as autonomous vehicles and surveillance, a large body of academic and non-academic work has shown attacks against their main component - the image sensor. However, these attacks are limited to coarse-grained and often suspicious injections because light is used as an attack vector. Furthermore, due to the nature of optical attacks, they require line of sight between the adversary and the target camera. In this paper, we present a novel post-transducer signal injection attack against CCD image sensors, as they are used in professional, scientific, and even military settings. We show how electromagnetic emanation can be used to manipulate the image information captured by a CCD image sensor with granularity down to the brightness of individual pixels. We study the feasibility of our attack and then demonstrate its effects in the scenario of automatic barcode scanning. Our results indicate that the injected distortion can disrupt automated vision-based intelligent systems.

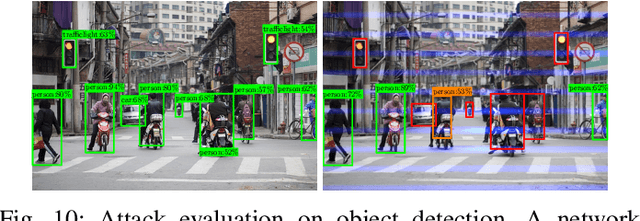

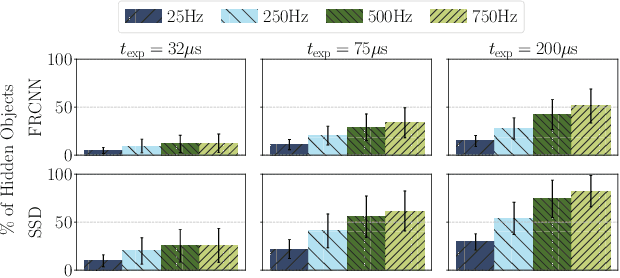

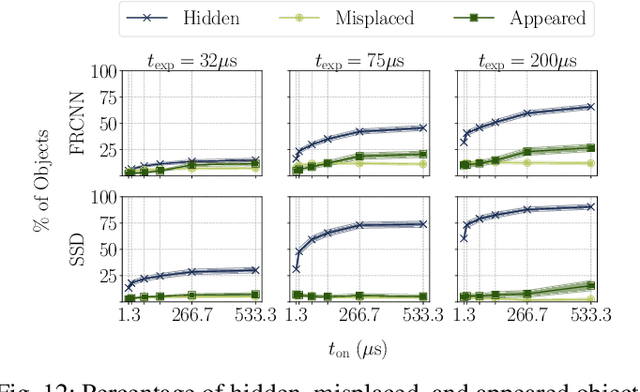

They See Me Rollin': Inherent Vulnerability of the Rolling Shutter in CMOS Image Sensors

Jan 25, 2021

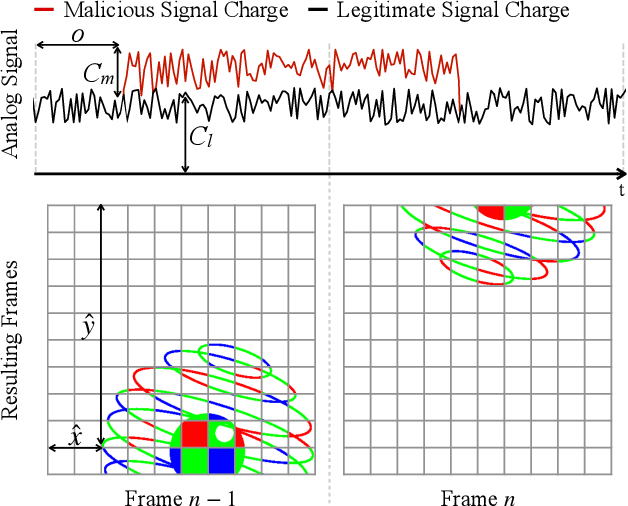

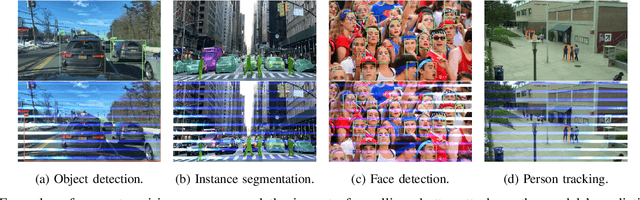

Abstract: Cameras have become a fundamental component of vision-based intelligent systems. As a balance between production costs and image quality, most modern cameras use Complementary Metal-Oxide Semiconductor image sensors that implement an electronic rolling shutter mechanism, where image rows are captured consecutively rather than all at once. In this paper, we describe how the electronic rolling shutter can be exploited using a bright, modulated light source (e.g., an inexpensive, off-the-shelf laser) to inject fine-grained image disruptions. These disruptions substantially affect camera-based computer vision systems, where high-frequency data is crucial in extracting informative features from objects. We study the fundamental factors affecting a rolling shutter attack, such as environmental conditions, angle of the incident light, laser-to-camera distance, and aiming precision. We demonstrate how these factors affect the intensity of the injected distortion and how an adversary can take them into account by modeling the properties of the camera. We introduce a general pipeline of a practical attack, which consists of: (i) profiling several properties of the target camera and (ii) partially simulating the attack to find distortions that satisfy the adversary's goal. Then, we instantiate the attack to the scenario of object detection, where the adversary's goal is to maximally disrupt the detection of objects in the image. We show that the adversary can modulate the laser to hide up to 75% of objects perceived by state-of-the-art detectors while controlling the amount of perturbation to keep the attack inconspicuous. Our results indicate that rolling shutter attacks can substantially reduce the performance and reliability of vision-based intelligent systems.
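The row-by-row capture described above is what lets a short light pulse affect only a band of rows. The following is a minimal sketch of that timing relationship under a deliberately simplified model, not the paper's pipeline: each row is assumed to start exposing a fixed interval after the previous one, and a row counts as affected if its exposure window overlaps the pulse. The function name and all parameter values are hypothetical.

```python
def illuminated_rows(num_rows, row_time_us, exposure_us, pulse_start_us, pulse_end_us):
    """Return indices of rows whose exposure window overlaps a light pulse.

    Simplified rolling-shutter model (an assumption, not taken from the paper):
    row r is exposed during [r * row_time_us, r * row_time_us + exposure_us).
    """
    rows = []
    for r in range(num_rows):
        start = r * row_time_us
        end = start + exposure_us
        # Two half-open intervals overlap when each starts before the other ends.
        if start < pulse_end_us and end > pulse_start_us:
            rows.append(r)
    return rows


# Hypothetical sensor: 10 rows, 10 us between row starts, 10 us exposure per row.
# Hypothetical pulse: light on from t = 25 us to t = 45 us.
affected = illuminated_rows(
    num_rows=10, row_time_us=10, exposure_us=10,
    pulse_start_us=25, pulse_end_us=45,
)
print(affected)  # [2, 3, 4]
```

In this toy model, shifting or shortening the pulse moves or narrows the affected band, which is the intuition behind modulating the light source to control where the distortion lands.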

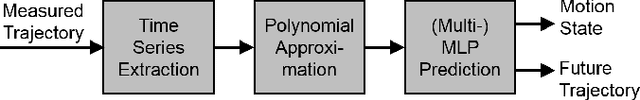

Intentions of Vulnerable Road Users - Detection and Forecasting by Means of Machine Learning

Mar 09, 2018

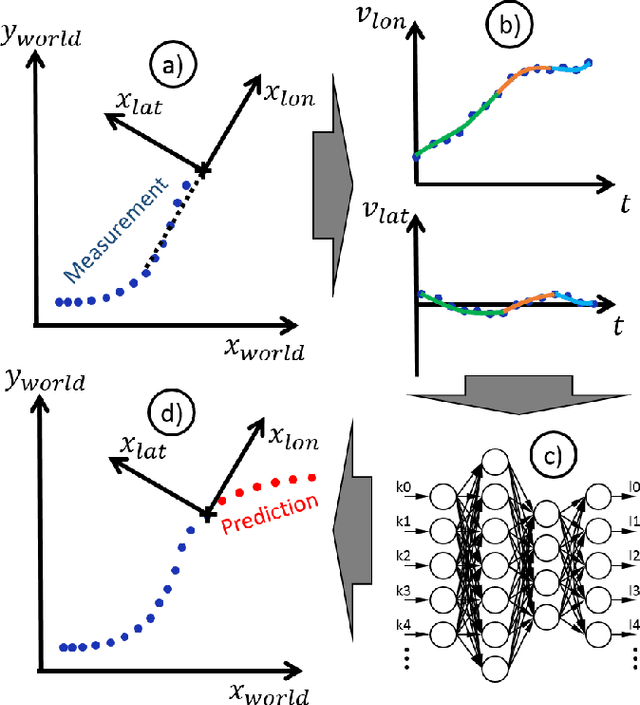

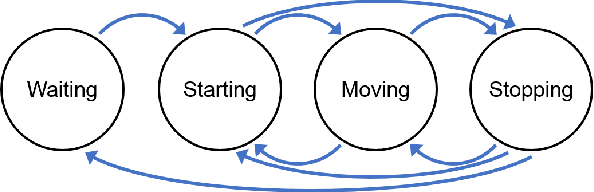

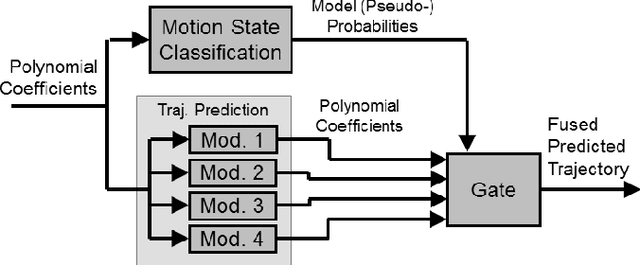

Abstract: Avoiding collisions with vulnerable road users (VRUs) using sensor-based early recognition of critical situations is one of the manifold opportunities provided by the current development in the field of intelligent vehicles. As pedestrians and cyclists in particular are very agile and have a variety of movement options, modeling their behavior in traffic scenes is a challenging task. In this article we propose movement models based on machine learning methods, in particular artificial neural networks, in order to classify the current motion state and to predict the future trajectory of VRUs. Both model types are also combined to enable the application of specifically trained motion predictors based on a continuously updated pseudo-probabilistic state classification. Furthermore, the architecture is used to evaluate motion-specific physical models for starting and stopping and video-based pedestrian motion classification. A comprehensive dataset consisting of 1068 pedestrian and 494 cyclist scenes acquired at an urban intersection is used for optimization, training, and evaluation of the different models. The results show substantially higher classification rates and the ability to recognize motion state changes earlier with the machine learning approaches compared to interacting multiple model (IMM) Kalman filtering. The trajectory prediction quality is also improved for all kinds of test scenes, especially when starting and stopping motions are included. Here, 37% and 41% lower position errors were achieved on average, respectively.
