Sean Anderson

Explainable Deep Convolutional Multi-Type Anomaly Detection

Nov 14, 2025

Abstract: Most explainable anomaly detection methods identify anomalies but cannot differentiate the type of anomaly. Furthermore, they often require costly training and maintenance of a separate model for each object category. This lack of specificity is a significant research gap, as identifying the type of anomaly (e.g., "Crack" vs. "Scratch") is crucial for accurate diagnosis that facilitates cost-saving operational decisions across diverse application domains. While some recent large-scale Vision-Language Models (VLMs) have begun to address this, they are computationally intensive and memory-heavy, restricting their use in real-time or embedded systems. We propose MultiTypeFCDD, a simple and lightweight convolutional framework designed as a practical alternative for explainable multi-type anomaly detection. MultiTypeFCDD uses only image-level labels to learn and produce multi-channel heatmaps, where each channel is trained to correspond to a specific anomaly type. The model functions as a single, unified framework capable of differentiating anomaly types across multiple object categories, eliminating the need to train and manage separate models for each object category. We evaluated our proposed method on the Real-IAD dataset, where it delivers results competitive with state-of-the-art complex models at a significantly reduced parameter count and inference time. This makes it a practical solution for real-world applications where computational resources are tightly constrained.
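To make the idea of one heatmap channel per anomaly type concrete, the sketch below shows one way such an output could be read at inference time. It is an illustration under stated assumptions, not the method from the paper: the mean pooling, the `threshold` value, and the names `channel_scores` and `predict_type` are all hypothetical, and the abstract does not say how MultiTypeFCDD turns heatmaps into a decision.

```python
def channel_scores(heatmaps):
    """Mean activation per channel; heatmaps is indexed [channel][row][col].

    Mean pooling is an assumption made for this sketch.
    """
    scores = []
    for channel in heatmaps:
        values = [v for row in channel for v in row]
        scores.append(sum(values) / len(values))
    return scores


def predict_type(heatmaps, type_names, threshold=0.5):
    """Return the anomaly type of the strongest channel, or "normal".

    The threshold is a hypothetical parameter, not taken from the paper.
    """
    scores = channel_scores(heatmaps)
    best = max(range(len(scores)), key=scores.__getitem__)
    if scores[best] < threshold:
        return "normal", scores
    return type_names[best], scores


# Toy 2x2 heatmaps for two anomaly types (made-up values).
type_names = ["crack", "scratch"]
heatmaps = [
    [[0.9, 0.8], [0.7, 0.6]],  # "crack" channel: strong response
    [[0.1, 0.0], [0.2, 0.1]],  # "scratch" channel: weak response
]
label, scores = predict_type(heatmaps, type_names)
print(label, scores)
```

Because each channel is also a spatial map, the same output that yields the type label can be shown to an operator as a per-type localization of the defect.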

Explainable AI for medical imaging: Explaining pneumothorax diagnoses with Bayesian Teaching

Jun 08, 2021

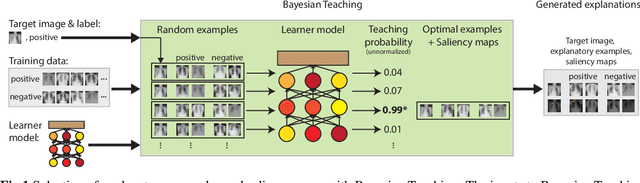

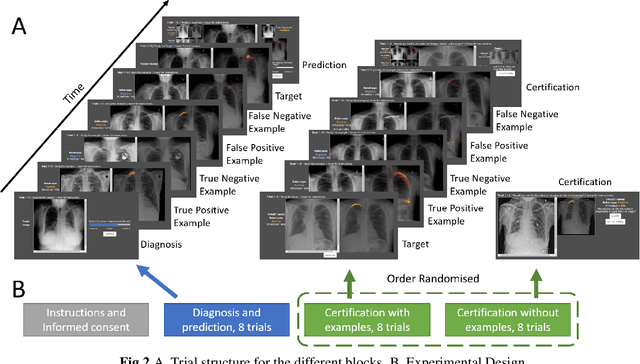

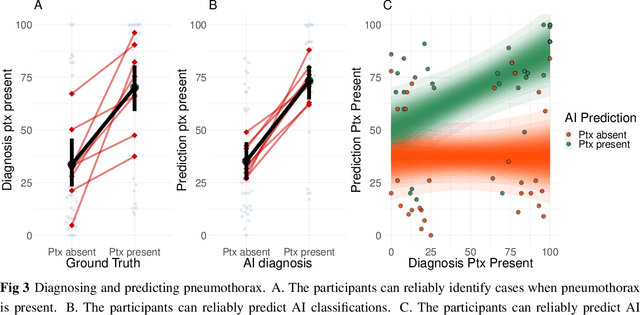

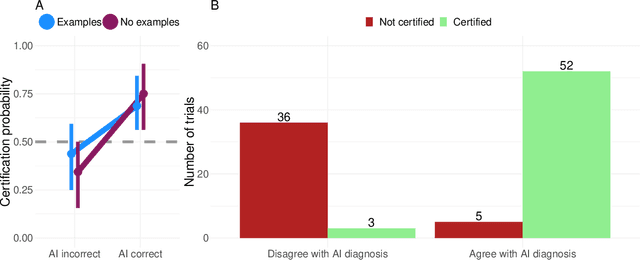

Abstract: Limited expert time is a key bottleneck in medical imaging. Due to advances in image classification, AI can now serve as decision support for medical experts, with the potential for great gains in radiologist productivity and, by extension, public health. However, these gains are contingent on building and maintaining experts' trust in the AI agents. Explainable AI may build such trust by helping medical experts to understand the AI decision processes behind diagnostic judgements. Here we introduce and evaluate explanations based on Bayesian Teaching, a formal account of explanation rooted in the cognitive science of human learning. We find that medical experts exposed to explanations generated by Bayesian Teaching successfully predict the AI's diagnostic decisions and are more likely to certify the AI for cases when the AI is correct than when it is wrong, indicating appropriate trust. These results show that Explainable AI can be used to support human-AI collaboration in medical imaging.

Batch Nonlinear Continuous-Time Trajectory Estimation as Exactly Sparse Gaussian Process Regression

Dec 01, 2014

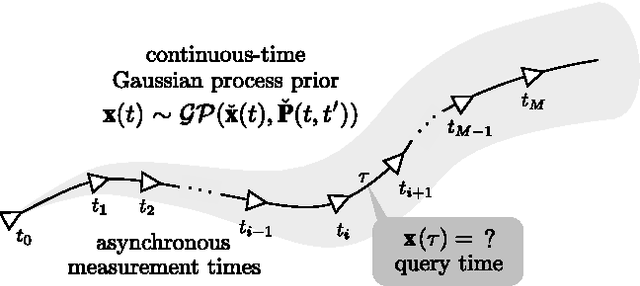

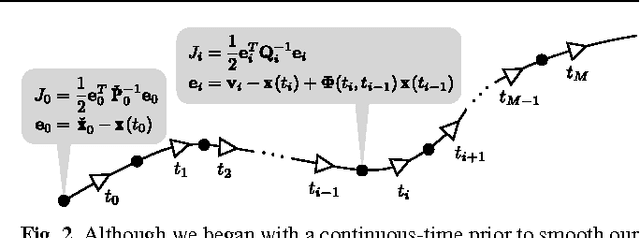

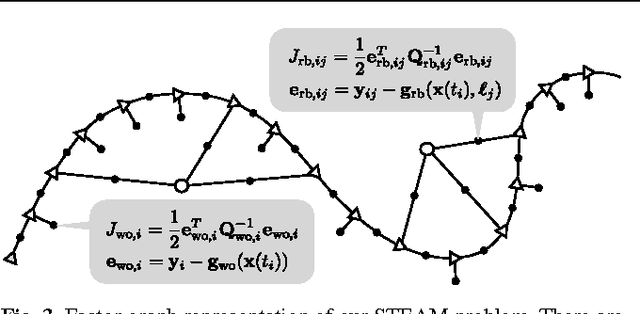

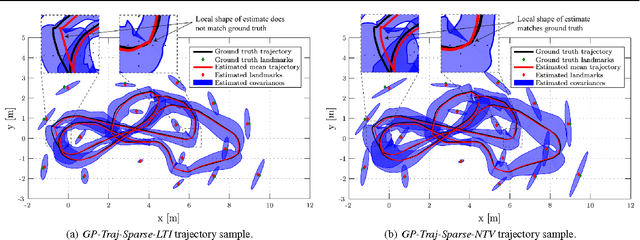

Abstract: In this paper, we revisit batch state estimation through the lens of Gaussian process (GP) regression. We consider continuous-discrete estimation problems wherein a trajectory is viewed as a one-dimensional GP, with time as the independent variable. Our continuous-time prior can be defined by any nonlinear, time-varying stochastic differential equation driven by white noise; this allows the possibility of smoothing our trajectory estimates using a variety of vehicle dynamics models (e.g., 'constant-velocity'). We show that this class of prior results in a kernel matrix (i.e., covariance matrix between all pairs of measurement times) whose inverse is exactly sparse (block-tridiagonal) and that this can be exploited to carry out GP regression (and interpolation) very efficiently. When the prior is based on a linear, time-varying stochastic differential equation and the measurement model is also linear, this GP approach is equivalent to classical, discrete-time smoothing (at the measurement times); when a nonlinearity is present, we iterate over the whole trajectory to maximize accuracy. We test the approach experimentally on a simultaneous trajectory estimation and mapping problem using a mobile robot dataset.
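The sparsity claim can be checked on the simplest prior of this kind. The sketch below assumes a scalar Wiener process (white noise integrated once), whose kernel is K(t, t') = min(t, t'); this is a stand-in chosen for illustration, not the vehicle models used in the paper. With a scalar state the block-tridiagonal structure reduces to an ordinary tridiagonal matrix. The measurement times and the helper names are made up, and exact rational arithmetic is used so that the zeros are exact rather than approximate.

```python
from fractions import Fraction


def wiener_kernel(times):
    """Covariance of a scalar Wiener process: K[i][j] = min(t_i, t_j)."""
    return [[Fraction(min(a, b)) for b in times] for a in times]


def invert(matrix):
    """Gauss-Jordan inversion in exact rational arithmetic."""
    n = len(matrix)
    # Augment with the identity: [K | I] is reduced to [I | K^-1].
    aug = [list(row) + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [v - factor * p for v, p in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def is_tridiagonal(matrix):
    """True if every entry more than one step off the diagonal is zero."""
    n = len(matrix)
    return all(matrix[i][j] == 0
               for i in range(n) for j in range(n) if abs(i - j) > 1)


times = [1, 2, 4, 7, 11]  # hypothetical, unevenly spaced measurement times
K = wiener_kernel(times)
K_inv = invert(K)

print(is_tridiagonal(K))      # the kernel matrix itself is dense
print(is_tridiagonal(K_inv))  # its inverse is exactly tridiagonal
```

The kernel matrix couples every pair of times, yet each row of its inverse only involves neighbouring times. That banded structure is what lets a solver work in time linear in the number of measurement times instead of cubic.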
