Sareh Rowlands

Centre for Environmental Intelligence, University of Exeter, Exeter, UK; Department of Computer Science, Faculty of Environment, Science and Economy, University of Exeter, Exeter, UK

Bridging Domain Gaps for Fine-Grained Moth Classification Through Expert-Informed Adaptation and Foundation Model Priors

Aug 27, 2025

Abstract: Labelling images of Lepidoptera (moths) from automated camera systems is vital for understanding insect declines. However, accurate species identification is challenging due to domain shifts between curated images and noisy field imagery. We propose a lightweight classification approach, combining limited expert-labelled field data with knowledge distillation from the high-performance BioCLIP2 foundation model into a ConvNeXt-tiny architecture. Experiments on 101 Danish moth species from AMI camera systems demonstrate that BioCLIP2 substantially outperforms other methods and that our distilled lightweight model achieves comparable accuracy with significantly reduced computational cost. These insights offer practical guidelines for the development of efficient insect monitoring systems and bridging domain gaps for fine-grained classification.

Automated classification of natural habitats using ground-level imagery

Aug 26, 2025

Abstract: Accurate classification of terrestrial habitats is critical for biodiversity conservation, ecological monitoring, and land-use planning. Several habitat classification schemes are in use, typically based on analysis of satellite imagery with validation by field ecologists. Here we present a methodology for classification of habitats based solely on ground-level imagery (photographs), offering improved validation and the ability to classify habitats at scale (for example using citizen-science imagery). In collaboration with Natural England, a public sector organisation responsible for nature conservation in England, this study develops a classification system that applies deep learning to ground-level habitat photographs, categorising each image into one of 18 classes defined by the 'Living England' framework. Images were pre-processed using resizing, normalisation, and augmentation; re-sampling was used to balance classes in the training data and enhance model robustness. We developed and fine-tuned a DeepLabV3-ResNet101 classifier to assign a habitat class label to each photograph. Using five-fold cross-validation, the model demonstrated strong overall performance across 18 habitat classes, with accuracy and F1-scores varying between classes. Across all folds, the model achieved a mean F1-score of 0.61, with visually distinct habitats such as Bare Soil, Silt and Peat (BSSP) and Bare Sand (BS) reaching values above 0.90, and mixed or ambiguous classes scoring lower. These findings demonstrate the potential of this approach for ecological monitoring. Ground-level imagery is readily obtained, and accurate computational methods for habitat classification based on such data have many potential applications. To support use by practitioners, we also provide a simple web application that classifies uploaded images using our model.

Distributed Black-box Attack against Image Classification Cloud Services

Nov 02, 2022

Abstract: Black-box adversarial attacks can fool image classifiers into misclassifying images without requiring access to model structure and weights. Recently proposed black-box attacks can achieve a success rate of more than 95% after fewer than 1,000 queries. The question then arises of whether black-box attacks have become a real threat against IoT devices that rely on cloud APIs to achieve image classification. To shed some light on this, note that prior research has primarily focused on increasing the success rate and reducing the number of required queries. However, another crucial factor for black-box attacks against cloud APIs is the time required to perform the attack. This paper applies black-box attacks directly to cloud APIs rather than to local models, thereby avoiding multiple mistakes made in prior research. Further, we exploit load balancing to enable distributed black-box attacks that can reduce the attack time by a factor of about five for both local search and gradient estimation methods.

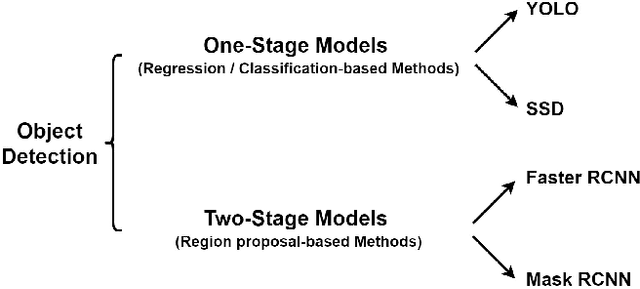

Adversarial Detection: Attacking Object Detection in Real Time

Sep 16, 2022

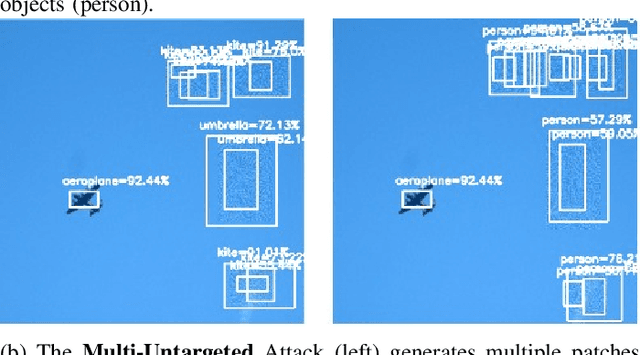

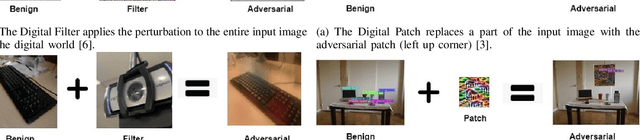

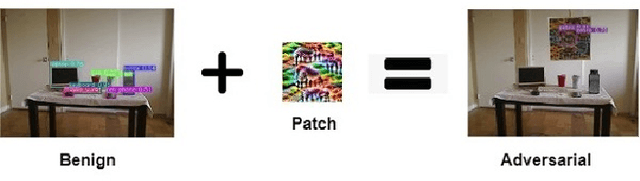

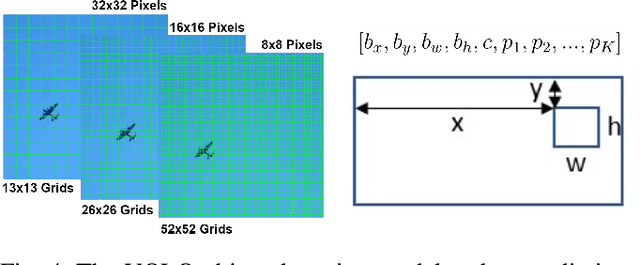

Abstract: Intelligent robots rely on object detection models to perceive the environment. Following advances in deep learning security, it has been revealed that object detection models are vulnerable to adversarial attacks. However, prior research primarily focuses on attacking static images or offline videos. Therefore, it is still unclear whether such attacks could jeopardize real-world robotic applications in dynamic environments. This paper bridges this gap by presenting the first real-time online attack against object detection models. We devise three attacks that fabricate bounding boxes for nonexistent objects at desired locations. The attacks achieve a success rate of about 90% within about 20 iterations. The demo video is available at: https://youtu.be/zJZ1aNlXsMU.

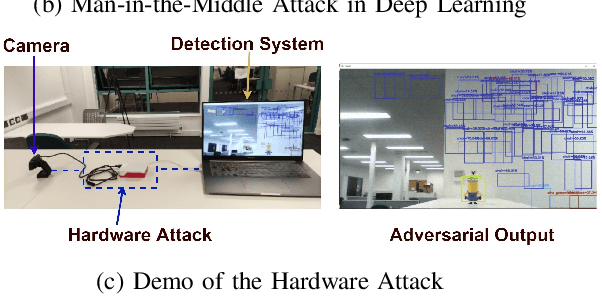

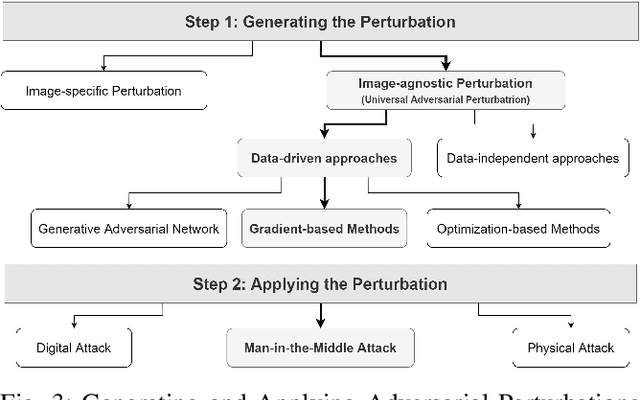

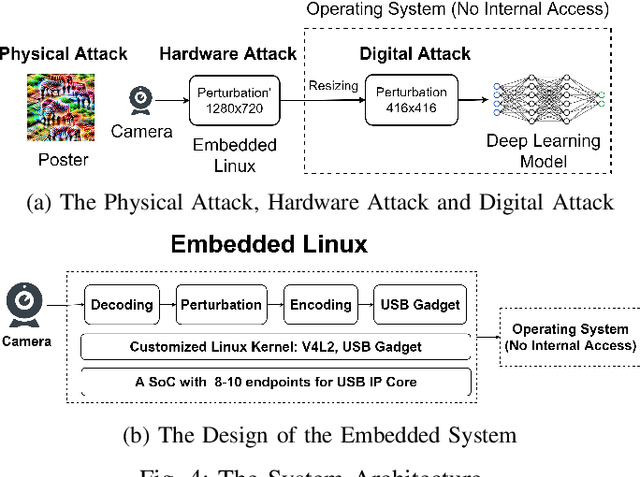

Man-in-the-Middle Attack against Object Detection Systems

Aug 15, 2022

Abstract: Is deep learning secure for robots? As embedded systems gain access to more powerful CPUs and GPUs, deep-learning-enabled object detection systems have become pervasive in robotic applications. Meanwhile, prior research has revealed that deep learning models are vulnerable to adversarial attacks. Does this put real-world robots at risk? Our research borrows the idea of the Man-in-the-Middle attack from cryptography to attack an object detection system. Our experimental results show that we can generate a strong Universal Adversarial Perturbation (UAP) within one minute and then use the perturbation to attack a detection system via the Man-in-the-Middle attack. Our findings raise a serious concern over the applications of deep learning models in safety-critical systems such as autonomous driving.
