Sangwook Baek

Lightweight Image Enhancement Network for Mobile Devices Using Self-Feature Extraction and Dense Modulation

May 02, 2022

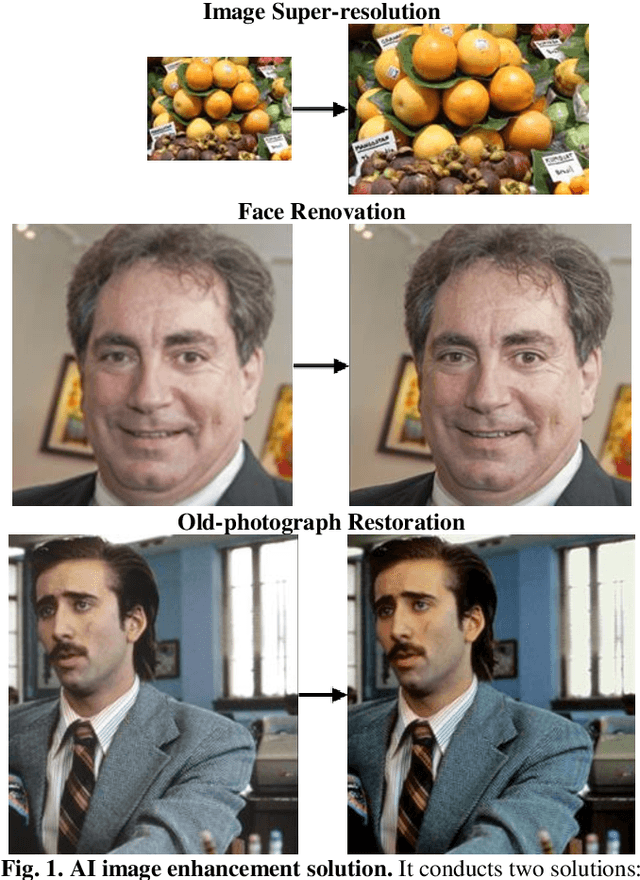

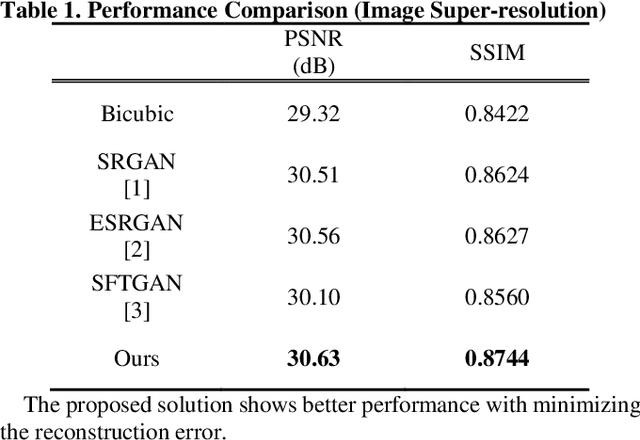

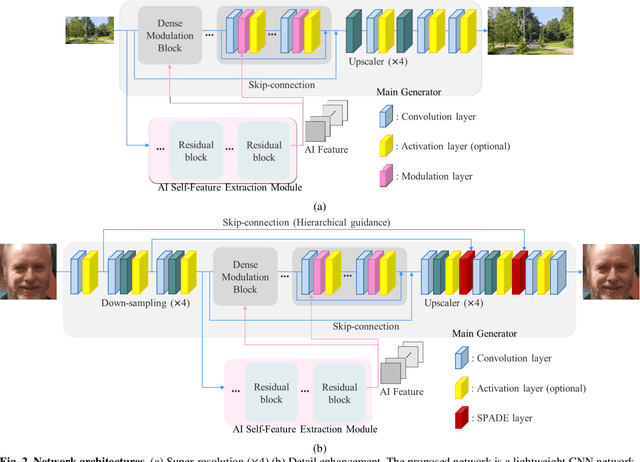

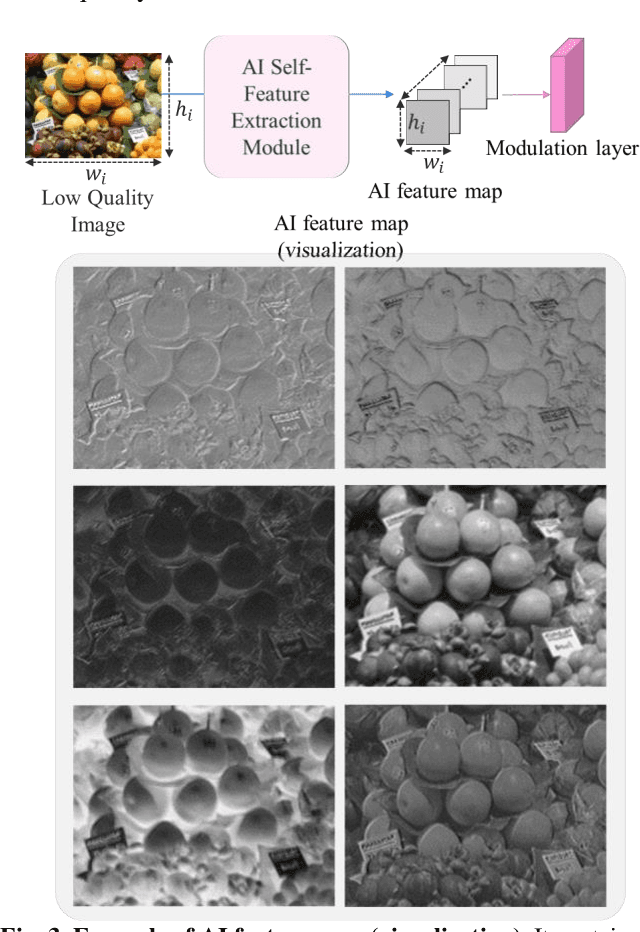

Abstract: Convolutional neural network (CNN) based image enhancement methods such as super-resolution and detail enhancement have achieved remarkable performance. However, the large number of operations (including convolutions) and parameters in these networks demands high computing power and large memory resources, which limits on-device applications. A lightweight image enhancement network should restore details, texture, and structural information from low-resolution input images while preserving their fidelity. To address these issues, a lightweight image enhancement network is proposed. The proposed network includes a self-feature extraction module, which produces modulation parameters from the low-quality image itself and provides them to modulate the features in the network. In addition, a dense modulation block is proposed as the unit block of the network; it uses dense connections of concatenated features applied in its modulation layers. Experimental results demonstrate better performance than existing approaches in both quantitative and qualitative evaluations.
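The abstract does not give the modulation formula. As a rough illustration only, the sketch below assumes an element-wise affine modulation (scale and shift), a common choice in the feature-modulation literature. The function name `modulate` and the sample values are hypothetical, and in the described network the scale and shift would come from the self-feature extraction module rather than being hard-coded.

```python
def modulate(features, scale, shift):
    """Element-wise affine modulation: out[i] = features[i] * scale[i] + shift[i].

    ASSUMPTION: the affine (scale-and-shift) form is not stated in the abstract;
    it is used here only to illustrate what "modulating features" can mean.
    """
    if not (len(features) == len(scale) == len(shift)):
        raise ValueError("features, scale, and shift must have the same length")
    return [f * s + b for f, s, b in zip(features, scale, shift)]


# Hypothetical values standing in for a flattened feature map and for the
# modulation parameters predicted from the low-quality input image.
features = [1.0, 2.0, 3.0]
scale = [1.0, 0.5, 2.0]
shift = [0.0, 1.0, -1.0]

modulated = modulate(features, scale, shift)
print(modulated)
```

With a scale of 1 and a shift of 0, the modulation leaves a feature unchanged, so the predicted parameters only alter the features where the input image calls for it.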

Smoother Network Tuning and Interpolation for Continuous-level Image Processing

Oct 05, 2020

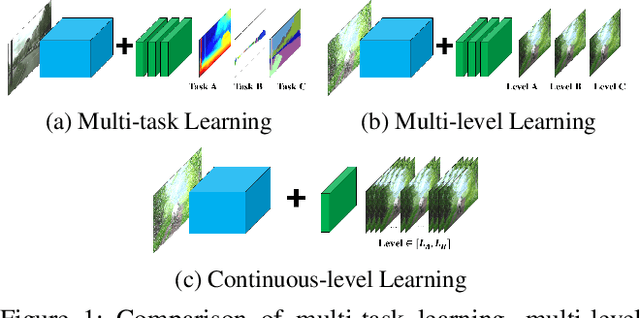

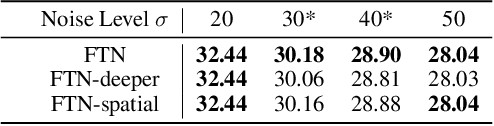

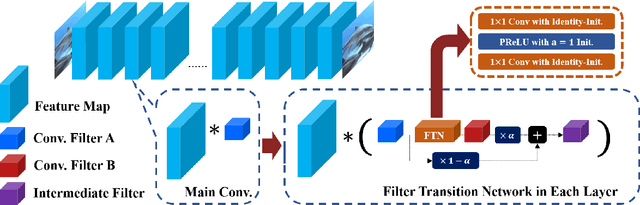

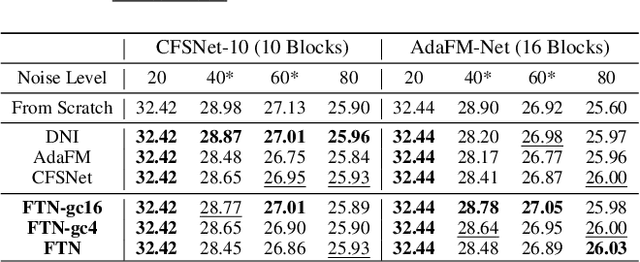

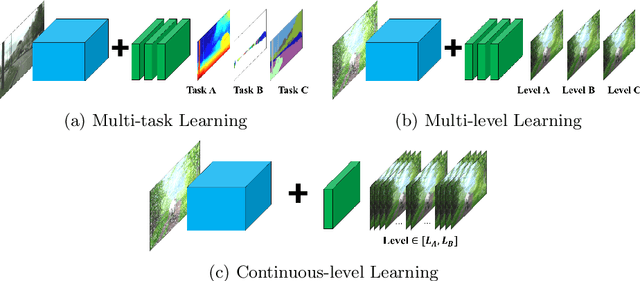

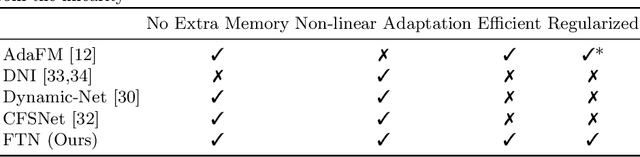

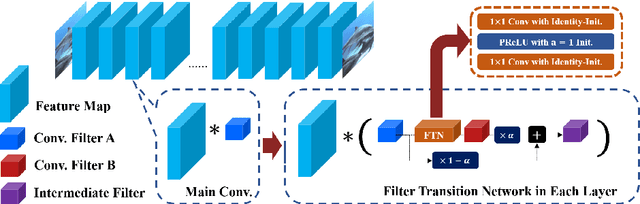

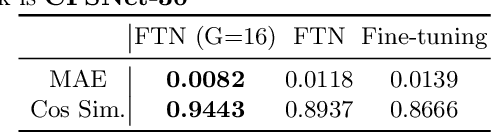

Abstract: In Convolutional Neural Network (CNN) based image processing, most studies propose networks that are optimized for a single level (or a single objective); thus, they underperform on other levels and must be retrained to deliver optimal performance. Using multiple models to cover multiple levels involves very high computational costs. To solve these problems, recent approaches train networks on two different levels and propose their own interpolation methods to enable arbitrary intermediate levels. However, many of them fail to generalize or have certain side effects in practical usage. In this paper, we define these frameworks as network tuning and interpolation and propose a novel module for continuous-level learning, called Filter Transition Network (FTN). This module is structurally smoother than existing ones. Therefore, frameworks with FTN generalize well across various tasks and networks and cause fewer undesirable side effects. For stable learning of FTN, we additionally propose a method to initialize non-linear neural network layers with identity mappings. Extensive results on various image processing tasks indicate that the performance of FTN is comparable across multiple continuous levels, and that FTN is significantly smoother and lighter than other frameworks.
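To make the idea of reaching "arbitrary intermediate levels" concrete, here is a minimal sketch that linearly blends two sets of filter weights with a control value `alpha` in [0, 1]. The linear blend, the function name `interpolate_filters`, and the sample weights are assumptions for illustration; the abstract does not state the interpolation rule used with FTN.

```python
def interpolate_filters(filters_a, filters_b, alpha):
    """Blend two flattened filter-weight lists.

    alpha = 0 returns the weights tuned for the first level,
    alpha = 1 returns the weights tuned for the second level.

    ASSUMPTION: plain linear interpolation, chosen as the simplest example of
    network interpolation; it is not claimed to be the paper's exact method.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if len(filters_a) != len(filters_b):
        raise ValueError("filter lists must have the same length")
    return [(1.0 - alpha) * a + alpha * b for a, b in zip(filters_a, filters_b)]


# Hypothetical weights for two levels (for example, weak and strong denoising).
level_a = [0.0, 2.0]
level_b = [4.0, 6.0]

quarter_way = interpolate_filters(level_a, level_b, 0.25)
print(quarter_way)
```

Because only the weights are blended, a single network can be run at any intermediate level without storing one model per level, which is the cost the abstract aims to avoid.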

Regularized Adaptation for Stable and Efficient Continuous-Level Learning on Image Processing Networks

Mar 12, 2020

Abstract: In Convolutional Neural Network (CNN) based image processing, most studies propose networks that are optimized for a single level (or a single objective); thus, they underperform on other levels and must be retrained to deliver optimal performance. Using multiple models to cover multiple levels involves very high computational costs. To solve these problems, recent approaches train networks on two different levels and propose their own interpolation methods to enable arbitrary intermediate levels. However, many of them fail to adapt to hard tasks or to interpolate smoothly, while others still require large memory and computational cost. In this paper, we propose a novel continuous-level learning framework using a Filter Transition Network (FTN), a non-linear module that easily adapts to new levels and is regularized to prevent undesirable side effects. Additionally, for stable learning of FTN, we propose a new method to initialize non-linear CNNs with identity mappings. Furthermore, FTN is an extremely lightweight module because it is data-independent, which means its cost is not affected by the spatial resolution of the inputs. Extensive results on various image processing tasks indicate that the performance of FTN is stable in terms of adaptation and interpolation, and comparable to that of other, heavier frameworks.
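The abstract mentions initializing non-linear layers with identity mappings but does not describe how. One possible way to get that behavior, shown purely as an assumption, is a square linear layer with identity weights and zero bias, followed by a leaky activation whose negative slope starts at 1. All names below are hypothetical.

```python
def identity_matrix(size):
    """Square weight matrix with ones on the diagonal and zeros elsewhere."""
    return [[1.0 if row == col else 0.0 for col in range(size)] for row in range(size)]


def leaky_activation(value, negative_slope):
    """Leaky ReLU style activation; with negative_slope == 1 it is the identity."""
    return value if value >= 0 else negative_slope * value


def nonlinear_layer(inputs, weight, bias, negative_slope):
    """Linear map followed by a leaky activation.

    ASSUMPTION: this layer shape is illustrative; the paper's actual
    initialization scheme is not given in the abstract.
    """
    linear = [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weight, bias)]
    return [leaky_activation(v, negative_slope) for v in linear]


inputs = [0.5, -2.0, 3.0]
weight = identity_matrix(len(inputs))
bias = [0.0] * len(inputs)

# Negative slope of 1 makes the non-linearity an identity at initialization;
# training could later move it away from 1 to introduce non-linearity.
outputs = nonlinear_layer(inputs, weight, bias, negative_slope=1.0)
print(outputs)
```

Starting from an identity mapping means the module initially passes the original filters through unchanged, so learning begins from the behavior of the already-trained network.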