Samia Bouchafa

DH-PTAM: A Deep Hybrid Stereo Events-Frames Parallel Tracking And Mapping System

Jun 02, 2023

Abstract: This paper presents a robust approach for a visual parallel tracking and mapping (PTAM) system that excels in challenging environments. Our proposed method combines the strengths of heterogeneous multi-modal visual sensors, including stereo event-based and frame-based sensors, in a unified reference frame through a novel spatio-temporal synchronization of stereo visual frames and stereo event streams. To further enhance robustness, we employ deep learning-based feature extraction and description for estimation. We also introduce an end-to-end parallel tracking and mapping optimization layer, complemented by a simple loop-closure algorithm for efficient SLAM behavior. Through comprehensive experiments on both small-scale and large-scale real-world sequences of the VECtor and TUM-VIE benchmarks, our proposed method (DH-PTAM) demonstrates superior robustness and accuracy in adverse conditions compared to state-of-the-art methods. Our implementation's research-based Python API is publicly available on GitHub for further research and development: https://github.com/AbanobSoliman/DH-PTAM.

IBISCape: A Simulated Benchmark for multi-modal SLAM Systems Evaluation in Large-scale Dynamic Environments

Jun 27, 2022

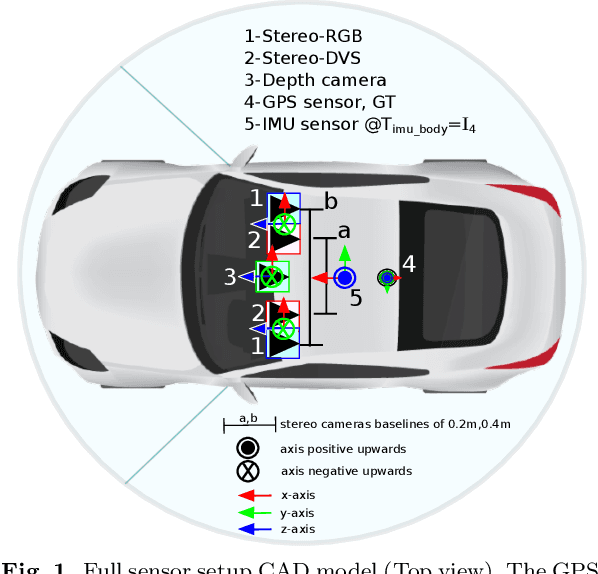

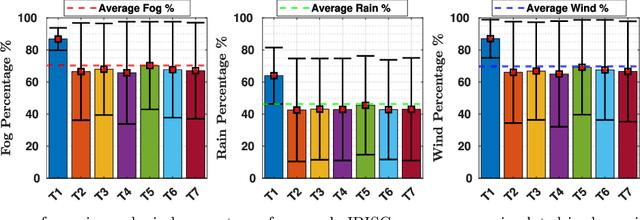

Abstract: The development of high-fidelity SLAM systems depends on their validation on reliable datasets. Towards this goal, we propose IBISCape, a simulated benchmark that includes data synchronization and acquisition APIs for telemetry from heterogeneous sensors: stereo-RGB/DVS, Depth, IMU, and GPS, along with the ground truth scene segmentation and vehicle ego-motion. Our benchmark is built upon the CARLA simulator, whose back-end is the Unreal Engine, rendering highly dynamic scenery that simulates the real world. Moreover, we offer 34 multi-modal datasets suitable for autonomous vehicle navigation, including scenarios for scene understanding evaluation such as accidents, along with a wide range of frame quality based on a dynamic weather simulation class integrated with our APIs. We also introduce the first calibration targets to CARLA maps to solve the unknown distortion parameters problem of CARLA simulated DVS and RGB cameras. Finally, using IBISCape sequences, we evaluate the performance of four ORB-SLAM3 systems (monocular RGB, stereo RGB, Stereo Visual Inertial (SVI), and RGB-D) and the BASALT Visual-Inertial Odometry (VIO) system on various sequences collected in simulated large-scale dynamic environments.

Keywords: benchmark, multi-modal, datasets, Odometry, Calibration, DVS, SLAM

PCA Event-Based Optical Flow for Visual Odometry

May 17, 2021

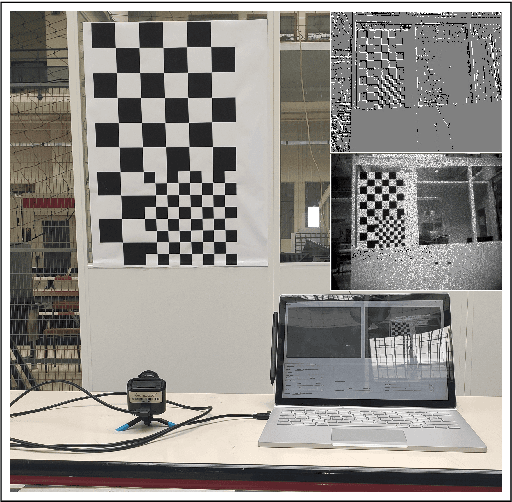

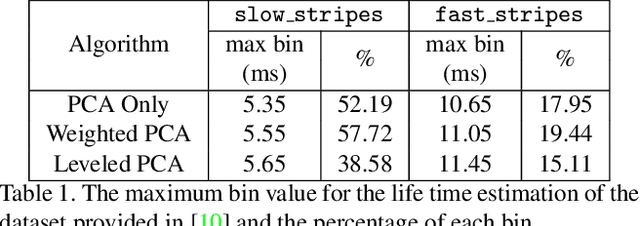

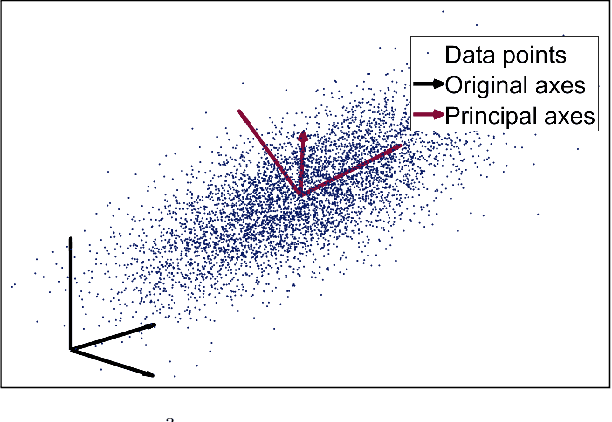

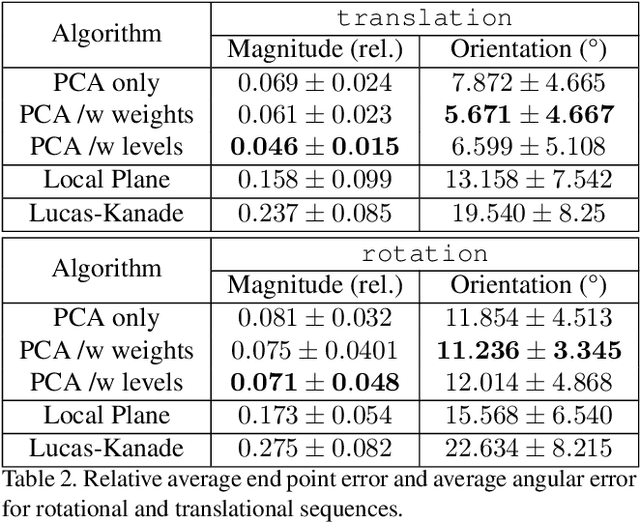

Abstract: With the advent of neuromorphic vision sensors such as event-based cameras, a paradigm shift is required for most computer vision algorithms. Among these algorithms, optical flow estimation is a prime candidate for this process, considering that it is linked to a neuromorphic vision approach. Optical flow is widely used in robotics applications due to its richness and accuracy. We present a Principal Component Analysis (PCA) approach to the problem of event-based optical flow estimation. In this approach, we examine different regularization methods that efficiently enhance the estimation of the optical flow. We show that the best variant of our proposed method, dedicated to the real-time context of visual odometry, is about two times faster than state-of-the-art implementations while significantly improving optical flow accuracy.
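To make the idea of a PCA-based event flow estimate concrete, the following is a minimal sketch of one common formulation: fitting a local plane to events in (x, y, t) space and reading the flow off the plane normal. It is not taken from the paper and omits the regularization the abstract mentions; the function name `pca_plane_flow`, the (x, y, t) array layout, and the tolerance values are assumptions made for illustration.

```python
import numpy as np


def pca_plane_flow(events):
    """Estimate normal flow (vx, vy) in pixels/second from a local event patch.

    `events` is an (N, 3) array of (x, y, t) rows: pixel coordinates and
    timestamps in seconds. This layout is an assumption of the sketch.
    Returns None when the patch does not define a usable plane.
    """
    pts = np.asarray(events, dtype=float)
    centered = pts - pts.mean(axis=0)
    cov = centered.T @ centered / len(pts)

    # eigh returns eigenvalues in ascending order; the eigenvector of the
    # smallest eigenvalue is the direction of least variance, i.e. the
    # normal of the best-fit plane through the events.
    _, eigvecs = np.linalg.eigh(cov)
    nx, ny, nt = eigvecs[:, 0]

    # A normal with no time component means the plane is parallel to the
    # time axis, so no timing gradient can be recovered.
    if abs(nt) < 1e-9:
        return None

    # Rewrite the plane as t = a*x + b*y + c; (a, b) is the gradient of the
    # event timestamps across the patch, in seconds per pixel.
    a, b = -nx / nt, -ny / nt
    g2 = a * a + b * b
    if g2 < 1e-12:
        return None  # flat timing surface: no measurable motion

    # Normal flow is the gradient direction scaled by the inverse of its
    # squared magnitude, giving pixels per second.
    return a / g2, b / g2


if __name__ == "__main__":
    # Synthetic vertical edge sweeping right at 100 px/s over a 5x5 patch.
    xs, ys = np.meshgrid(np.arange(5.0), np.arange(5.0))
    patch = np.column_stack([xs.ravel(), ys.ravel(), xs.ravel() / 100.0])
    print(pca_plane_flow(patch))
```

The sign ambiguity of the eigenvector does not matter here because only ratios of its components are used. A real pipeline would also need outlier rejection and some form of regularization across neighboring patches, which this sketch leaves out.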
