Samet Oymak

Theoretical Insights Into Multiclass Classification: A High-dimensional Asymptotic View

Nov 16, 2020

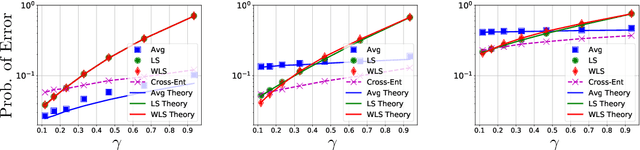

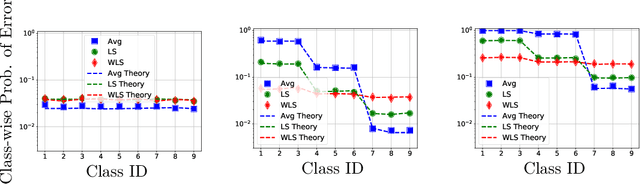

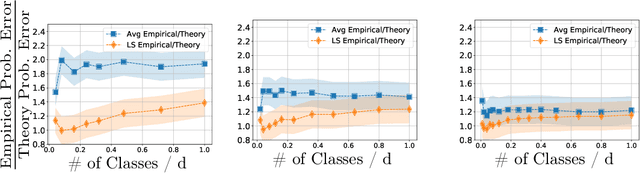

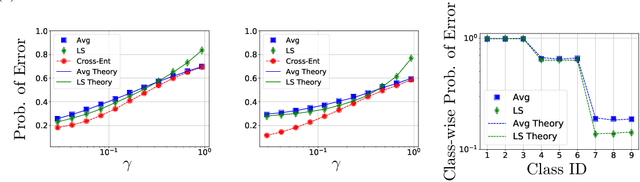

Abstract: Contemporary machine learning applications often involve classification tasks with many classes. Despite their extensive use, a precise understanding of the statistical properties and behavior of classification algorithms is still missing, especially in modern regimes where the number of classes is rather large. In this paper, we take a step in this direction by providing the first asymptotically precise analysis of linear multiclass classification. Our theoretical analysis allows us to precisely characterize how the test error varies over different training algorithms, data distributions, problem dimensions as well as number of classes, inter/intra class correlations and class priors. Specifically, our analysis reveals that the classification accuracy is highly distribution-dependent with different algorithms achieving optimal performance for different data distributions and/or training/feature sizes. Unlike linear regression/binary classification, the test error in multiclass classification relies on intricate functions of the trained model (e.g., correlation between some of the trained weights) whose asymptotic behavior is difficult to characterize. This challenge is already present in simple classifiers, such as those minimizing a square loss. Our novel theoretical techniques allow us to overcome some of these challenges. The insights gained may pave the way for a precise understanding of other classification algorithms beyond those studied in this paper.

Unsupervised Paraphrasing via Deep Reinforcement Learning

Jul 05, 2020

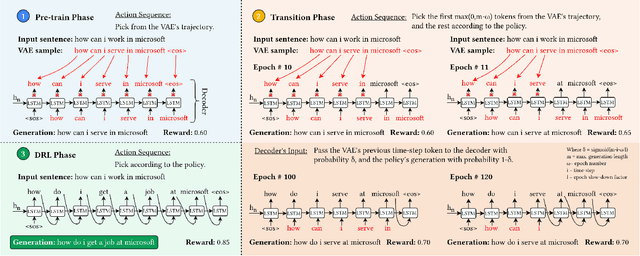

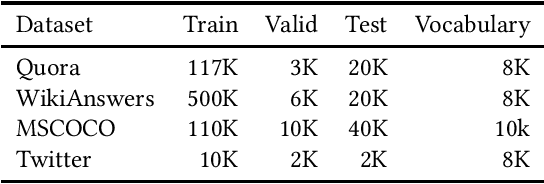

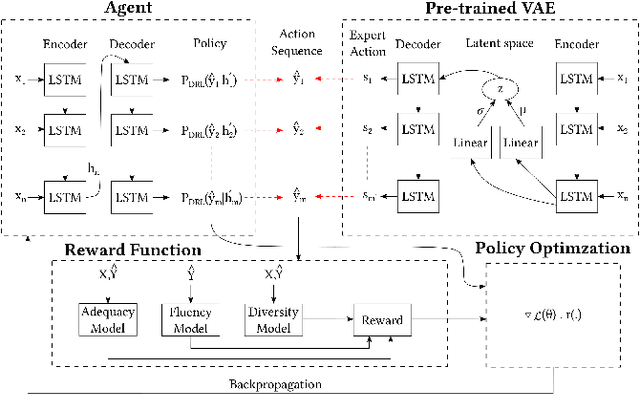

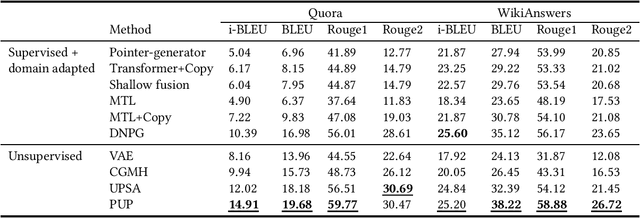

Abstract: Paraphrasing is expressing the meaning of an input sentence in different wording while maintaining fluency (i.e., grammatical and syntactical correctness). Most existing work on paraphrasing uses supervised models that are limited to specific domains (e.g., image captions). Such models can neither be straightforwardly transferred to other domains nor generalize well, and creating labeled training data for new domains is expensive and laborious. The need for paraphrasing across different domains and the scarcity of labeled training data in many such domains call for exploring unsupervised paraphrase generation methods. We propose Progressive Unsupervised Paraphrasing (PUP): a novel unsupervised paraphrase generation method based on deep reinforcement learning (DRL). PUP uses a variational autoencoder (trained using a non-parallel corpus) to generate a seed paraphrase that warm-starts the DRL model. Then, PUP progressively tunes the seed paraphrase guided by our novel reward function which combines semantic adequacy, language fluency, and expression diversity measures to quantify the quality of the generated paraphrases in each iteration without needing parallel sentences. Our extensive experimental evaluation shows that PUP outperforms unsupervised state-of-the-art paraphrasing techniques in terms of both automatic metrics and user studies on four real datasets. We also show that PUP outperforms domain-adapted supervised algorithms on several datasets. Our evaluation also shows that PUP achieves a great trade-off between semantic similarity and diversity of expression.

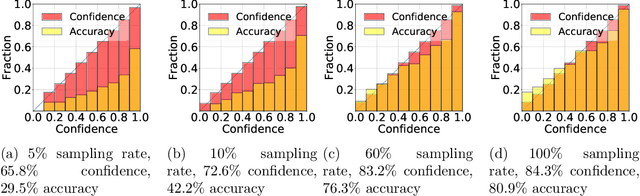

Statistical and Algorithmic Insights for Semi-supervised Learning with Self-training

Jun 19, 2020

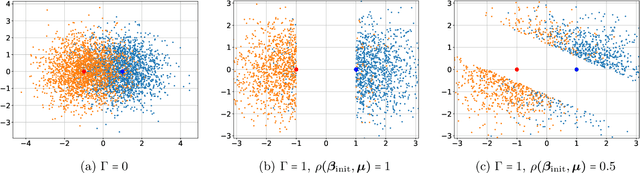

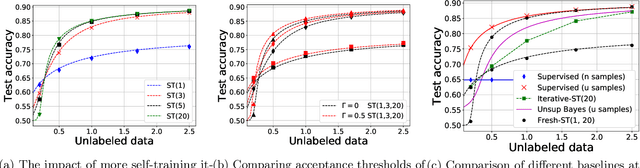

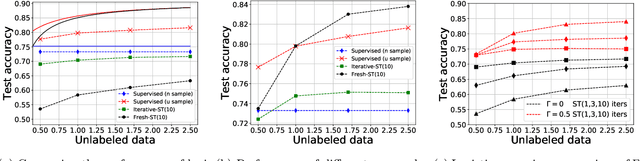

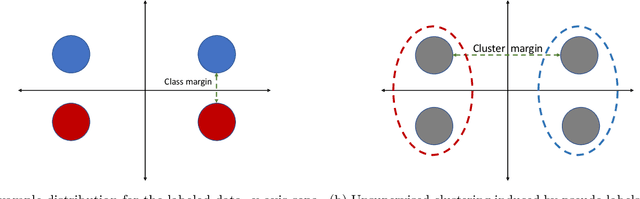

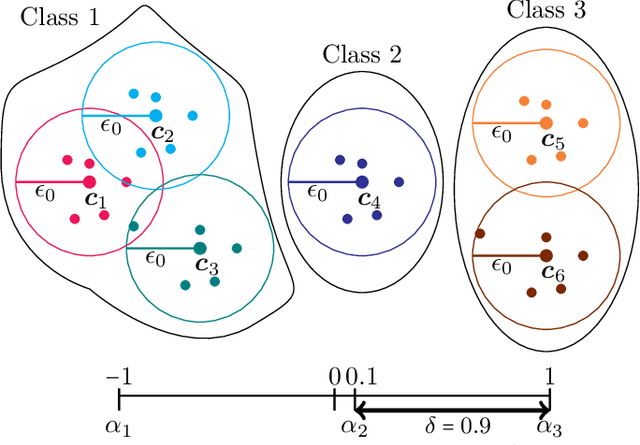

Abstract: Self-training is a classical approach in semi-supervised learning which is successfully applied to a variety of machine learning problems. The self-training algorithm generates pseudo-labels for the unlabeled examples and progressively refines these pseudo-labels, which hopefully coincide with the actual labels. This work provides theoretical insights into the self-training algorithm with a focus on linear classifiers. We first investigate Gaussian mixture models and provide a sharp non-asymptotic finite-sample characterization of the self-training iterations. Our analysis reveals the provable benefits of rejecting samples with low confidence and demonstrates that self-training iterations gracefully improve the model accuracy even if they do get stuck in sub-optimal fixed points. We then demonstrate that regularization and class margin (i.e., separation) are provably important for success, and that a lack of regularization may prevent self-training from identifying the core features in the data. Finally, we discuss statistical aspects of empirical risk minimization with self-training for general distributions. We show how a purely unsupervised notion of generalization based on self-training based clustering can be formalized based on cluster margin. We then establish a connection between self-training based semi-supervision and the more general problem of learning with heterogeneous data and weak supervision.
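
As a rough illustration of the loop this abstract describes (pseudo-label, reject low-confidence samples, refit), here is a minimal sketch on a two-component Gaussian mixture. Everything in it is an assumption made for illustration rather than the paper's procedure: the function names, the averaging estimator used as the linear classifier, the fixed confidence threshold, and the data model `x = y * mu + noise`.

```python
import math
import random


def sample_gmm(rng, mu, n):
    """Draw n points x = y * mu + standard Gaussian noise, y uniform in {-1, +1}."""
    xs, ys = [], []
    for _ in range(n):
        y = rng.choice([-1, 1])
        xs.append([y * m + rng.gauss(0.0, 1.0) for m in mu])
        ys.append(y)
    return xs, ys


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [p / norm for p in v]


def averaging_estimator(xs, labels):
    """Unit-norm linear classifier proportional to the mean of label * x."""
    d = len(xs[0])
    mean = [sum(l * x[j] for x, l in zip(xs, labels)) / len(xs) for j in range(d)]
    return normalize(mean)


def self_train(w, unlabeled, threshold, iters):
    """Refine w with pseudo-labels, rejecting points with |<w, x>| < threshold."""
    for _ in range(iters):
        confident = [x for x in unlabeled if abs(dot(w, x)) >= threshold]
        if not confident:  # nothing passes the confidence test; keep current w
            break
        pseudo = [1 if dot(w, x) >= 0 else -1 for x in confident]
        w = averaging_estimator(confident, pseudo)
    return w
```

A typical use would fit `averaging_estimator` on a small labeled set, then pass the result to `self_train` together with a larger unlabeled set; the cosine between the returned vector and `mu` gives a quick check of how well the class direction was recovered.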

Exploring Weight Importance and Hessian Bias in Model Pruning

Jun 19, 2020

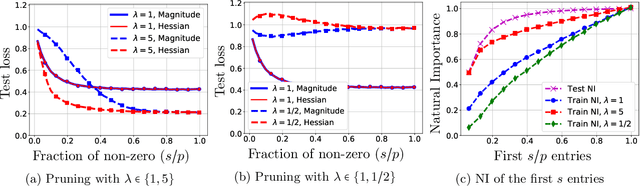

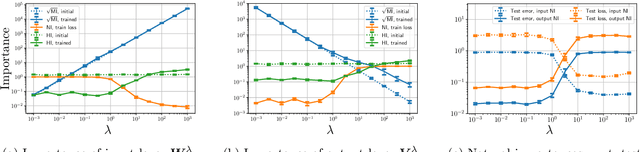

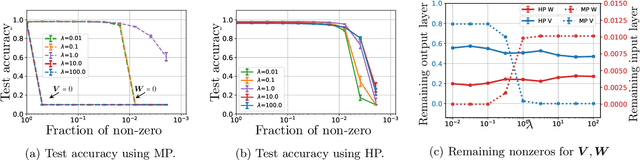

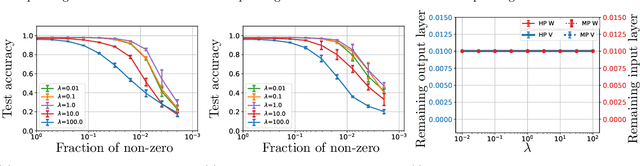

Abstract: Model pruning is an essential procedure for building compact and computationally efficient machine learning models. A key feature of a good pruning algorithm is that it accurately quantifies the relative importance of the model weights. While model pruning has a rich history, we still don't have a full grasp of the pruning mechanics even for relatively simple problems involving linear models or shallow neural nets. In this work, we provide a principled exploration of pruning by building on a natural notion of importance. For linear models, we show that this notion of importance is captured by covariance scaling which connects to the well-known Hessian-based pruning. We then derive asymptotic formulas that allow us to precisely compare the performance of different pruning methods. For neural networks, we demonstrate that the importance can be at odds with larger magnitudes and proper initialization is critical for magnitude-based pruning. Specifically, we identify settings in which weights become more important despite becoming smaller, which in turn leads to a catastrophic failure of magnitude-based pruning. Our results also elucidate that implicit regularization in the form of Hessian structure has a catalytic role in identifying the important weights, which dictate the pruning performance.
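
The contrast between magnitude and covariance-scaled importance for a linear model can be seen in a toy calculation. The sketch below assumes uncorrelated features (a diagonal covariance), a squared loss, and no refitting after pruning; under those assumptions, zeroing weight `i` raises the population risk by `w[i]**2 * variances[i]`. The scoring rule and all names are illustrative choices, not necessarily the paper's exact definitions.

```python
def magnitude_scores(w):
    """Importance of each weight measured by absolute value only."""
    return [abs(wi) for wi in w]


def covariance_scaled_scores(w, variances):
    """Importance w_i^2 * Var(x_i): the risk increase from zeroing weight i
    when features are uncorrelated (assumed here)."""
    return [wi * wi * vi for wi, vi in zip(w, variances)]


def prune(w, scores, k):
    """Keep the k highest-scoring weights and zero out the rest."""
    keep = set(sorted(range(len(w)), key=lambda i: scores[i], reverse=True)[:k])
    return [wi if i in keep else 0.0 for i, wi in enumerate(w)]


def excess_risk(w_full, w_pruned, variances):
    """Population squared-loss gap between pruned and full model (diagonal covariance)."""
    return sum(v * (a - b) ** 2 for a, b, v in zip(w_full, w_pruned, variances))


# A small weight on a high-variance feature versus larger weights on low-variance ones.
w = [0.1, 1.0, 0.5]
variances = [400.0, 1.0, 1.0]
by_magnitude = prune(w, magnitude_scores(w), k=1)
by_importance = prune(w, covariance_scaled_scores(w, variances), k=1)
```

Here magnitude pruning keeps the weight `1.0` and discards the small weight on the high-variance feature, while the covariance-scaled score keeps that small weight; comparing `excess_risk` for the two pruned vectors shows which choice loses less.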

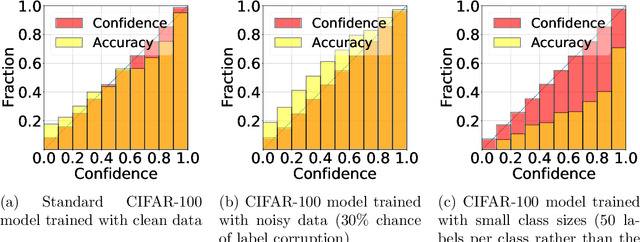

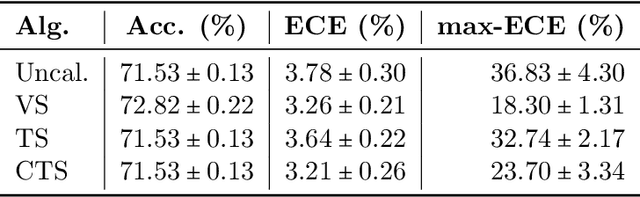

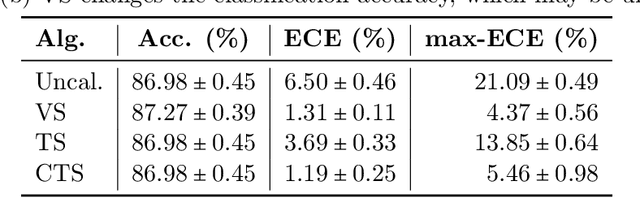

On the Role of Dataset Quality and Heterogeneity in Model Confidence

Feb 23, 2020

Abstract: Safety-critical applications require machine learning models that output accurate and calibrated probabilities. While uncalibrated deep networks are known to make over-confident predictions, it is unclear how model confidence is impacted by the variations in the data, such as label noise or class size. In this paper, we investigate the role of the dataset quality by studying the impact of dataset size and the label noise on the model confidence. We theoretically explain and experimentally demonstrate that, surprisingly, label noise in the training data leads to under-confident networks, while reduced dataset size leads to over-confident models. We then study the impact of dataset heterogeneity, where data quality varies across classes, on model confidence. We demonstrate that this leads to heterogeneous confidence/accuracy behavior in the test data and is poorly handled by the standard calibration algorithms. To overcome this, we propose an intuitive heterogeneous calibration technique and show that the proposed approach leads to improved calibration metrics (both average and worst-case errors) on the CIFAR datasets.
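
To make "over-confident" and "under-confident" concrete, the sketch below computes two widely used binned calibration metrics, the expected and maximum calibration error, plus a signed confidence-minus-accuracy gap. The abstract does not say which metrics the paper reports, so treating ECE and MCE as the "average and worst-case errors" is an assumption, as are the function name, the equal-width binning, and the default of 10 bins.

```python
def calibration_errors(confidences, correct, n_bins=10):
    """Return (ece, mce, signed_gap) for top-label confidences in [0, 1].

    ece: bin-size-weighted average of |mean confidence - accuracy| per bin.
    mce: largest per-bin |mean confidence - accuracy|.
    signed_gap: overall mean confidence minus overall accuracy
                (positive means over-confident, negative under-confident).
    """
    bins = [[] for _ in range(n_bins)]
    for c, ok in zip(confidences, correct):
        idx = min(int(c * n_bins), n_bins - 1)  # confidence 1.0 goes in the last bin
        bins[idx].append((c, ok))

    n = len(confidences)
    ece, mce = 0.0, 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(ok for _, ok in members) / len(members)
        gap = abs(avg_conf - accuracy)
        ece += len(members) / n * gap
        mce = max(mce, gap)

    signed_gap = sum(confidences) / n - sum(correct) / n
    return ece, mce, signed_gap
```

Computing these numbers separately per class is one simple way to see the heterogeneous confidence/accuracy behavior the abstract mentions, although the paper's own calibration technique is not reproduced here.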

Non-asymptotic and Accurate Learning of Nonlinear Dynamical Systems

Feb 20, 2020

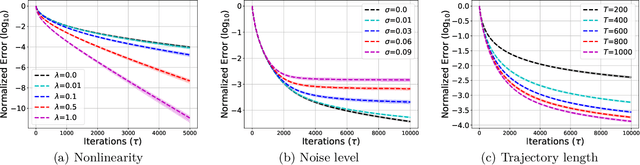

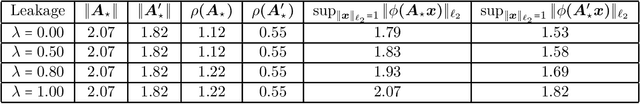

Abstract: We consider the problem of learning stabilizable systems governed by a nonlinear state equation $h_{t+1}=\phi(h_t,u_t;\theta)+w_t$. Here $\theta$ is the unknown system dynamics, $h_t$ is the state, $u_t$ is the input and $w_t$ is the additive noise vector. We study gradient based algorithms to learn the system dynamics $\theta$ from samples obtained from a single finite trajectory. If the system is run by a stabilizing input policy, we show that temporally-dependent samples can be approximated by i.i.d. samples via a truncation argument by using mixing-time arguments. We then develop new guarantees for the uniform convergence of the gradients of empirical loss. Unlike existing work, our bounds are noise sensitive which allows for learning ground-truth dynamics with high accuracy and small sample complexity. Together, our results facilitate efficient learning of the general nonlinear system under stabilizing policy. We specialize our guarantees to entry-wise nonlinear activations and verify our theory in various numerical experiments.
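
A scalar toy instance of the state equation in this abstract may help fix ideas. The sketch below assumes the specific form $h_{t+1}=\tanh(a h_t + b u_t)+w_t$ with $\theta=(a,b)$, i.i.d. Gaussian inputs, and plain gradient descent on the empirical squared loss over one trajectory. The choice of `tanh`, the scalar state, the input distribution, and all function names are illustrative assumptions, not the paper's setup.

```python
import math
import random


def simulate(a, b, noise_std, steps, rng):
    """One trajectory of h_{t+1} = tanh(a*h_t + b*u_t) + w_t with Gaussian u_t, w_t."""
    h, hs, us = 0.0, [0.0], []
    for _ in range(steps):
        u = rng.gauss(0.0, 1.0)
        h = math.tanh(a * h + b * u) + rng.gauss(0.0, noise_std)
        us.append(u)
        hs.append(h)
    return hs, us


def fit_gradient_descent(hs, us, step, iters):
    """Estimate (a, b) by gradient descent on the mean of 0.5*(h_{t+1} - tanh(a*h_t + b*u_t))^2."""
    a, b = 0.0, 0.0
    T = len(us)
    for _ in range(iters):
        grad_a = grad_b = 0.0
        for t in range(T):
            pred = math.tanh(a * hs[t] + b * us[t])
            # Derivative of the per-sample loss with respect to z = a*h_t + b*u_t.
            g = -(hs[t + 1] - pred) * (1.0 - pred * pred)
            grad_a += g * hs[t]
            grad_b += g * us[t]
        a -= step * grad_a / T
        b -= step * grad_b / T
    return a, b
```

Because `|a| < 1` and `tanh` is 1-Lipschitz, the simulated state stays bounded, which stands in for the stabilizing policy; the samples along the trajectory are still temporally dependent, as in the setting the abstract describes.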

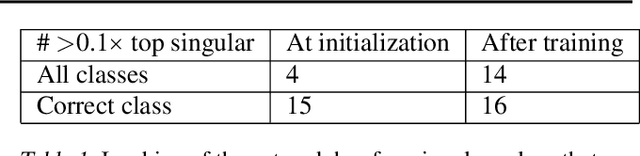

Generalization Guarantees for Neural Networks via Harnessing the Low-rank Structure of the Jacobian

Jul 04, 2019

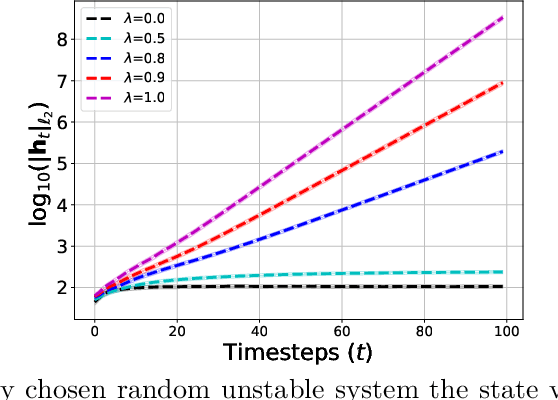

Abstract: Modern neural network architectures often generalize well despite containing many more parameters than the size of the training dataset. This paper explores the generalization capabilities of neural networks trained via gradient descent. We develop a data-dependent optimization and generalization theory which leverages the low-rank structure of the Jacobian matrix associated with the network. Our results help demystify why training and generalization are easier on clean and structured datasets and harder on noisy and unstructured datasets as well as how the network size affects the evolution of the train and test errors during training. Specifically, we use a control knob to split the Jacobian spectrum into "information" and "nuisance" spaces associated with the large and small singular values. We show that over the information space learning is fast and one can quickly train a model with zero training loss that can also generalize well. Over the nuisance space training is slower and early stopping can help with generalization at the expense of some bias. We also show that the overall generalization capability of the network is controlled by how well the label vector is aligned with the information space. A key feature of our results is that even constant width neural nets can provably generalize for sufficiently nice datasets. We conduct various numerical experiments on deep networks that corroborate our theoretical findings and demonstrate that: (i) the Jacobian of typical neural networks exhibits low-rank structure with a few large singular values and many small ones leading to a low-dimensional information space, (ii) over the information space learning is fast and most of the label vector falls on this space, and (iii) label noise falls on the nuisance space and impedes optimization/generalization.

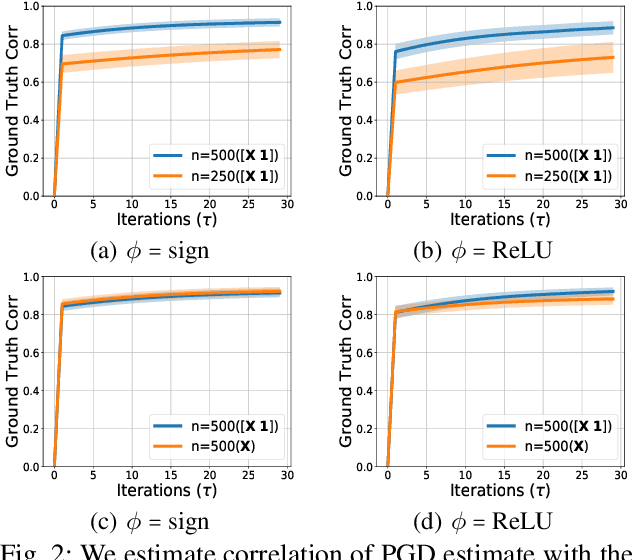

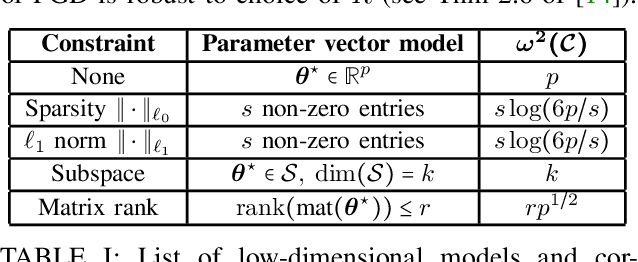

Quickly Finding the Best Linear Model in High Dimensions

Jul 03, 2019

Abstract: We study the problem of finding the best linear model that can minimize least-squares loss given a dataset. While this problem is trivial in the low dimensional regime, it becomes more interesting in high dimensions where the population minimizer is assumed to lie on a manifold such as sparse vectors. We propose a projected gradient descent (PGD) algorithm to estimate the population minimizer in the finite sample regime. We establish a linear convergence rate and data dependent estimation error bounds for PGD. Our contributions include: 1) The results are established for heavier tailed sub-exponential distributions besides sub-gaussian. 2) We directly analyze the empirical risk minimization and do not require a realizable model that connects input data and labels. 3) Our PGD algorithm is augmented to learn the bias terms which boosts the performance. The numerical experiments validate our theoretical results.
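
For the sparse-vector case mentioned in this abstract, projected gradient descent can be sketched as a gradient step on the empirical least-squares loss followed by a projection onto `s`-sparse vectors. The sketch below uses hard thresholding (keeping the `s` largest-magnitude entries) as that projection, omits the bias term, and uses a fixed step size; these choices, the Gaussian data generator, and all names are assumptions for illustration, not the paper's algorithm as specified.

```python
import random


def hard_threshold(v, s):
    """Project v onto s-sparse vectors by keeping its s largest-magnitude entries."""
    keep = sorted(range(len(v)), key=lambda i: abs(v[i]), reverse=True)[:s]
    out = [0.0] * len(v)
    for i in keep:
        out[i] = v[i]
    return out


def pgd_sparse_least_squares(X, y, s, step, iters):
    """Minimize (1/2n) * ||X w - y||^2 over s-sparse w by projected gradient descent."""
    n, d = len(X), len(X[0])
    w = [0.0] * d
    for _ in range(iters):
        residual = [sum(X[i][j] * w[j] for j in range(d)) - y[i] for i in range(n)]
        grad = [sum(X[i][j] * residual[i] for i in range(n)) / n for j in range(d)]
        w = hard_threshold([w[j] - step * grad[j] for j in range(d)], s)
    return w


def make_sparse_regression(rng, w_true, n):
    """Hypothetical test data: standard Gaussian features and noiseless labels."""
    X = [[rng.gauss(0.0, 1.0) for _ in w_true] for _ in range(n)]
    y = [sum(xj * wj for xj, wj in zip(row, w_true)) for row in X]
    return X, y
```

With well-conditioned features, a step size around `0.5` and a few dozen iterations are usually enough for the iterates to settle on the correct support in this toy setting; heavier-tailed features or noisy labels would call for more samples.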

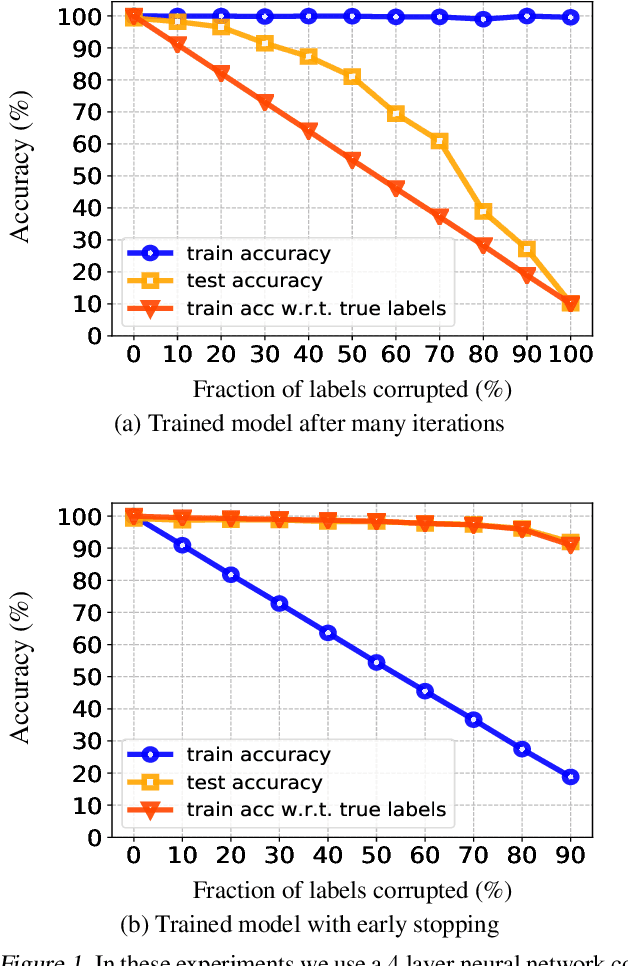

Gradient Descent with Early Stopping is Provably Robust to Label Noise for Overparameterized Neural Networks

Apr 07, 2019

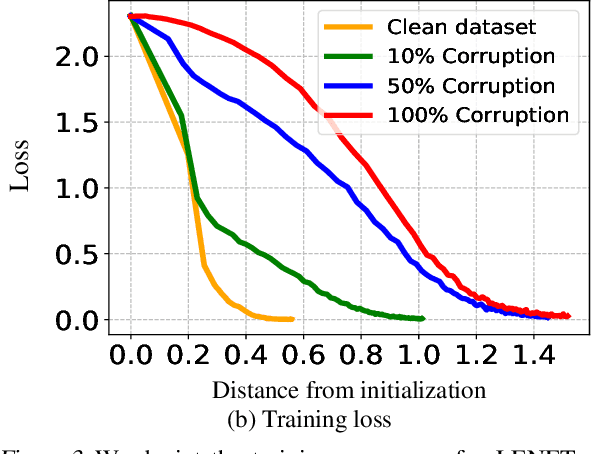

Abstract: Modern neural networks are typically trained in an over-parameterized regime where the parameters of the model far exceed the size of the training data. Due to over-parameterization these neural networks in principle have the capacity to (over)fit any set of labels including pure noise. Despite this high fitting capacity, somewhat paradoxically, neural network models trained via first-order methods continue to predict well on yet unseen test data. In this paper we take a step towards demystifying this phenomenon. In particular we show that first order methods such as gradient descent are provably robust to noise/corruption on a constant fraction of the labels despite over-parameterization under a rich dataset model. In particular: i) First, we show that in the first few iterations where the updates are still in the vicinity of the initialization these algorithms only fit to the correct labels essentially ignoring the noisy labels. ii) Secondly, we prove that to start to overfit to the noisy labels these algorithms must stray rather far from the initial model which can only occur after many more iterations. Together, these show that gradient descent with early stopping is provably robust to label noise and shed light on empirical robustness of deep networks as well as commonly adopted heuristics to prevent overfitting.

Towards moderate overparameterization: global convergence guarantees for training shallow neural networks

Feb 12, 2019

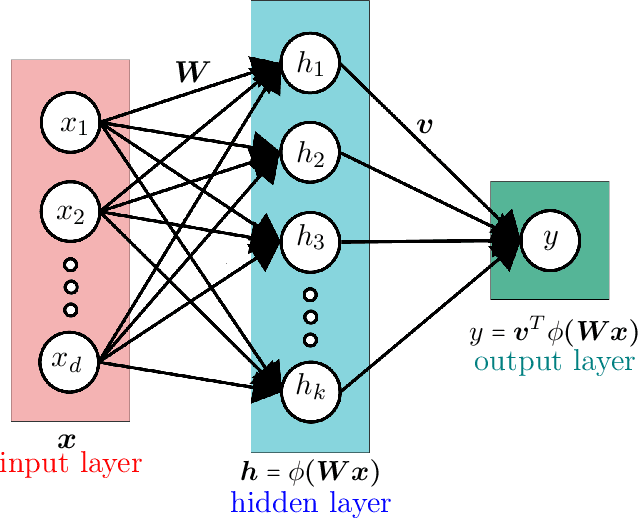

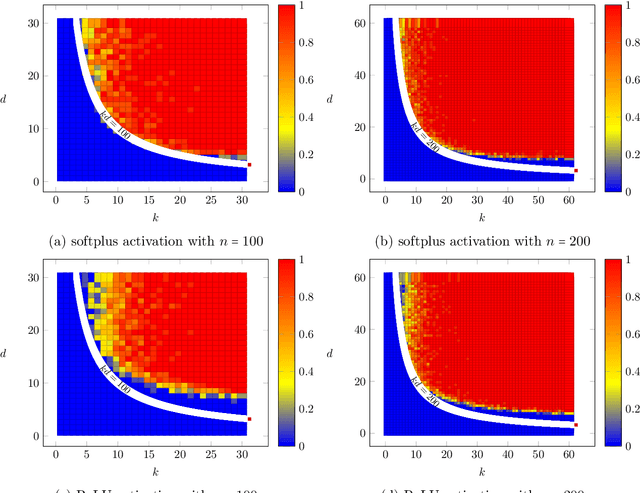

Abstract: Many modern neural network architectures are trained in an overparameterized regime where the parameters of the model exceed the size of the training dataset. Sufficiently overparameterized neural network architectures in principle have the capacity to fit any set of labels including random noise. However, given the highly nonconvex nature of the training landscape it is not clear what level and kind of overparameterization is required for first order methods to converge to a global optimum that perfectly interpolates any labels. A number of recent theoretical works have shown that for very wide neural networks where the number of hidden units is polynomially large in the size of the training data gradient descent starting from a random initialization does indeed converge to a global optimum. However, in practice much more moderate levels of overparameterization seem to be sufficient and in many cases overparameterized models seem to perfectly interpolate the training data as soon as the number of parameters exceeds the size of the training data by a constant factor. Thus there is a huge gap between the existing theoretical literature and practical experiments. In this paper we take a step towards closing this gap. Focusing on shallow neural nets and smooth activations, we show that (stochastic) gradient descent when initialized at random converges at a geometric rate to a nearby global optimum as soon as the square-root of the number of network parameters exceeds the size of the training data. Our results also benefit from a fast convergence rate and continue to hold for non-differentiable activations such as Rectified Linear Units (ReLUs).
