Saibal Mukhopadhyay

Forecasting local behavior of multi-agent system and its application to forest fire model

Oct 28, 2022

Abstract: In this paper, we study a CNN-LSTM model to forecast the state of a specific agent in a large multi-agent system. The proposed model consists of a CNN encoder to represent the system as a low-dimensional vector, an LSTM module to learn the agent dynamics in the vector space, and an MLP decoder to predict the future state of an agent. A forest fire model is considered as an example, where we need to predict when a specific tree agent will be burning. We observe that the proposed model achieves a higher AUC with less computation than a frame-based model and significantly reduces computational costs, such as activations, compared with ConvLSTM.

Learning Point Processes using Recurrent Graph Network

Aug 11, 2022

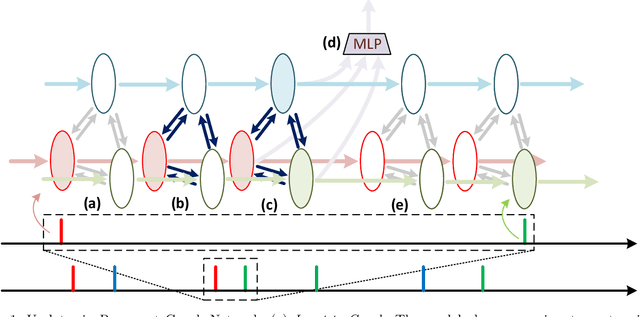

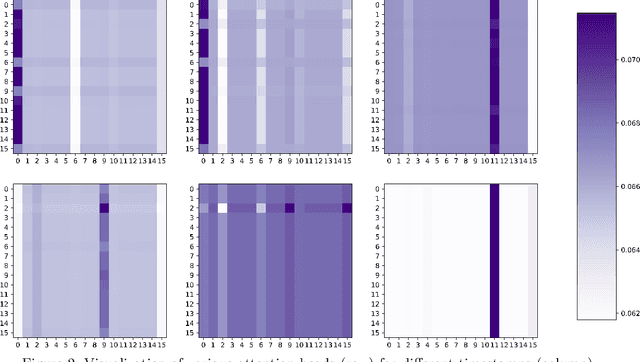

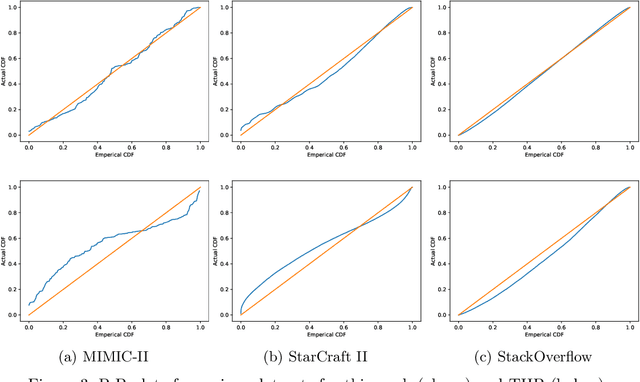

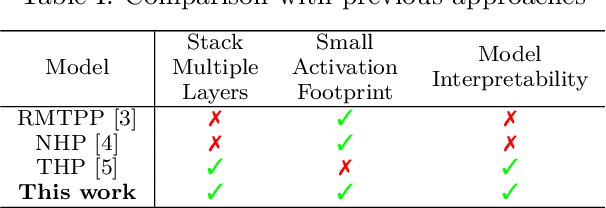

Abstract: We present a novel Recurrent Graph Network (RGN) approach for predicting discrete marked event sequences by learning the underlying complex stochastic process. Using the framework of Point Processes, we interpret a marked discrete event sequence as the superposition of different sequences, each of a unique type. The nodes of the Graph Network use LSTM to incorporate past information, whereas a Graph Attention Network (GAT Network) introduces strong inductive biases to capture the interaction between these different types of events. By changing the self-attention mechanism from attending over past events to attending over event types, we obtain a reduction in time and space complexity from $\mathcal{O}(N^2)$ (total number of events) to $\mathcal{O}(|\mathcal{Y}|^2)$ (number of event types). Experiments show that the proposed approach improves performance in log-likelihood, prediction, and goodness-of-fit tasks with lower time and space complexity compared to state-of-the-art Transformer-based architectures.

Radar Guided Dynamic Visual Attention for Resource-Efficient RGB Object Detection

Jun 03, 2022

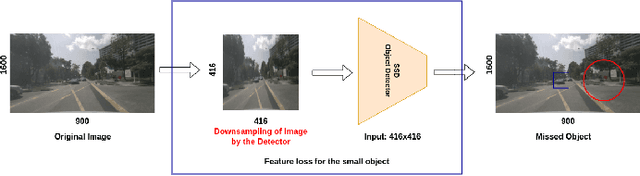

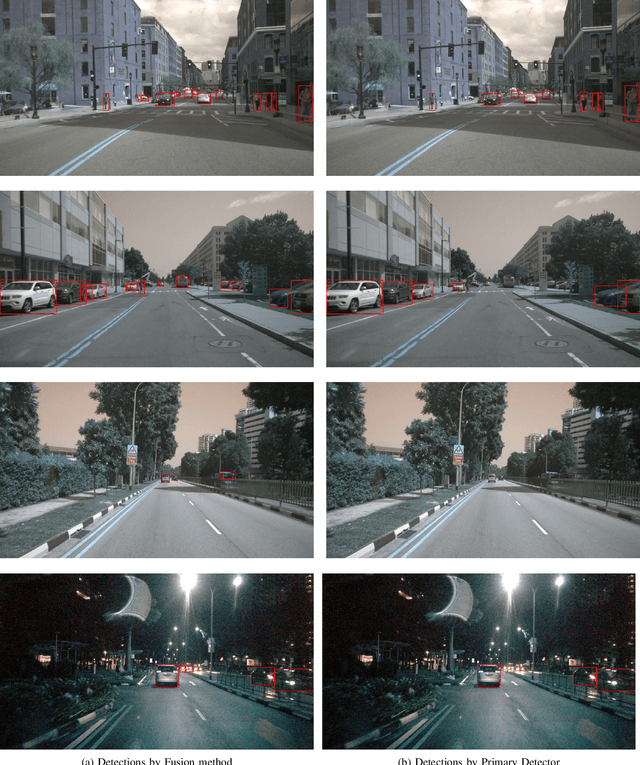

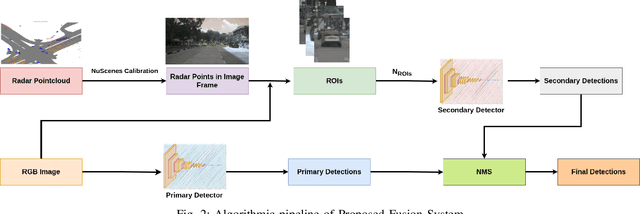

Abstract: An autonomous system's perception engine must provide an accurate understanding of the environment for it to make decisions. Deep learning-based object detection networks suffer degraded performance and robustness for small and faraway objects because an object's feature map shrinks in the higher layers of the network. In this work, we propose a novel radar-guided spatial attention for RGB images to improve the perception quality of autonomous vehicles operating in a dynamic environment. In particular, our method improves the perception of small and long-range objects, which are often missed by object detectors in RGB mode. The proposed method consists of two RGB object detectors, namely the primary detector and a lightweight secondary detector. The primary detector takes a full RGB image and generates primary detections. Next, the radar proposal framework creates regions of interest (ROIs) for object proposals by projecting the radar point cloud onto the 2D RGB image. These ROIs are cropped and fed to the secondary detector to generate secondary detections, which are then fused with the primary detections via non-maximum suppression. This method helps recover small objects by preserving their spatial features through an increase in their receptive field. We evaluate our fusion method on the challenging nuScenes dataset and show that, with SSD-lite as the primary and secondary detector, it improves the baseline primary YOLOv3 detector's recall by 14% while requiring three times fewer computational resources.
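The fusion step described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the box format, the helper names (`iou`, `to_image_coords`, `fuse_with_nms`), the 0.5 IoU threshold, and the sample detections are all assumptions chosen for illustration, and the sketch assumes ROI crops are not rescaled before the secondary detector runs.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), hypothetical format
Detection = Tuple[Box, float]            # (box, confidence score)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def to_image_coords(det: Detection, roi_origin: Tuple[float, float]) -> Detection:
    """Shift a detection found inside a cropped ROI back to full-image coordinates."""
    (x1, y1, x2, y2), score = det
    ox, oy = roi_origin
    return ((x1 + ox, y1 + oy, x2 + ox, y2 + oy), score)


def fuse_with_nms(primary: List[Detection], secondary: List[Detection],
                  iou_threshold: float = 0.5) -> List[Detection]:
    """Pool both detection sets and apply greedy non-maximum suppression."""
    pooled = sorted(primary + secondary, key=lambda d: d[1], reverse=True)
    kept: List[Detection] = []
    for box, score in pooled:
        # Keep a box only if it does not overlap a higher-scoring kept box.
        if all(iou(box, k[0]) <= iou_threshold for k in kept):
            kept.append((box, score))
    return kept


# Made-up example: one primary detection and two radar-guided ROI detections.
primary = [((100.0, 100.0, 200.0, 200.0), 0.90)]
secondary = [
    to_image_coords(((8.0, 8.0, 108.0, 108.0), 0.60), (95.0, 95.0)),  # duplicate
    to_image_coords(((2.0, 2.0, 22.0, 22.0), 0.70), (400.0, 50.0)),   # small object
]
fused = fuse_with_nms(primary, secondary)
```

In this example the secondary detection that overlaps the primary box is suppressed, while the small object found only in the ROI crop survives into the fused output.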

RADNet: A Deep Neural Network Model for Robust Perception in Moving Autonomous Systems

Apr 30, 2022

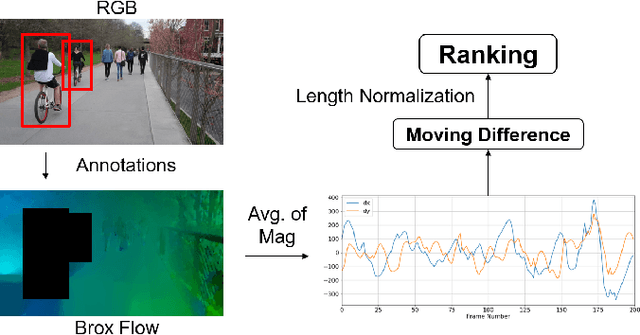

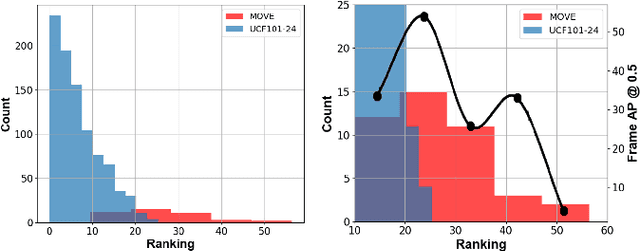

Abstract: Interactive autonomous applications require robustness of the perception engine to artifacts in unconstrained videos. In this paper, we examine the effect of camera motion on the task of action detection. We develop a novel ranking method to rank videos based on the degree of global camera motion. For videos ranked high in camera motion, we show that the accuracy of action detection decreases. We propose an action detection pipeline that is robust to the camera motion effect and verify it empirically. Specifically, we align actor features across frames and couple global scene features with local actor-specific features. We perform feature alignment using a novel formulation of the Spatio-temporal Sampling Network (STSN) with multi-scale offset prediction and refinement using a pyramid structure. We also propose a novel input-dependent weighted averaging strategy for fusing local and global features. We show the applicability of our network on our dataset of moving camera videos with high camera motion (MOVE dataset) with a 4.1% increase in frame mAP and a 17% increase in video mAP.

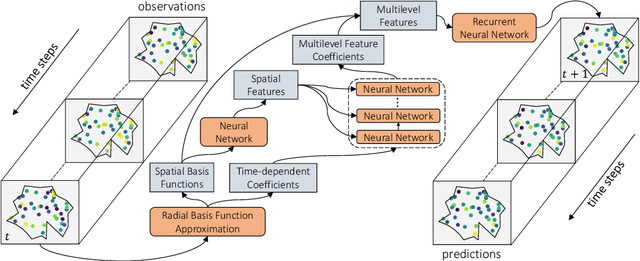

Unraveled Multilevel Transformation Networks for Predicting Sparsely-Observed Spatiotemporal Dynamics

Mar 16, 2022

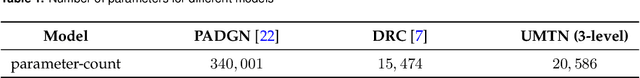

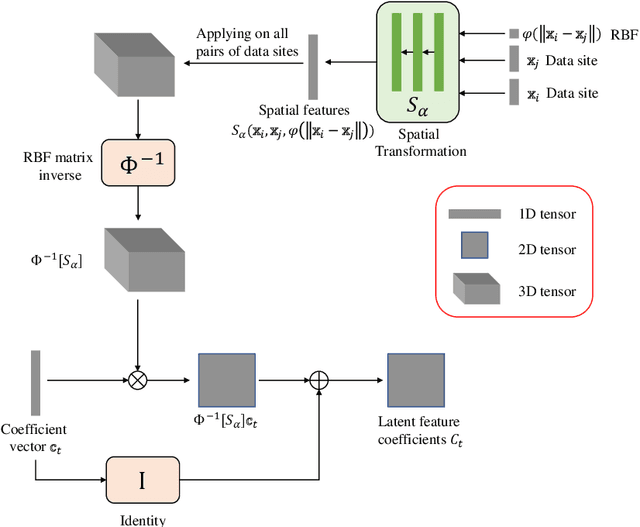

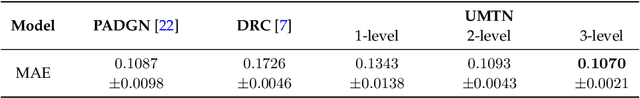

Abstract: In this paper, we address the problem of predicting complex, nonlinear spatiotemporal dynamics when available data is recorded at irregularly spaced, sparse spatial locations. Most existing deep learning models for spatiotemporal dynamics are either designed for data on a regular grid or struggle to uncover the spatial relations from sparse and irregularly spaced data sites. We propose a deep learning model that learns to predict unknown spatiotemporal dynamics using data from sparsely distributed data sites. We base our approach on the Radial Basis Function (RBF) collocation method, which is often used for meshfree solution of partial differential equations (PDEs). The RBF framework allows us to unravel the observed spatiotemporal function and learn the spatial interactions among data sites in the RBF space. The learned spatial features are then used to compose multilevel transformations of the raw observations and predict their evolution in future time steps. We demonstrate the advantage of our approach using both synthetic and real-world climate data.
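As background for the RBF collocation idea mentioned in this abstract, the following is a minimal sketch of plain Gaussian RBF interpolation over scattered sites for a single time snapshot. It is not the paper's learned model: the Gaussian kernel, the shape parameter `eps`, the helper names, and the sample sites and values are assumptions made for illustration.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def gaussian_rbf(p: Point, q: Point, eps: float) -> float:
    """Gaussian radial basis function of the distance between p and q."""
    r2 = (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2
    return math.exp(-(eps ** 2) * r2)


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_rbf(sites: List[Point], values: List[float], eps: float) -> List[float]:
    """Find weights w so that sum_j w_j * phi(x_i, x_j) equals values[i] at every site."""
    phi = [[gaussian_rbf(p, q, eps) for q in sites] for p in sites]
    return solve(phi, values)


def evaluate_rbf(x: Point, sites: List[Point], weights: List[float], eps: float) -> float:
    """Evaluate the fitted RBF expansion at an arbitrary location x."""
    return sum(w * gaussian_rbf(x, s, eps) for w, s in zip(weights, sites))


# Irregularly spaced sites with made-up observations of a smooth field.
sites = [(0.0, 0.0), (1.0, 0.2), (0.3, 0.9), (0.8, 0.8), (0.5, 0.4)]
values = [math.sin(x) + y for x, y in sites]
weights = fit_rbf(sites, values, eps=2.0)
reconstructed = [evaluate_rbf(s, sites, weights, eps=2.0) for s in sites]
```

The fitted expansion reproduces the observations at the data sites and can be evaluated anywhere in between, which is what makes the representation meshfree.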

$\mu$DARTS: Model Uncertainty-Aware Differentiable Architecture Search

Jul 24, 2021

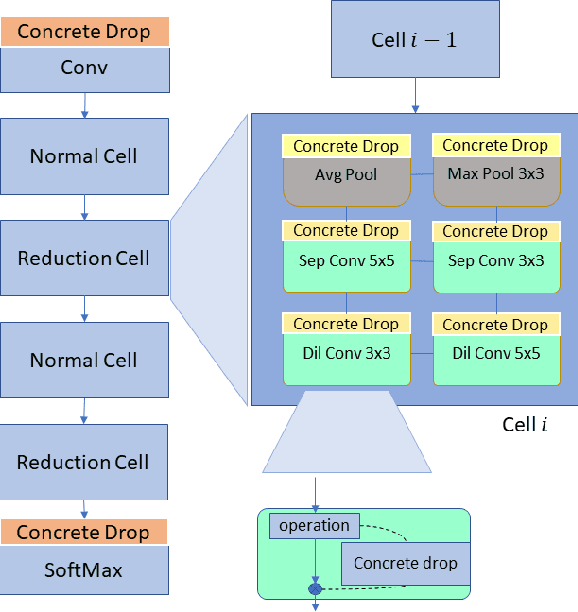

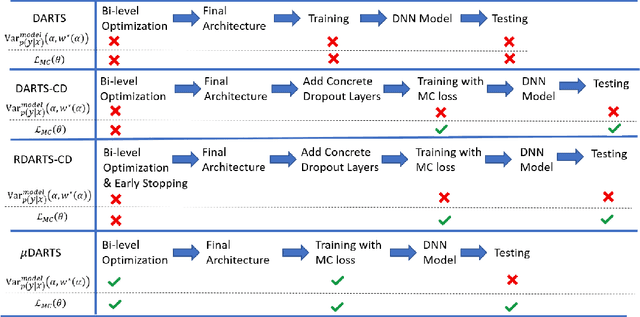

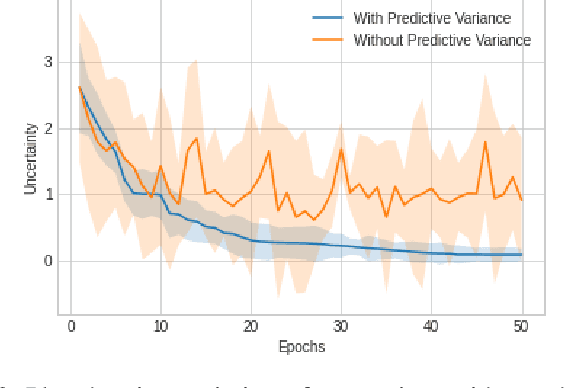

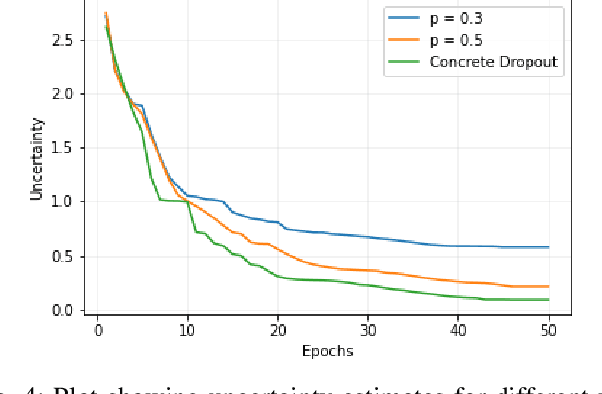

Abstract: We present a Model Uncertainty-aware Differentiable ARchiTecture Search ($\mu$DARTS) that optimizes neural networks to simultaneously achieve high accuracy and low uncertainty. We introduce concrete dropout within DARTS cells and include a Monte-Carlo regularizer within the training loss to optimize the concrete dropout probabilities. A predictive variance term is introduced in the validation loss to enable searching for architectures with minimal model uncertainty. The experiments on CIFAR10, CIFAR100, SVHN, and ImageNet verify the effectiveness of $\mu$DARTS in improving accuracy and reducing uncertainty compared to existing DARTS methods. Moreover, the final architecture obtained from $\mu$DARTS shows higher robustness to noise in the input image and model parameters compared to the architecture obtained from existing DARTS methods.
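To make the idea of a predictive variance term concrete, here is a minimal sketch of estimating predictive variance from several stochastic (dropout-enabled) forward passes and adding it to a loss. The exact loss used in the paper is not given in this abstract, so the additive form, the `weight` parameter, the function names, and the sample probabilities are assumptions.

```python
import math
from typing import List, Tuple


def predictive_mean_and_variance(samples: List[List[float]]) -> Tuple[List[float], float]:
    """samples[t][c] is the class-c probability from the t-th stochastic forward pass.

    Returns the per-class mean and the variance across passes summed over classes.
    """
    t = len(samples)
    n_classes = len(samples[0])
    mean = [sum(s[c] for s in samples) / t for c in range(n_classes)]
    variance = sum(
        sum((s[c] - mean[c]) ** 2 for s in samples) / t for c in range(n_classes)
    )
    return mean, variance


def uncertainty_aware_loss(samples: List[List[float]], label: int,
                           weight: float = 1.0) -> float:
    """Negative log-likelihood of the mean prediction plus a weighted variance penalty.

    The additive combination and `weight` are illustrative assumptions.
    """
    mean, variance = predictive_mean_and_variance(samples)
    nll = -math.log(max(mean[label], 1e-12))
    return nll + weight * variance


# Made-up outputs from three dropout passes for a two-class problem.
consistent = [[0.9, 0.1], [0.9, 0.1], [0.9, 0.1]]
disagreeing = [[0.9, 0.1], [0.5, 0.5], [0.7, 0.3]]

_, var_consistent = predictive_mean_and_variance(consistent)
_, var_disagreeing = predictive_mean_and_variance(disagreeing)
loss_consistent = uncertainty_aware_loss(consistent, label=0)
loss_disagreeing = uncertainty_aware_loss(disagreeing, label=0)
```

Passes that agree give a variance close to zero, while passes that disagree are penalized, so minimizing such a loss favors models whose predictions are stable under dropout.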

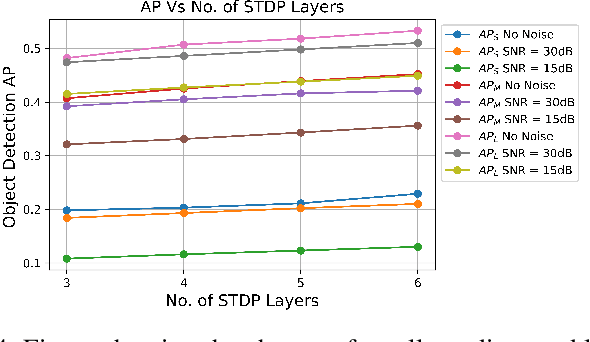

Characterization of Generalizability of Spike Time Dependent Plasticity trained Spiking Neural Networks

May 31, 2021

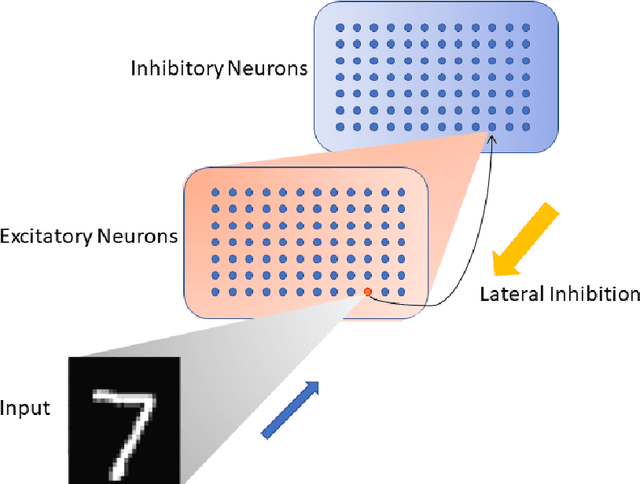

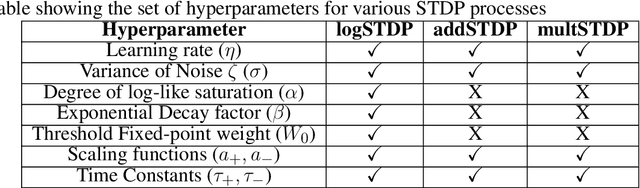

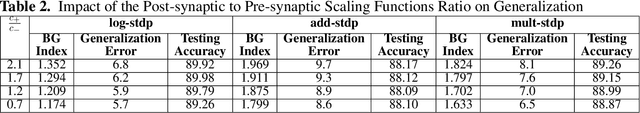

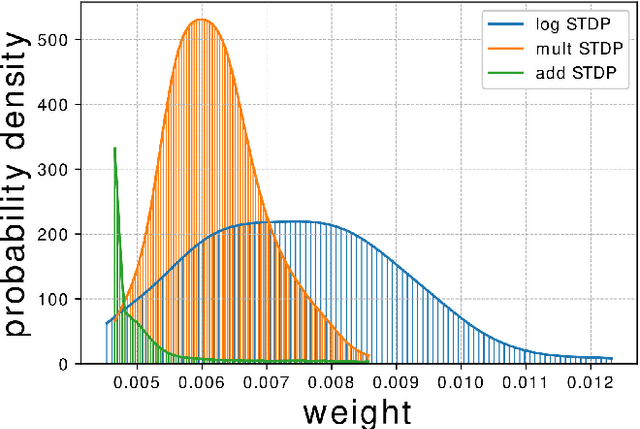

Abstract: A Spiking Neural Network (SNN) trained with Spike Time Dependent Plasticity (STDP) is a neuro-inspired unsupervised learning method for various machine learning applications. This paper studies the generalizability properties of the STDP learning processes using the Hausdorff dimension of the trajectories of the learning algorithm. The paper analyzes the effects of STDP learning models and associated hyper-parameters on the generalizability properties of an SNN and characterizes the generalizability vs learnability trade-off in an SNN. The analysis is used to develop a Bayesian optimization approach to optimize the hyper-parameters for an STDP model to improve the generalizability properties of an SNN.

A Quantum Hopfield Associative Memory Implemented on an Actual Quantum Processor

May 25, 2021

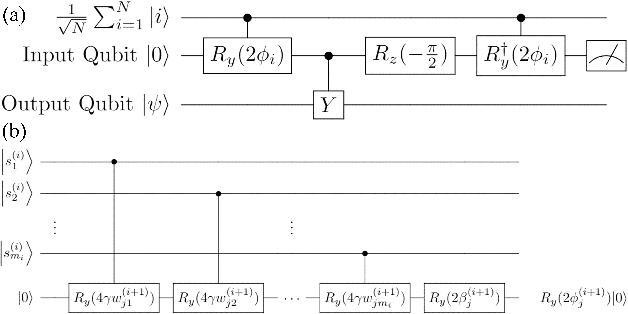

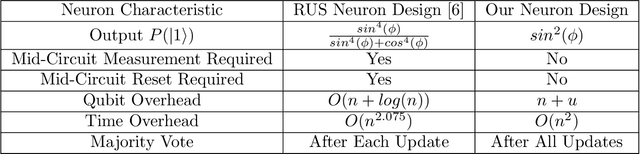

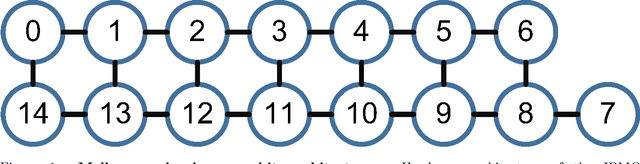

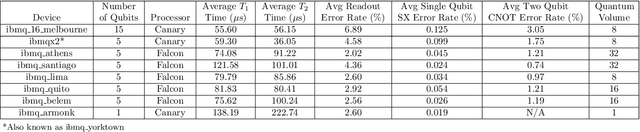

Abstract: In this work, we present a Quantum Hopfield Associative Memory (QHAM) and demonstrate its capabilities in simulation and hardware using IBM Quantum Experience. The QHAM is based on a quantum neuron design which can be utilized for many different machine learning applications and can be implemented on real quantum hardware without requiring mid-circuit measurement or reset operations. We analyze the accuracy of the neuron and the full QHAM considering hardware errors via simulation with hardware noise models as well as with implementation on the 15-qubit ibmq_16_melbourne device. The quantum neuron and the QHAM are shown to be resilient to noise and require low qubit and time overhead. We benchmark the QHAM by testing its effective memory capacity against qubit- and circuit-level errors and demonstrate its capabilities in the NISQ era of quantum hardware. This demonstration of the first functional QHAM to be implemented in NISQ-era quantum hardware is a significant step in machine learning at the leading edge of quantum computing.

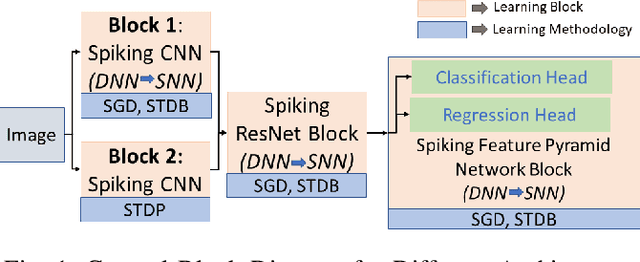

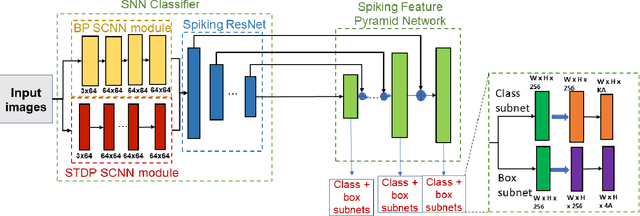

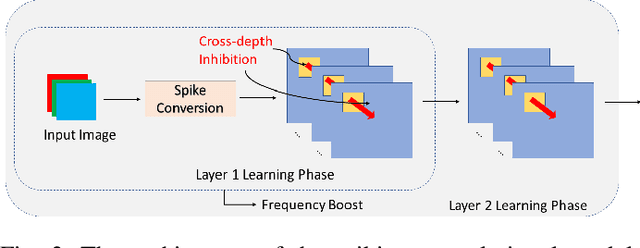

A Fully Spiking Hybrid Neural Network for Energy-Efficient Object Detection

Apr 21, 2021

Abstract: This paper proposes a Fully Spiking Hybrid Neural Network (FSHNN) for energy-efficient and robust object detection on resource-constrained platforms. The network architecture is based on a convolutional SNN using leaky-integrate-fire neuron models. The model combines unsupervised Spike Time-Dependent Plasticity (STDP) learning with back-propagation (STBP) learning methods and also uses Monte Carlo Dropout to estimate the uncertainty error. FSHNN provides better accuracy than DNN-based object detectors while being 150X more energy-efficient. It also outperforms these object detectors, with a lower uncertainty error, when subjected to noisy input data and less labeled training data.

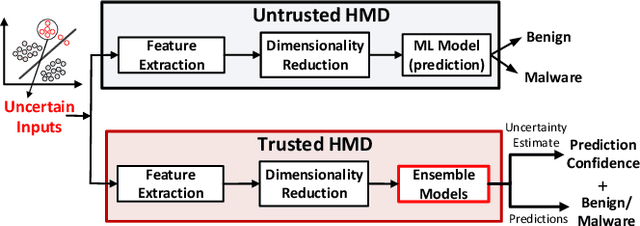

Towards Improving the Trustworthiness of Hardware based Malware Detector using Online Uncertainty Estimation

Mar 21, 2021

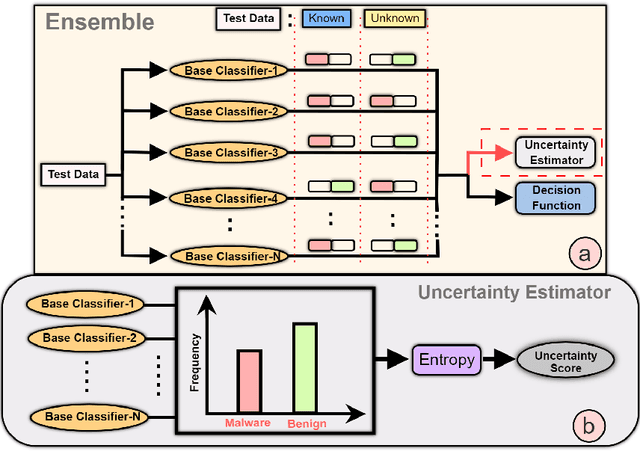

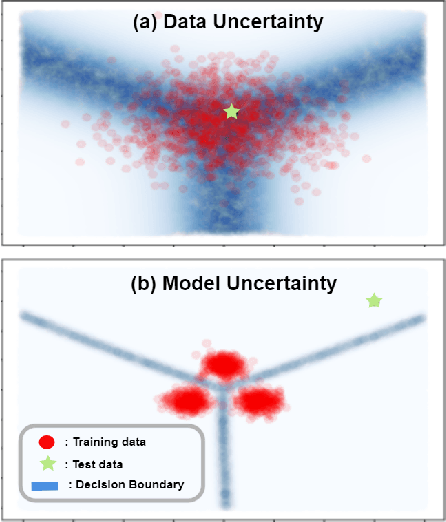

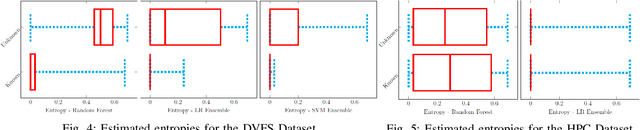

Abstract: Hardware-based Malware Detectors (HMDs) using Machine Learning (ML) models have shown promise in detecting malicious workloads. However, the conventional black-box ML approach used in these HMDs fails to address uncertain predictions, including those made on zero-day malware. The ML models used in HMDs are agnostic to the uncertainty that determines whether the model "knows what it knows," severely undermining its trustworthiness. We propose an ensemble-based approach that quantifies uncertainty in predictions made by the ML models of an HMD when it encounters a workload different from the ones it was trained on. We test our approach on two different HMDs that have been proposed in the literature. We show that the proposed uncertainty estimator can detect >90% of unknown workloads for the Power-management based HMD, and conclude that the overlapping benign and malware classes undermine the trustworthiness of the Performance Counter-based HMD.
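One common way to turn an ensemble into an uncertainty estimate is sketched below. The abstract does not specify the uncertainty measure, so the use of entropy of the averaged prediction, the `max_uncertainty` threshold of 0.5, the function names, and the sample member outputs are all assumptions for illustration.

```python
import math
from typing import List


def binary_entropy(p: float) -> float:
    """Entropy in bits of a Bernoulli distribution with parameter p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def ensemble_uncertainty(member_probs: List[float]) -> float:
    """Entropy of the ensemble-averaged malware probability.

    0 means the ensemble is certain; 1 means it is maximally uncertain.
    """
    mean_p = sum(member_probs) / len(member_probs)
    return binary_entropy(mean_p)


def classify(member_probs: List[float], max_uncertainty: float = 0.5) -> str:
    """Label a workload, or flag it as unknown when the ensemble is too uncertain."""
    if ensemble_uncertainty(member_probs) > max_uncertainty:
        return "unknown"
    mean_p = sum(member_probs) / len(member_probs)
    return "malware" if mean_p >= 0.5 else "benign"


# Made-up malware probabilities from three ensemble members per workload.
known_malware = classify([0.97, 0.95, 0.99])
known_benign = classify([0.02, 0.05, 0.01])
unseen_workload = classify([0.9, 0.1, 0.5])
```

When the members agree, the averaged probability sits near 0 or 1 and the workload is labeled normally; when they disagree, as on a workload unlike the training data, the entropy rises and the prediction is flagged instead of trusted.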
