Ryuji Hirayama

AcoustoBots: A swarm of robots for acoustophoretic multimodal interactions

May 12, 2025

Abstract: Acoustophoresis has enabled novel interaction capabilities, such as levitation, volumetric displays, mid-air haptic feedback, and directional sound generation, to open new forms of multimodal interactions. However, its traditional implementation as a singular static unit limits its dynamic range and application versatility. This paper introduces AcoustoBots - a novel convergence of acoustophoresis with a movable and reconfigurable phased array of transducers for enhanced application versatility. We mount a phased array of transducers on a swarm of robots to harness the benefits of multiple mobile acoustophoretic units. This offers a more flexible and interactive platform that enables a swarm of acoustophoretic multimodal interactions. Our novel AcoustoBots design includes a hinge actuation system that controls the orientation of the mounted phased array of transducers to achieve high flexibility in a swarm of acoustophoretic multimodal interactions. In addition, we designed a BeadDispenserBot that can deliver particles to trapping locations, which automates the acoustic levitation interaction. These attributes allow AcoustoBots to independently work for a common cause and interchange between modalities, allowing for novel augmentations (e.g., a swarm of haptics, audio, and levitation) and bilateral interactions with users in an expanded interaction area. We detail our design considerations, challenges, and methodological approach to extend acoustophoretic central control in distributed settings. This work demonstrates a scalable acoustic control framework with two mobile robots, laying the groundwork for future deployment in larger robotic swarms. Finally, we characterize the performance of our AcoustoBots and explore the potential interactive scenarios they can enable.

Computational ghost imaging using deep learning

Oct 19, 2017

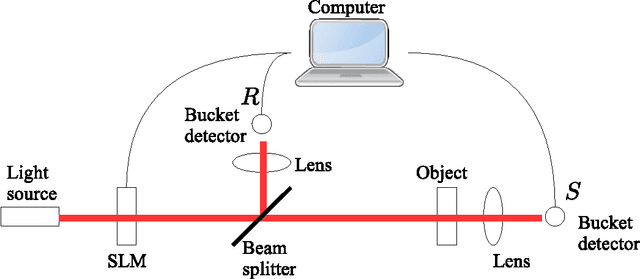

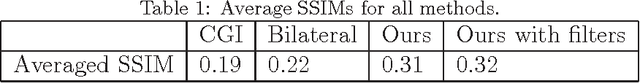

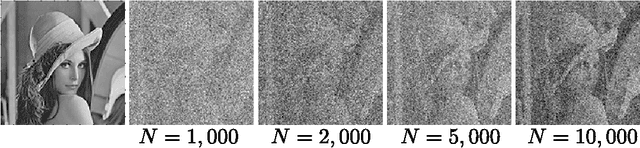

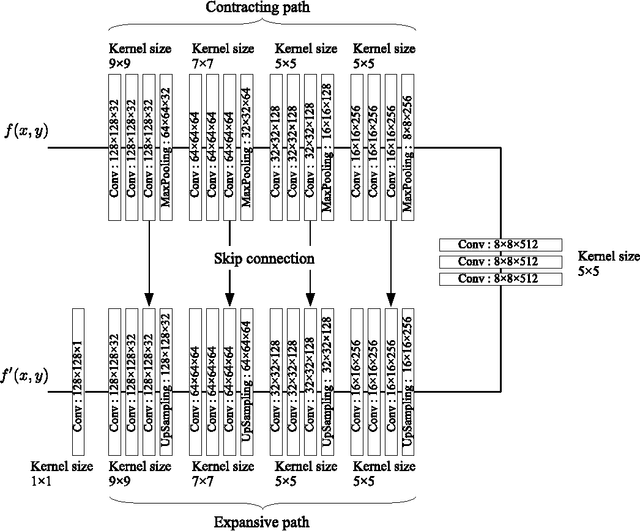

Abstract: Computational ghost imaging (CGI) is a single-pixel imaging technique that exploits the correlation between known random patterns and the measured intensity of light transmitted (or reflected) by an object. Although CGI can obtain two- or three-dimensional images with a single or a few bucket detectors, the quality of the reconstructed images is reduced by noise due to the reconstruction of images from random patterns. In this study, we improve the quality of CGI images using deep learning. A deep neural network is used to automatically learn the features of noise-contaminated CGI images. After training, the network is able to predict low-noise images from new noise-contaminated CGI images.
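The correlation step described in the abstract can be illustrated with a minimal sketch. This is not the authors' code: the function names `cgi_reconstruct` and `simulate_bucket`, the 16x16 synthetic object, and the number of patterns are all assumptions chosen for illustration, and the deep-learning denoising stage is not shown. The sketch only demonstrates how a noisy image emerges from correlating bucket-detector readings with the known random patterns.

```python
import numpy as np


def simulate_bucket(obj, patterns):
    """Total transmitted intensity per pattern (one bucket reading each)."""
    return (patterns * obj).sum(axis=(1, 2))


def cgi_reconstruct(patterns, bucket):
    """Correlate bucket fluctuations with the known illumination patterns.

    patterns: array of shape (M, H, W), the known random patterns
    bucket:   array of shape (M,), one measured intensity per pattern
    """
    patterns = np.asarray(patterns, dtype=float)
    bucket = np.asarray(bucket, dtype=float)
    fluctuation = bucket - bucket.mean()
    # Weighted average of the patterns, weighted by the bucket fluctuation.
    return np.tensordot(fluctuation, patterns, axes=(0, 0)) / len(bucket)


# Hypothetical test object: a bright rectangle on a dark background.
rng = np.random.default_rng(0)
obj = np.zeros((16, 16))
obj[4:12, 6:10] = 1.0

patterns = rng.random((4000, 16, 16))   # known random patterns
bucket = simulate_bucket(obj, patterns)  # simulated single-pixel readings
recon = cgi_reconstruct(patterns, bucket)

# The reconstruction is noisy but should correlate with the true object.
corr = np.corrcoef(recon.ravel(), obj.ravel())[0, 1]
print(f"correlation with ground truth: {corr:.3f}")
```

Using fewer patterns in this sketch makes the reconstruction visibly noisier, which is the kind of degradation the abstract's neural network is trained to remove.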

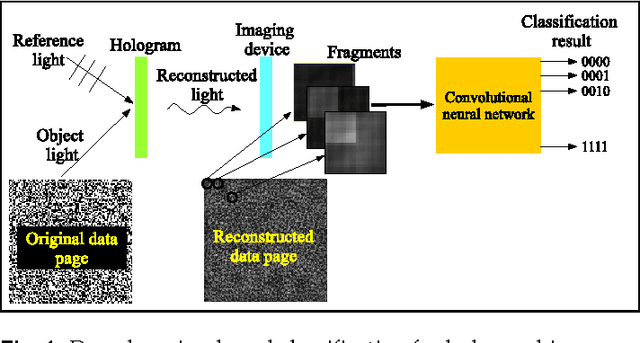

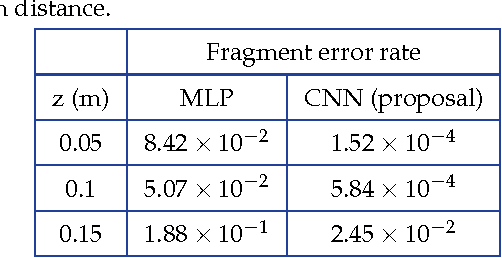

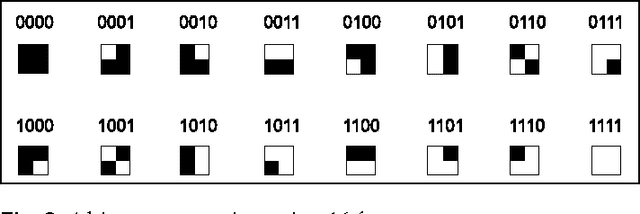

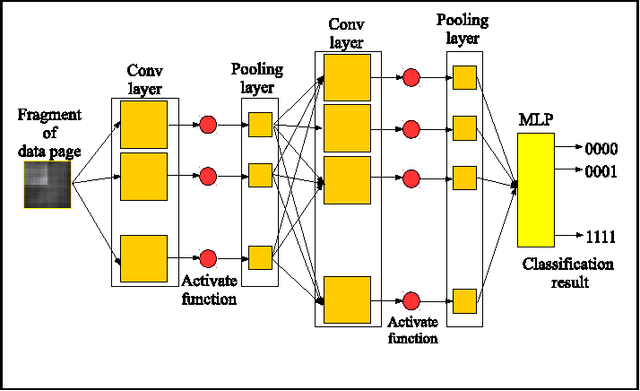

Deep-learning-based data page classification for holographic memory

Jul 02, 2017

Abstract: We propose a deep-learning-based classification of data pages used in holographic memory. We numerically investigated the classification performance of a conventional multi-layer perceptron (MLP) and a deep neural network, under the condition that reconstructed page data are contaminated by some noise and are randomly laterally shifted. The MLP was found to have a classification accuracy of 91.58%, whereas the deep neural network was able to classify data pages at an accuracy of 99.98%. Measured by error rate, the deep neural network is more than two orders of magnitude better than the MLP.
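The "two orders of magnitude" comparison is easiest to check in terms of error rates rather than accuracies. The short calculation below uses only the two accuracies quoted in the abstract; the variable names are illustrative.

```python
import math

mlp_accuracy = 91.58  # percent, as quoted in the abstract
dnn_accuracy = 99.98  # percent, as quoted in the abstract

mlp_error = 100.0 - mlp_accuracy  # percent of pages misclassified by the MLP
dnn_error = 100.0 - dnn_accuracy  # percent misclassified by the deep network

ratio = mlp_error / dnn_error     # how many times fewer errors the deep network makes
orders = math.log10(ratio)        # the same ratio expressed in orders of magnitude

print(f"error-rate ratio: {ratio:.0f}x ({orders:.2f} orders of magnitude)")
```

The ratio comes out at about 421, that is, between two and three orders of magnitude.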

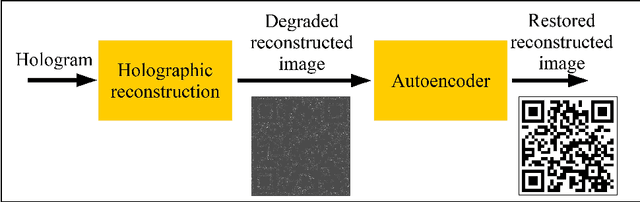

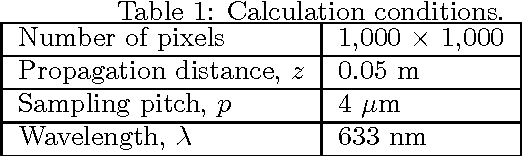

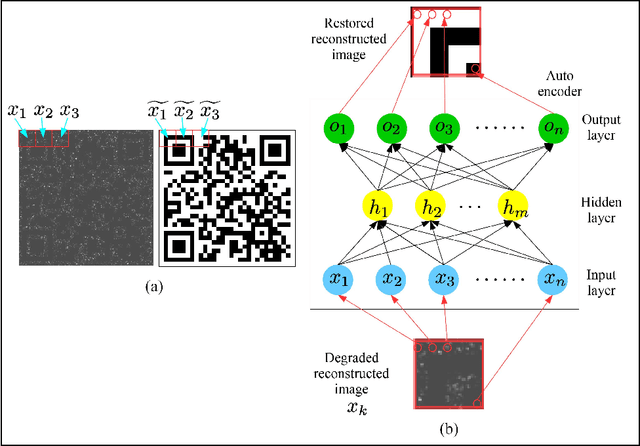

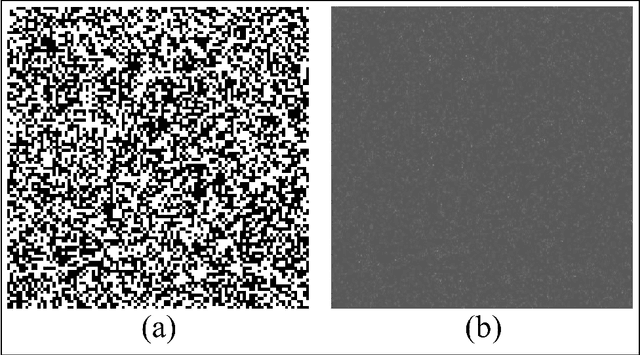

Autoencoder-based holographic image restoration

Dec 12, 2016

Abstract: We propose a holographic image restoration method using an autoencoder, which is an artificial neural network. Because holographic reconstructed images are often contaminated by direct light, conjugate light, and speckle noise, the discrimination of reconstructed images may be difficult. In this paper, we demonstrate the restoration of reconstructed images from holograms that record page data in holographic memory and QR codes by using the proposed method.
