Runar Helin

A Methodology for Transparent Logic-Based Classification Using a Multi-Task Convolutional Tsetlin Machine

Oct 02, 2025

Abstract: The Tsetlin Machine (TM) is a novel machine learning paradigm that employs finite-state automata for learning and uses propositional logic to represent patterns. Because of this simple approach, TMs are inherently more interpretable than learning algorithms based on neural networks. The Convolutional TM has shown comparable performance on various datasets such as MNIST, K-MNIST, F-MNIST and CIFAR-2. In this paper, we explore the applicability of the TM architecture to large-scale multi-channel (RGB) image classification. We propose a methodology to generate both local interpretations and global class representations. The local interpretations can be used to explain the model's predictions, while the global class representations aggregate important patterns for each class. These interpretations summarize the knowledge captured by the convolutional clauses, which can be visualized as images. We evaluate our methods on the MNIST and CelebA datasets, using models that achieve 98.5% accuracy on MNIST and an 86.56% F1-score on CelebA (compared to 88.07% for ResNet50). We show that the TM performs competitively with this deep learning model while maintaining its interpretability, even in large-scale, complex training environments. This contributes to a better understanding of TM clauses and provides insights into how these models can be applied to more complex and diverse datasets.

Multiblock-Networks: A Neural Network Analog to Component Based Methods for Multi-Source Data

Sep 21, 2021

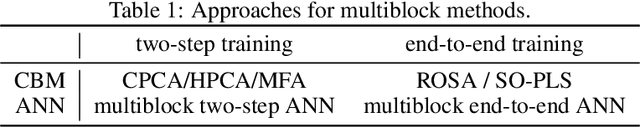

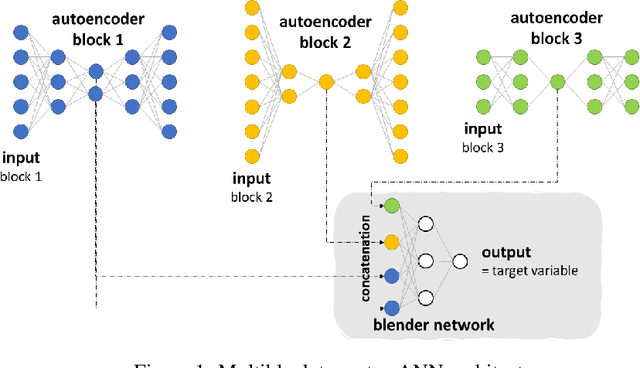

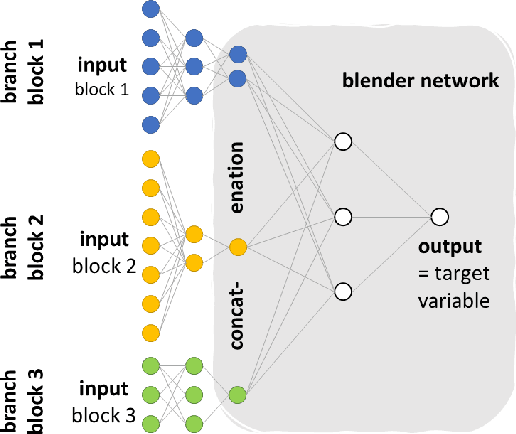

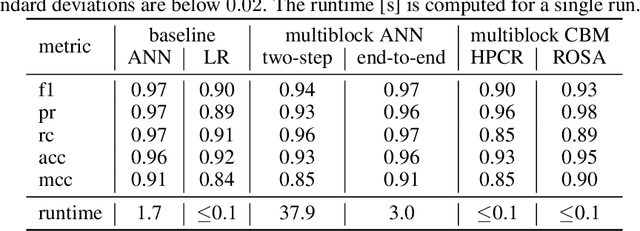

Abstract: Training predictive models on datasets from multiple sources is a common yet challenging setup in applied machine learning. Even though model interpretation has attracted more attention in recent years, many modeling approaches still focus mainly on performance. To further improve the interpretability of machine learning models, we suggest the adoption of concepts and tools from the well-established framework of component-based multiblock analysis, also known as chemometrics. Nevertheless, artificial neural networks provide greater flexibility in model architecture and thus often deliver superior predictive performance. In this study, we propose a setup to transfer the concepts of component-based statistical models, including multiblock variants of principal component regression and partial least squares regression, to neural network architectures. Thereby, we combine the flexibility of neural networks with the concepts for interpreting block relevance in multiblock methods. In two use cases, we demonstrate how the concept can be implemented in practice and compare it to both common feed-forward neural networks without blocks and statistical component-based multiblock methods. Our results underline that multiblock networks allow for basic model interpretation while matching the performance of ordinary feed-forward neural networks.