Roshan Gopalakrishnan

MaD: Mapping and debugging framework for implementing deep neural network onto a neuromorphic chip with crossbar array of synapses

Jan 01, 2019

Abstract: Neuromorphic systems, or dedicated hardware for neuromorphic computing, are becoming popular with advances in research on device materials for synapses, especially in crossbar architectures, and on algorithms specific to or compatible with neuromorphic hardware. Hence, automated mapping of any deep neural network onto a neuromorphic chip with a crossbar array of synapses, together with an efficient debugging framework, is essential. Here, mapping is defined as the deployment of a section of a deep neural network layer onto a neuromorphic core and the generation of connection lists among populations of neurons to specify the connectivity between the various neuromorphic cores on the chip. Debugging is the verification of the computations performed on the neuromorphic chip during inference. Together these form the Mapping and Debugging (MaD) framework. The MaD framework is general in usage, as it is a Python wrapper that can be integrated with almost any simulator tool for neuromorphic chips. This paper illustrates the MaD framework in detail, considering some optimizations when mapping onto a single neuromorphic core. Classification tasks on the MNIST and CIFAR-10 datasets are considered as test-case implementations of the MaD framework.
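The notion of mapping described in this abstract can be illustrated with a minimal Python sketch. It is not the MaD implementation: the function names, the dictionary layout, and the 256 x 256 core size are all assumptions chosen for illustration. It tiles a fully connected layer onto fixed-size crossbar cores and emits a connection list that routes each layer input to an axon on a core.

```python
def map_dense_layer(n_inputs, n_outputs, core_axons=256, core_neurons=256):
    """Tile an n_inputs x n_outputs weight matrix onto crossbar cores.

    core_axons and core_neurons are hypothetical core dimensions
    (crossbar rows and columns); real chips differ.
    """
    cores = []
    for row_start in range(0, n_inputs, core_axons):
        for col_start in range(0, n_outputs, core_neurons):
            cores.append({
                "core_id": len(cores),
                # Half-open ranges of layer inputs/outputs held by this core.
                "inputs": (row_start, min(row_start + core_axons, n_inputs)),
                "outputs": (col_start, min(col_start + core_neurons, n_outputs)),
            })
    return cores


def connection_list(cores):
    """Return (layer_input_index, core_id, axon_index) triples."""
    conns = []
    for core in cores:
        lo, hi = core["inputs"]
        for i in range(lo, hi):
            conns.append((i, core["core_id"], i - lo))
    return conns


if __name__ == "__main__":
    cores = map_dense_layer(784, 10)
    print(len(cores), "cores,", len(connection_list(cores)), "connections")
```

When a layer has more inputs than a core has axons, each output neuron's sum is split across several cores and has to be combined downstream; this sketch leaves that step out.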

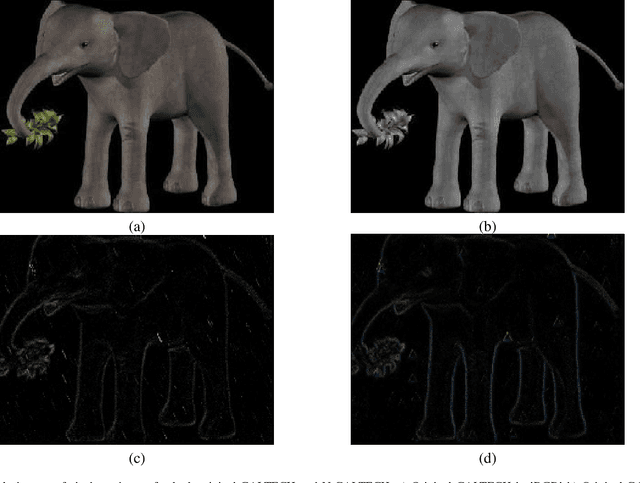

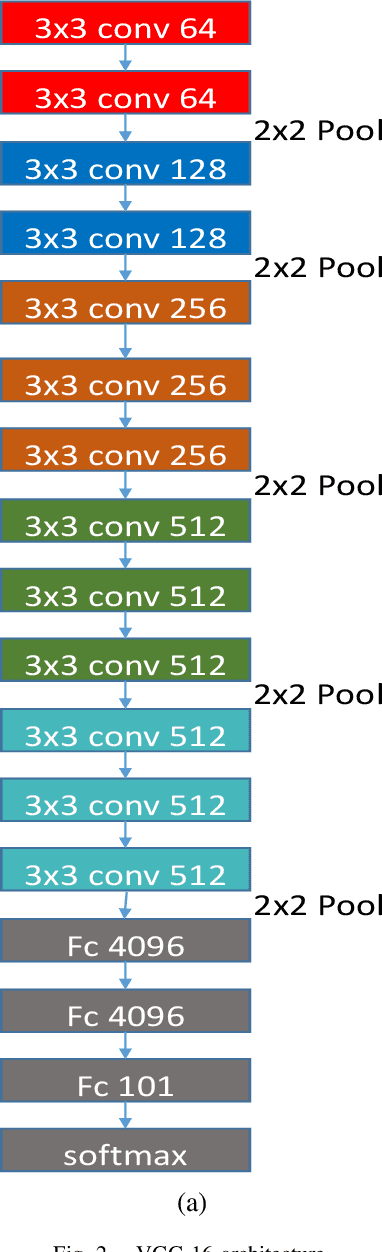

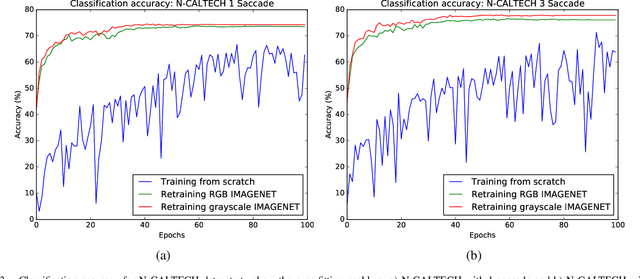

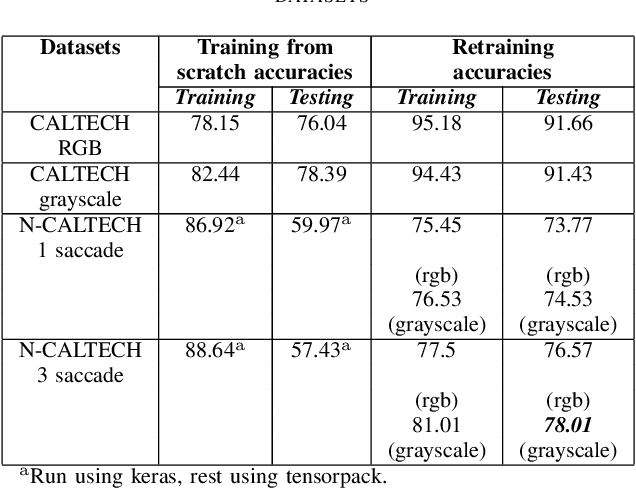

Classifying neuromorphic data using a deep learning framework for image classification

Jul 02, 2018

Abstract: In the field of artificial intelligence, neuromorphic computing has been around for several decades. Deep learning has, however, made so much recent progress that it consistently outperforms neuromorphic learning algorithms in classification accuracy. In image classification specifically, neuromorphic computing has traditionally used either temporal or rate coding to encode the static images in datasets into spike trains. Only recently have neuromorphic vision sensors become widely used by the neuromorphic research community, providing an alternative to such encoding methods. Since then, several neuromorphic datasets obtained by applying such sensors to image datasets (e.g. the neuromorphic CALTECH 101) have been introduced. These data are encoded in spike trains and hence seem ideal for benchmarking neuromorphic learning algorithms. Specifically, we train a deep learning framework used for image classification on the CALTECH 101 and a collapsed version of the neuromorphic CALTECH 101 datasets. We obtained accuracies of 91.66% and 78.01% for the CALTECH 101 and neuromorphic CALTECH 101 datasets, respectively. For CALTECH 101, our accuracy is close to the best reported accuracy, while for neuromorphic CALTECH 101, it outperforms the previous best reported accuracy by over 10%. This raises the question of the suitability of such datasets as benchmarks for neuromorphic learning algorithms.

Triplet Spike Time Dependent Plasticity: A floating-gate Implementation

Dec 29, 2015

Abstract: Synapses play an important role in learning in a neural network; learning rules that modify the synaptic strength based on the timing difference between pre- and post-synaptic spikes are termed Spike Time Dependent Plasticity (STDP). The most commonly used rule posits a weight change based on the time difference between one pre- and one post-synaptic spike and is hence termed doublet STDP (D-STDP). However, D-STDP cannot reproduce the results of many biological experiments; a triplet STDP (T-STDP) rule that considers triplets of spikes as the fundamental unit has been proposed recently to explain these observations. This paper describes a compact implementation of a synapse using a single floating-gate (FG) transistor that can store a weight in a nonvolatile manner, and demonstrates the T-STDP learning rule by modifying drain voltages according to triplets of spikes. We describe a mathematical procedure to obtain control voltages for the FG device for T-STDP and show measurement results from an FG synapse fabricated in a TSMC 0.35 µm CMOS process to support the theory. Possible VLSI implementations of drain voltage waveform generator circuits are also presented with simulation results.
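For readers unfamiliar with the doublet rule mentioned in this abstract, the following Python sketch shows the commonly used exponential-window form of pair-based STDP. It is an illustrative software model only, not the floating-gate circuit or the T-STDP rule from the paper; the function name and all parameter values (amplitudes and time constants) are assumptions.

```python
import math


def doublet_stdp(dt, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Weight change for one pre/post spike pair.

    dt is t_post - t_pre in milliseconds. The amplitudes and time
    constants are hypothetical values, not taken from the paper.
    """
    if dt > 0:
        # Pre before post: potentiation, decaying with the time difference.
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Post before pre: depression, decaying with the time difference.
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


if __name__ == "__main__":
    for dt in (-40.0, -10.0, 10.0, 40.0):
        print(dt, doublet_stdp(dt))
```

A triplet rule additionally makes the update depend on an earlier spike, which this pair-only model cannot express.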
