Rosemary Monahan

Maynooth University

Learning-Infused Formal Reasoning: From Contract Synthesis to Artifact Reuse and Formal Semantics

Feb 02, 2026

Abstract:This vision paper articulates a long-term research agenda for formal methods at the intersection with artificial intelligence, outlining multiple conceptual and technical dimensions and reporting on our ongoing work toward realising this agenda. It advances a forward-looking perspective on the next generation of formal methods based on the integration of automated contract synthesis, semantic artifact reuse, and refinement-based theory. We argue that future verification systems must move beyond isolated correctness proofs toward a cumulative, knowledge-driven paradigm in which specifications, contracts, and proofs are continuously synthesised and transferred across systems. To support this shift, we outline a hybrid framework combining large language models with graph-based representations to enable scalable semantic matching and principled reuse of verification artifacts. Learning-based components provide semantic guidance across heterogeneous notations and abstraction levels, while symbolic matching ensures formal soundness. Grounded in compositional reasoning, this vision points toward verification ecosystems that evolve systematically, leveraging past verification efforts to accelerate future assurance.

ACM Survey Draft on Formalising Software Requirements with Large Language Models

Jun 17, 2025

Abstract:This draft is a working document that summarises ninety-four (94) papers, with additional sections on Traceability of Software Requirements (Section 4), Formal Methods and Its Tools (Section 5), and Unifying Theories of Programming (UTP) and Theory of Institutions (Section 6). Please refer to the abstracts of [7,8]. The key differences between this draft and our recent works with similar titles, i.e. AACS 2025 [7] and SAIV 2025 [8], are as follows. [7] is a two-page submission to the ADAPT Annual Conference, Ireland. Submitted on 18th of March, 2025, it went through a light-weight blind review and was accepted for poster presentation. The conference was held on 15th of May, 2025. [8] is a nine-page paper with an additional nine pages of references and summary tables, submitted to the Symposium on AI Verification (SAIV 2025) on 24th of April, 2025. It went through a rigorous review process. The version uploaded to arXiv.org [8] is an improved version of the submission that addresses the specific suggestions for improving the paper.

Formalising Software Requirements using Large Language Models

Jun 12, 2025

Abstract:This paper is a brief introduction to our recently initiated project named VERIFAI: Traceability and verification of natural language requirements. The project addresses the challenges in the traceability and verification of formal specifications by providing support for the automatic generation of formal specifications and for the traceability of requirements from the initial software design stage through the system's implementation and verification. Approaches explored in this project include Natural Language Processing, the use of ontologies to describe the software system domain, the reuse of existing software artefacts from similar systems (i.e. through similarity-based reuse), large language models to identify and declare the specifications, and the use of artificial intelligence to guide the process.

A Generalised Framework for Property-Driven Machine Learning

May 01, 2025

Abstract:Neural networks have been shown to frequently fail to satisfy critical safety and correctness properties after training, highlighting the pressing need for training methods that incorporate such properties directly. While adversarial training can be used to improve robustness to small perturbations within $\epsilon$-cubes, domains other than computer vision -- such as control systems and natural language processing -- may require more flexible input region specifications via generalised hyper-rectangles. Meanwhile, differentiable logics offer a way to encode arbitrary logical constraints as additional loss terms that guide the learning process towards satisfying these constraints. In this paper, we investigate how these two complementary approaches can be unified within a single framework for property-driven machine learning. We show that well-known properties from the literature are subcases of this general approach, and we demonstrate its practical effectiveness on a case study involving a neural network controller for a drone system. Our framework is publicly available at https://github.com/tflinkow/property-driven-ml.
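The two ingredients named in this abstract can be illustrated with a small, dependency-free sketch. It is not taken from the linked repository: the function names are hypothetical, the constraint translation follows the commonly cited DL2-style scheme (a violated inequality is penalised by the size of the violation), and random sampling stands in for the gradient-based search that adversarial training would normally use to find a worst-case input.

```python
import random

def leq_loss(a, b):
    """Penalty for the constraint a <= b: zero exactly when it holds."""
    return max(0.0, a - b)

def and_loss(*losses):
    """Conjunction: every conjunct must have zero penalty, so sum them."""
    return sum(losses)

def epsilon_cube(x, eps):
    """An epsilon-cube around x, expressed as a hyper-rectangle."""
    return [(xi - eps, xi + eps) for xi in x]

def sample(region, rng):
    """Draw one point from a hyper-rectangle given as (low, high) pairs."""
    return [rng.uniform(lo, hi) for lo, hi in region]

def property_loss(model, region, out_lo, out_hi, n_samples, rng):
    """Largest sampled violation of: for all x in region, out_lo <= model(x) <= out_hi.

    Assumption: random sampling is only a stand-in for a gradient-based
    worst-case search over the input region.
    """
    worst = 0.0
    for _ in range(n_samples):
        y = model(sample(region, rng))
        worst = max(worst, and_loss(leq_loss(out_lo, y), leq_loss(y, out_hi)))
    return worst

if __name__ == "__main__":
    rng = random.Random(0)
    toy_model = lambda x: sum(x)            # hypothetical scalar "network"
    region = [(0.0, 1.0), (-2.0, 2.0)]      # per-dimension bounds, not a cube
    print(property_loss(toy_model, region, -2.0, 3.0, 100, rng))
```

In a training loop this value would be added to the usual data loss with a weighting factor. The hyper-rectangle representation covers $\epsilon$-cubes as the special case where every dimension uses the same half-width.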

Creating a Formally Verified Neural Network for Autonomous Navigation: An Experience Report

Nov 21, 2024

Abstract:The increased reliance of self-driving vehicles on neural networks opens up the challenge of their verification. In this paper we present an experience report, describing a case study which we undertook to explore the design and training of a neural network on a custom dataset for vision-based autonomous navigation. We are particularly interested in the use of machine learning with differentiable logics to obtain networks satisfying basic safety properties by design, guaranteeing the behaviour of the neural network after training. We motivate the choice of a suitable neural network verifier for our purposes and report our observations on the use of neural network verifiers for self-driving systems.

* In Proceedings FMAS 2024, arXiv:2411.13215

Comparing Differentiable Logics for Learning Systems: A Research Preview

Nov 16, 2023

Abstract:Extensive research on formal verification of machine learning (ML) systems indicates that learning from data alone often fails to capture underlying background knowledge. A variety of verifiers have been developed to ensure that a machine-learnt model satisfies correctness and safety properties; however, these verifiers typically assume a trained network with fixed weights. ML-enabled autonomous systems are required not only to detect incorrect predictions but also to self-correct, continuously improving and adapting. A promising approach for creating ML models that inherently satisfy constraints is to encode background knowledge as logical constraints that guide the learning process via so-called differentiable logics. In this research preview, we compare and evaluate various logics from the literature in weakly-supervised contexts, presenting our findings and highlighting open problems for future work. Our experimental results are broadly consistent with results reported previously in the literature; however, learning with differentiable logics introduces a new hyperparameter that is difficult to tune and has significant influence on the effectiveness of the logics.

* In Proceedings FMAS 2023, arXiv:2311.08987

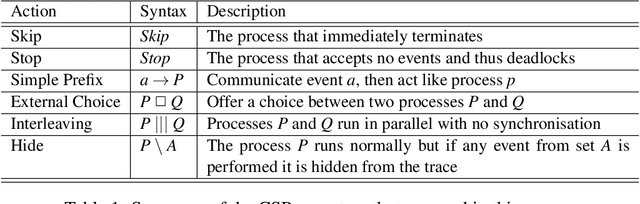

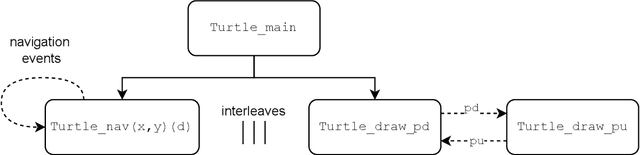

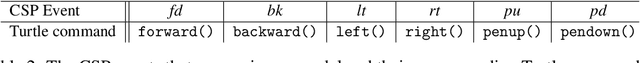

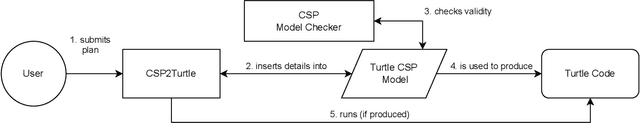

Modelling the Turtle Python library in CSP

Jul 20, 2022

Abstract:Software verification is an important tool in establishing the reliability of critical systems. One potential area of application is in the field of robotics, as robots take on more tasks in both day-to-day areas and highly specialised domains. Robots are usually given a plan to follow; if there are errors in this plan, the robot will not perform reliably. The capability to check plans for errors in advance could prevent this. Python is a popular programming language in the robotics domain, through the use of the Robot Operating System (ROS) and various other libraries. Python's Turtle package provides a mobile agent, which we formally model here using Communicating Sequential Processes (CSP). Our interactive toolchain CSP2Turtle, with CSP model and Python components, enables Turtle plans to be verified in CSP before being executed in Python. This means that certain classes of errors can be avoided, and it provides a starting point for more detailed verification of Turtle programs and more complex robotic systems. We illustrate our approach with examples of robot navigation and obstacle avoidance in a 2D grid-world.

* In Proceedings AREA 2022, arXiv:2207.09058

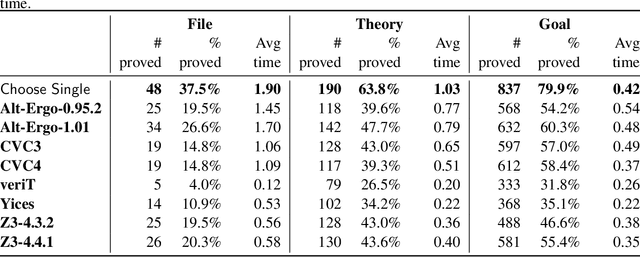

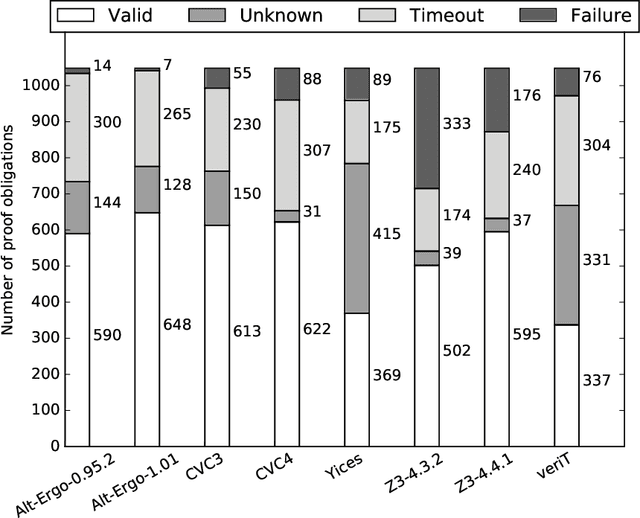

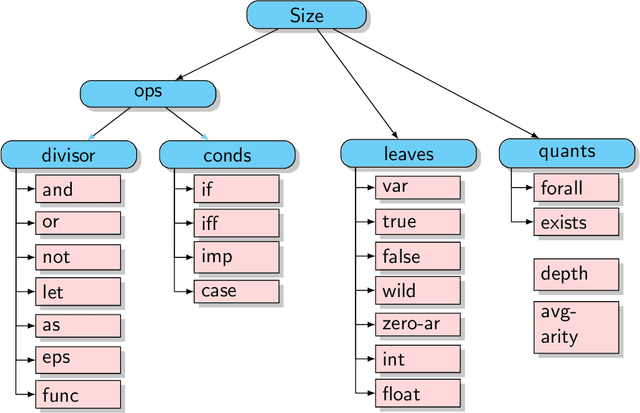

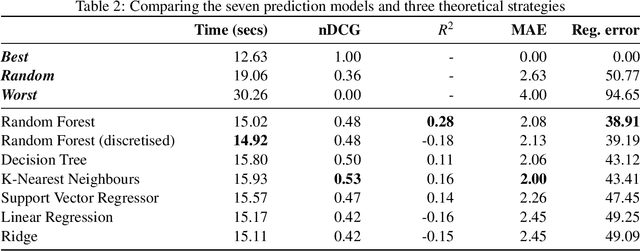

Predicting SMT Solver Performance for Software Verification

Jan 30, 2017

Abstract:The Why3 IDE and verification system facilitates the use of a wide range of Satisfiability Modulo Theories (SMT) solvers through a driver-based architecture. We present Where4: a portfolio-based approach to discharge Why3 proof obligations. We use data analysis and machine learning techniques on static metrics derived from program source code. Our approach benefits software engineers by providing a single utility to delegate proof obligations to the solvers most likely to return a useful result. It does this in a time-efficient way using existing Why3 and solver installations - without requiring low-level knowledge about SMT solver operation from the user.

* In Proceedings F-IDE 2016, arXiv:1701.07925
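The portfolio idea in the abstract above, delegating each proof obligation to the solvers most likely to return a useful result, can be sketched in a few lines. This is an illustration only, not Where4's implementation or the Why3 API: the metric names, the per-solver cost models, and the `run_solver` callback are all hypothetical placeholders for learned predictors and real solver invocations.

```python
from typing import Callable, Dict, List, Optional

Metrics = Dict[str, float]               # static metrics of one proof obligation
CostModel = Callable[[Metrics], float]   # predicted cost; lower is better

def rank_solvers(metrics: Metrics, cost_models: Dict[str, CostModel]) -> List[str]:
    """Order solver names from lowest to highest predicted cost."""
    return sorted(cost_models, key=lambda name: cost_models[name](metrics))

def discharge(metrics: Metrics,
              cost_models: Dict[str, CostModel],
              run_solver: Callable[[str], str]) -> Optional[str]:
    """Try solvers in ranked order; return the first one that answers 'valid'."""
    for name in rank_solvers(metrics, cost_models):
        if run_solver(name) == "valid":
            return name
    return None

if __name__ == "__main__":
    # Hypothetical cost models standing in for trained predictors.
    models = {
        "solver_a": lambda m: 2.0 * m["quantifiers"] + 1.0,
        "solver_b": lambda m: 0.5 * m["size"],
    }
    goal = {"quantifiers": 3.0, "size": 4.0}
    print(rank_solvers(goal, models))
    # Fake solver runner: only solver_a proves this goal.
    print(discharge(goal, models, lambda s: "valid" if s == "solver_a" else "timeout"))
```

Ranking before running is the design point: the expensive step is the solver call, so a cheap prediction from static metrics decides the order in which solvers are tried.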
