Romain Hennequin

An Experimental Comparison Of Multi-view Self-supervised Methods For Music Tagging

Apr 14, 2024

Abstract: Self-supervised learning has emerged as a powerful way to pre-train generalizable machine learning models on large amounts of unlabeled data. It is particularly compelling in the music domain, where obtaining labeled data is time-consuming, error-prone, and ambiguous. During the self-supervised process, models are trained on pretext tasks, with the primary objective of acquiring robust and informative features that can later be fine-tuned for specific downstream tasks. The choice of the pretext task is critical as it guides the model to shape the feature space with meaningful constraints for information encoding. In the context of music, most works have relied on contrastive learning or masking techniques. In this study, we expand the scope of pretext tasks applied to music by investigating and comparing the performance of new self-supervised methods for music tagging. We open-source a simple ResNet model trained on a diverse catalog of millions of tracks. Our results demonstrate that, although most of these pre-training methods result in similar downstream results, contrastive learning consistently results in better downstream performance compared to other self-supervised pre-training methods. This holds true in a limited-data downstream context.
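The abstract does not specify which contrastive objective was used. As a generic illustration only, the sketch below computes an InfoNCE-style loss between two views of the same batch of tracks, assuming the embeddings are already computed; `info_nce`, `cosine`, and the temperature of 0.1 are hypothetical choices, not the paper's configuration.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchors, positives, temperature=0.1):
    """Mean InfoNCE loss over a batch (hypothetical helper).

    anchors[i] and positives[i] embed two views of the same track;
    every other positives[j] in the batch serves as a negative.
    """
    n = len(anchors)
    total = 0.0
    for i in range(n):
        logits = [cosine(anchors[i], positives[j]) / temperature for j in range(n)]
        # Log-sum-exp with the max subtracted for numerical stability.
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
        # Cross-entropy with the matching view as the target class.
        total += log_sum_exp - logits[i]
    return total / n
```

When each anchor is paired with its matching view, the loss is close to zero; swapping the pairs raises it to about 10 at this temperature.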

Distinguishing Fictional Voices: a Study of Authorship Verification Models for Quotation Attribution

Jan 30, 2024

Abstract: Recent approaches for automatically detecting the speaker of an utterance of direct speech often disregard general information about characters in favor of local information found in the context, such as surrounding mentions of entities. In this work, we explore stylistic representations of characters built by encoding their quotes with off-the-shelf pretrained Authorship Verification models in a large corpus of English novels (the Project Dialogism Novel Corpus). Results suggest that the combination of stylistic and topical information captured in some of these models accurately distinguishes characters from one another, but does not necessarily improve over semantic-only models when attributing quotes. However, these results vary across novels, and further investigation of stylometric models tailored specifically to literary texts and to the study of characters is needed.

Ex2Vec: Characterizing Users and Items from the Mere Exposure Effect

Nov 17, 2023

Abstract: The traditional recommendation framework seeks to connect users and content by finding the best possible match based on users' past interactions. However, a good content recommendation is not necessarily similar to what the user has chosen in the past. As humans, users naturally evolve, learn, forget, and get bored; they change their perspective of the world and, in consequence, of the recommendable content. One well-known mechanism that affects user interest is the Mere Exposure Effect: when repeatedly exposed to stimuli, users' interest tends to rise with the initial exposures, reach a peak, and gradually decrease thereafter, resulting in an inverted-U shape. Since previous research has shown that the magnitude of the effect depends on a number of interesting factors, such as stimulus complexity and familiarity, leveraging this effect is a way not only to improve repeated recommendation but also to gain a more in-depth understanding of both users and stimuli. In this work, we present (Mere) Exposure2Vec (Ex2Vec), our model that leverages the Mere Exposure Effect in repeat consumption to derive user and item characterizations and track the evolution of user interest. We validate our model by predicting future music consumption based on repetition and discuss its implications for recommendation scenarios where repetition is common.
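To make the inverted-U shape concrete, one hypothetical curve with the described behavior is f(n) = a * n * exp(-n / p): it rises with the first exposures, peaks at n = p (its derivative a * exp(-n / p) * (1 - n / p) vanishes there), and decays afterwards. This functional form and the parameter names are illustrative assumptions, not the formulation used by Ex2Vec.

```python
import math


def interest(n, amplitude=1.0, peak=5.0):
    """Hypothetical inverted-U interest curve over n exposures.

    f(n) = amplitude * n * exp(-n / peak) rises with the first exposures,
    is maximal at n = peak, and decays afterwards.
    """
    return amplitude * n * math.exp(-n / peak)
```

Evaluated over integer exposure counts, the curve with the default parameters peaks at five exposures.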

On the Consistency of Average Embeddings for Item Recommendation

Aug 30, 2023

Abstract: A prevalent practice in recommender systems consists of averaging item embeddings to represent users or higher-level concepts in the same embedding space. This paper investigates the relevance of such a practice. For this purpose, we propose an expected precision score, designed to measure the consistency of an average embedding relative to the items used for its construction. We subsequently analyze the mathematical expression of this score in a theoretical setting with specific assumptions, as well as its empirical behavior on real-world data from music streaming services. Our results emphasize that real-world averages are less consistent for recommendation, which paves the way for future research to better align real-world embeddings with assumptions from our theoretical setting.
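The expected precision score is defined in the paper and is not reproduced here. A rough, hypothetical proxy for the same idea can be sketched as follows: average the embeddings of a set of items, rank the catalog by inner product with that average, and measure the share of the top k that belongs to the averaged set. The function names and the toy catalog in the usage note are assumptions for illustration.

```python
def average_embedding(vectors):
    """Component-wise mean of equal-length vectors."""
    dim = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def precision_at_k(catalog, item_ids, k):
    """Hypothetical consistency proxy (not the paper's expected precision score).

    catalog maps item id -> embedding; item_ids is the set of items averaged.
    Returns the share of the k catalog items closest to the average
    (by inner product) that belong to item_ids.
    """
    avg = average_embedding([catalog[i] for i in item_ids])
    ranked = sorted(catalog, key=lambda i: dot(catalog[i], avg), reverse=True)
    return sum(1 for i in ranked[:k] if i in item_ids) / k
```

With the toy catalog `{"a": [1.0, 0.0], "b": [0.9, 0.1], "c": [0.0, 1.0], "d": [-1.0, 0.0]}`, averaging the nearby items `a` and `b` gives a precision of 1.0 at k = 2, while averaging the distant items `b` and `d` gives 0.5, because the average lands closer to the unrelated item `c`.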

Of Spiky SVDs and Music Recommendation

Jun 30, 2023

Abstract: The truncated singular value decomposition is a widely used methodology in music recommendation, both for direct similar-item retrieval and for embedding musical items for downstream tasks. This paper investigates a curious effect that we show occurs naturally on many recommendation datasets: spiking formations in the embedding space. We first propose a metric to quantify the strength of this spiking organization, then mathematically prove that it originates from underlying communities of items of varying internal popularity. With this newfound theoretical understanding, we finally open the topic with an industrial use case: estimating how the top-k similar items of music embeddings will change over time as data is added.
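A minimal sketch of similar-item retrieval with a truncated SVD, assuming a dense user-by-item interaction matrix and cosine similarity between item factors. Scaling the item factors by the singular values is one common convention among several and is an assumption here; the function names are hypothetical.

```python
import numpy as np


def item_embeddings(interactions, rank):
    """Item factors from a rank-`rank` truncated SVD of a user x item matrix.

    Computes the thin SVD and keeps the top `rank` components. Rows of the
    result embed items; scaling by the singular values is an assumed
    convention (unscaled right singular vectors are also used).
    """
    x = np.asarray(interactions, dtype=float)
    _, s, vt = np.linalg.svd(x, full_matrices=False)
    return vt[:rank].T * s[:rank]


def top_k_similar(emb, item, k):
    """Indices of the k items most cosine-similar to `item`, excluding itself."""
    norms = np.linalg.norm(emb, axis=1)
    norms[norms == 0] = 1.0  # leave all-zero rows at zero instead of dividing by 0
    unit = emb / norms[:, None]
    sims = unit @ unit[item]
    sims[item] = -np.inf  # never return the query item itself
    return np.argsort(-sims)[:k].tolist()
```

In a toy matrix where items 0 and 1 are always consumed together, as are items 2 and 3, each item's nearest neighbor is its co-consumed partner. For large sparse catalogs, a dedicated truncated solver would replace the full thin SVD used here.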

Attention Mixtures for Time-Aware Sequential Recommendation

Apr 17, 2023

Abstract: Transformers have emerged as powerful methods for sequential recommendation. However, existing architectures often overlook the complex dependencies between user preferences and the temporal context. In this short paper, we introduce MOJITO, an improved Transformer sequential recommender system that addresses this limitation. MOJITO leverages Gaussian mixtures of attention-based temporal context and item embedding representations for sequential modeling. Such an approach makes it possible to accurately predict which items should be recommended next to users, depending on past actions and the temporal context. We demonstrate the relevance of our approach by empirically outperforming existing Transformers for sequential recommendation on several real-world datasets.

A Human Subject Study of Named Entity Recognition in Conversational Music Recommendation Queries

Mar 13, 2023

Abstract: We conducted a human subject study of named entity recognition (NER) on a noisy corpus of conversational music recommendation queries containing many irregular and novel named entities. We evaluated human NER linguistic behaviour in these challenging conditions and compared it with the most common NER systems today, fine-tuned transformers. Our goal was to learn about the task in order to guide the design of better evaluation methods and NER algorithms. The results showed that NER in our context was quite hard for both humans and algorithms under a strict evaluation schema; humans had higher precision, while the model had higher recall because of entity exposure, especially during pre-training; and entity types had different error patterns (e.g. frequent typing errors for artists). The released corpus goes beyond predefined frames of interaction and can support future work in conversational music recommendation.

New Frontiers in Graph Autoencoders: Joint Community Detection and Link Prediction

Nov 16, 2022

Abstract: Graph autoencoders (GAE) and variational graph autoencoders (VGAE) have emerged as powerful methods for link prediction (LP). Their performance is less impressive on community detection (CD), where they are often outperformed by simpler alternatives such as the Louvain method. It remains unclear to what extent one can improve CD with GAE and VGAE, especially in the absence of node features. It is moreover uncertain whether one could do so while simultaneously preserving good performance on LP in a multi-task setting. In this workshop paper, summarizing results from our journal publication (Salha-Galvan et al. 2022), we show that jointly addressing these two tasks with high accuracy is possible. For this purpose, we introduce a community-preserving message passing scheme, doping our GAE and VGAE encoders by considering both the initial graph and Louvain-based prior communities when computing embedding spaces. Inspired by modularity-based clustering, we further propose novel training and optimization strategies specifically designed for joint LP and CD. We demonstrate the empirical effectiveness of our approach, referred to as Modularity-Aware GAE and VGAE, on various real-world graphs.
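For reference, the classical Newman modularity of a partition is Q = (1 / 2m) Σ_ij [A_ij - k_i k_j / 2m] δ(c_i, c_j), where A is the adjacency matrix, k_i the degree of node i, m the number of edges, and δ(c_i, c_j) equals 1 when nodes i and j share a community. The sketch below computes it for an undirected, unweighted graph without self-loops. It only illustrates the clustering criterion that the abstract cites as inspiration; the modularity-aware training and optimization strategies of the paper are not reproduced, and the function name is hypothetical.

```python
def modularity(adj, communities):
    """Newman modularity of a partition of an undirected, unweighted graph.

    adj is a symmetric 0/1 adjacency matrix without self-loops;
    communities[i] is the community label of node i.
    Q = (1 / 2m) * sum_ij [A_ij - k_i * k_j / 2m] * [c_i == c_j]
    """
    n = len(adj)
    degrees = [sum(row) for row in adj]
    two_m = sum(degrees)  # each edge is counted twice
    q = 0.0
    for i in range(n):
        for j in range(n):
            if communities[i] == communities[j]:
                q += adj[i][j] - degrees[i] * degrees[j] / two_m
    return q / two_m
```

For two triangles joined by a single edge, splitting the graph into its two triangles gives Q = 5/14 (about 0.357), while putting every node in one community gives Q = 0.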

Discovery Dynamics: Leveraging Repeated Exposure for User and Music Characterization

Oct 28, 2022

Abstract: Repetition in music consumption is a common phenomenon. It is notably more frequent than in the consumption of other media, such as books and movies. In this paper, we show that one particularly interesting repetitive behavior arises when users are consuming new items. Users' interest tends to rise with the first repetitions and reaches a peak, after which it decreases with subsequent exposures, resulting in an inverted-U shape. This behavior, which has been extensively studied in psychology, is called the mere exposure effect. We further show how a number of content-based and user-based factors, well documented in the literature on the mere exposure effect, modulate the magnitude of the effect. Given the vast amount of data on users discovering new songs every day on music streaming platforms, these findings enable new ways to characterize music, users, and their relationships. Ultimately, they open up the possibility of developing new recommender system paradigms based on these characterizations.

Learning Unsupervised Hierarchies of Audio Concepts

Jul 21, 2022

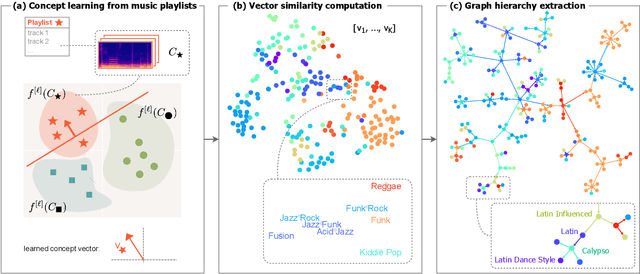

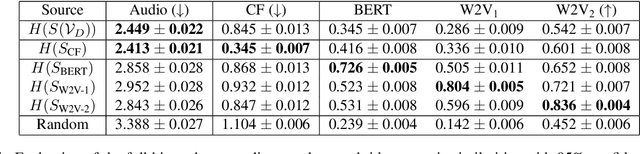

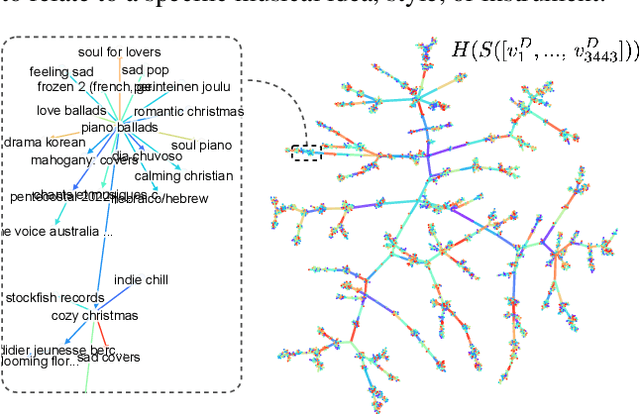

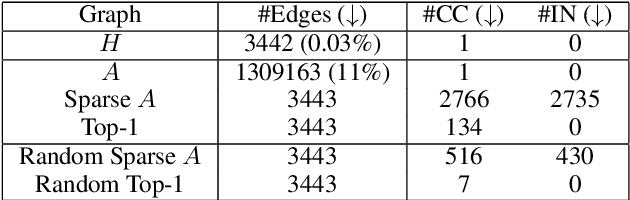

Abstract: Music signals are difficult to interpret from their low-level features, perhaps even more so than images: e.g. highlighting part of a spectrogram or an image is often insufficient to convey high-level ideas that are genuinely relevant to humans. In computer vision, concept learning was therefore proposed to adjust explanations to the right abstraction level (e.g. detecting clinical concepts from radiographs). These methods have yet to be used for MIR. In this paper, we adapt concept learning to the realm of music, with its particularities. For instance, music concepts are typically non-independent and of mixed nature (e.g. genre, instruments, mood), unlike previous work that assumed disentangled concepts. We propose a method to learn numerous music concepts from audio and then automatically hierarchise them to expose their mutual relationships. We conduct experiments on datasets of playlists from a music streaming service, which serve as a few annotated examples for diverse concepts. Evaluations show that the mined hierarchies are aligned both with ground-truth hierarchies of concepts (when available) and with proxy sources of concept similarity in the general case.