Rohan Alexander

Limits to AI Growth: The Ecological and Social Consequences of Scaling

Jan 29, 2025

Abstract: The accelerating development and deployment of AI technologies depend on the continued ability to scale their infrastructure. This has implied increasing amounts of monetary investment and natural resources. Frontier AI applications have thus resulted in rising financial, environmental, and social costs. While the factors that AI scaling depends on reach their limits, the push for its accelerated advancement and entrenchment continues. In this paper, we provide a holistic review of AI scaling using four lenses (technical, economic, ecological, and social) and review the relationships between these lenses to explore the dynamics of AI growth. We do so by drawing on system dynamics concepts including archetypes such as "limits to growth" to model the dynamic complexity of AI scaling and synthesize several perspectives. Our work maps out the entangled relationships between the technical, economic, ecological and social perspectives and the apparent limits to growth. The analysis explains how industry's responses to external limits enable continued (but temporary) scaling and how this benefits Big Tech while externalizing social and environmental damages. To avoid an "overshoot and collapse" trajectory, we advocate for realigning priorities and norms around scaling to prioritize sustainable and mindful advancements.

Detecting Hate Speech with GPT-3

Mar 23, 2021

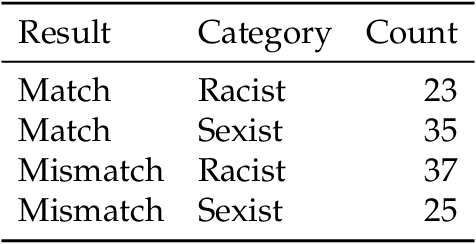

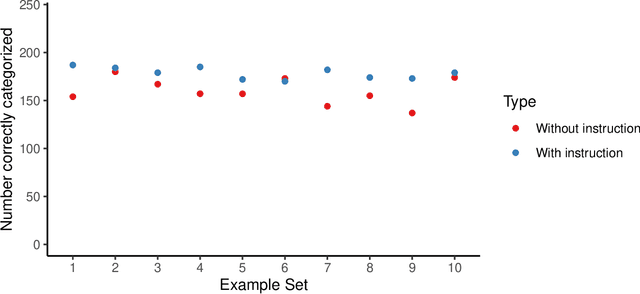

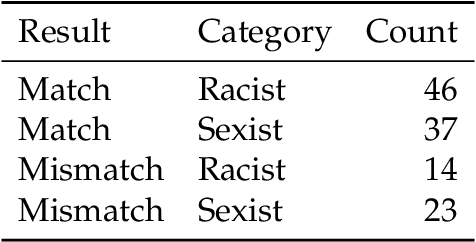

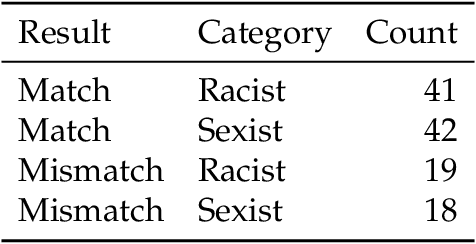

Abstract: Sophisticated language models such as OpenAI's GPT-3 can generate hateful text that targets marginalized groups. Given this capacity, we are interested in whether large language models can be used to identify hate speech and classify text as sexist or racist. We use GPT-3 to identify sexist and racist text passages with zero-, one-, and few-shot learning. We find that with zero- and one-shot learning, GPT-3 is able to identify sexist or racist text with an accuracy between 48 per cent and 69 per cent. With few-shot learning and an instruction included in the prompt, the model's accuracy can be as high as 78 per cent. We conclude that large language models have a role to play in hate speech detection, and that with further development language models could be used to counter hate speech and even self-police.
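
To make the zero-, one-, and few-shot distinction concrete, the following is a minimal sketch of how such prompts might be assembled. The function name `build_prompt`, the `Text:`/`Label:` layout, and the placeholder passages are assumptions for illustration only; they are not the prompts used in the paper, and no model API is called.

```python
def build_prompt(text, examples=(), instruction=None):
    """Assemble a classification prompt (hypothetical format).

    examples: sequence of (passage, label) pairs shown to the model first.
      0 examples -> zero-shot, 1 -> one-shot, several -> few-shot.
    instruction: optional sentence describing the task, placed first.
    """
    parts = []
    if instruction:
        parts.append(instruction)
    # Each labelled example is rendered in the same layout as the query.
    for passage, label in examples:
        parts.append(f"Text: {passage}\nLabel: {label}")
    # The query ends with an open "Label:" for the model to complete.
    parts.append(f"Text: {text}\nLabel:")
    return "\n\n".join(parts)


# Placeholder passages stand in for real labelled data.
zero_shot = build_prompt("<passage to classify>")
few_shot = build_prompt(
    "<passage to classify>",
    examples=[("<example passage 1>", "sexist"),
              ("<example passage 2>", "not sexist")],
    instruction="Classify each text as sexist or not sexist.",
)
```

The only difference between the settings in this sketch is how many labelled examples precede the query and whether an instruction is included, which mirrors the comparison described in the abstract.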

On consistency scores in text data with an implementation in R

Jan 13, 2021

Abstract: In this paper, we introduce a reproducible cleaning process for the text extracted from PDFs using n-gram models. Our approach compares the originally extracted text with the text generated from, or expected by, these models using earlier text as stimulus. To guide this process, we introduce the notion of a consistency score, which refers to the proportion of text that is expected by the model. This is used to monitor changes during the cleaning process, and across different corpuses. We illustrate our process on text from the book Jane Eyre and introduce both a Shiny application and an R package to make our process easier for others to adopt.
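
The idea of a consistency score can be illustrated with a small sketch. It is written in Python rather than R and does not reproduce the paper's R package. It assumes a simple count-based n-gram model, and it assumes a token counts as "expected" when it is among the model's `top_k` most frequent continuations of the preceding context. The function names and that definition of "expected" are illustrative assumptions.

```python
from collections import Counter, defaultdict


def train_ngram(tokens, n=2):
    """Count which token follows each (n-1)-token context."""
    model = defaultdict(Counter)
    for i in range(len(tokens) - n + 1):
        context = tuple(tokens[i:i + n - 1])
        model[context][tokens[i + n - 1]] += 1
    return model


def consistency_score(tokens, model, n=2, top_k=1):
    """Proportion of tokens that the model expects given earlier text.

    Assumption: "expected" means the token is one of the top_k most
    frequent continuations of its preceding (n-1)-token context.
    """
    checked = 0
    expected = 0
    for i in range(n - 1, len(tokens)):
        context = tuple(tokens[i - n + 1:i])
        counts = model.get(context, Counter())
        candidates = [word for word, _ in counts.most_common(top_k)]
        checked += 1
        if tokens[i] in candidates:
            expected += 1
    return expected / checked if checked else 0.0


# Toy data: a clean reference and a version with one extraction error.
reference = "it was a dark and stormy night".split()
extracted = "it was a d4rk and stormy night".split()

model = train_ngram(reference, n=2)
clean_score = consistency_score(reference, model)
noisy_score = consistency_score(extracted, model)
```

In this toy case the garbled token lowers the score twice: once because it is not the expected continuation, and once because the token after it follows a context the model has never seen. Tracking the score before and after each cleaning step is one way to monitor whether the step helped.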
