Robert Moakler

Enhancing Transparency and Control when Drawing Data-Driven Inferences about Individuals

Jun 26, 2016

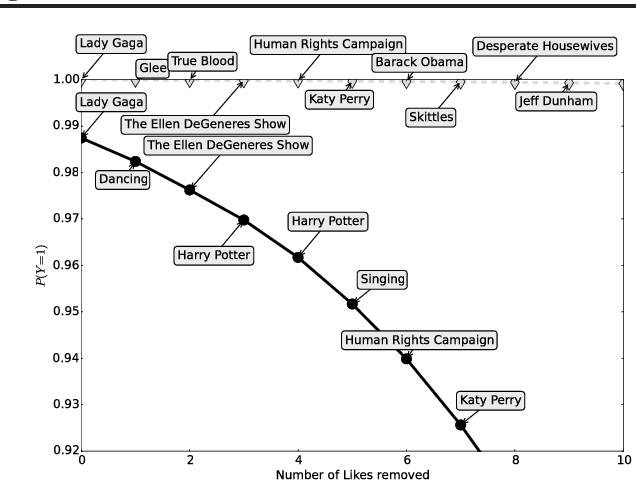

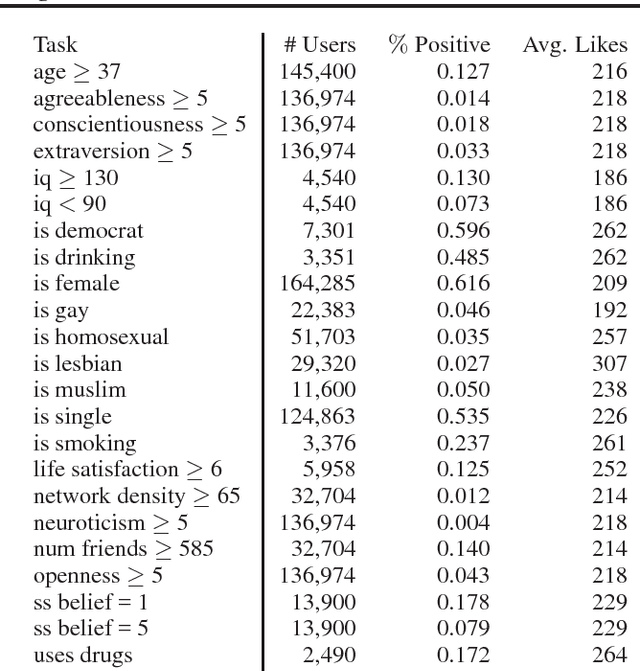

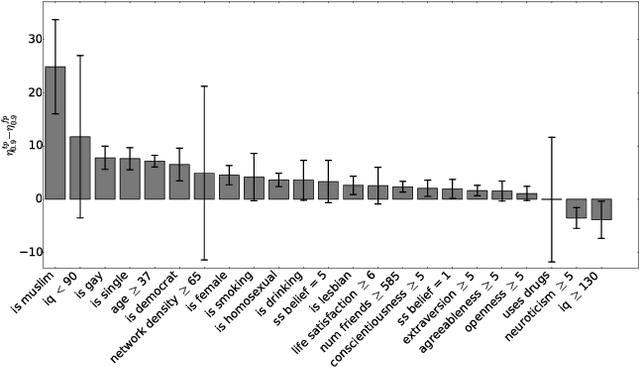

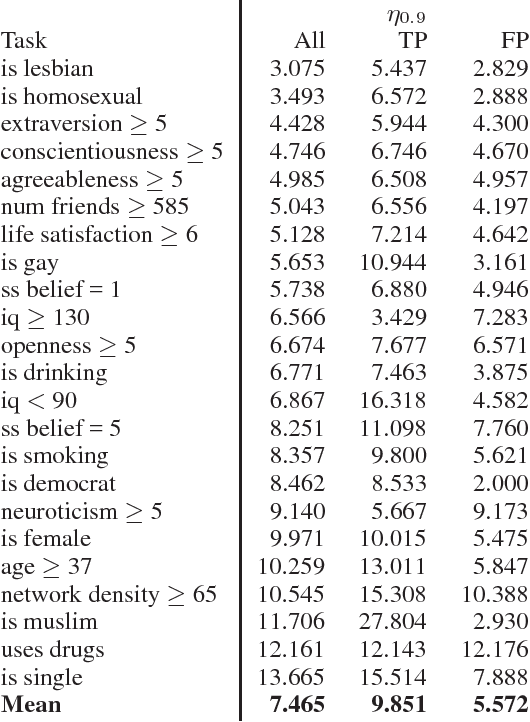

Abstract: Recent studies have shown that information disclosed on social network sites (such as Facebook) can be used to predict personal characteristics with surprisingly high accuracy. In this paper we examine a method to give online users transparency into why certain inferences are made about them by statistical models, and control to inhibit those inferences by hiding ("cloaking") certain personal information from inference. We use this method to examine whether such transparency and control would be a reasonable goal by assessing how difficult it would be for users to actually inhibit inferences. Applying the method to data from a large collection of real users on Facebook, we show that a user must cloak only a small portion of her Facebook Likes in order to inhibit inferences about her personal characteristics. However, we also show that in response a firm could change its modeling of users to make cloaking more difficult.
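To make the idea of cloaking concrete, the following is a minimal sketch and not the paper's actual procedure. It assumes a logistic model with one weight per Like and a greedy strategy that hides the highest-weight Likes first; the function name `cloak_likes`, the weights, the bias, and the 0.5 decision threshold are all hypothetical and chosen only for illustration.

```python
import math


def cloak_likes(user_likes, weights, bias, threshold=0.5):
    """Greedily hide Likes until the predicted probability falls below threshold.

    Assumption: the inference comes from a logistic model whose score is
    bias + sum of per-Like weights. This is an illustrative stand-in, not
    the model used in the paper.
    """

    def probability(likes):
        z = bias + sum(weights.get(like, 0.0) for like in likes)
        return 1.0 / (1.0 + math.exp(-z))

    visible = set(user_likes)
    cloaked = []
    while probability(visible) >= threshold:
        # Only Likes with positive weight push the score toward the inference.
        candidates = sorted(l for l in visible if weights.get(l, 0.0) > 0)
        if not candidates:
            break  # nothing left to hide that would lower the score
        strongest = max(candidates, key=lambda l: weights[l])
        visible.remove(strongest)
        cloaked.append(strongest)
    return cloaked, probability(visible)


# Hypothetical weights for three Likes.
example_weights = {"page_a": 2.0, "page_b": 1.0, "page_c": -0.5}
hidden, final_prob = cloak_likes(
    ["page_a", "page_b", "page_c"], example_weights, bias=-1.0
)
print(hidden, round(final_prob, 3))
```

In this toy setting, hiding a single high-weight Like is enough to push the score below the threshold, which mirrors the abstract's claim that only a small portion of Likes needs to be cloaked. A firm that spread predictive weight across many Likes would force the loop to hide more of them, which is the countermeasure the abstract describes.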
