Richard Kimera

Data Augmentation With Back translation for Low Resource languages: A case of English and Luganda

May 05, 2025

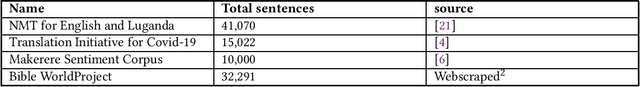

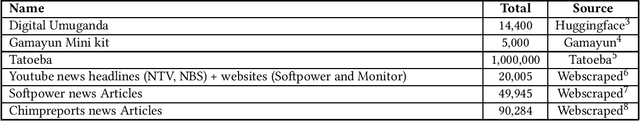

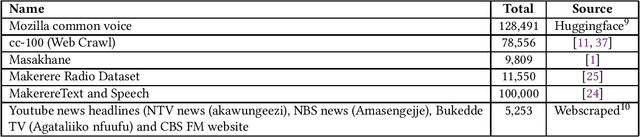

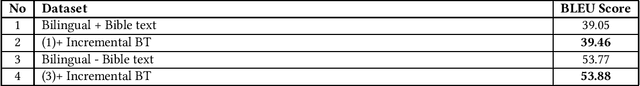

Abstract:In this paper,we explore the application of Back translation (BT) as a semi-supervised technique to enhance Neural Machine Translation(NMT) models for the English-Luganda language pair, specifically addressing the challenges faced by low-resource languages. The purpose of our study is to demonstrate how BT can mitigate the scarcity of bilingual data by generating synthetic data from monolingual corpora. Our methodology involves developing custom NMT models using both publicly available and web-crawled data, and applying Iterative and Incremental Back translation techniques. We strategically select datasets for incremental back translation across multiple small datasets, which is a novel element of our approach. The results of our study show significant improvements, with translation performance for the English-Luganda pair exceeding previous benchmarks by more than 10 BLEU score units across all translation directions. Additionally, our evaluation incorporates comprehensive assessment metrics such as SacreBLEU, ChrF2, and TER, providing a nuanced understanding of translation quality. The conclusion drawn from our research confirms the efficacy of BT when strategically curated datasets are utilized, establishing new performance benchmarks and demonstrating the potential of BT in enhancing NMT models for low-resource languages.

* NLPIR '24: Proceedings of the 2024 8th International Conference on Natural Language Processing and Information Retrieval

Advancing AI with Integrity: Ethical Challenges and Solutions in Neural Machine Translation

Apr 01, 2024Abstract:This paper addresses the ethical challenges of Artificial Intelligence in Neural Machine Translation (NMT) systems, emphasizing the imperative for developers to ensure fairness and cultural sensitivity. We investigate the ethical competence of AI models in NMT, examining the Ethical considerations at each stage of NMT development, including data handling, privacy, data ownership, and consent. We identify and address ethical issues through empirical studies. These include employing Transformer models for Luganda-English translations and enhancing efficiency with sentence mini-batching. And complementary studies that refine data labeling techniques and fine-tune BERT and Longformer models for analyzing Luganda and English social media content. Our second approach is a literature review from databases such as Google Scholar and platforms like GitHub. Additionally, the paper probes the distribution of responsibility between AI systems and humans, underscoring the essential role of human oversight in upholding NMT ethical standards. Incorporating a biblical perspective, we discuss the societal impact of NMT and the broader ethical responsibilities of developers, positing them as stewards accountable for the societal repercussions of their creations.

Enhanced Labeling Technique for Reddit Text and Fine-Tuned Longformer Models for Classifying Depression Severity in English and Luganda

Jan 25, 2024Abstract:Depression is a global burden and one of the most challenging mental health conditions to control. Experts can detect its severity early using the Beck Depression Inventory (BDI) questionnaire, administer appropriate medication to patients, and impede its progression. Due to the fear of potential stigmatization, many patients turn to social media platforms like Reddit for advice and assistance at various stages of their journey. This research extracts text from Reddit to facilitate the diagnostic process. It employs a proposed labeling approach to categorize the text and subsequently fine-tunes the Longformer model. The model's performance is compared against baseline models, including Naive Bayes, Random Forest, Support Vector Machines, and Gradient Boosting. Our findings reveal that the Longformer model outperforms the baseline models in both English (48%) and Luganda (45%) languages on a custom-made dataset.

Building a Parallel Corpus and Training Translation Models Between Luganda and English

Jan 07, 2023Abstract:Neural machine translation (NMT) has achieved great successes with large datasets, so NMT is more premised on high-resource languages. This continuously underpins the low resource languages such as Luganda due to the lack of high-quality parallel corpora, so even 'Google translate' does not serve Luganda at the time of this writing. In this paper, we build a parallel corpus with 41,070 pairwise sentences for Luganda and English which is based on three different open-sourced corpora. Then, we train NMT models with hyper-parameter search on the dataset. Experiments gave us a BLEU score of 21.28 from Luganda to English and 17.47 from English to Luganda. Some translation examples show high quality of the translation. We believe that our model is the first Luganda-English NMT model. The bilingual dataset we built will be available to the public.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge