Improved Algorithms for Misspecified Linear Markov Decision Processes

Sep 12, 2021 · Daniel Vial, Advait Parulekar, Sanjay Shakkottai, R. Srikant

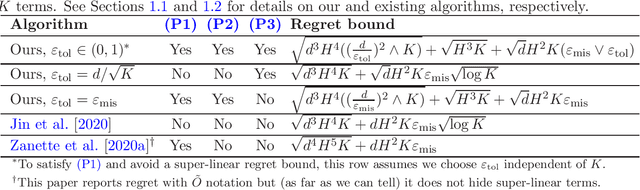

For the misspecified linear Markov decision process (MLMDP) model of Jin et al. [2020], we propose an algorithm with three desirable properties. (P1) Its regret after $K$ episodes scales as $K \max \{ \varepsilon_{\text{mis}}, \varepsilon_{\text{tol}} \}$, where $\varepsilon_{\text{mis}}$ is the degree of misspecification and $\varepsilon_{\text{tol}}$ is a user-specified error tolerance. (P2) Its space and per-episode time complexities remain bounded as $K \rightarrow \infty$. (P3) It does not require $\varepsilon_{\text{mis}}$ as input. To our knowledge, this is the first algorithm satisfying all three properties. For concrete choices of $\varepsilon_{\text{tol}}$, we also improve existing regret bounds (up to log factors) while achieving either (P2) or (P3) (existing algorithms satisfy neither). At a high level, our algorithm generalizes (to MLMDPs) and refines the Sup-Lin-UCB algorithm, which Takemura et al. [2021] recently showed satisfies (P3) in the contextual bandit setting.

Linear Convergence of Entropy-Regularized Natural Policy Gradient with Linear Function Approximation

Jun 08, 2021 · Semih Cayci, Niao He, R. Srikant

Natural policy gradient (NPG) methods with function approximation achieve impressive empirical success in reinforcement learning problems with large state-action spaces. However, theoretical understanding of their convergence behavior remains limited in the function approximation setting. In this paper, we perform a finite-time analysis of NPG with linear function approximation and softmax parameterization, and prove for the first time that the widely used entropy regularization method, which encourages exploration, leads to a linear convergence rate. We adopt a Lyapunov drift analysis to prove the convergence results and explain the effectiveness of entropy regularization in improving the convergence rates.

Regret Bounds for Stochastic Shortest Path Problems with Linear Function Approximation

May 04, 2021 · Daniel Vial, Advait Parulekar, Sanjay Shakkottai, R. Srikant

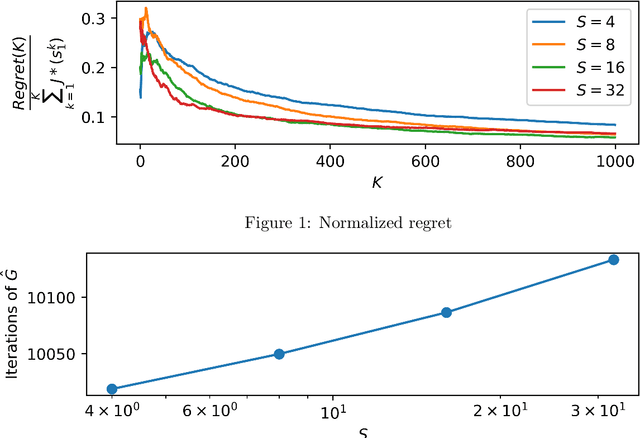

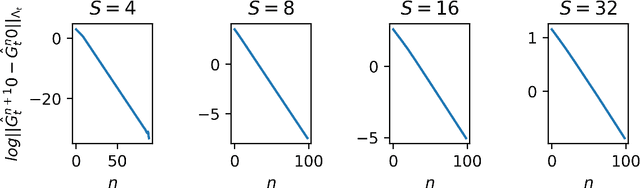

We propose two algorithms for episodic stochastic shortest path problems with linear function approximation. The first is computationally expensive but provably obtains $\tilde{O} (\sqrt{B_\star^3 d^3 K/c_{\min}} )$ regret, where $B_\star$ is a (known) upper bound on the optimal cost-to-go function, $d$ is the feature dimension, $K$ is the number of episodes, and $c_{\min}$ is the minimal cost of non-goal state-action pairs (assumed to be positive). The second is computationally efficient in practice, and we conjecture that it obtains the same regret bound. Both algorithms are based on an optimistic least-squares version of value iteration analogous to the finite-horizon backward induction approach from Jin et al. [2020]. To the best of our knowledge, these are the first regret bounds for stochastic shortest path that are independent of the size of the state and action spaces.

Achieving Small Test Error in Mildly Overparameterized Neural Networks

Apr 24, 2021 · Shiyu Liang, Ruoyu Sun, R. Srikant

Recent theoretical works on over-parameterized neural nets have focused on two aspects: optimization and generalization. Many existing works that study optimization and generalization together are based on the neural tangent kernel and require a very large width. In this work, we are interested in the following question: for a binary classification problem with a two-layer mildly over-parameterized ReLU network, can we find a point with small test error in polynomial time? We first show that the landscape of loss functions with explicit regularization has the following property: all local minima, and certain other points that are stationary only in certain directions, achieve small test error. We then prove that for convolutional neural nets, there is an algorithm which finds one of these points in polynomial time (in the input dimension and the number of data points). In addition, we prove that for a fully connected neural net, with an additional assumption on the data distribution, there is a polynomial time algorithm.

Sample Complexity and Overparameterization Bounds for Projection-Free Neural TD Learning

Mar 02, 2021 · Semih Cayci, Siddhartha Satpathi, Niao He, R. Srikant

We study the dynamics of temporal-difference learning with neural network-based value function approximation over a general state space, namely, *neural TD learning*. Existing analyses of neural TD learning rely on either infinite-width analysis or constraining the network parameters in a (random) compact set; as a result, an extra projection step is required at each iteration. This paper establishes a new convergence analysis of neural TD learning *without any projection*. We show that projection-free TD learning equipped with a two-layer ReLU network of any width exceeding $\mathrm{poly}(\overline{\nu},1/\epsilon)$ converges to the true value function with error $\epsilon$ given $\mathrm{poly}(\overline{\nu},1/\epsilon)$ iterations or samples, where $\overline{\nu}$ is an upper bound on the RKHS norm of the value function induced by the neural tangent kernel. Our sample complexity and overparameterization bounds are based on a drift analysis of the network parameters as a stopped random process in the lazy training regime.

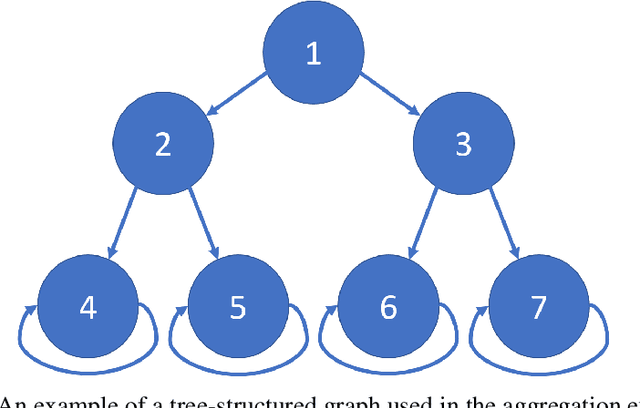

Optimistic Policy Iteration for MDPs with Acyclic Transient State Structure

Feb 13, 2021 · Joseph Lubars, Anna Winnicki, Michael Livesay, R. Srikant

We consider Markov Decision Processes (MDPs) in which every stationary policy induces the same graph structure for the underlying Markov chain and further, the graph has the following property: if we replace each recurrent class by a node, then the resulting graph is acyclic. For such MDPs, we prove the convergence of the stochastic dynamics associated with a version of optimistic policy iteration (OPI), suggested in Tsitsiklis (2002), in which the values associated with all the nodes visited during each iteration of the OPI are updated.

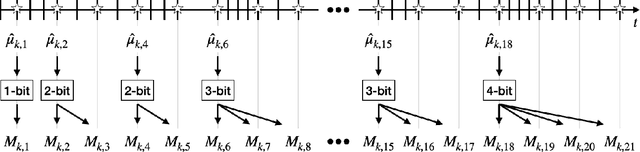

One-bit feedback is sufficient for upper confidence bound policies

Dec 04, 2020 · Daniel Vial, Sanjay Shakkottai, R. Srikant

We consider a variant of the traditional multi-armed bandit problem in which each arm is only able to provide one-bit feedback during each pull based on its past history of rewards. Our main result is the following: given an upper confidence bound policy which uses full-reward feedback, there exists a coding scheme for generating one-bit feedback, and a corresponding decoding scheme and arm selection policy, such that the ratio of the regret achieved by our policy to the regret of the full-reward feedback policy asymptotically approaches one.
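For orientation, the kind of full-reward upper confidence bound policy that the abstract takes as its starting point can be sketched as below. This is a minimal sketch of the standard UCB1 index rule, not the one-bit coding and decoding scheme of the paper, which the abstract does not specify. The function name `ucb1`, the uniform reward noise, and the example arm means are illustrative assumptions.

```python
import math
import random


def ucb1(arm_means, horizon, seed=0):
    """Run standard UCB1 with full (real-valued) reward feedback.

    Assumption: rewards are drawn uniformly from [mean - 0.2, mean + 0.2],
    so the means should lie in [0.2, 0.8] to keep rewards inside [0, 1].
    Returns the pull counts per arm and the cumulative pseudo-regret.
    """
    rng = random.Random(seed)
    n_arms = len(arm_means)
    counts = [0] * n_arms   # number of pulls of each arm
    sums = [0.0] * n_arms   # sum of observed rewards of each arm
    best_mean = max(arm_means)
    regret = 0.0

    for t in range(1, horizon + 1):
        if t <= n_arms:
            # Pull every arm once so that each index is well defined.
            arm = t - 1
        else:
            # Index = empirical mean + exploration bonus.
            arm = max(
                range(n_arms),
                key=lambda i: sums[i] / counts[i]
                + math.sqrt(2.0 * math.log(t) / counts[i]),
            )
        reward = rng.uniform(arm_means[arm] - 0.2, arm_means[arm] + 0.2)
        counts[arm] += 1
        sums[arm] += reward
        regret += best_mean - arm_means[arm]

    return counts, regret


if __name__ == "__main__":
    pulls, total_regret = ucb1([0.2, 0.8], horizon=2000)
    print("pulls per arm:", pulls)
    print("pseudo-regret:", round(total_regret, 2))
```

In the one-bit setting described above, the real-valued `reward` would not be visible to the learner; only a single bit computed by the arm from its reward history would be.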

Combining Reinforcement Learning with Model Predictive Control for On-Ramp Merging

Nov 17, 2020 · Joseph Lubars, Harsh Gupta, Adnan Raja, R. Srikant, Liyun Li, Xinzhou Wu

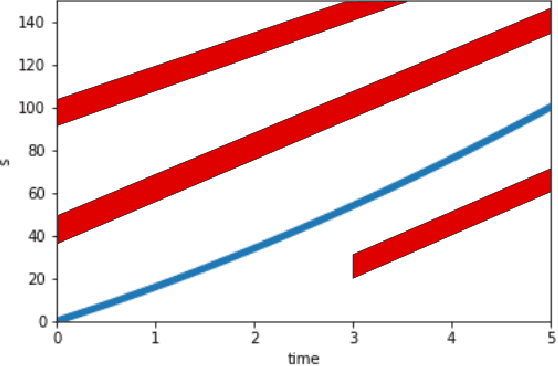

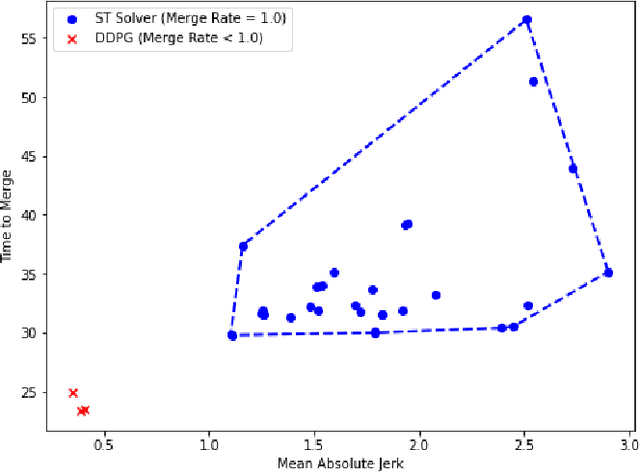

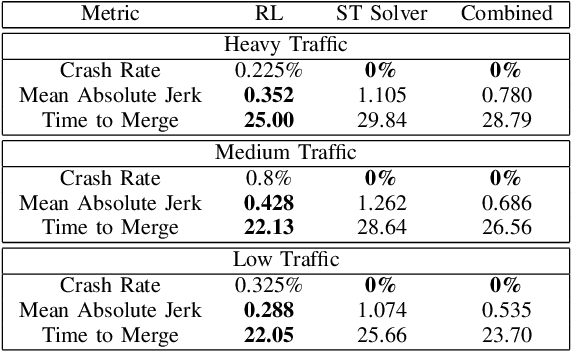

We consider the problem of designing an algorithm to allow a car to autonomously merge onto a highway from an on-ramp. Two broad classes of techniques have been proposed to solve motion planning problems in autonomous driving: Model Predictive Control (MPC) and Reinforcement Learning (RL). In this paper, we first establish the strengths and weaknesses of state-of-the-art MPC and RL-based techniques through simulations. We show that the performance of the RL agent is worse than that of the MPC solution from the perspective of safety and robustness to out-of-distribution traffic patterns, i.e., traffic patterns which were not seen by the RL agent during training. On the other hand, the performance of the RL agent is better than that of the MPC solution when it comes to efficiency and passenger comfort. We subsequently present an algorithm which blends the model-free RL agent with the MPC solution and show that it provides better trade-offs between all metrics: passenger comfort, efficiency, crash rate, and robustness.

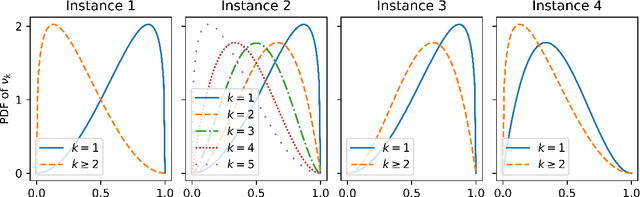

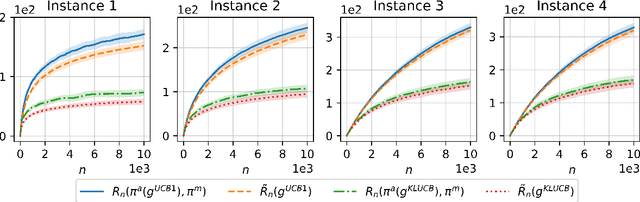

On the Consistency of Maximum Likelihood Estimators for Causal Network Identification

Oct 17, 2020 · Xiaotian Xie, Dimitrios Katselis, Carolyn L. Beck, R. Srikant

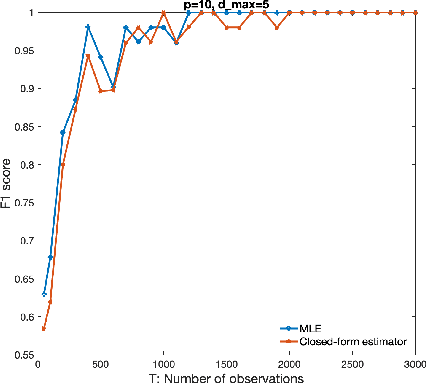

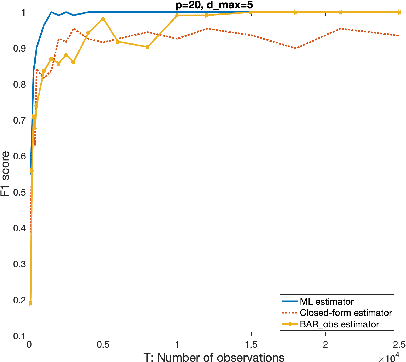

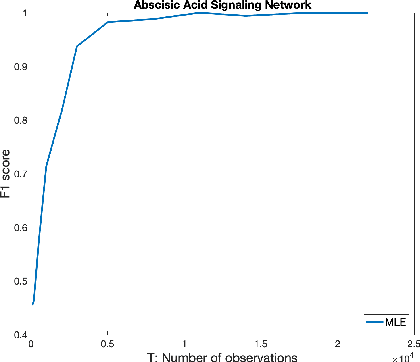

We consider the problem of identifying parameters of a particular class of Markov chains, called Bernoulli Autoregressive (BAR) processes. The structure of any BAR model is encoded by a directed graph. Incoming edges to a node in the graph indicate that the state of the node at a particular time instant is influenced by the states of the corresponding parental nodes in the previous time instant. The associated edge weights determine the corresponding level of influence from each parental node. In the simplest setup, the Bernoulli parameter of a particular node's state variable is a convex combination of the parental node states in the previous time instant and an additional Bernoulli noise random variable. This paper focuses on the problem of edge weight identification using Maximum Likelihood (ML) estimation and proves that the ML estimator is strongly consistent for two variants of the BAR model. We additionally derive closed-form estimators for the aforementioned two variants and prove their strong consistency.
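The "simplest setup" described above can be made concrete with a small simulation. This is a sketch under stated assumptions rather than the paper's exact model: the symbols `A` (edge weights), `b` (noise weights), and `rho` (noise parameters), and the constraint that each row of `A` plus the matching entry of `b` sums to one, are an illustrative reading of "convex combination". The function name `simulate_bar` is hypothetical.

```python
import random


def simulate_bar(A, b, rho, x0, steps, seed=0):
    """Simulate a Bernoulli autoregressive (BAR) process.

    Assumed update: node i's next state is Bernoulli with parameter
        p_i = sum_j A[i][j] * x_j(t) + b[i] * w_i(t + 1),
    where w_i(t + 1) ~ Bernoulli(rho[i]) is the noise variable and
    sum_j A[i][j] + b[i] = 1, so p_i is a convex combination.
    """
    n = len(x0)
    for i in range(n):
        if any(a < 0 for a in A[i]) or b[i] < 0:
            raise ValueError("weights must be nonnegative")
        if abs(sum(A[i]) + b[i] - 1.0) > 1e-9:
            raise ValueError("row %d weights must sum to 1" % i)

    rng = random.Random(seed)
    x = list(x0)
    path = [tuple(x)]
    for _ in range(steps):
        # Draw the Bernoulli noise variable for every node.
        w = [1 if rng.random() < rho[i] else 0 for i in range(n)]
        # Bernoulli parameter: convex combination of parent states and noise.
        p = [
            sum(A[i][j] * x[j] for j in range(n)) + b[i] * w[i]
            for i in range(n)
        ]
        x = [1 if rng.random() < p[i] else 0 for i in range(n)]
        path.append(tuple(x))
    return path


if __name__ == "__main__":
    # Two nodes: node 0 is influenced by node 1, node 1 by both nodes.
    A = [[0.0, 0.6], [0.3, 0.3]]
    b = [0.4, 0.4]
    rho = [0.5, 0.5]
    for state in simulate_bar(A, b, rho, x0=[0, 1], steps=5):
        print(state)
```

A nonzero entry `A[i][j]` corresponds to a directed edge from node `j` to node `i`, so estimating `A` from a sampled path is the edge weight identification problem that the abstract refers to.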
