Rémi Delogne

Random Features for Grassmannian Kernels

Apr 30, 2025

Abstract: The Grassmannian manifold G(k, n) serves as a fundamental tool in signal processing, computer vision, and machine learning, where problems often involve classifying, clustering, or comparing subspaces. In this work, we propose a sketching-based approach to approximate Grassmannian kernels using random projections. We introduce three variations of kernel approximation, including two that rely on binarised sketches, offering substantial memory gains. We establish theoretical properties of our method in the special case of G(1, n) and extend it to general G(k, n). Experimental validation demonstrates that our sketched kernels closely match the performance of standard Grassmannian kernels while avoiding the need to compute or store the full kernel matrix. Our approach enables scalable Grassmannian-based methods for large-scale applications in machine learning and pattern recognition.
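The following is a minimal sketch of random features in the G(1, n) case. It is not the paper's construction, and the binarised variants are not shown. It assumes three things: the projection kernel k(u, v) = (u^T v)^2 on unit vectors, real Gaussian projections a_i ~ N(0, I_n), and hypothetical centred features f_i(u) = ((a_i^T u)^2 - 1) / sqrt(2m). By Isserlis' theorem, E[((a^T u)^2 - 1)((a^T v)^2 - 1)] = 2 (u^T v)^2. The feature inner product is therefore an unbiased estimate of the kernel, and squaring makes f(u) = f(-u), so f depends only on the line spanned by u.

```python
import math
import random


def gaussian_matrix(m, n, seed=0):
    """Draw m random projection vectors with i.i.d. N(0, 1) entries."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(m)]


def normalise(x):
    """Scale x to unit Euclidean norm (a representative of its line)."""
    nrm = math.sqrt(sum(t * t for t in x))
    return [t / nrm for t in x]


def quadratic_features(A, u):
    """Hypothetical centred quadratic features of a unit vector u.

    f_i(u) = ((a_i . u)^2 - 1) / sqrt(2m); invariant under u -> -u.
    """
    m = len(A)
    scale = 1.0 / math.sqrt(2.0 * m)
    return [(sum(a_j * u_j for a_j, u_j in zip(a, u)) ** 2 - 1.0) * scale for a in A]


def sketched_kernel(fu, fv):
    """Inner product of two feature vectors; approximates (u . v)^2."""
    return sum(s * t for s, t in zip(fu, fv))


if __name__ == "__main__":
    A = gaussian_matrix(20000, 8, seed=1)
    u = normalise([1.0, 2.0, 0.5, -1.0, 0.0, 0.3, 1.5, -0.7])
    v = normalise([0.5, 1.0, 1.0, -0.5, 0.2, 0.0, 1.0, 0.1])
    exact = sum(s * t for s, t in zip(u, v)) ** 2
    approx = sketched_kernel(quadratic_features(A, u), quadratic_features(A, v))
    print(exact, approx)
```

The features of each subspace are computed once. Every kernel entry is then an m-dimensional inner product, so the original n-dimensional data need not be revisited.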

Signal processing after quadratic random sketching with optical units

Jul 27, 2023

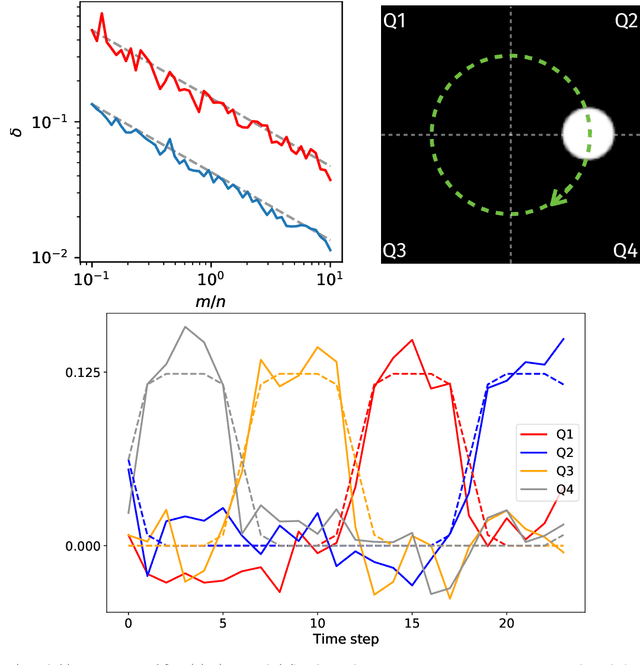

Abstract: Random data sketching (or projection) is now a classical technique enabling, for instance, approximate numerical linear algebra and machine learning algorithms with reduced computational complexity and memory. In this context, the possibility of performing data processing (such as pattern detection or classification) directly in the sketched domain without accessing the original data was previously achieved for linear random sketching methods and compressive sensing. In this work, we show how to estimate simple signal processing tasks (such as deducing local variations in an image) directly using random quadratic projections achieved by an optical processing unit. The same approach allows naive data classification methods to be operated directly in the sketched domain. We report several experiments confirming the power of our approach.
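The sketch below is one elementary illustration of why some processing can happen directly on quadratic sketches. It is not the paper's method. Two simplifying assumptions are made: the random rows are real Gaussian, whereas an optical unit measures |a_i^* x|^2 with complex-valued rows, and all function names are hypothetical. With y_i = (a_i . x)^2, the mean of the sketch estimates ||x||^2. The polarisation identity (a.(x+x'))^2 - (a.(x-x'))^2 = 4 (a.x)(a.x') lets the sketches of x + x' and x - x' estimate <x, x'>.

```python
import random


def gaussian_rows(m, n, seed=0):
    """m random projection vectors with i.i.d. N(0, 1) entries."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(m)]


def quadratic_sketch(A, x):
    """Quadratic random sketch y_i = (a_i . x)^2 of a real signal x.

    Real Gaussian rows are an assumption; optical hardware uses complex rows.
    """
    return [sum(a_j * x_j for a_j, x_j in zip(a, x)) ** 2 for a in A]


def energy_from_sketch(y):
    """Estimate ||x||^2 as the sketch mean, since E[(a . x)^2] = ||x||^2."""
    return sum(y) / len(y)


def inner_product_from_sketches(y_plus, y_minus):
    """Estimate <x, x'> from the sketches of x + x' and x - x' (polarisation)."""
    m = len(y_plus)
    return sum(p - q for p, q in zip(y_plus, y_minus)) / (4.0 * m)


if __name__ == "__main__":
    A = gaussian_rows(20000, 6, seed=2)
    x = [1.0, 0.5, -0.3, 0.2, 0.0, 0.8]
    x2 = [0.9, 0.6, -0.2, 0.1, 0.1, 0.7]
    y_plus = quadratic_sketch(A, [s + t for s, t in zip(x, x2)])
    y_minus = quadratic_sketch(A, [s - t for s, t in zip(x, x2)])
    print(energy_from_sketch(quadratic_sketch(A, x)))   # exact ||x||^2 is 2.02
    print(inner_product_from_sketches(y_plus, y_minus))  # exact <x, x2> is 1.84
    print(energy_from_sketch(y_minus))                   # exact ||x - x2||^2 is 0.06
```

The same sketch of x - x' also yields the squared variation ||x - x'||^2. This is the kind of quantity a local-variation measure between neighbouring patches relies on.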

Signal processing with optical quadratic random sketches

Dec 01, 2022

Abstract: Random data sketching (or projection) is now a classical technique enabling, for instance, approximate numerical linear algebra and machine learning algorithms with reduced computational complexity and memory. In this context, the possibility of performing data processing (such as pattern detection or classification) directly in the sketched domain without accessing the original data was previously achieved for linear random sketching methods and compressive sensing. In this work, we show how to estimate simple signal processing tasks (such as deducing local variations in an image) directly using random quadratic projections achieved by an optical processing unit. The same approach allows naive data classification methods to be operated directly in the sketched domain. We report several experiments confirming the power of our approach.

ROP inception: signal estimation with quadratic random sketching

May 17, 2022

Abstract: Rank-one projections (ROP) of matrices and quadratic random sketching of signals support several data processing and machine learning methods, as well as recent imaging applications, such as phase retrieval or optical processing units. In this paper, we demonstrate how signal estimation can be operated directly through such quadratic sketches (equivalent to the ROPs of the "lifted signal" obtained as its outer product with itself) without explicitly reconstructing that signal. Our analysis relies on showing that, up to a minor debiasing trick, the ROP measurement operator satisfies a generalised sign product embedding (SPE) property. In a nutshell, the SPE shows that the scalar product of a signal sketch with the "sign" of the sketch of a given pattern approximates the square of the projection of that signal on this pattern. This thus amounts to an insertion (an "inception") of a ROP model inside a ROP sketch. The effectiveness of our approach is evaluated in several synthetic experiments.
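The following is a minimal numerical sketch of an SPE-style estimate. It assumes a debiasing trick that may differ from the paper's: measurements are paired, z_i = a_i^T X a_i - b_i^T X b_i, with a_i and b_i i.i.d. N(0, I_n). For the lifted signal X = x x^T this reduces to z_i = (a_i . x)^2 - (b_i . x)^2, so the sketch is computed without forming X. Under this assumption, a short Gaussian computation gives E[z_i(x) sign(z_i(p))] = (4/pi) (p^T x)^2 / ||p||^2. Rescaling by pi/4 therefore estimates the squared projection of x onto the pattern p. All function names are hypothetical.

```python
import math
import random


def gaussian_rows(m, n, seed=0):
    """m random vectors with i.i.d. N(0, 1) entries."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(m)]


def dot(a, x):
    return sum(s * t for s, t in zip(a, x))


def debiased_rop_sketch(A, B, x):
    """Assumed debiased ROPs of the lifted signal x x^T, without forming it:
    z_i = (a_i . x)^2 - (b_i . x)^2, which has zero mean."""
    return [dot(a, x) ** 2 - dot(b, x) ** 2 for a, b in zip(A, B)]


def sign(t):
    """Sign of t as a float in {-1.0, 0.0, 1.0}."""
    return float((t > 0) - (t < 0))


def spe_estimate(z_signal, z_pattern):
    """(pi/4) * (1/m) <z_signal, sign(z_pattern)>; in expectation this is
    (p . x)^2 / ||p||^2 under the pairing assumption above."""
    m = len(z_signal)
    corr = sum(s * sign(q) for s, q in zip(z_signal, z_pattern)) / m
    return math.pi / 4.0 * corr


if __name__ == "__main__":
    m, n = 20000, 6
    A = gaussian_rows(m, n, seed=3)
    B = gaussian_rows(m, n, seed=4)
    x = [1.0, 0.5, -0.3, 0.2, 0.0, 0.8]
    p = [1.0, 1.0, 0.0, 0.0, 0.0, 0.0]
    z_x = debiased_rop_sketch(A, B, x)
    z_p = debiased_rop_sketch(A, B, p)
    print(spe_estimate(z_x, z_p))  # exact (p . x)^2 / ||p||^2 is 1.125
```

Only the signs of the pattern sketch are used, so the estimate does not change when p is rescaled by a positive factor.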
