Philipp Kellmeyer

Predicting Length of Stay in Neurological ICU Patients Using Classical Machine Learning and Neural Network Models: A Benchmark Study on MIMIC-IV

May 23, 2025

Abstract: The intensive care unit (ICU) is a crucial hospital department that handles life-threatening cases, and machine learning (ML) is now widely used in healthcare. In recent years, ICU management has become one of the most significant parts of hospital operations (largely, but not only, due to the worldwide COVID-19 pandemic). This study explores multiple ML approaches for predicting length of stay (LOS) in the ICU for patients with neurological diseases, based on the MIMIC-IV dataset. The evaluated models include classical ML algorithms (K-Nearest Neighbors, Random Forest, XGBoost, and CatBoost) and neural networks (LSTM, BERT, and Temporal Fusion Transformer). Given that LOS prediction is often framed as a classification task, this study categorizes LOS into three groups: less than two days, less than a week, and a week or more. As the first ML-based approach targeting LOS prediction for patients with neurological disorders, this study does not aim to outperform existing methods but rather to assess their effectiveness in this specific context. The findings provide insights into the applicability of ML techniques for improving ICU resource management and patient care. According to the results, the Random Forest model outperformed the others on static data, achieving an accuracy of 0.68, a precision of 0.68, a recall of 0.68, and an F1-score of 0.67, while the BERT model outperformed the LSTM model on time-series data, with an accuracy of 0.80, a precision of 0.80, a recall of 0.80, and an F1-score of 0.80.
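The three-way framing of LOS described in the abstract can be illustrated with a minimal sketch. It assumes LOS is measured in days and that the middle group covers stays of at least two days but less than seven; the abstract does not spell out the exact boundaries, and the names `LOS_BIN_EDGES`, `LOS_LABELS`, and `los_class` are hypothetical, not taken from the paper.

```python
from bisect import bisect_right

# Assumed class boundaries in days (not confirmed by the abstract):
# class 0: LOS < 2, class 1: 2 <= LOS < 7, class 2: LOS >= 7.
LOS_BIN_EDGES = [2.0, 7.0]
LOS_LABELS = ["less than two days", "two days to less than a week", "a week or more"]


def los_class(los_days: float) -> int:
    """Map a length of stay in days to one of three class indices."""
    if los_days < 0:
        raise ValueError("length of stay cannot be negative")
    # bisect_right counts how many edges are <= los_days, which is
    # exactly the index of the half-open bin the value falls into.
    return bisect_right(LOS_BIN_EDGES, los_days)


if __name__ == "__main__":
    for days in (0.5, 2.0, 6.9, 7.0, 21.0):
        print(days, "->", LOS_LABELS[los_class(days)])
```

Half-open bins keep the classes mutually exclusive, so a stay of exactly two days or exactly seven days always lands in the higher group under these assumptions.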

Fairness and Bias in Robot Learning

Jul 07, 2022

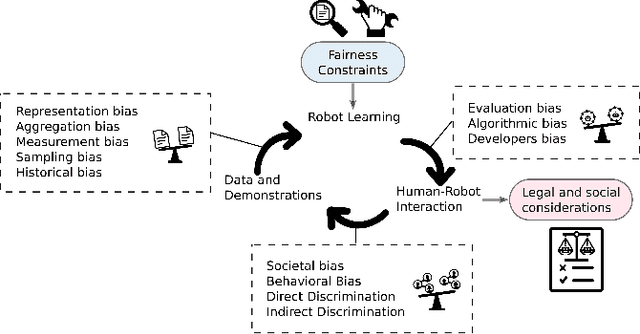

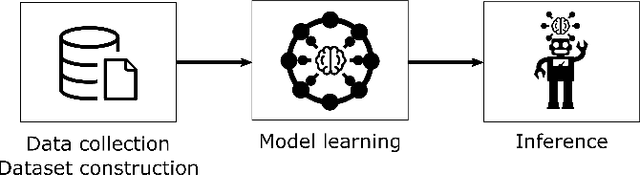

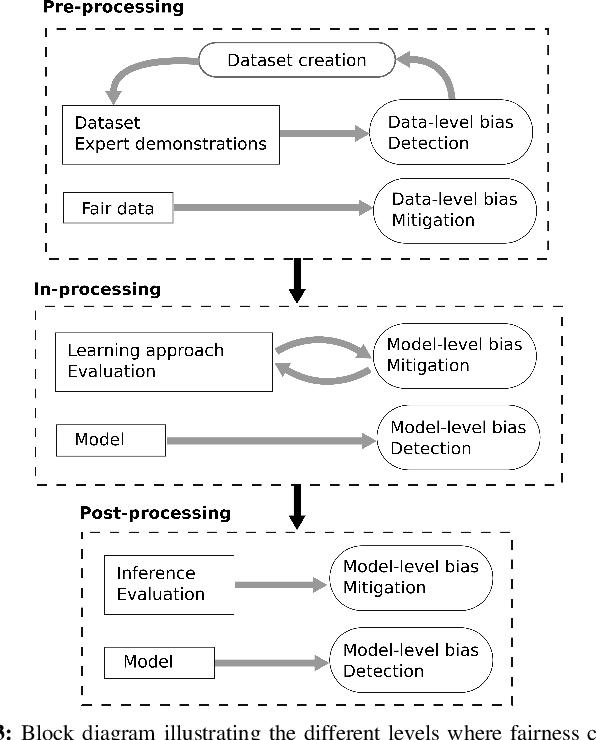

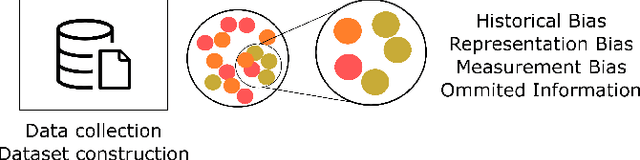

Abstract: Machine learning has significantly enhanced the abilities of robots, enabling them to perform a wide range of tasks in human environments and adapt to our uncertain real world. Recent works in various domains of machine learning have highlighted the importance of accounting for fairness to ensure that these algorithms do not reproduce human biases and consequently lead to discriminatory outcomes. With robot learning systems performing more and more tasks in our everyday lives, it is crucial to understand the influence of such biases to prevent unintended behavior toward certain groups of people. In this work, we present the first survey on fairness in robot learning from an interdisciplinary perspective spanning technical, ethical, and legal challenges. We propose a taxonomy for sources of bias and the types of discrimination that result from them. Using examples from different robot learning domains, we examine scenarios of unfair outcomes and strategies to mitigate them. We present early advances in the field by covering different fairness definitions, ethical and legal considerations, and methods for fair robot learning. With this work, we aim to pave the way for groundbreaking developments in fair robot learning.
