Philip Koopman

Lessons from the Cruise Robotaxi Pedestrian Dragging Mishap

Jun 07, 2024

Abstract: A robotaxi dragged a pedestrian 20 feet down a San Francisco street on the evening of October 2, 2023, coming to rest with its rear wheel on the woman's legs. The mishap was complex, involving a first impact by a different, human-driven vehicle. In the following weeks, Cruise stood down its road operations amid allegations that it had withheld crucial mishap information from regulators. The pedestrian survived her severe injuries, but the robotaxi industry is still wrestling with the aftermath. Key observations include that the robotaxi had multiple possible ways to avoid the initial impact with the pedestrian. Limitations in the computer driver's programming prevented it from recognizing that a pedestrian was about to be hit in an adjacent lane, caused the robotaxi to lose track of and then, in essence, forget a pedestrian who had been hit by an adjacent vehicle, and caused it to forget that it had just run over a presumed pedestrian when beginning a subsequent repositioning maneuver. The computer driver was unable to detect the pedestrian being dragged even though her legs were partially in view of a robotaxi camera. Moreover, more conservative operational approaches could have avoided the dragging portion of the mishap entirely, such as waiting for remote confirmation before moving after a crash with a pedestrian, or operating the still-developing robotaxi technology with an in-vehicle safety driver rather than prioritizing driver-out deployment.

Redefining Safety for Autonomous Vehicles

Apr 26, 2024

Abstract: Existing definitions and associated conceptual frameworks for computer-based system safety should be revisited in light of real-world experience from deploying autonomous vehicles. Current terminology used by industry safety standards emphasizes mitigation of risk from specifically identified hazards, and carries assumptions based on human-supervised vehicle operation. Operation without a human driver dramatically increases the scope of safety concerns, especially due to operation in an open-world environment, a requirement to self-enforce operational limits, participation in an ad hoc sociotechnical system of systems, and a requirement to conform to both legal and ethical constraints. Existing standards and terminology only partially address these new challenges. We propose updated definitions for core system safety concepts that encompass these additional considerations, as a starting point for evolving safety approaches to address these challenges. These results might also inform the framing of safety terminology for other autonomous system applications.

Anatomy of a Robotaxi Crash: Lessons from the Cruise Pedestrian Dragging Mishap

Feb 08, 2024

Abstract: An October 2023 crash between a GM Cruise robotaxi and a pedestrian in San Francisco resulted not only in a severe injury, but also in dramatic upheaval at that company that will likely have lasting effects throughout the industry. The issues stem not just from the crash facts themselves, but also from how Cruise mishandled its response to its robotaxi dragging a pedestrian under the vehicle after the initial post-crash stop. A pair of external investigation reports provides raw material describing the incident and critiques the company response from a regulatory interaction point of view, but potential safety recommendations were outside their scope. We tie together different pieces of that report material to highlight specific facts and the relationships between events. We then explore safety lessons that might be learned with regard to technology, operational safety practices, and organizational reaction to incidents.

Autonomous Vehicles Meet the Physical World: RSS, Variability, Uncertainty, and Proving Safety (Expanded Version)

Oct 31, 2019

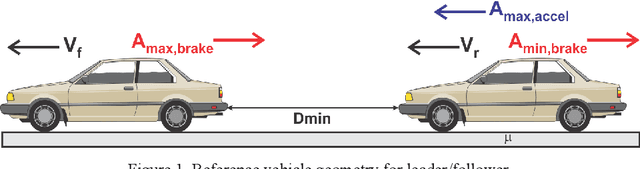

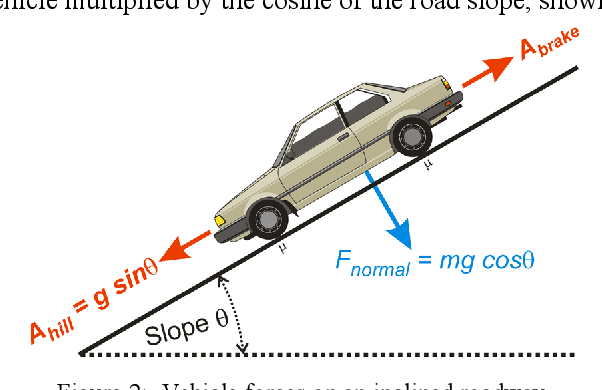

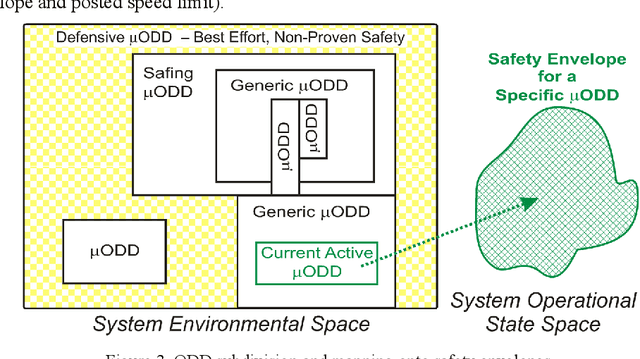

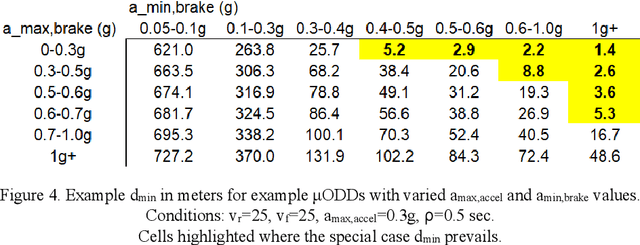

Abstract: The Responsibility-Sensitive Safety (RSS) model offers provable safety for vehicle behaviors such as minimum safe following distance. However, handling worst-case variability and uncertainty may significantly lower vehicle permissiveness, and in some situations safety cannot be guaranteed. Digging deeper into Newtonian mechanics, we identify complications that result from considering vehicle status, road geometry, and environmental parameters. An especially challenging situation occurs if these parameters change during the course of a collision avoidance maneuver such as hard braking. As part of our analysis, we expand the original RSS following distance equation to account for edge cases involving potential collisions mid-way through a braking process. We additionally propose a Micro-Operational Design Domain (μODD) approach to subdividing the operational space as a way of improving permissiveness. Confining probabilistic aspects of safety to μODD transitions permits proving safety (when possible) under the assumption that the system has transitioned to the correct μODD for the situation. Each μODD can additionally be used to encode system fault responses, take credit for advisory information (e.g., from vehicle-to-vehicle communication), and anticipate likely emergent situations.
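For reference, the original RSS minimum safe following distance (from Shalev-Shwartz et al., not the expanded equation derived in this paper) can be sketched as below. The rear vehicle may accelerate at up to a_max_accel during its response time rho and then brakes at no less than a_min_brake, while the front vehicle may brake as hard as a_max_brake; the safe gap is the rear vehicle's total travel minus the front vehicle's stopping distance, clamped at zero. This sketch compares only total stopping distances and does not model the mid-braking collision edge cases the paper addresses. The "dry" and "wet" μODD parameter sets and the names RssParams, DRY, WET, and WHOLE_ODD are hypothetical, chosen only to illustrate why subdividing the operational space can improve permissiveness.

```python
"""Sketch of the original RSS minimum safe following distance (Shalev-Shwartz et al.).

Not the expanded equation from this paper: it compares only total stopping
distances and does not check for collisions mid-way through braking.
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class RssParams:
    rho: float          # response time of the rear vehicle [s]
    a_max_accel: float  # worst-case acceleration of the rear vehicle during rho [m/s^2]
    a_min_brake: float  # braking the rear vehicle is guaranteed to achieve [m/s^2]
    a_max_brake: float  # hardest braking the front vehicle might apply [m/s^2]


def rss_min_following_distance(v_rear: float, v_front: float, p: RssParams) -> float:
    """Return the minimum safe gap [m] between a rear and a front vehicle."""
    v_after_response = v_rear + p.rho * p.a_max_accel
    rear_travel = (
        v_rear * p.rho                                  # distance covered during the response time
        + 0.5 * p.a_max_accel * p.rho ** 2              # extra distance from accelerating during it
        + v_after_response ** 2 / (2 * p.a_min_brake)   # then braking gently to a stop
    )
    front_travel = v_front ** 2 / (2 * p.a_max_brake)  # front vehicle stops as hard as possible
    return max(0.0, rear_travel - front_travel)


# Hypothetical parameter sets, for illustration only.
DRY = RssParams(rho=0.5, a_max_accel=2.0, a_min_brake=6.0, a_max_brake=9.0)
WET = RssParams(rho=0.5, a_max_accel=2.0, a_min_brake=3.0, a_max_brake=5.0)
# A single ODD covering both conditions must assume the weakest rear braking (wet)
# together with the strongest front braking (dry).
WHOLE_ODD = RssParams(rho=0.5, a_max_accel=2.0, a_min_brake=3.0, a_max_brake=9.0)

if __name__ == "__main__":
    for name, params in [("dry μODD", DRY), ("wet μODD", WET), ("whole ODD", WHOLE_ODD)]:
        gap = rss_min_following_distance(20.0, 20.0, params)
        print(f"{name:>9}: {gap:.1f} m at 20 m/s")
```

With these made-up numbers, the whole-ODD worst case demands a larger gap than either μODD alone, because it pairs worst-case values that never occur together. That is the permissiveness loss the abstract describes, and confining the vehicle to one μODD at a time avoids it (assuming the system has correctly identified which μODD it is in).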
