Philip Feldman

You've Changed: Detecting Modification of Black-Box Large Language Models

Apr 14, 2025

Abstract:Large Language Models (LLMs) are often provided as a service via an API, making it challenging for developers to detect changes in their behavior. We present an approach to monitor LLMs for changes by comparing the distributions of linguistic and psycholinguistic features of generated text. Our method uses a statistical test to determine whether the distributions of features from two samples of text are equivalent, allowing developers to identify when an LLM has changed. We demonstrate the effectiveness of our approach using five OpenAI completion models and Meta's Llama 3 70B chat model. Our results show that simple text features coupled with a statistical test can distinguish between language models. We also explore the use of our approach to detect prompt injection attacks. Our work enables frequent LLM change monitoring and avoids computationally expensive benchmark evaluations.

War Elephants: Rethinking Combat AI and Human Oversight

Apr 30, 2024

Abstract:This paper explores the changes that pervasive AI is bringing to the nature of combat. We look beyond the substitution of AI for experts to an approach where complementary human and machine abilities are blended. Using historical and modern examples, we show how autonomous weapons systems can be effectively managed by teams of human "AI Operators" combined with AI/ML "Proxy Operators." By basing our approach on the principles of complementation, we provide for a flexible and dynamic approach to managing lethal autonomous systems. We conclude by presenting a path to achieving an integrated vision of machine-speed combat where the battlefield AI is operated by AI Operators that watch for patterns of behavior within the battlefield to assess the performance of lethal autonomous systems. This approach enables the development of combat systems that are likely to be more ethical, operate at machine speed, and are capable of responding to a broader range of dynamic battlefield conditions than any purely autonomous AI system could support.

Trapping LLM Hallucinations Using Tagged Context Prompts

Jun 09, 2023

Abstract:Recent advances in large language models (LLMs), such as ChatGPT, have led to highly sophisticated conversation agents. However, these models suffer from "hallucinations," where the model generates false or fabricated information. Addressing this challenge is crucial, particularly with AI-driven platforms being adopted across various sectors. In this paper, we propose a novel method to recognize and flag instances when LLMs perform outside their domain knowledge, ensuring that users receive accurate information. We find that the use of context combined with embedded tags can successfully combat hallucinations within generative language models. To do this, we baseline hallucination frequency in no-context prompt-response pairs using generated URLs as easily-tested indicators of fabricated data. We observed a significant reduction in overall hallucination when context was supplied along with question prompts for tested generative engines. Lastly, we evaluated how placing tags within contexts impacted model responses and were able to eliminate hallucinations in responses with 98.88% effectiveness.

Down the Rabbit Hole: Detecting Online Extremism, Radicalisation, and Politicised Hate Speech

Jan 27, 2023

Abstract:Social media is a modern person's digital voice to project and engage with new ideas and mobilise communities – a power shared with extremists. Given the societal risks of unvetted content-moderating algorithms for Extremism, Radicalisation, and Hate speech (ERH) detection, responsible software engineering must understand the who, what, when, where, and why such models are necessary to protect user safety and free expression. Hence, we propose and examine the unique research field of ERH context mining to unify disjoint studies. Specifically, we evaluate the start-to-finish design process from socio-technical definition-building and dataset collection strategies to technical algorithm design and performance. Our 2015-2021 51-study Systematic Literature Review (SLR) provides the first cross-examination of textual, network, and visual approaches to detecting extremist affiliation, hateful content, and radicalisation towards groups and movements. We identify consensus-driven ERH definitions and propose solutions to existing ideological and geographic biases, particularly due to the lack of research in Oceania/Australasia. Our hybridised investigation on Natural Language Processing, Community Detection, and visual-text models demonstrates the dominating performance of textual transformer-based algorithms. We conclude with vital recommendations for ERH context mining researchers and propose an uptake roadmap with guidelines for researchers, industries, and governments to enable a safer cyberspace.

Polling Latent Opinions: A Method for Computational Sociolinguistics Using Transformer Language Models

Apr 19, 2022

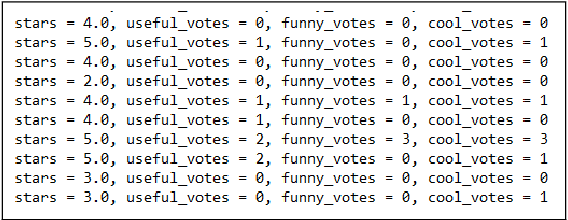

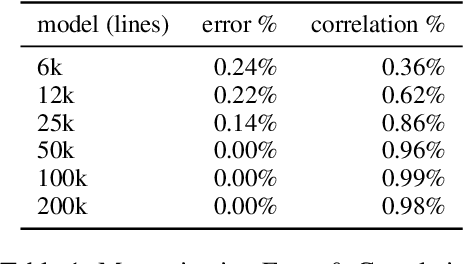

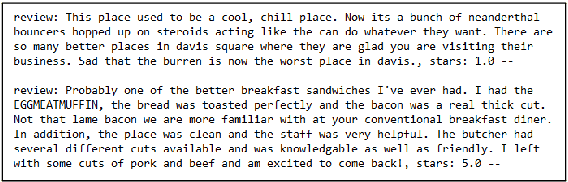

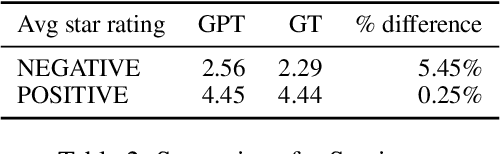

Abstract:Text analysis of social media for sentiment, topic analysis, and other analysis depends initially on the selection of keywords and phrases that will be used to create the research corpora. However, keywords that researchers choose may occur infrequently, leading to errors that arise from using small samples. In this paper, we use the capacity for memorization, interpolation, and extrapolation of Transformer Language Models such as the GPT series to learn the linguistic behaviors of a subgroup within larger corpora of Yelp reviews. We then use prompt-based queries to generate synthetic text that can be analyzed to produce insights into specific opinions held by the populations that the models were trained on. Once learned, more specific sentiment queries can be made of the model with high levels of accuracy when compared to traditional keyword searches. We show that even in cases where a specific keyphrase is limited or not present at all in the training corpora, the GPT is able to accurately generate large volumes of text that have the correct sentiment.

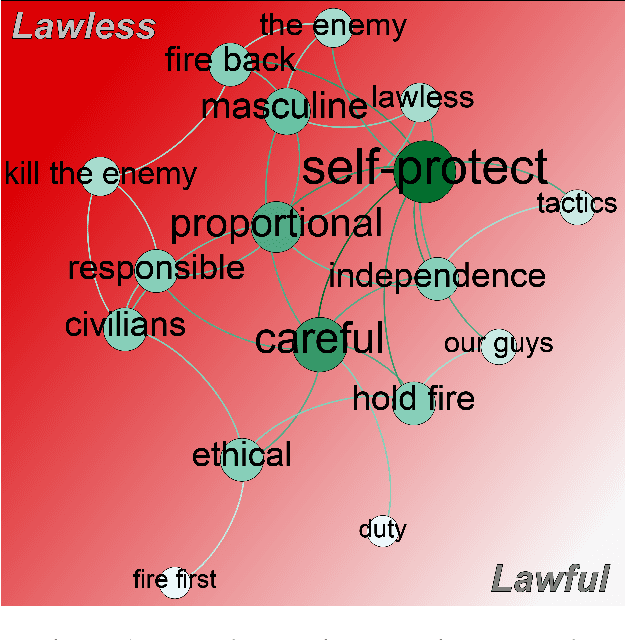

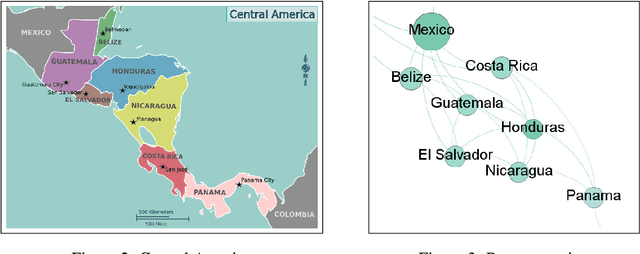

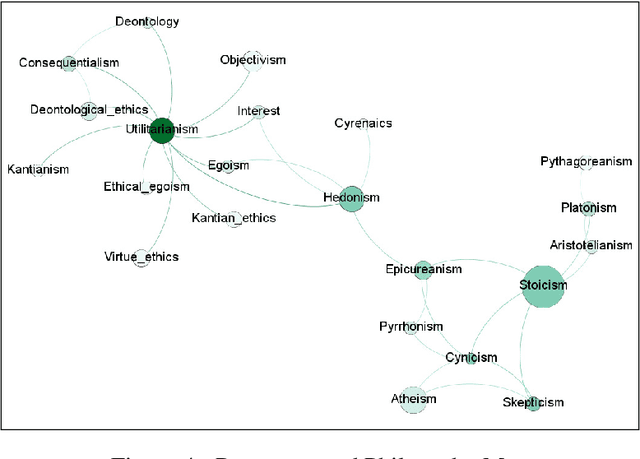

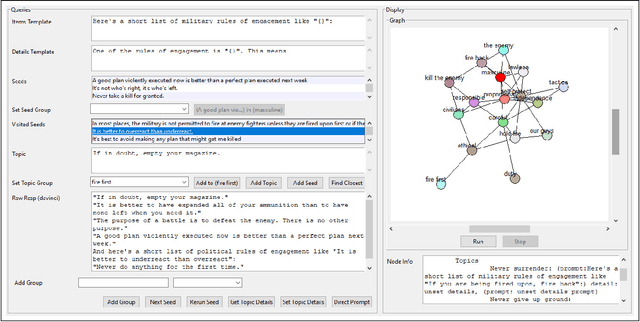

Ethics, Rules of Engagement, and AI: Neural Narrative Mapping Using Large Transformer Language Models

Feb 05, 2022

Abstract:The problem of determining if a military unit has correctly understood an order and is properly executing on it is one that has bedeviled military planners throughout history. The advent of advanced language models such as OpenAI's GPT-series offers new possibilities for addressing this problem. This paper presents a mechanism to harness the narrative output of large language models and produce diagrams or "maps" of the relationships that are latent in the weights of such models as the GPT-3. The resulting "Neural Narrative Maps" (NNMs) are intended to provide insight into the organization of information, opinion, and belief in the model, which in turn provide means to understand intent and response in the context of physical distance. This paper discusses the problem of mapping information spaces in general, and then presents a concrete implementation of this concept in the context of OpenAI's GPT-3 language model for determining if a subordinate is following a commander's intent in a high-risk situation. The subordinate's locations within the NNM allow a novel capability to evaluate the intent of the subordinate with respect to the commander. We show that it is possible not only to determine if they are nearby in narrative space, but also how they are oriented, and what "trajectory" they are on. Our results show that our method is able to produce high-quality maps, and demonstrate new ways of evaluating intent more generally.

* 18 Pages, 13 figures

Analyzing COVID-19 Tweets with Transformer-based Language Models

May 06, 2021

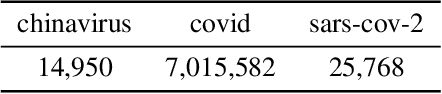

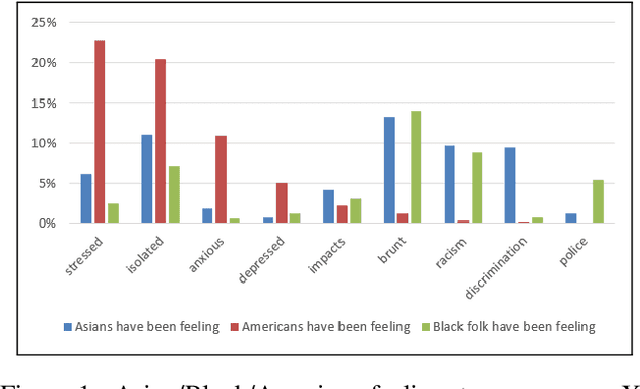

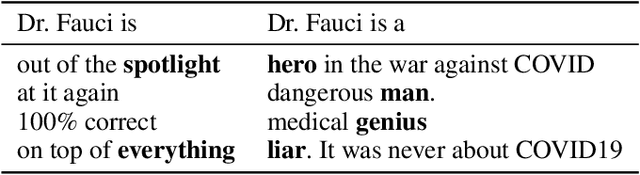

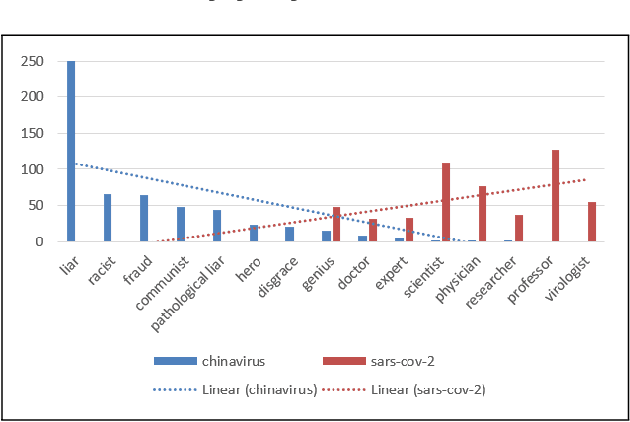

Abstract:This paper describes a method for using Transformer-based Language Models (TLMs) to understand public opinion from social media posts. In this approach, we train a set of GPT models on several COVID-19 tweet corpora that reflect populations of users with distinctive views. We then use prompt-based queries to probe these models to reveal insights into the biases and opinions of the users. We demonstrate how this approach can be used to produce results which resemble polling the public on diverse social, political and public health issues. The results on the COVID-19 tweet data show that transformer language models are promising tools that can help us understand public opinions on social media at scale.

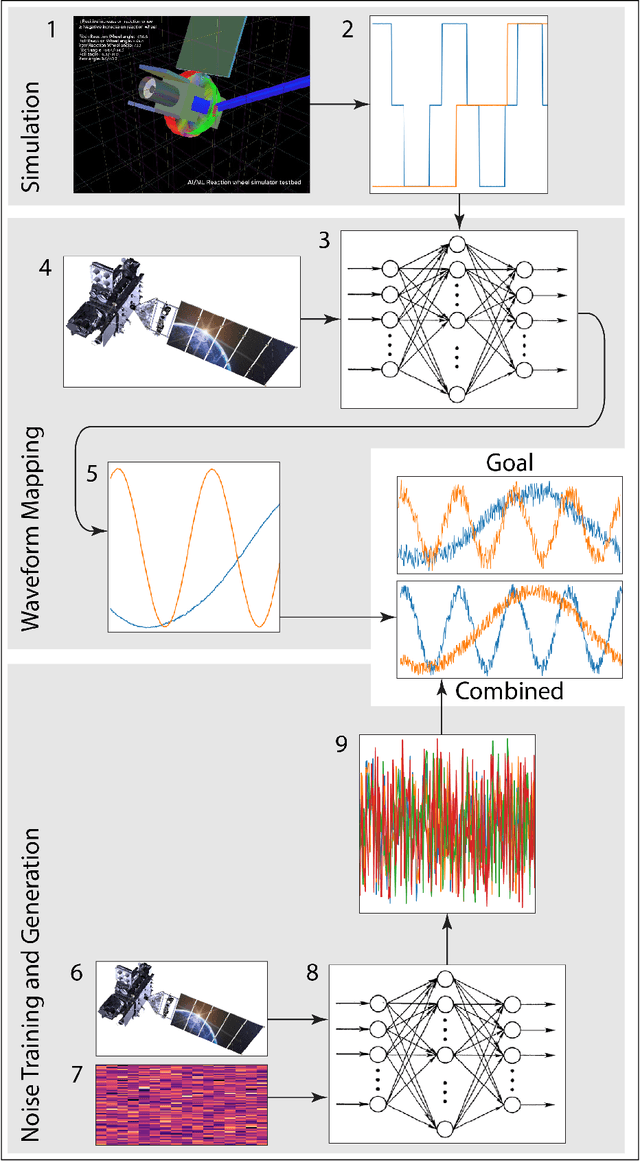

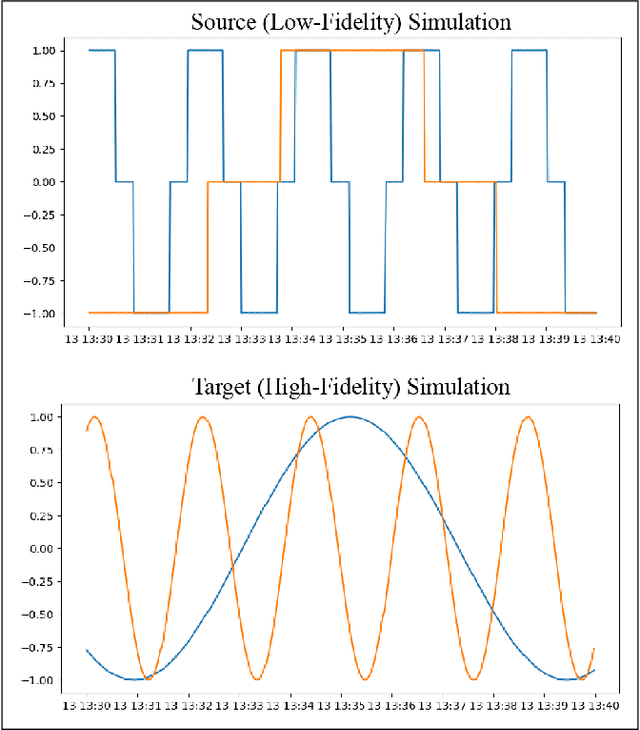

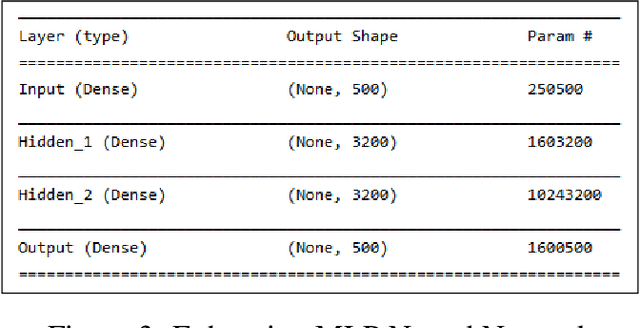

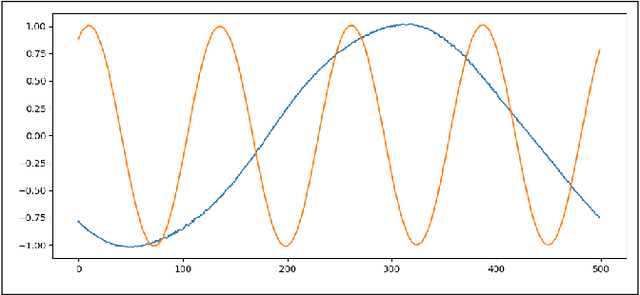

Training robust anomaly detection using ML-Enhanced simulations

Aug 27, 2020

Abstract:This paper describes the use of neural networks to enhance simulations for subsequent training of anomaly-detection systems. Simulations can provide edge conditions for anomaly detection which may be sparse or non-existent in real-world data. Simulations suffer, however, by producing data that is "too clean," resulting in anomaly detection systems that cannot transition from simulated data to actual conditions. Our approach enhances simulations using neural networks trained on real-world data to create outputs that are more realistic and variable than traditional simulations.

Navigating Language Models with Synthetic Agents

Aug 24, 2020

Abstract:Modern natural language models such as the GPT-2/GPT-3 contain tremendous amounts of information about human belief in a consistently interrogatable form. If these models could be shown to accurately reflect the underlying beliefs of the human beings that produced the data used to train these models, then such models become a powerful sociological tool in ways that are distinct from traditional methods, such as interviews and surveys. In this study, we train a version of the GPT-2 on a corpus of historical chess games, and then compare the learned relationships of words in the model to the known ground truth of the chess board, move legality, and historical patterns of play. We find that the percentages of moves by piece using the model are substantially similar to human patterns. We further find that the model creates an accurate latent representation of the chessboard, and that it is possible to plot trajectories of legal moves across the board using this knowledge.

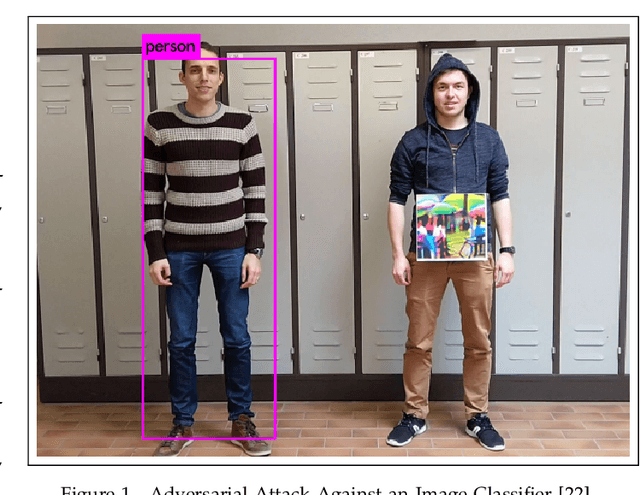

Integrating Artificial Intelligence into Weapon Systems

May 10, 2019

Abstract:The integration of Artificial Intelligence (AI) into weapon systems is one of the most consequential tactical and strategic decisions in the history of warfare. Current AI development is a remarkable combination of accelerating capability, hidden decision mechanisms, and decreasing costs. Implementation of these systems is in its infancy and exists on a spectrum from resilient and flexible to simplistic and brittle. Resilient systems should be able to effectively handle the complexities of a high-dimensional battlespace. Simplistic AI implementations could be manipulated by an adversarial AI that identifies and exploits their weaknesses. In this paper, we present a framework for understanding the development of dynamic AI/ML systems that interactively and continuously adapt to their user's needs. We explore the implications of increasingly capable AI in the kill chain and how this will lead inevitably to a fully automated, always-on system, barring regulation by treaty. We examine the potential of total integration of cyber and physical security and how this likelihood must inform the development of AI-enabled systems with respect to the "fog of war", human morals, and ethics.
