Peter M. VanNostrand

KRONE: Hierarchical and Modular Log Anomaly Detection

Feb 07, 2026

Abstract: Log anomaly detection is crucial for uncovering system failures and security risks. Although logs originate from nested component executions with clear boundaries, this structure is lost when they are stored as flat sequences. As a result, state-of-the-art methods risk missing true dependencies within executions while learning spurious ones across unrelated events. We propose KRONE, the first hierarchical anomaly detection framework that automatically derives execution hierarchies from flat logs for modular multi-level anomaly detection. At its core, the KRONE Log Abstraction Model captures application-specific semantic hierarchies from log data. This hierarchy is then leveraged to recursively decompose log sequences into multiple levels of coherent execution chunks, referred to as KRONE Seqs, transforming sequence-level anomaly detection into a set of modular KRONE Seq-level detection tasks. For each test KRONE Seq, KRONE employs a hybrid modular detection mechanism that dynamically routes between an efficient level-independent Local-Context detector, which rapidly filters normal sequences, and a Nested-Aware detector that incorporates cross-level semantic dependencies and supports LLM-based anomaly detection and explanation. KRONE further optimizes hierarchical detection through cached result reuse and early-exit strategies. Experiments on three public benchmarks and one industrial dataset from ByteDance Cloud demonstrate that KRONE achieves consistent improvements in detection accuracy, F1-score, data efficiency, resource efficiency, and interpretability. KRONE improves the F1-score by more than 10 percentage points over prior methods while reducing LLM usage to only a small fraction of the test data.
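
The routing idea in the abstract (a cheap filter first, an expensive detector only for what remains, with cached results and early exit) can be illustrated with a minimal single-level sketch. This is not KRONE's implementation: the names `split_into_chunks`, `HybridDetector`, `component_of`, `known_normal`, and `expensive_check` are hypothetical, the hierarchy is flattened to one level, and the expensive detector is a stand-in callable rather than an LLM.

```python
from itertools import groupby
from typing import Callable, Dict, Iterable, List, Set, Tuple

Chunk = Tuple[str, ...]


def split_into_chunks(events: List[str], component_of: Dict[str, str]) -> List[Chunk]:
    """Group consecutive events emitted by the same (assumed) component."""
    return [tuple(group) for _, group in groupby(events, key=lambda e: component_of[e])]


class HybridDetector:
    """Cheap lookup first; fall back to an expensive check, caching its results."""

    def __init__(self, known_normal: Set[Chunk], expensive_check: Callable[[Chunk], bool]):
        self.known_normal = known_normal        # chunks observed in normal training data
        self.expensive_check = expensive_check  # stand-in for a costly detector
        self.cache: Dict[Chunk, bool] = {}      # reused results of the expensive check
        self.expensive_calls = 0

    def is_anomalous(self, chunk: Chunk) -> bool:
        if chunk in self.known_normal:          # fast path: filter known-normal chunks
            return False
        if chunk not in self.cache:             # slow path, run at most once per chunk
            self.expensive_calls += 1
            self.cache[chunk] = self.expensive_check(chunk)
        return self.cache[chunk]

    def sequence_is_anomalous(self, chunks: Iterable[Chunk]) -> bool:
        # any() stops at the first anomalous chunk (early exit).
        return any(self.is_anomalous(c) for c in chunks)


# Toy data: the event names and component mapping are invented for illustration.
component_of = {"open": "io", "read": "io", "close": "io", "auth": "sec", "deny": "sec"}
events = ["open", "read", "close", "auth", "deny"]
chunks = split_into_chunks(events, component_of)

detector = HybridDetector(
    known_normal={("open", "read", "close")},
    expensive_check=lambda chunk: "deny" in chunk,
)
first_result = detector.sequence_is_anomalous(chunks)
second_result = detector.sequence_is_anomalous(chunks)  # served from the cache
```

In this toy run the first chunk is filtered by the fast path, and the second chunk reaches the expensive check only once across both calls.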

Confidential Deep Learning: Executing Proprietary Models on Untrusted Devices

Aug 28, 2019

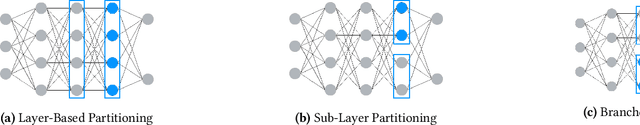

Abstract: Performing deep learning on end-user devices provides fast offline inference results and can help protect the user's privacy. However, running models on untrusted client devices reveals model information which may be proprietary, i.e., the operating system or other applications on end-user devices may be manipulated to copy and redistribute this information, infringing on the model provider's intellectual property. We propose the use of ARM TrustZone, a hardware-based security feature present in most phones, to confidentially run a proprietary model on an untrusted end-user device. We explore the limitations and design challenges of using TrustZone and examine potential approaches for confidential deep learning within this environment. Of particular interest is providing robust protection of proprietary model information while minimizing total performance overhead.
