Pegah Rokhforoz

Multi-Agent Reinforcement Learning with Graph Convolutional Neural Networks for optimal Bidding Strategies of Generation Units in Electricity Markets

Aug 11, 2022

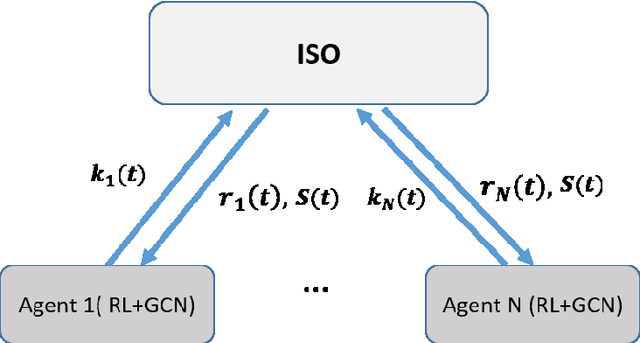

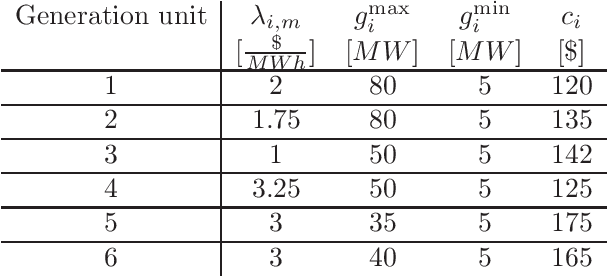

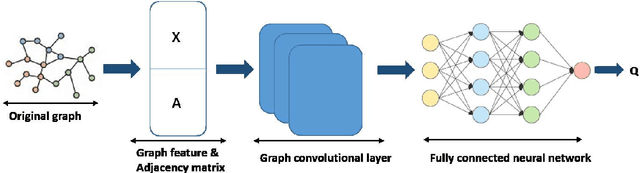

Abstract: Finding optimal bidding strategies for generation units in electricity markets would result in higher profit. However, this is a challenging problem because of system uncertainty, which arises from the unknown strategies of the other generation units. Distributed optimization, where each entity or agent decides on its bid individually, has become state of the art. However, it cannot overcome the challenges of system uncertainties. Deep reinforcement learning (DRL) is a promising approach to learn the optimal strategy in uncertain environments. Nevertheless, it is not able to integrate information on the spatial system topology into the learning process. This paper proposes a distributed learning algorithm based on DRL combined with a graph convolutional neural network (GCN). The proposed framework helps the agents update their decisions using feedback from the environment, so that they can overcome the challenges of the uncertainties. In the proposed algorithm, the node states and the connections between nodes are the inputs of the GCN, which makes the agents aware of the structure of the system. This information on the system topology helps the agents improve their bidding strategies and increase their profit. We evaluate the proposed algorithm on the IEEE 30-bus system under different scenarios. To investigate the generalization ability of the proposed approach, we also test the trained model on the IEEE 39-bus system. The results show that the proposed algorithm generalizes better than DRL and can result in higher profit when the topology of the system changes.
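
To illustrate how node states and connections can jointly feed a GCN, the following is a minimal sketch of a single graph-convolution layer using the widely used symmetric normalization, ReLU(D^-1/2 (A + I) D^-1/2 H W). It is not taken from the paper: the three-bus network, the feature values, the weight matrix, and the function names `normalized_adjacency` and `gcn_layer` are all assumptions chosen for illustration.

```python
import numpy as np


def normalized_adjacency(adj):
    """Return D^-1/2 (A + I) D^-1/2 for an undirected adjacency matrix A."""
    a_hat = adj + np.eye(adj.shape[0])      # add self-loops
    deg = a_hat.sum(axis=1)                 # node degrees, self-loop included
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    # Scale row i by d_i^-1/2 and column j by d_j^-1/2.
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def gcn_layer(adj, features, weights):
    """One graph-convolution layer with a ReLU activation."""
    return np.maximum(normalized_adjacency(adj) @ features @ weights, 0.0)


# Hypothetical 3-bus line network: bus 0 - bus 1 - bus 2.
adj = np.array([[0.0, 1.0, 0.0],
                [1.0, 0.0, 1.0],
                [0.0, 1.0, 0.0]])
# Hypothetical per-bus state, one feature per node (e.g. local demand).
features = np.array([[1.0], [2.0], [3.0]])
# Hypothetical weight matrix; in practice it would be learned.
weights = np.array([[1.0]])

embeddings = gcn_layer(adj, features, weights)
print(embeddings)
```

Each row of `embeddings` mixes a bus's own state with the states of its neighbors, which is the mechanism by which topology information could reach an agent's policy network in a setup like the one the abstract describes.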

Safe multi-agent deep reinforcement learning for joint bidding and maintenance scheduling of generation units

Dec 20, 2021

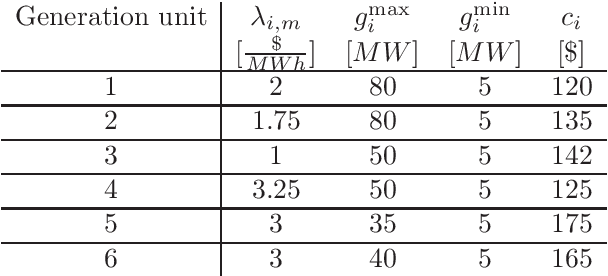

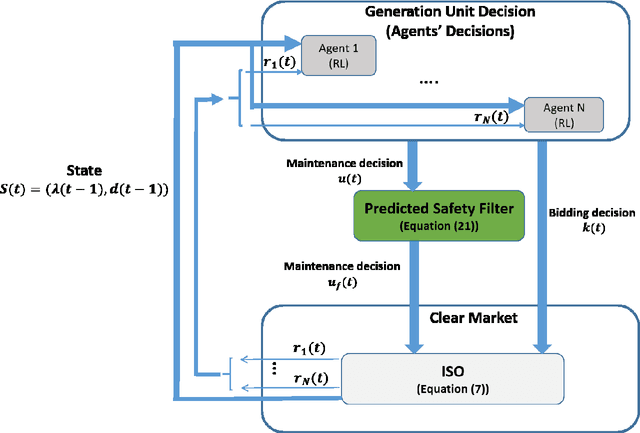

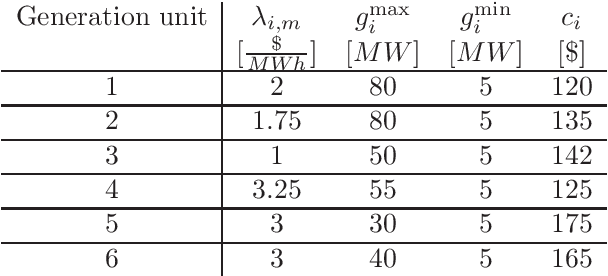

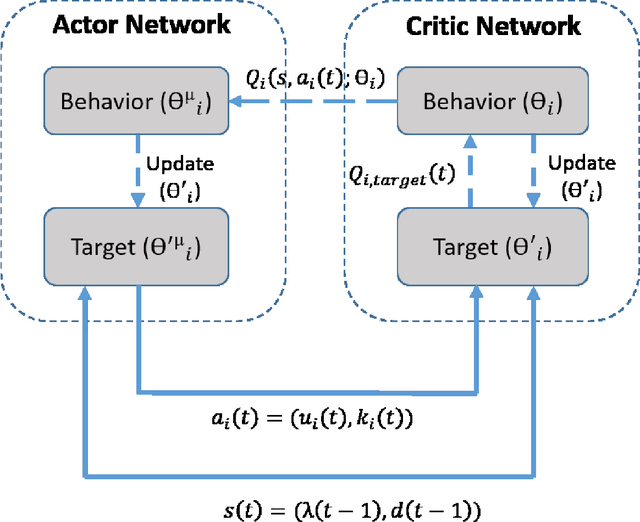

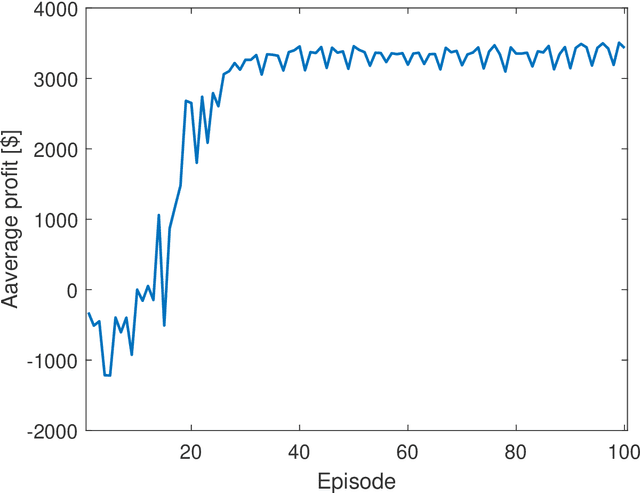

Abstract: This paper proposes a safe reinforcement learning algorithm for generation bidding decisions and unit maintenance scheduling in a competitive electricity market environment. In this problem, each unit aims to find a bidding strategy that maximizes its revenue while concurrently retaining its reliability by scheduling preventive maintenance. The maintenance scheduling introduces safety constraints that must be satisfied at all times. Satisfying the critical safety and reliability constraints while the generation units have incomplete information about each other's bidding strategies is a challenging problem. Bi-level optimization and reinforcement learning are state-of-the-art approaches for solving this type of problem. However, neither bi-level optimization nor reinforcement learning can handle the challenges of incomplete information and critical safety constraints. To tackle these challenges, we propose the safe deep deterministic policy gradient reinforcement learning algorithm, which is based on a combination of reinforcement learning and a predicted safety filter. The case study demonstrates that the proposed approach can achieve a higher profit than other state-of-the-art methods while concurrently satisfying the system safety constraints.
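
The general idea of placing a safety filter after a learned policy can be sketched as follows. This is a generic illustration, not the paper's predicted safety filter: it assumes a single linear safety constraint g · a <= h on the action and corrects an unsafe action by Euclidean projection onto that half-space. The function name `project_to_halfspace` and all numeric values are hypothetical.

```python
import numpy as np


def project_to_halfspace(action, g, h):
    """Return the point closest to `action` that satisfies g . a <= h."""
    violation = float(g @ action) - h
    if violation <= 0.0:
        return action.copy()                # already safe, leave unchanged
    # Move along -g just far enough to land on the constraint boundary.
    return action - (violation / float(g @ g)) * g


# Hypothetical constraint: the two action components may sum to at most 2.
g = np.array([1.0, 1.0])
h = 2.0

proposed = np.array([2.0, 2.0])             # action suggested by the policy
safe = project_to_halfspace(proposed, g, h)
print(safe)                                 # [1. 1.]
```

The filter leaves actions that already satisfy the constraint untouched, so the learned policy is only overridden when it would otherwise violate safety.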
