Patrik Vuilleumier

Deep Neural Encoder-Decoder Model to Relate fMRI Brain Activity with Naturalistic Stimuli

Jul 16, 2025

Abstract: We propose an end-to-end deep neural encoder-decoder model to encode and decode brain activity in response to naturalistic stimuli using functional magnetic resonance imaging (fMRI) data. Leveraging temporally correlated input from consecutive film frames, we employ temporal convolutional layers in our architecture, which effectively allows us to bridge the temporal resolution gap between natural movie stimuli and fMRI acquisitions. Our model predicts the activity of voxels in and around the visual cortex and reconstructs the corresponding visual inputs from neural activity. Finally, we investigate the brain regions contributing to visual decoding through saliency maps. We find that the most contributing regions are the middle occipital area, the fusiform area, and the calcarine, respectively involved in shape perception, complex recognition (in particular face perception), and basic visual features such as edges and contrasts. That these functions are strongly solicited is in line with the decoder's capability to reconstruct edges, faces, and contrasts. All in all, this suggests the possibility of probing our understanding of visual processing in films using the behaviour of deep learning models, such as the one proposed in this paper, as a proxy.

A Multi-Componential Approach to Emotion Recognition and the Effect of Personality

Oct 22, 2020

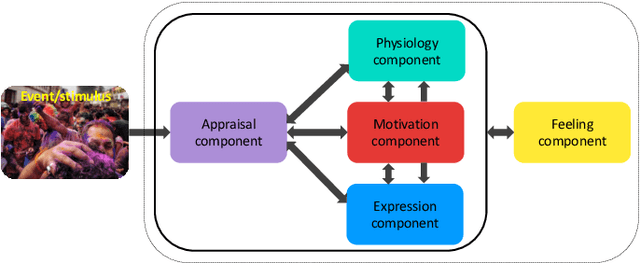

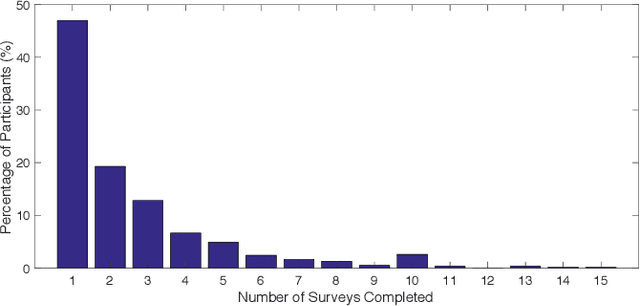

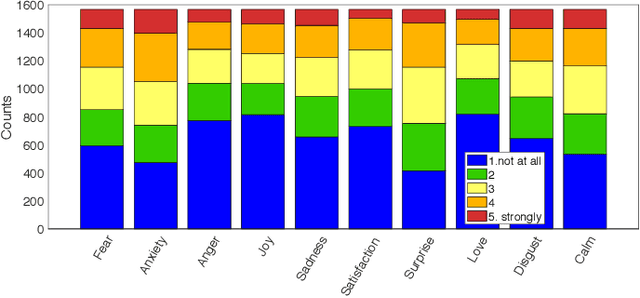

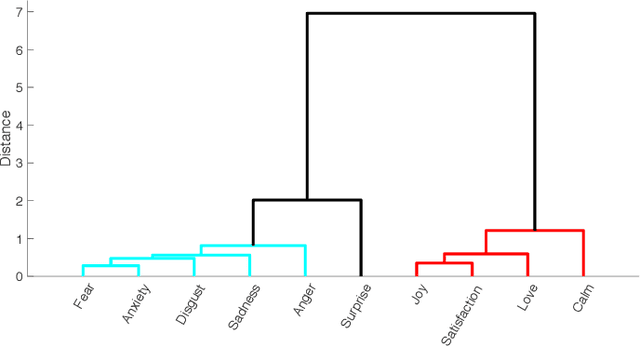

Abstract: Emotions are an inseparable part of human nature, affecting our behavior in response to the outside world. Although most empirical studies have been dominated by two theoretical models, discrete categories of emotion and dichotomous dimensions, results from neuroscience approaches suggest a multi-process mechanism underpinning emotional experience, with a large overlap across different emotions. While these findings are consistent with the influential theories of emotion in psychology that emphasize a role for multiple component processes in generating emotion episodes, few studies have systematically investigated the relationship between discrete emotions and a full componential view. This paper applies a componential framework with a data-driven approach to characterize emotional experiences evoked during movie watching. The results suggest that differences between various emotions can be captured by a few (at least 6) latent dimensions, each defined by features associated with component processes, including appraisal, expression, physiology, motivation, and feeling. In addition, the link between discrete emotions and the component model is explored, and the results show that a componential model with a limited number of descriptors is still able to predict the level of experienced discrete emotion(s) to a satisfactory level. Finally, as appraisals may vary according to individual dispositions and biases, we also study the relationship between personality traits and emotions in our computational framework and show that the role of personality in discrete emotion differences can be better justified using the component model.

* 13 pages
