Paris Smaragdis

Heterogeneous Target Speech Separation

Apr 07, 2022

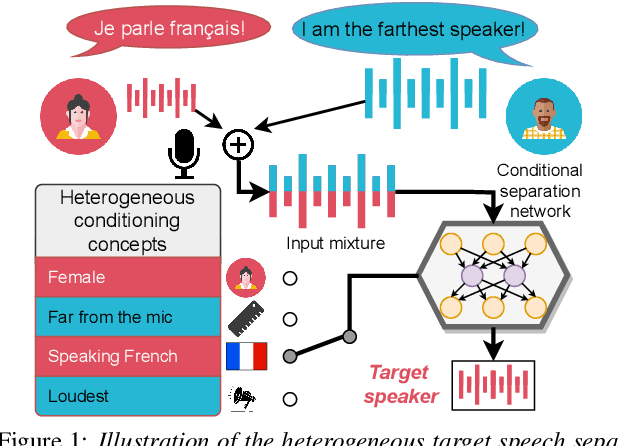

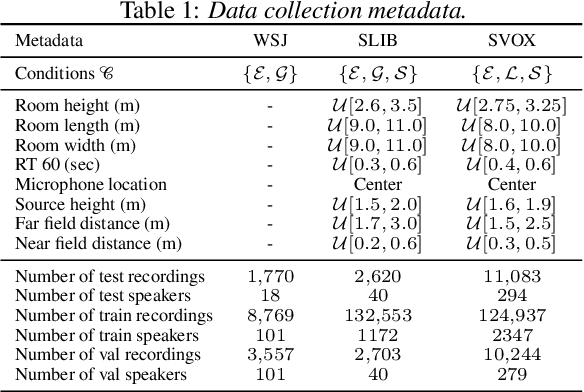

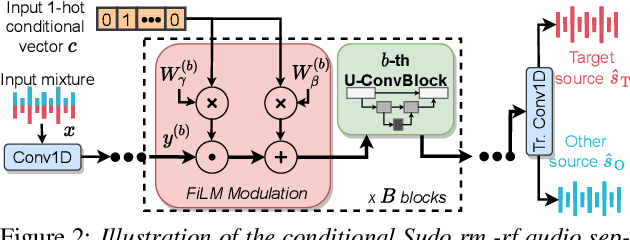

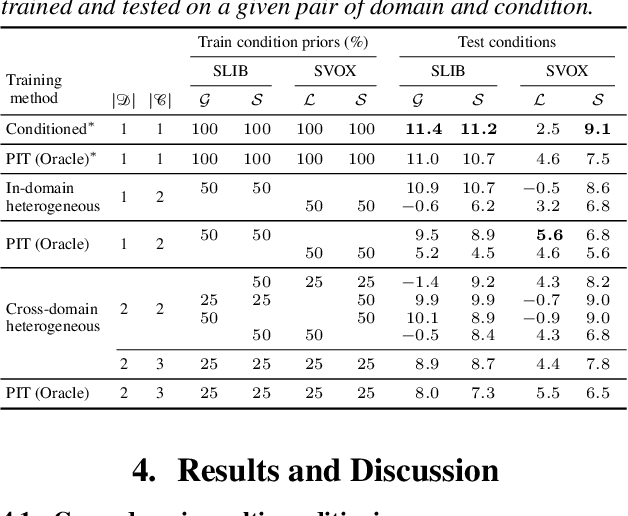

Abstract: We introduce a new paradigm for single-channel target source separation where the sources of interest can be distinguished using non-mutually exclusive concepts (e.g., loudness, gender, language, spatial location). Our proposed heterogeneous separation framework can seamlessly leverage datasets with large distribution shifts and learn cross-domain representations under a variety of concepts used as conditioning. Our experiments show that training separation models with heterogeneous conditions facilitates generalization to new concepts with unseen out-of-domain data while also performing substantially better than single-domain specialist models. Notably, such training leads to more robust learning of new, harder discriminative concepts for source separation and can yield improvements over permutation invariant training with oracle source selection. We analyze the intrinsic behavior of source separation training with heterogeneous metadata and propose ways to alleviate emerging problems with challenging separation conditions. We release the collection of preparation recipes for all datasets used to further promote research towards this challenging task.

End-to-end LPCNet: A Neural Vocoder With Fully-Differentiable LPC Estimation

Mar 29, 2022

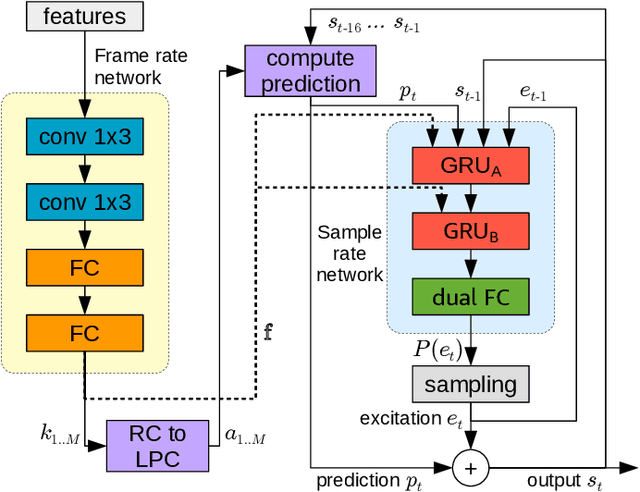

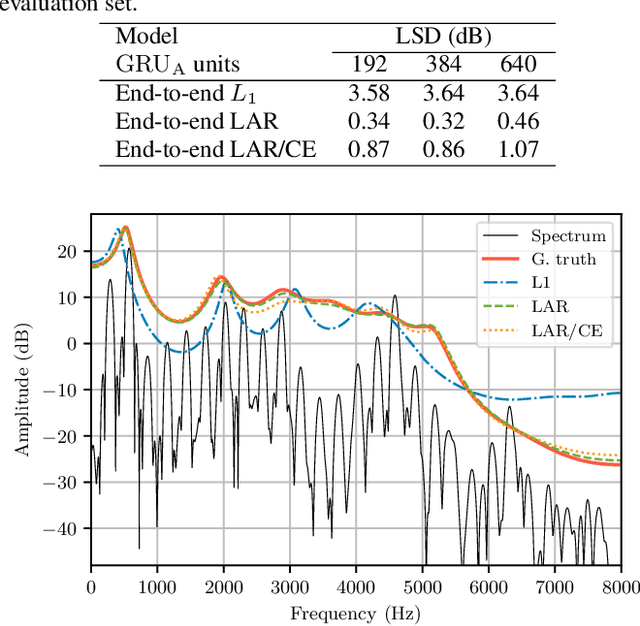

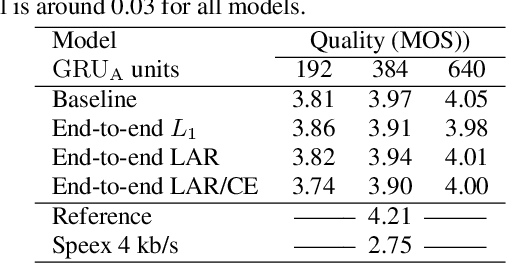

Abstract: Neural vocoders have recently demonstrated high-quality speech synthesis, but typically incur high computational complexity. LPCNet was proposed as a way to reduce the complexity of neural synthesis by using linear prediction (LP) to assist an autoregressive model. At inference time, LPCNet relies on the LP coefficients being explicitly computed from the input acoustic features. That makes the design of LPCNet-based systems more complicated, while adding the constraint that the input features must represent a clean speech spectrum. We propose an end-to-end version of LPCNet that lifts these limitations by learning to infer the LP coefficients from the input features in the frame rate network. Results show that the proposed end-to-end approach equals or exceeds the quality of the original LPCNet model, but without explicit LP analysis. Our open-source end-to-end model still benefits from LPCNet's low complexity, while allowing for any type of conditioning features.
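
To make "explicit LP analysis" concrete, here is a minimal sketch of the classical procedure: autocorrelation followed by the Levinson-Durbin recursion. It is an illustration only. The function names and the toy frame are hypothetical, and the coefficients are computed directly from a waveform for simplicity, whereas the abstract states only that LPCNet derives them from the input acoustic features.

```python
def autocorrelation(signal, max_lag):
    """Unnormalized autocorrelation r[0..max_lag] of a finite frame."""
    return [
        sum(signal[n] * signal[n - lag] for n in range(lag, len(signal)))
        for lag in range(max_lag + 1)
    ]


def levinson_durbin(r, order):
    """Solve the LP normal equations; returns (coeffs, residual_energy).

    coeffs[k - 1] multiplies x[n - k] in the prediction of x[n].
    """
    a = [0.0] * (order + 1)  # a[0] is unused
    err = r[0]
    for i in range(1, order + 1):
        acc = r[i] - sum(a[j] * r[i - j] for j in range(1, i))
        k = acc / err  # reflection coefficient for this order
        prev = a[:]
        a[i] = k
        for j in range(1, i):
            a[j] = prev[j] - k * prev[i - j]
        err *= 1.0 - k * k  # prediction error shrinks with each order
    return a[1:], err


# Toy frame (an assumption): a decaying exponential, which a
# first-order predictor with coefficient 0.9 fits almost exactly.
frame = [0.9 ** n for n in range(200)]
r = autocorrelation(frame, 2)
lp_coeffs, residual = levinson_durbin(r, 2)
```

On this toy frame the first coefficient comes out close to 0.9 and the second close to zero. The end-to-end model described above replaces this hand-written analysis with coefficients predicted by the network.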

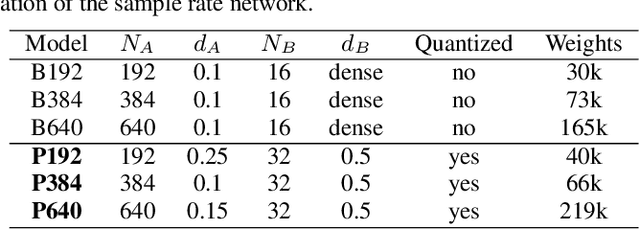

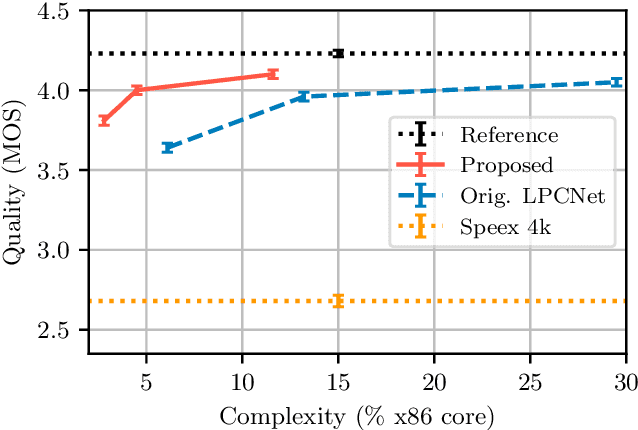

Neural Speech Synthesis on a Shoestring: Improving the Efficiency of LPCNet

Feb 22, 2022

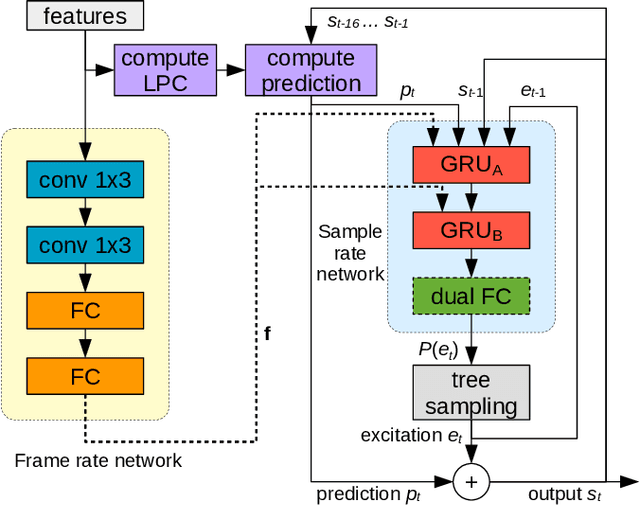

Abstract: Neural speech synthesis models can synthesize high-quality speech but typically incur high computational complexity to do so. In previous work, we introduced LPCNet, which uses linear prediction to significantly reduce the complexity of neural synthesis. In this work, we further improve the efficiency of LPCNet, targeting both algorithmic and computational improvements, to make it usable on a wide variety of devices. We demonstrate an improvement in synthesis quality while operating 2.5x faster. The resulting open-source LPCNet algorithm can perform real-time neural synthesis on most existing phones and is even usable in some embedded devices.

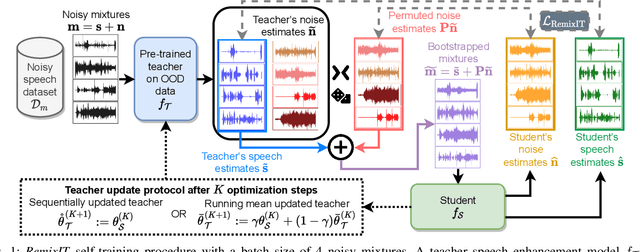

RemixIT: Continual self-training of speech enhancement models via bootstrapped remixing

Feb 22, 2022

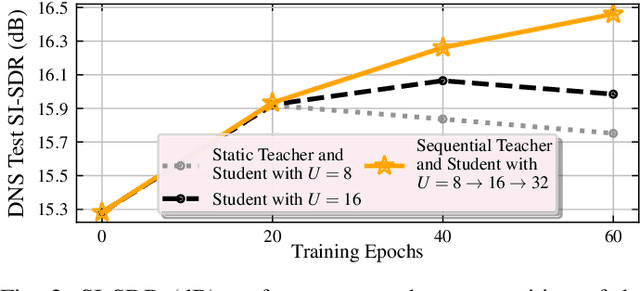

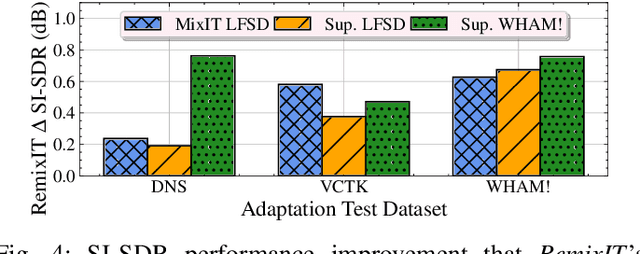

Abstract: We present RemixIT, a simple yet effective self-supervised method for training speech enhancement models without the need for a single isolated in-domain speech or noise waveform. Our approach overcomes limitations of previous methods, which depend on clean in-domain target signals and are thus sensitive to any domain mismatch between train and test samples. RemixIT is based on a continuous self-training scheme in which a teacher model pre-trained on out-of-domain data infers estimated pseudo-target signals for in-domain mixtures. Then, by permuting the estimated clean and noise signals and remixing them together, we generate a new set of bootstrapped mixtures and corresponding pseudo-targets which are used to train the student network. Conversely, the teacher periodically refines its estimates using the updated parameters of the latest student models. Experimental results on multiple speech enhancement datasets and tasks not only show the superiority of our method over prior approaches but also showcase that RemixIT can be combined with any separation model and applied to any semi-supervised or unsupervised domain adaptation task. Our analysis, paired with empirical evidence, sheds light on the inner workings of our self-training scheme, wherein the student model keeps obtaining better performance while observing severely degraded pseudo-targets.
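
The remixing step described in the abstract can be sketched in a few lines. This is a hedged illustration, not the authors' implementation: `bootstrapped_remix` is a hypothetical name, the teacher's outputs are hard-coded toy lists, and only the noise estimates are permuted across the batch, which is one way to realize the "permute and remix" idea.

```python
import random


def bootstrapped_remix(est_speech, est_noise, rng):
    """Pair each speech estimate with a noise estimate from a shuffled batch.

    Returns (new_mixtures, speech_targets, noise_targets). The student
    would be trained to recover the two targets from each new mixture.
    """
    order = list(range(len(est_noise)))
    rng.shuffle(order)  # random permutation of the batch indices
    shuffled_noise = [est_noise[j] for j in order]
    new_mixtures = [
        [s + n for s, n in zip(speech, noise)]
        for speech, noise in zip(est_speech, shuffled_noise)
    ]
    return new_mixtures, est_speech, shuffled_noise


# Toy teacher outputs for a batch of three in-domain mixtures (assumed values).
rng = random.Random(0)
est_speech = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6], [0.7, 0.8, 0.9]]
est_noise = [[1.0, 1.0, 1.0], [2.0, 2.0, 2.0], [3.0, 3.0, 3.0]]
mixtures, speech_targets, noise_targets = bootstrapped_remix(
    est_speech, est_noise, rng
)
```

Each bootstrapped mixture is, by construction, the exact sum of its two pseudo-targets, so the student always sees a consistent training pair even when the teacher's estimates are imperfect.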

Auto-DSP: Learning to Optimize Acoustic Echo Cancellers

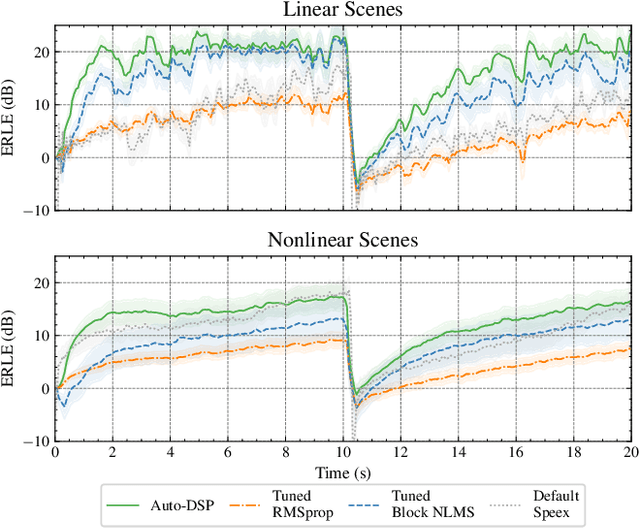

Oct 08, 2021

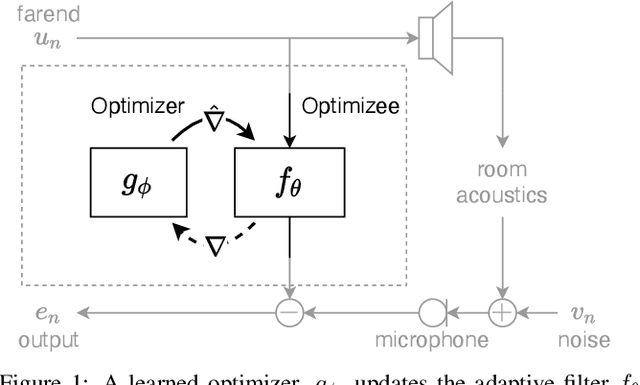

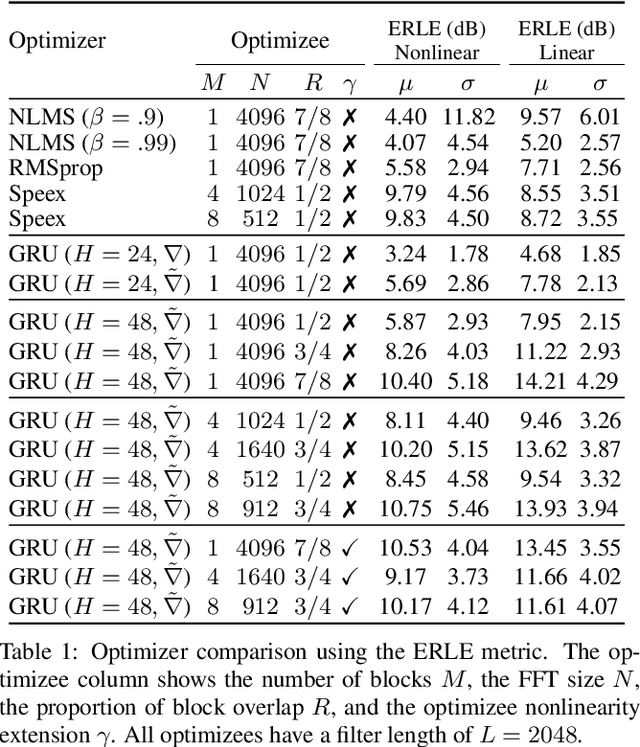

Abstract: Adaptive filtering algorithms are commonplace in signal processing and have wide-ranging applications from single-channel denoising to multi-channel acoustic echo cancellation and adaptive beamforming. Such algorithms typically operate via specialized online, iterative optimization methods and have achieved tremendous success, but require expert knowledge, are slow to develop, and are difficult to customize. In our work, we present a new method to automatically learn adaptive filtering update rules directly from data. To do so, we frame adaptive filtering as a differentiable operator and train a learned optimizer to output a gradient descent-based update rule from data via backpropagation through time. We demonstrate our general approach on an acoustic echo cancellation task (single-talk with noise) and show that we can learn high-performing adaptive filters for a variety of common linear and non-linear multidelayed block frequency domain filter architectures. We also find that our learned update rules exhibit fast convergence, can optimize in the presence of nonlinearities, and are robust to acoustic scene changes despite never encountering any during training.

Sound Event Detection with Adaptive Frequency Selection

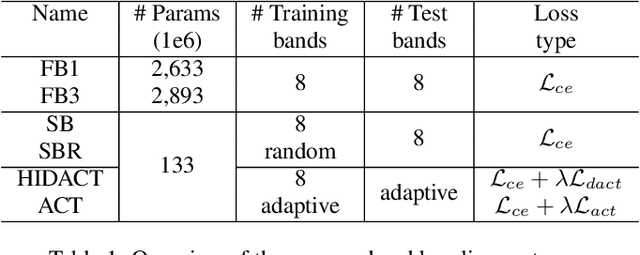

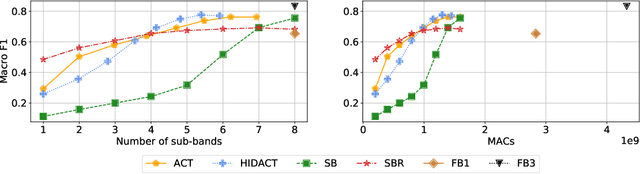

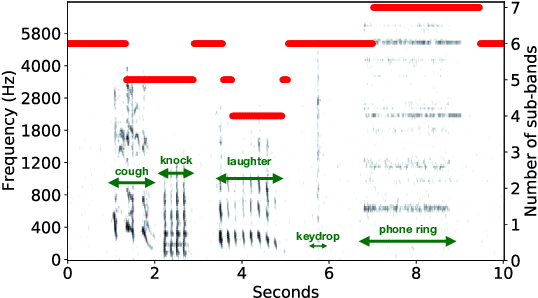

May 17, 2021

Abstract: In this work, we present HIDACT, a novel network architecture for adaptive computation for efficiently recognizing acoustic events. We evaluate the model on a sound event detection task where we train it to adaptively process frequency bands. The model learns to adapt to the input without requesting all frequency sub-bands provided. It can make confident predictions within fewer processing steps, hence reducing the amount of computation. Experimental results show that HIDACT has comparable performance to baseline models with more parameters and higher computational complexity. Furthermore, the model can adjust the amount of computation based on the data and computational budget.

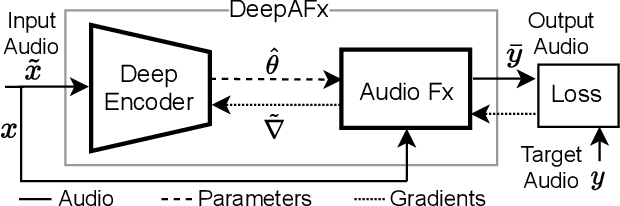

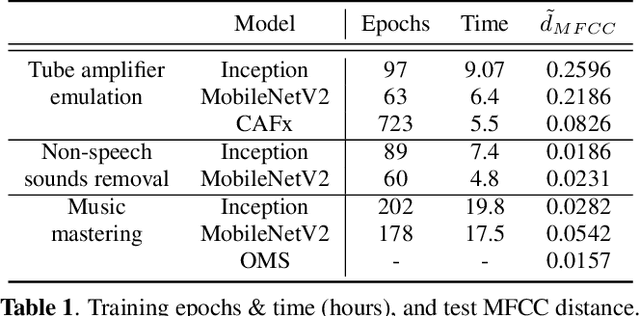

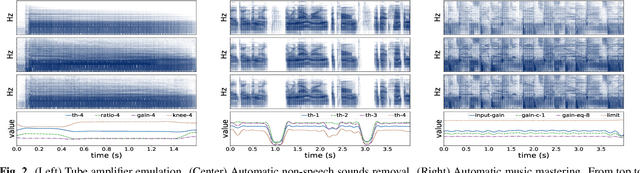

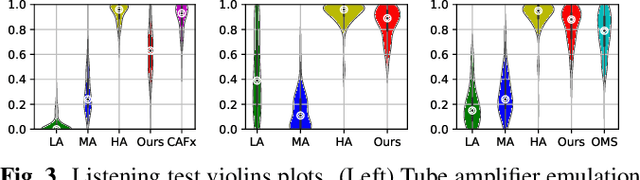

Differentiable Signal Processing With Black-Box Audio Effects

May 11, 2021

Abstract: We present a data-driven approach to automate audio signal processing by incorporating stateful, third-party audio effects as layers within a deep neural network. We then train a deep encoder to analyze input audio and control effect parameters to perform the desired signal manipulation, requiring only input-target paired audio data as supervision. To train our network with non-differentiable black-box effects layers, we use a fast, parallel stochastic gradient approximation scheme within a standard automatic differentiation graph, yielding efficient end-to-end backpropagation. We demonstrate the power of our approach with three separate automatic audio production applications: tube amplifier emulation, automatic removal of breaths and pops from voice recordings, and automatic music mastering. We validate our results with a subjective listening test, showing that our approach not only can enable new automatic audio effects tasks, but can yield results comparable to a specialized, state-of-the-art commercial solution for music mastering.
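
The abstract does not name the gradient estimator, so the sketch below uses simultaneous perturbation stochastic approximation (SPSA) as one well-known example of a stochastic gradient approximation for a black box. Everything here is an assumption made for illustration: the toy `black_box_effect` (gain plus offset), the mean-squared-error loss, and the step sizes. In the setting described above, a neural encoder would produce the effect parameters; here they are optimized directly to keep the example short.

```python
import random


def black_box_effect(audio, params):
    """Stand-in for a non-differentiable effect: gain followed by DC offset."""
    gain, offset = params
    return [gain * x + offset for x in audio]


def loss(params, audio, target):
    """Mean squared error between the processed audio and the target."""
    out = black_box_effect(audio, params)
    return sum((o - t) ** 2 for o, t in zip(out, target)) / len(target)


def spsa_gradient(params, audio, target, rng, step=1e-2):
    """Estimate the gradient for all parameters from two effect evaluations."""
    delta = [rng.choice((-1.0, 1.0)) for _ in params]  # random sign vector
    plus = [p + step * d for p, d in zip(params, delta)]
    minus = [p - step * d for p, d in zip(params, delta)]
    diff = loss(plus, audio, target) - loss(minus, audio, target)
    return [diff / (2.0 * step * d) for d in delta]


rng = random.Random(0)
audio = [1.0, -1.0, 2.0, -2.0]
target = black_box_effect(audio, [0.5, 0.2])  # the "desired" processing
params = [1.0, 0.0]
initial_loss = loss(params, audio, target)
for _ in range(300):
    grad = spsa_gradient(params, audio, target, rng)
    params = [p - 0.05 * g for p, g in zip(params, grad)]
final_loss = loss(params, audio, target)
```

The cost per estimate is two calls to the effect regardless of how many parameters it has, and independent estimates can be evaluated in parallel.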

Separate but Together: Unsupervised Federated Learning for Speech Enhancement from Non-IID Data

May 11, 2021

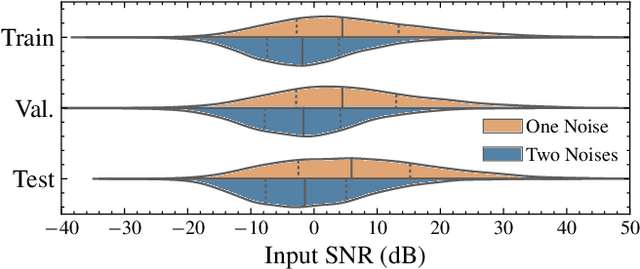

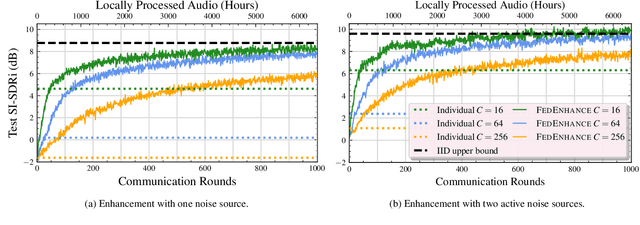

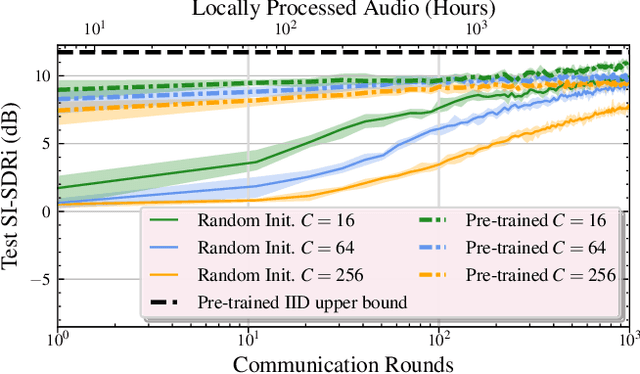

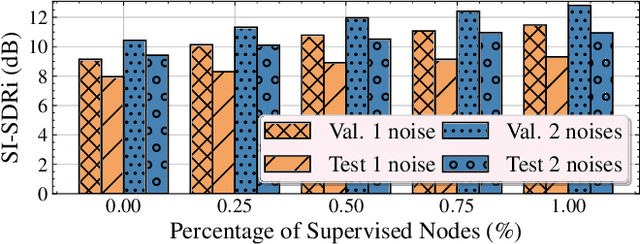

Abstract: We propose FEDENHANCE, an unsupervised federated learning (FL) approach for speech enhancement and separation with non-IID distributed data across multiple clients. We simulate a real-world scenario where each client only has access to a few noisy recordings from a limited and disjoint number of speakers (hence non-IID). Each client trains its model in isolation using mixture invariant training while periodically providing updates to a central server. Our experiments show that our approach achieves competitive enhancement performance compared to IID training on a single device and that we can further improve the convergence speed and the overall performance using transfer learning on the server side. Moreover, we show that we can effectively combine updates from clients trained locally with supervised and unsupervised losses. We also release a new dataset, LibriFSD50K, and its creation recipe in order to facilitate FL research for source separation problems.
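
Mixture invariant training, which each client uses here, can be illustrated with a brute-force sketch: two mixtures are added together, the model separates the sum into several sources, and the loss is taken under the best assignment of each estimated source to one of the two original mixtures. The function name, the squared-error loss, and the toy signals below are assumptions for illustration; published formulations typically use a signal-to-noise-ratio loss.

```python
from itertools import product


def mixit_loss(est_sources, mix1, mix2):
    """Return (best_loss, best_assignment) over all binary assignments.

    assignment[i] == 0 sends estimated source i to mix1, 1 sends it to mix2.
    """
    best = None
    for assignment in product((0, 1), repeat=len(est_sources)):
        remix = [[0.0] * len(mix1), [0.0] * len(mix2)]
        for source, which in zip(est_sources, assignment):
            for t, value in enumerate(source):
                remix[which][t] += value
        loss = sum((a - b) ** 2 for a, b in zip(remix[0], mix1))
        loss += sum((a - b) ** 2 for a, b in zip(remix[1], mix2))
        if best is None or loss < best[0]:
            best = (loss, assignment)
    return best


# Toy case: the "model" has separated the sum of the two mixtures perfectly.
speech1, noise1, speech2 = [1.0, 2.0], [0.5, -0.5], [3.0, -1.0]
mix1 = [s + n for s, n in zip(speech1, noise1)]
mix2 = speech2[:]
best_loss, best_assignment = mixit_loss([speech1, noise1, speech2], mix1, mix2)
```

No clean reference signals appear anywhere in the loss, which is why the method suits clients that only hold noisy recordings.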

Point Cloud Audio Processing

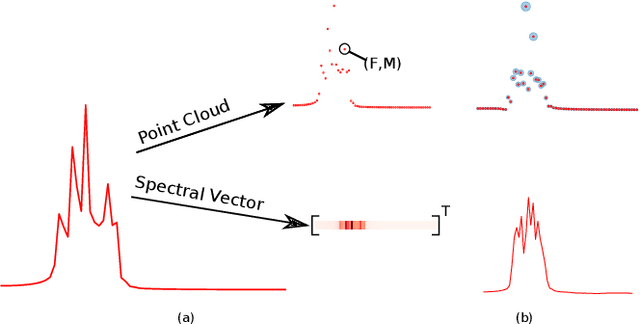

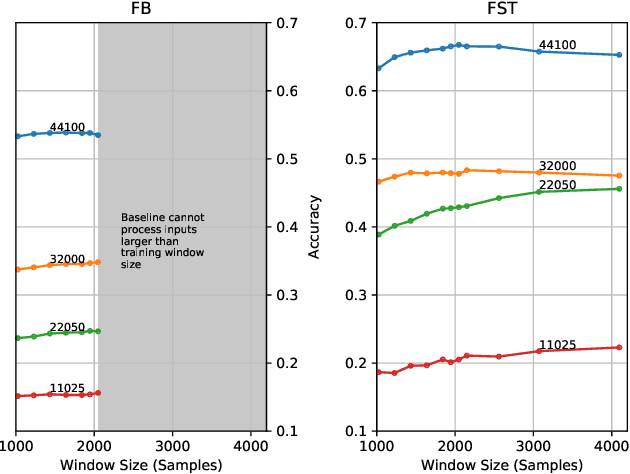

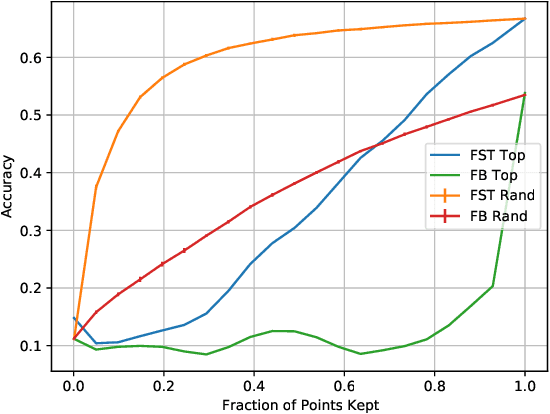

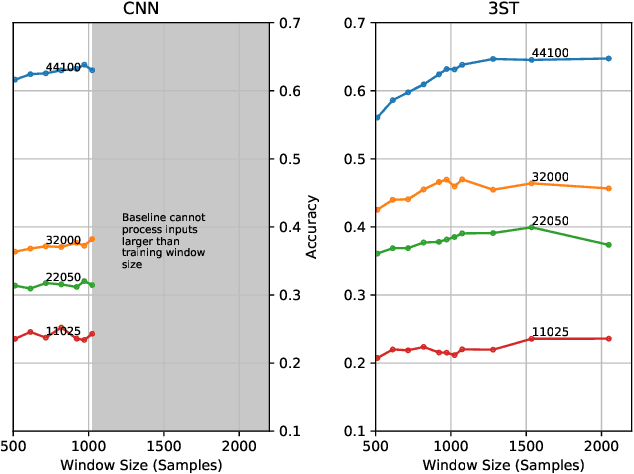

May 06, 2021

Abstract: Most audio processing pipelines involve transformations that act on fixed-dimensional input representations of audio. For example, when using the Short Time Fourier Transform (STFT), the DFT size specifies a fixed dimension for the input representation. As a consequence, most audio machine learning models are designed to process fixed-size vector inputs, which often prohibits the repurposing of learned models on audio with different sampling rates or alternative representations. We note, however, that the intrinsic spectral information in the audio signal is invariant to the choice of the input representation or the sampling rate. Motivated by this, we introduce a novel way of processing audio signals by treating them as a collection of points in feature space, and we use point cloud machine learning models that give us invariance to the choice of representation parameters, such as DFT size or the sampling rate. Additionally, we observe that these methods result in smaller models, and allow us to significantly subsample the input representation with minimal effect on a trained model's performance.

Compute and memory efficient universal sound source separation

Mar 03, 2021

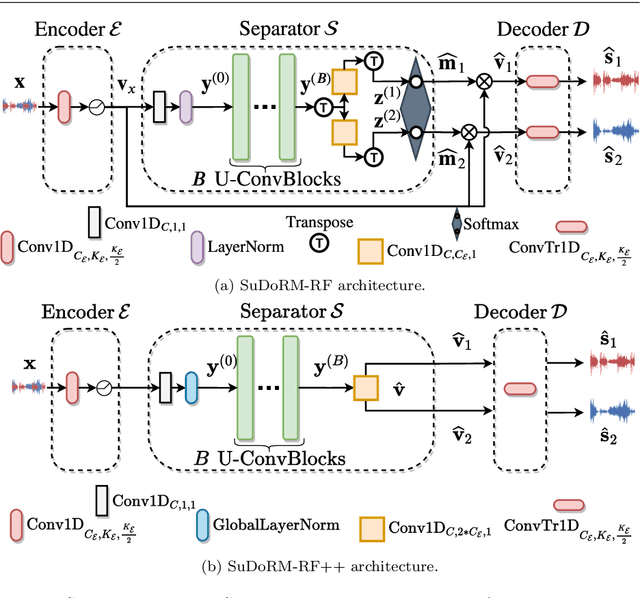

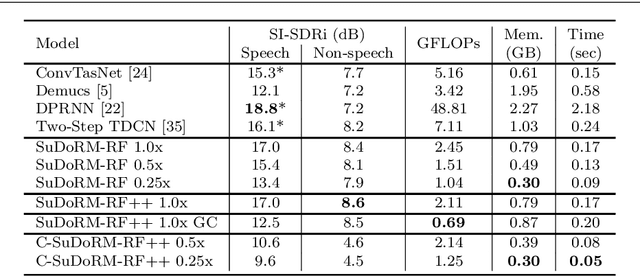

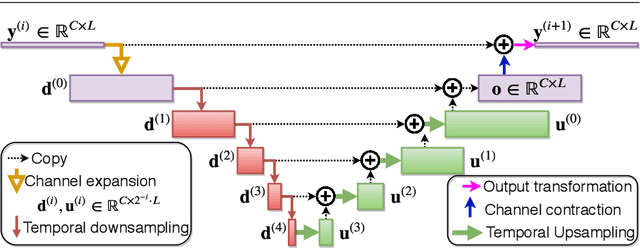

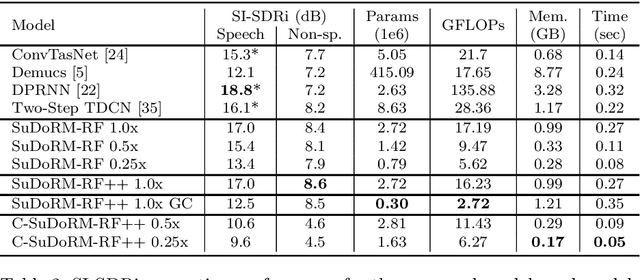

Abstract: Recent progress in audio source separation led by deep learning has enabled many neural network models to provide robust solutions to this fundamental estimation problem. In this study, we provide a family of efficient neural network architectures for general purpose audio source separation while focusing on multiple computational aspects that hinder the application of neural networks in real-world scenarios. The backbone structure of this convolutional network is the SUccessive DOwnsampling and Resampling of Multi-Resolution Features (SuDoRM-RF) as well as their aggregation, which is performed through simple one-dimensional convolutions. This mechanism enables our models to obtain high-fidelity signal separation in a wide variety of settings where a variable number of sources are present and with limited computational resources (e.g., floating point operations, memory footprint, number of parameters and latency). Our experiments show that SuDoRM-RF models perform comparably to, and even surpass, several state-of-the-art benchmarks with significantly higher computational resource requirements. The causal variation of SuDoRM-RF is able to obtain competitive performance in real-time speech separation of around 10 dB scale-invariant signal-to-distortion ratio improvement (SI-SDRi) while remaining up to 20 times faster than real-time on a laptop device.
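
For readers unfamiliar with the metric quoted above, the following sketch computes SI-SDR and its improvement over the unprocessed mixture using the standard definition: project the estimate onto the reference, then compare the energy of that projection with the energy of the remainder. The signals are toy values chosen for illustration, and the small `eps` guard is an implementation convenience rather than part of the definition.

```python
import math


def si_sdr(estimate, reference, eps=1e-12):
    """Scale-invariant signal-to-distortion ratio in dB."""
    dot = sum(e * r for e, r in zip(estimate, reference))
    ref_energy = sum(r * r for r in reference)
    scale = dot / (ref_energy + eps)  # optimal rescaling of the reference
    target = [scale * r for r in reference]
    residual = [e - t for e, t in zip(estimate, target)]
    target_energy = sum(t * t for t in target)
    residual_energy = sum(n * n for n in residual)
    return 10.0 * math.log10((target_energy + eps) / (residual_energy + eps))


reference = [1.0, 0.0, -1.0, 0.0]   # clean source
mixture = [1.0, 1.0, -1.0, -1.0]    # source plus an equal-energy interferer
estimate = [1.1, 0.1, -0.9, 0.1]    # hypothetical model output

# SI-SDRi: how much the model improves on simply using the mixture.
improvement = si_sdr(estimate, reference) - si_sdr(mixture, reference)
```

Because of the projection step, multiplying the estimate by any nonzero constant leaves the score unchanged, which is what makes the metric scale-invariant.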
