Panagiotis Artemiadis

On Swarm Leader Identification using Probing Policies

Dec 20, 2025

Abstract: Identifying the leader within a robotic swarm is crucial, especially in adversarial contexts where leader concealment is necessary for mission success. This work introduces the interactive Swarm Leader Identification (iSLI) problem, a novel approach where an adversarial probing agent identifies a swarm's leader by physically interacting with its members. We formulate the iSLI problem as a Partially Observable Markov Decision Process (POMDP) and employ Deep Reinforcement Learning, specifically Proximal Policy Optimization (PPO), to train the prober's policy. The proposed approach utilizes a novel neural network architecture featuring a Timed Graph Relationformer (TGR) layer combined with a Simplified Structured State Space Sequence (S5) model. The TGR layer effectively processes graph-based observations of the swarm, capturing temporal dependencies and fusing relational information using a learned gating mechanism to generate informative representations for policy learning. Extensive simulations demonstrate that our TGR-based model outperforms baseline graph neural network architectures and exhibits significant zero-shot generalization capabilities across varying swarm sizes and speeds different from those used during training. The trained prober achieves high accuracy in identifying the leader, maintaining performance even in out-of-training distribution scenarios, and showing appropriate confidence levels in its predictions. Real-world experiments with physical robots further validate the approach, confirming successful sim-to-real transfer and robustness to dynamic changes, such as unexpected agent disconnections.

Toward Seamless Physical Human-Humanoid Interaction: Insights from Control, Intent, and Modeling with a Vision for What Comes Next

Dec 08, 2025

Abstract: Physical Human-Humanoid Interaction (pHHI) is a rapidly advancing field with significant implications for deploying robots in unstructured, human-centric environments. In this review, we examine the current state of the art in pHHI through three core pillars: (i) humanoid modeling and control, (ii) human intent estimation, and (iii) computational human models. For each pillar, we survey representative approaches, identify open challenges, and analyze current limitations that hinder robust, scalable, and adaptive interaction. These include the need for whole-body control strategies capable of handling uncertain human dynamics, real-time intent inference under limited sensing, and modeling techniques that account for variability in human physical states. Although significant progress has been made within each domain, integration across pillars remains limited. We propose pathways for unifying methods across these areas to enable cohesive interaction frameworks. This structure enables us not only to map the current landscape but also to propose concrete directions for future research that aim to bridge these domains. Additionally, we introduce a unified taxonomy of interaction types based on modality, distinguishing between direct interactions (e.g., physical contact) and indirect interactions (e.g., object-mediated), and on the level of robot engagement, ranging from assistance to cooperation and collaboration. For each category in this taxonomy, we provide the three core pillars that highlight opportunities for cross-pillar unification. Our goal is to suggest avenues to advance robust, safe, and intuitive physical interaction, providing a roadmap for future research that will allow humanoid systems to effectively understand, anticipate, and collaborate with human partners in diverse real-world settings.

Efficient and Compliant Control Framework for Versatile Human-Humanoid Collaborative Transportation

Dec 08, 2025

Abstract: We present a control framework that enables humanoid robots to perform collaborative transportation tasks with a human partner. The framework supports both translational and rotational motions, which are fundamental to co-transport scenarios. It comprises three components: a high-level planner, a low-level controller, and a stiffness modulation mechanism. At the planning level, we introduce the Interaction Linear Inverted Pendulum (I-LIP), which, combined with an admittance model and an MPC formulation, generates dynamically feasible footstep plans. These are executed by a QP-based whole-body controller that accounts for the coupled humanoid-object dynamics. Stiffness modulation regulates robot-object interaction, ensuring convergence to the desired relative configuration defined by the distance between the object and the robot's center of mass. We validate the effectiveness of the framework through real-world experiments conducted on the Digit humanoid platform. To quantify collaboration quality, we propose an efficiency metric that captures both task performance and inter-agent coordination. We show that this metric highlights the role of compliance in collaborative tasks and offers insights into desirable trajectory characteristics across both high- and low-level control layers. Finally, we showcase experimental results on collaborative behaviors, including translation, turning, and combined motions such as semicircular trajectories, representative of naturally occurring co-transportation tasks.

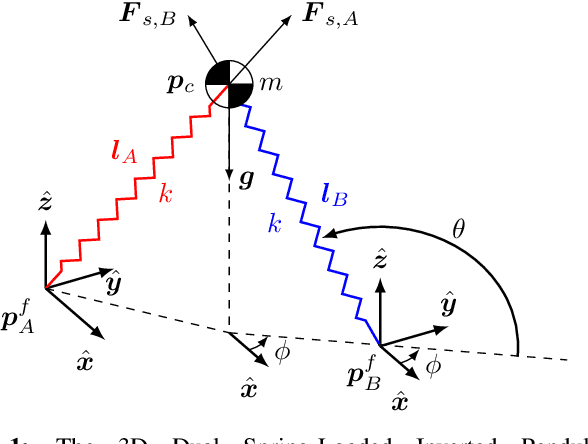

Robust Dynamic Walking for a 3D Dual-SLIP Model under One-Step Unilateral Stiffness Perturbations: Towards Bipedal Locomotion over Compliant Terrain

Mar 14, 2022

Abstract: Bipedal walking is one of the most important hallmarks of humans, and one that robots have been trying to mimic for many decades. Although previous control methodologies have achieved robot walking on some terrains, there is a need for a framework allowing stable and robust locomotion over a wide range of compliant surfaces. This work proposes a novel biomechanics-inspired controller that adjusts the stiffness of the legs in support for robust and dynamic bipedal locomotion over compliant terrains. First, the 3D Dual-SLIP model is extended to support, for the first time, locomotion over compliant surfaces with variable stiffness and damping parameters. Then, the proposed controller is compared to a Linear-Quadratic Regulator (LQR) controller in terms of robustness when stepping on soft terrain. The LQR controller is shown to be robust only down to a moderate ground stiffness level of 174 kN/m, and it fails at lower stiffness levels. In contrast, the proposed controller can produce a stable gait at stiffness levels as low as 30 kN/m, which results in a vertical ground penetration of the leg that is deeper than 10% of its rest length. The proposed framework could advance the field of bipedal walking by generating stable walking trajectories for a wide range of compliant terrains, useful for the control of bipeds and humanoids, as well as by improving controllers for prosthetic devices with tunable stiffness.

Repeated Robot-Assisted Unilateral Stiffness Perturbations Result in Significant Aftereffects Relevant to Post-Stroke Gait Rehabilitation

Mar 01, 2022

Abstract: Due to hemiparesis, stroke survivors frequently develop a dysfunctional gait that is often characterized by an overall decrease in walking speed and a unilateral decrease in step length. With millions currently affected by this dysfunctional gait, robust and effective rehabilitation protocols are needed. Although robotic devices have been used in numerous rehabilitation protocols for gait, the lack of significant aftereffects that translate to effective therapy makes their application still questionable. This paper proposes a novel type of robot-assisted intervention that results in significant aftereffects that last much longer than those reported in any previous study. Using a novel robotic device, the Variable Stiffness Treadmill (VST), the stiffness of the walking surface underneath one leg is decreased for a number of steps. This unilateral stiffness perturbation results in a significant aftereffect that is both useful for stroke rehabilitation and often lasts for over 200 gait cycles after the intervention has concluded. More specifically, the aftereffect created is an increase in both left and right step lengths, with the unperturbed step length increasing significantly more than the perturbed one. These effects may be helpful in correcting two of the most common issues in post-stroke gait: an overall decrease in walking speed and a unilaterally shortened step length. The results of this work show that a robot-assisted therapy protocol involving repeated unilateral stiffness perturbations can lead to a more permanent and effective solution to post-stroke gait.
