Ouiame Marnissi

FairEnergy: Contribution-Based Fairness meets Energy Efficiency in Federated Learning

Nov 19, 2025

Abstract: Federated learning (FL) enables collaborative model training across distributed devices while preserving data privacy. However, balancing energy efficiency and fair participation while ensuring high model accuracy remains challenging in wireless edge systems due to heterogeneous resources, unequal client contributions, and limited communication capacity. To address these challenges, we propose FairEnergy, a fairness-aware energy minimization framework that integrates a contribution score capturing both the magnitude of updates and their compression ratio into the joint optimization of device selection, bandwidth allocation, and compression level. The resulting mixed-integer non-convex problem is solved by relaxing binary selection variables and applying Lagrangian decomposition to handle global bandwidth coupling, followed by per-device subproblem optimization. Experiments on non-IID data show that FairEnergy achieves higher accuracy while reducing energy consumption by up to 79% compared to baseline strategies.

Joint Probability Selection and Power Allocation for Federated Learning

Jan 15, 2024

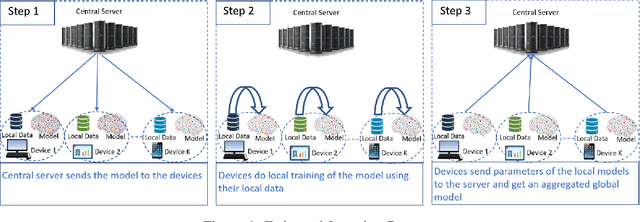

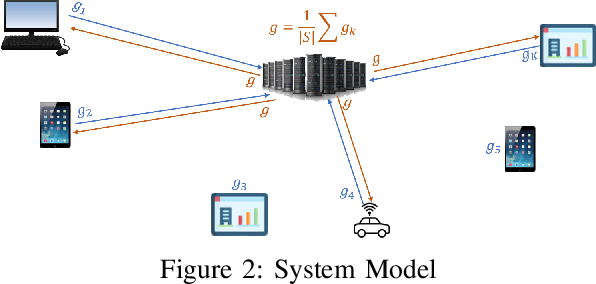

Abstract: In this paper, we study the performance of federated learning over wireless networks, where devices with a limited energy budget train a machine learning model. The federated learning performance depends on the selection of the clients participating in the learning at each round. Most existing studies suggest deterministic approaches for client selection, resulting in challenging optimization problems that are usually solved using heuristics, and therefore without guarantees on the quality of the final solution. We formulate a new probabilistic approach to jointly select clients and allocate power optimally so that the expected number of participating clients is maximized. To solve the problem, a new alternating algorithm is proposed, where at each step, closed-form solutions for the user selection probabilities and power allocations are obtained. Our numerical results show that the proposed approach achieves significant gains in energy consumption, completion time, and accuracy compared to the studied benchmarks.
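As a rough illustration of what probabilistic client selection means, the sketch below draws each client independently with its own selection probability, in which case the expected number of participants is the sum of those probabilities. The independence assumption, the function names, and the example probabilities are all hypothetical; the abstract does not give the paper's closed-form solutions or its power allocation step, and none of that is reproduced here.

```python
import random


def expected_participants(probs):
    """Expected number of selected clients under independent Bernoulli draws.

    By linearity of expectation this is simply the sum of the probabilities.
    """
    return sum(probs)


def sample_participants(probs, rng):
    """Draw one round: client i participates with probability probs[i].

    Independent sampling is an assumption made for this sketch.
    """
    return [i for i, p in enumerate(probs) if rng.random() < p]


# Hypothetical selection probabilities for four clients.
probs = [0.9, 0.5, 0.1, 0.5]
rng = random.Random(0)  # seeded so the round is reproducible
chosen = sample_participants(probs, rng)
print("expected participants:", expected_participants(probs))
print("clients chosen this round:", chosen)
```

A deterministic scheme would fix the participant set in advance; here only the probabilities are decision variables, which is what makes an objective such as the expected participant count well defined.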

Client Selection in Federated Learning based on Gradients Importance

Nov 19, 2021

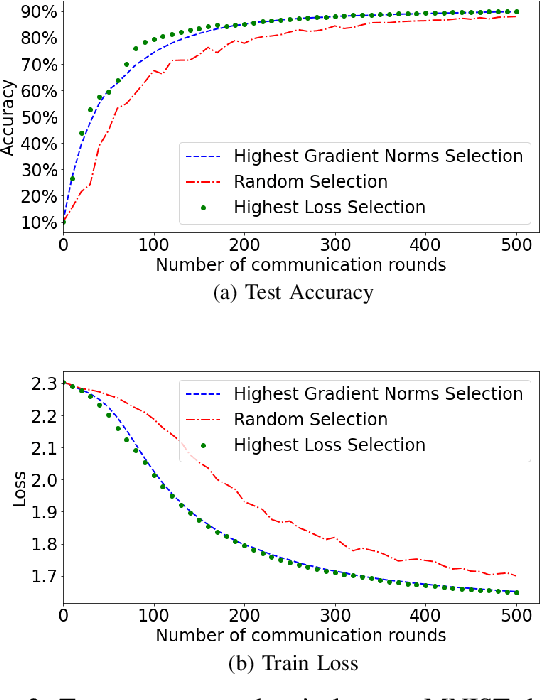

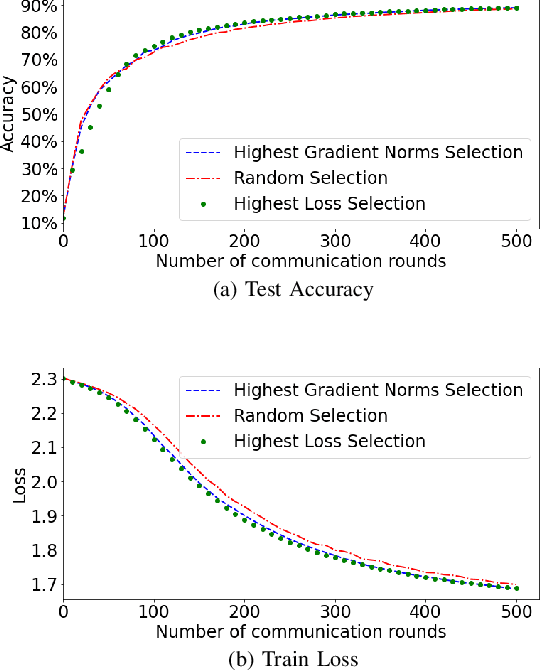

Abstract: Federated learning (FL) enables multiple devices to collaboratively learn a global model without sharing their personal data. In real-world applications, the different parties are likely to have heterogeneous data distributions and limited communication bandwidth. In this paper, we are interested in improving the communication efficiency of FL systems. We investigate and design a device selection strategy based on the importance of the gradient norms. In particular, our approach consists of selecting the devices with the highest gradient norms at each communication round. We study the convergence and the performance of this selection technique and compare it to existing ones. We perform several experiments in a non-IID setup. The results show that our method converges, with a considerable increase in test accuracy compared to random selection.
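The selection rule described in this abstract, picking the devices with the highest gradient norms in each round, can be sketched in a few lines. This is a minimal sketch under stated assumptions: the Euclidean norm, the flat-list gradient representation, the function names, and the example values are all hypothetical, since the abstract does not specify which norm is used or how gradients are collected.

```python
import math


def gradient_norm(grad):
    """Euclidean (L2) norm of a flat gradient vector (assumed norm choice)."""
    return math.sqrt(sum(g * g for g in grad))


def select_top_k(client_grads, k):
    """Return the ids of the k clients with the largest gradient norms.

    client_grads maps a client id to its flattened local gradient.
    """
    ranked = sorted(
        client_grads,
        key=lambda cid: gradient_norm(client_grads[cid]),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical gradients for four clients in one communication round.
client_grads = {
    "a": [0.1, -0.2],
    "b": [3.0, 4.0],
    "c": [1.0, 0.0],
    "d": [0.0, 2.0],
}
selected = select_top_k(client_grads, k=2)
print(selected)
```

Only the selected clients would then upload their updates, which is where the communication savings relative to full participation would come from.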
