Ole-Christoffer Granmo

A Scheme for Continuous Input to the Tsetlin Machine with Applications to Forecasting Disease Outbreaks

May 10, 2019

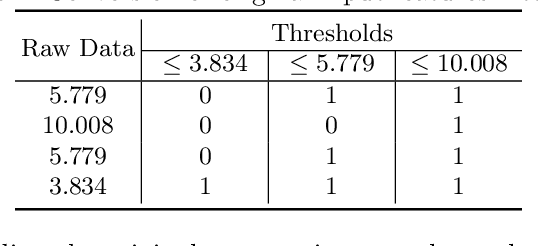

Abstract: In this paper, we apply a promising new tool for pattern classification, namely the Tsetlin Machine (TM), to the field of disease forecasting. The TM is interpretable because it is based on manipulating expressions in propositional logic, leveraging a large team of Tsetlin Automata (TA). Apart from being interpretable, this approach is attractive due to its low computational cost and its capacity to handle noise. To attack the problem of forecasting, we introduce a preprocessing method that extends the TM so that it can handle continuous input. Briefly stated, we convert continuous input into a binary representation based on thresholding. The resulting extended TM is evaluated and analyzed using an artificial dataset. The TM is further applied to forecast dengue outbreaks in all seventeen regions of the Philippines using the spatio-temporal properties of the data. Experimental results show that dengue outbreak forecasts made by the TM are more accurate than those obtained by a Support Vector Machine (SVM), Decision Trees (DTs), and several multi-layered Artificial Neural Networks (ANNs), both in terms of forecasting precision and F1-score.
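The thresholding step mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not say how thresholds are chosen, so the choice below (the sorted unique values of each feature, one bit per threshold) is an assumption, and the names `fit_thresholds` and `binarize` are hypothetical.

```python
from typing import List, Sequence


def fit_thresholds(column: Sequence[float]) -> List[float]:
    """Assumption: use the sorted unique values of the feature as thresholds."""
    return sorted(set(column))


def binarize(value: float, thresholds: Sequence[float]) -> List[int]:
    """Emit one bit per threshold: 1 if value <= threshold, otherwise 0."""
    return [1 if value <= t else 0 for t in thresholds]


# Toy continuous feature with a repeated value.
column = [5.7, 3.2, 5.7, 1.0]
thresholds = fit_thresholds(column)
encoded = [binarize(v, thresholds) for v in column]

for value, bits in zip(column, encoded):
    print(value, bits)
```

Each continuous value becomes a fixed-length bit vector, which is the kind of input a propositional-logic learner can consume.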

The Dreaming Variational Autoencoder for Reinforcement Learning Environments

Oct 02, 2018

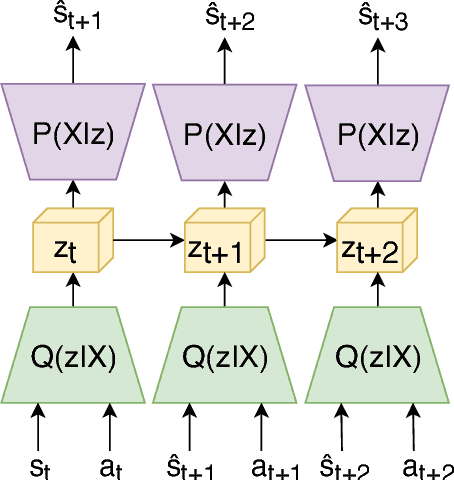

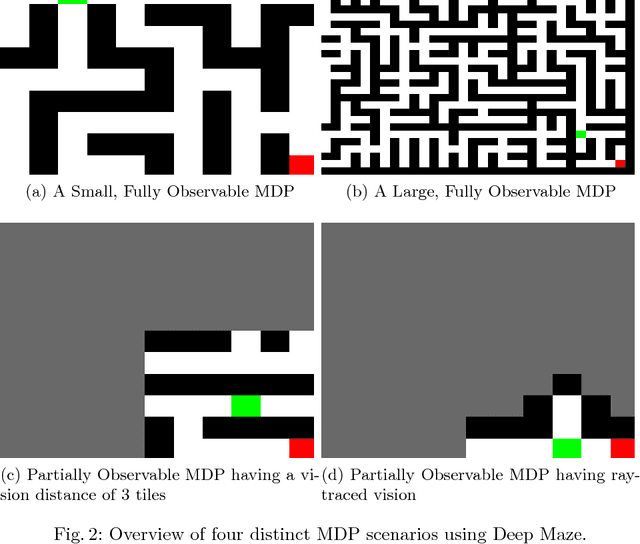

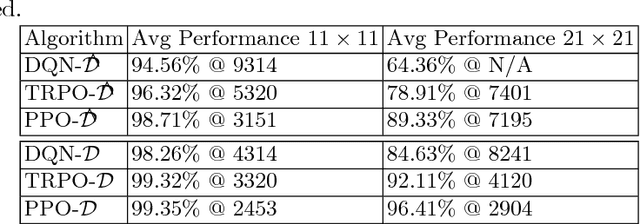

Abstract: Reinforcement learning has shown great potential in generalizing over raw sensory data using only a single neural network for value optimization. There are several challenges in the current state-of-the-art reinforcement learning algorithms that prevent them from converging towards the global optimum. It is likely that the solution to these problems lies in short- and long-term planning, exploration and memory management for reinforcement learning algorithms. Games are often used to benchmark reinforcement learning algorithms as they provide a flexible, reproducible, and easy-to-control environment. Nevertheless, few games feature a state-space where results in exploration, memory, and planning are easily perceived. This paper presents The Dreaming Variational Autoencoder (DVAE), a neural network based generative modeling architecture for exploration in environments with sparse feedback. We further present Deep Maze, a novel and flexible maze engine that challenges DVAE in partially and fully observable state-spaces, long-horizon tasks, and deterministic and stochastic problems. We show initial findings and encourage further work in reinforcement learning driven by generative exploration.

Using the Tsetlin Machine to Learn Human-Interpretable Rules for High-Accuracy Text Categorization with Medical Applications

Sep 16, 2018

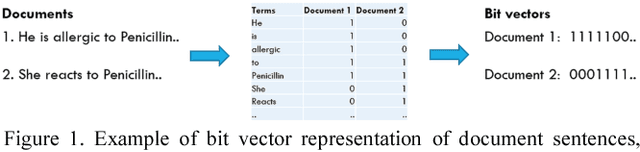

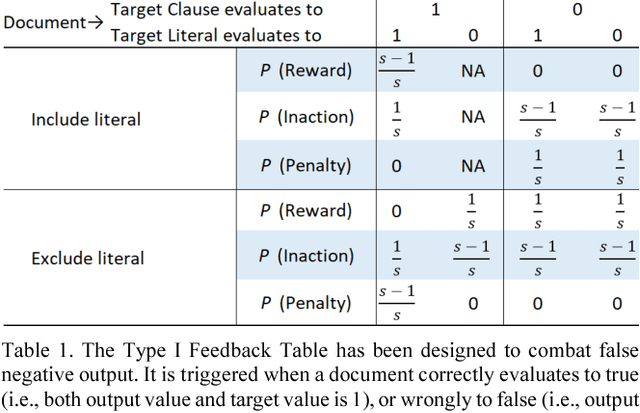

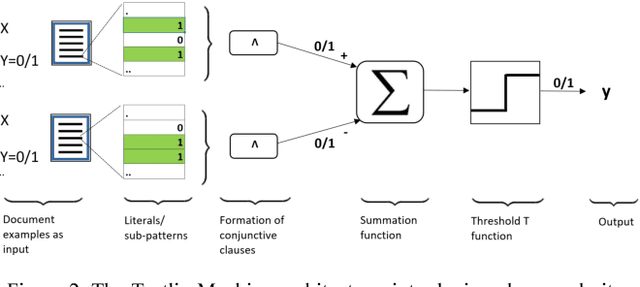

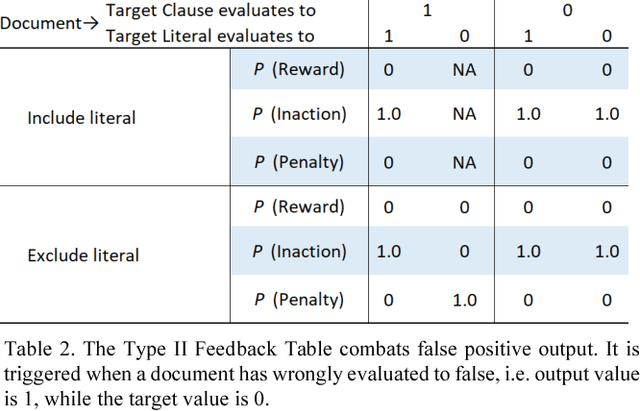

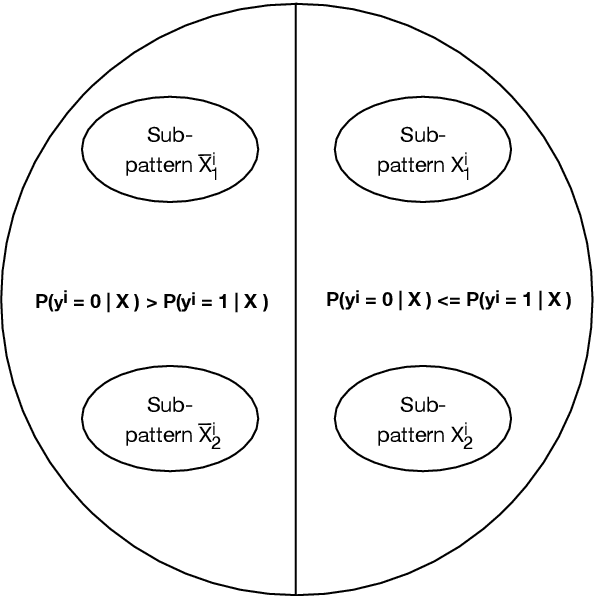

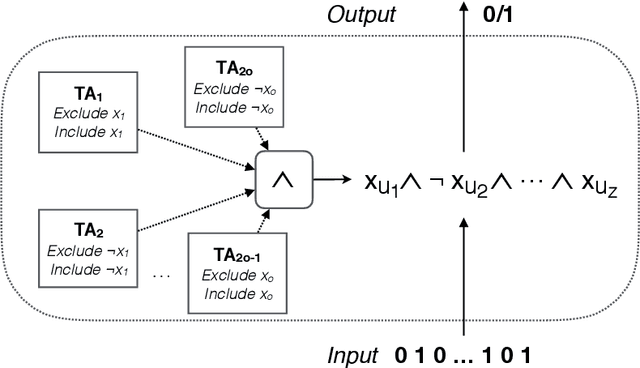

Abstract: Medical applications challenge today's text categorization techniques by demanding both high accuracy and ease of interpretation. Although deep learning has provided a leap ahead in accuracy, this leap comes at the sacrifice of interpretability. To address this accuracy-interpretability challenge, we here introduce, for the first time, a text categorization approach that leverages the recently introduced Tsetlin Machine. In brief, we represent the terms of a text as propositional variables. From these, we capture categories using simple propositional formulae, such as: if "rash" and "reaction" and "penicillin" then Allergy. The Tsetlin Machine learns these formulae from labelled text, utilizing conjunctive clauses to represent the particular facets of each category. Indeed, even the absence of terms (negated features) can be used for categorization purposes. Our empirical comparison with Naïve Bayes, decision trees, linear support vector machines (SVMs), random forest, long short-term memory (LSTM) neural networks, and other techniques is quite conclusive. The Tsetlin Machine either performs on par with or outperforms all of the evaluated methods on both the 20 Newsgroups and IMDb datasets, as well as on a non-public clinical dataset. On average, the Tsetlin Machine delivers the best recall and precision scores across the datasets. Finally, our GPU implementation of the Tsetlin Machine executes 5 to 15 times faster than the CPU implementation, depending on the dataset. We thus believe that our novel approach can have a significant impact on a wide range of text analysis applications, forming a promising starting point for deeper natural language understanding with the Tsetlin Machine.
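The rule quoted in the abstract (if "rash" and "reaction" and "penicillin" then Allergy) can be evaluated as a conjunctive clause over term-presence variables. The sketch below only shows how such a clause, once given, would be checked against a document; it does not show how the Tsetlin Machine learns it. The function name `clause_matches` and the negated term in the second example are illustrative assumptions.

```python
from typing import FrozenSet, Set


def clause_matches(
    terms: Set[str],
    required: FrozenSet[str],
    forbidden: FrozenSet[str] = frozenset(),
) -> bool:
    """A conjunctive clause: every required term present, no forbidden term present."""
    return required <= terms and forbidden.isdisjoint(terms)


# The example clause from the abstract, written as positive literals only.
ALLERGY = frozenset({"rash", "reaction", "penicillin"})

note = "patient developed a rash as a reaction to penicillin"
note_terms = set(note.split())

print(clause_matches(note_terms, ALLERGY))
# Hypothetical negated feature: the clause fails if "resolved" appears.
print(clause_matches(note_terms | {"resolved"}, ALLERGY, frozenset({"resolved"})))
```

Because the clause is just a set of required and forbidden terms, it can be read directly as a rule, which is the interpretability property the abstract emphasizes.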

Deep RTS: A Game Environment for Deep Reinforcement Learning in Real-Time Strategy Games

Aug 15, 2018

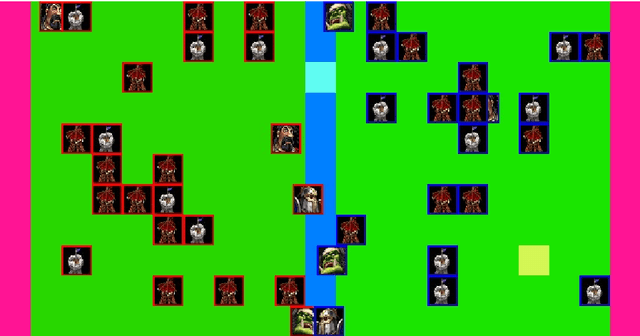

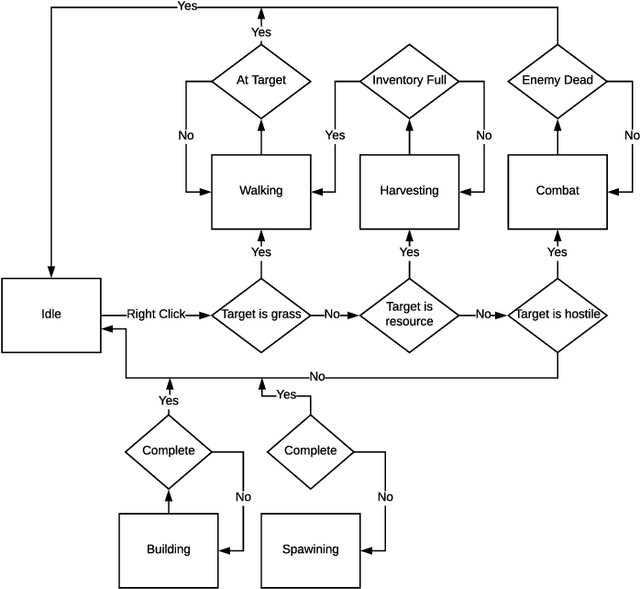

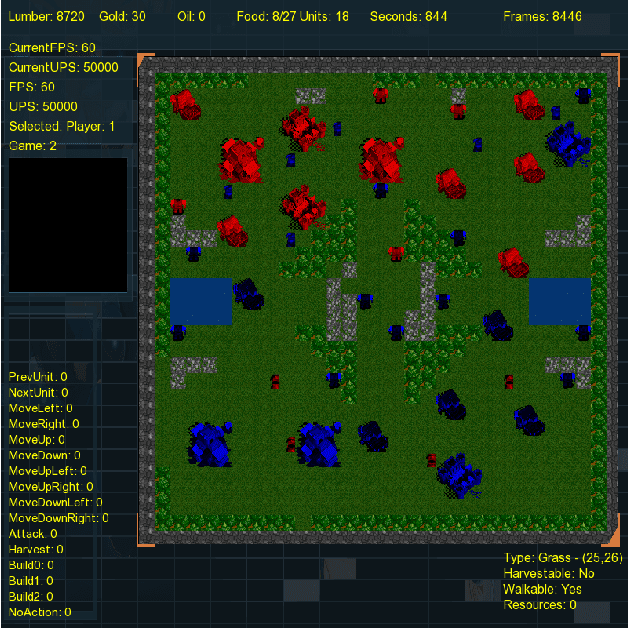

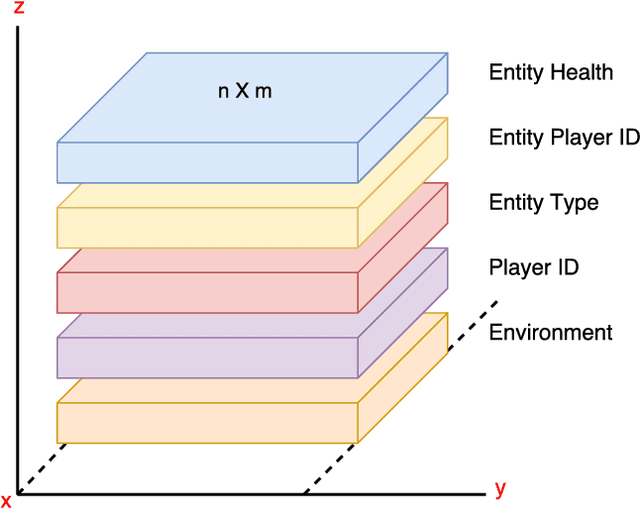

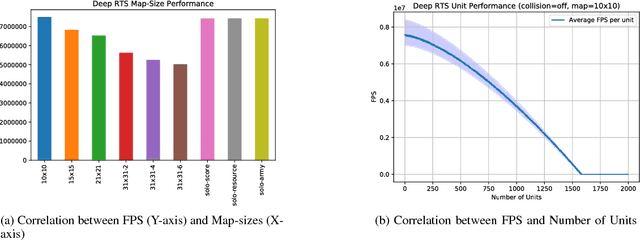

Abstract: Reinforcement learning (RL) is an area of research that has blossomed tremendously in recent years and has shown remarkable potential for artificial intelligence based opponents in computer games. This success is primarily due to the vast capabilities of convolutional neural networks, which can extract useful features from noisy and complex data. Games are excellent tools to test and push the boundaries of novel RL algorithms because they give valuable insight into how well an algorithm can perform in isolated environments without real-life consequences. Real-time strategy (RTS) games are a genre of tremendous complexity that challenges the player in short- and long-term planning. There is much research that focuses on applied RL in RTS games, and novel advances are therefore anticipated in the not too distant future. However, there are to date few environments for testing RTS AIs. Environments in the literature are often either overly simplistic, such as microRTS, or complex and without the possibility for accelerated learning on consumer hardware, like StarCraft II. This paper introduces the Deep RTS game environment for testing cutting-edge artificial intelligence algorithms for RTS games. Deep RTS is a high-performance RTS game made specifically for artificial intelligence research. It supports accelerated learning, meaning that it can learn on the order of 50 000 times faster than existing RTS games. Deep RTS has a flexible configuration, enabling research in several different RTS scenarios, including partially observable state-spaces and map complexity. We show that Deep RTS lives up to our promises by comparing its performance with microRTS, ELF, and StarCraft II on high-end consumer hardware. Using Deep RTS, we show that a Deep Q-Network agent beats random-play agents over 70% of the time. Deep RTS is publicly available at https://github.com/cair/DeepRTS.

The Tsetlin Machine - A Game Theoretic Bandit Driven Approach to Optimal Pattern Recognition with Propositional Logic

Apr 23, 2018

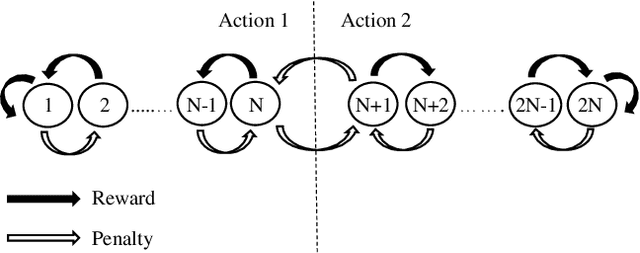

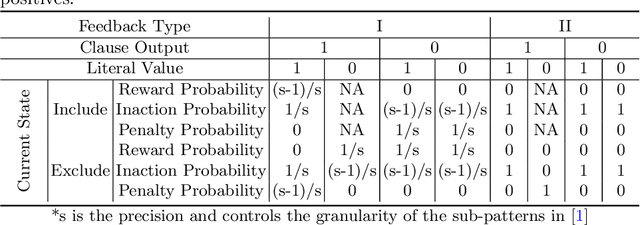

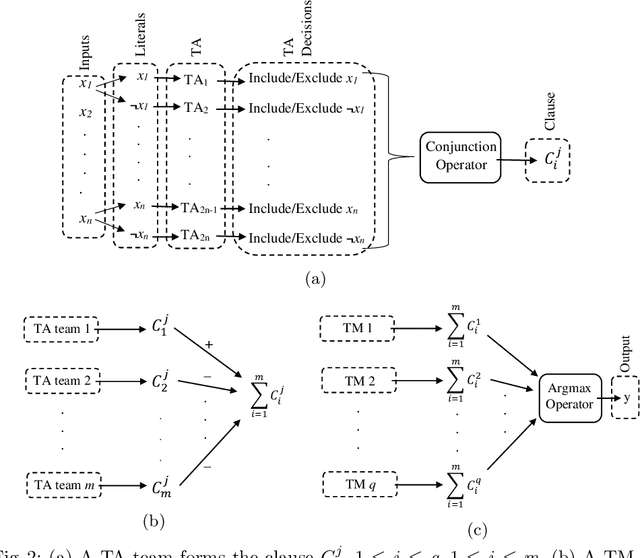

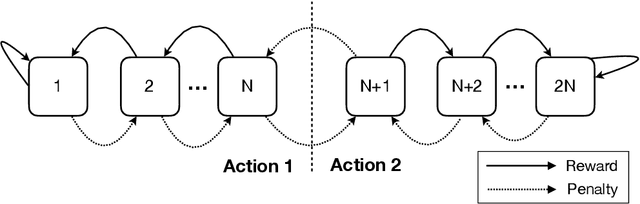

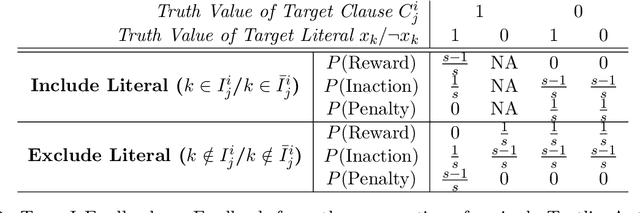

Abstract: Although simple individually, artificial neurons provide state-of-the-art performance when interconnected in deep networks. Unknown to many, there exists an arguably even simpler and more versatile learning mechanism, namely, the Tsetlin Automaton. Merely by means of a single integer as memory, it learns the optimal action in stochastic environments. In this paper, we introduce the Tsetlin Machine, which solves complex pattern recognition problems with easy-to-interpret propositional formulas, composed by a collective of Tsetlin Automata. To eliminate the longstanding problem of vanishing signal-to-noise ratio, the Tsetlin Machine orchestrates the automata using a novel game. Our theoretical analysis establishes that the Nash equilibria of the game are aligned with the propositional formulas that provide optimal pattern recognition accuracy. This translates to learning without local optima, only global ones. We argue that the Tsetlin Machine finds the propositional formula that provides optimal accuracy, with probability arbitrarily close to unity. In four distinct benchmarks, the Tsetlin Machine outperforms Neural Networks, SVMs, Random Forests, the Naive Bayes Classifier, and Logistic Regression. It further turns out that the accuracy advantage of the Tsetlin Machine increases with lack of data. The Tsetlin Machine has a significant computational performance advantage since inputs, patterns, and outputs are all expressed as bits, while recognition of patterns relies on bit manipulation. The combination of accuracy, interpretability, and computational simplicity makes the Tsetlin Machine a promising tool for a wide range of domains, including safety-critical medicine. Being the first of its kind, we believe the Tsetlin Machine will kick-start completely new paths of research, with a potentially significant impact on the AI field and the applications of AI.
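The claim that a Tsetlin Automaton learns with a single integer as memory can be made concrete with a sketch of the classic two-action automaton. This shows only one automaton, not the Tsetlin Machine or its game; the class and function names, the starting state, and the toy environment are assumptions made for illustration.

```python
import random
from typing import Sequence


class TsetlinAutomaton:
    """Two-action automaton whose only memory is one integer in 1..2n."""

    def __init__(self, n_states_per_action: int) -> None:
        self.n = n_states_per_action
        self.state = self.n  # assumption: start at the boundary, on the action-0 side

    def action(self) -> int:
        # States 1..n select action 0; states n+1..2n select action 1.
        return 0 if self.state <= self.n else 1

    def reward(self) -> None:
        # Reinforce the current action by moving away from the boundary.
        if self.state <= self.n:
            self.state = max(1, self.state - 1)
        else:
            self.state = min(2 * self.n, self.state + 1)

    def penalize(self) -> None:
        # Weaken the current action by moving towards (and possibly across) the boundary.
        if self.state <= self.n:
            self.state += 1
        else:
            self.state -= 1


def train(automaton: TsetlinAutomaton, reward_probs: Sequence[float],
          steps: int, rng: random.Random) -> None:
    """Toy stochastic environment: action a is rewarded with probability reward_probs[a]."""
    for _ in range(steps):
        if rng.random() < reward_probs[automaton.action()]:
            automaton.reward()
        else:
            automaton.penalize()


automaton = TsetlinAutomaton(n_states_per_action=10)
train(automaton, reward_probs=[0.1, 0.9], steps=1000, rng=random.Random(0))
print(automaton.action(), automaton.state)
```

The deeper the state sits on one side of the boundary, the more penalties it takes to switch action, which is how the integer encodes confidence.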

FlashRL: A Reinforcement Learning Platform for Flash Games

Jan 26, 2018

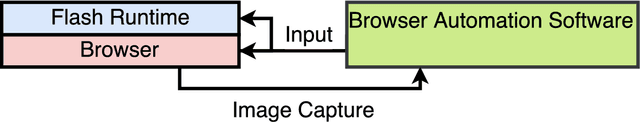

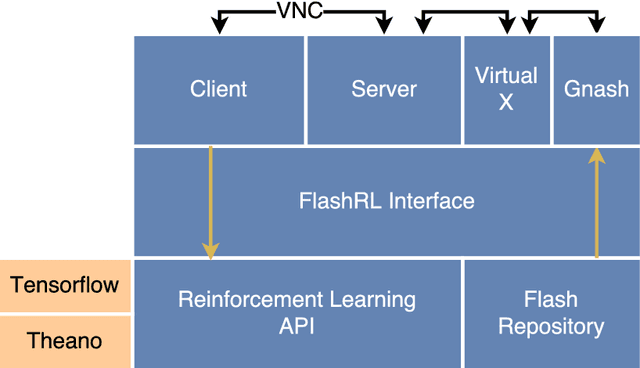

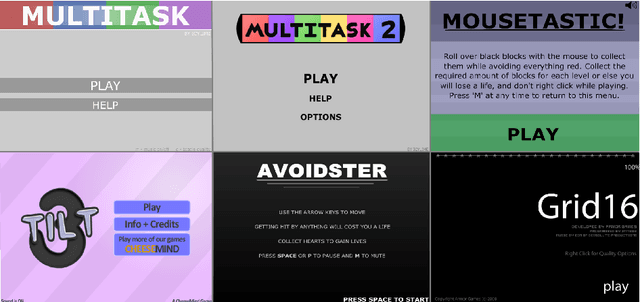

Abstract: Reinforcement Learning (RL) is a research area that has blossomed tremendously in recent years and has shown remarkable potential in, among other things, successfully playing computer games. However, there exist only a few game platforms that provide the diversity in tasks and state-space needed to advance RL algorithms. The existing platforms offer RL access to Atari games and a few web-based games, but no platform fully exposes access to Flash games. This is unfortunate because applying RL to Flash games has the potential to push the research of RL algorithms. This paper introduces the Flash Reinforcement Learning platform (FlashRL), which attempts to fill this gap by providing an environment for thousands of Flash games on a novel platform for Flash automation. It opens up easy experimentation with RL algorithms for Flash games, which has previously been challenging. The platform shows excellent performance with as little as 5% CPU utilization on consumer hardware. It shows promising results for novel reinforcement learning algorithms.

Towards a Deep Reinforcement Learning Approach for Tower Line Wars

Dec 17, 2017

Abstract: There have been numerous breakthroughs with reinforcement learning in recent years, perhaps most notably Deep Reinforcement Learning successfully playing and winning relatively advanced computer games. There is undoubtedly an anticipation that Deep Reinforcement Learning will play a major role when the first AI masters the complicated gameplay needed to beat a professional Real-Time Strategy game player. For this to be possible, there needs to be a game environment that targets and fosters AI research, and specifically Deep Reinforcement Learning. Some game environments already exist; however, these are either overly simplistic, such as Atari 2600, or complex, such as Starcraft II from Blizzard Entertainment. We propose a game environment in between Atari 2600 and Starcraft II, particularly targeting Deep Reinforcement Learning algorithm research. The environment is a variant of Tower Line Wars from Warcraft III, Blizzard Entertainment. Further, as a proof of concept that the environment can harbor Deep Reinforcement Learning algorithms, we propose and apply a Deep Q-Reinforcement architecture. The architecture simplifies the state space so that it is applicable to Q-learning, and in turn improves performance compared to current state-of-the-art methods. Our experiments show that the proposed architecture can learn to play the environment well, and score 33% better than standard Deep Q-learning, which in turn proves the usefulness of the game environment.

Thompson Sampling Guided Stochastic Searching on the Line for Deceptive Environments with Applications to Root-Finding Problems

Aug 05, 2017

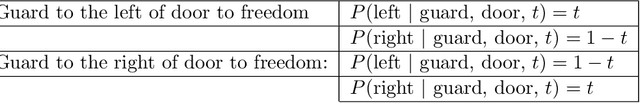

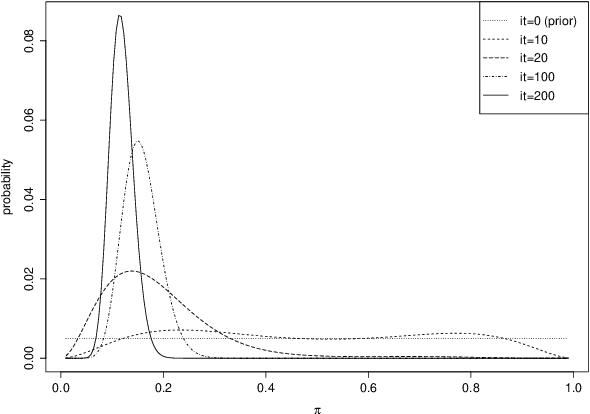

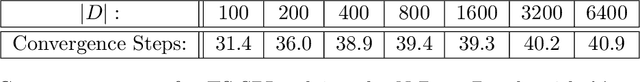

Abstract: The multi-armed bandit problem forms the foundation for solving a wide range of on-line stochastic optimization problems through a simple, yet effective mechanism. One simply casts the problem as a gambler that repeatedly pulls one out of N slot machine arms, eliciting random rewards. Learning of reward probabilities is then combined with reward maximization, by carefully balancing reward exploration against reward exploitation. In this paper, we address a particularly intriguing variant of the multi-armed bandit problem, referred to as the *Stochastic Point Location (SPL) Problem*. The gambler is here only told whether the optimal arm (point) lies to the "left" or to the "right" of the arm pulled, with the feedback being erroneous with probability $1-\pi$. This formulation thus captures optimization in continuous action spaces with both *informative* and *deceptive* feedback. To tackle this class of problems, we formulate a compact and scalable Bayesian representation of the solution space that simultaneously captures both the location of the optimal arm as well as the probability of receiving correct feedback. We further introduce the accompanying Thompson Sampling guided Stochastic Point Location (TS-SPL) scheme for balancing exploration against exploitation. By learning $\pi$, TS-SPL also supports *deceptive* environments that are lying about the direction of the optimal arm. This, in turn, allows us to solve the fundamental Stochastic Root Finding (SRF) Problem. Empirical results demonstrate that our scheme deals with both deceptive and informative environments, significantly outperforming competing algorithms both for SRF and SPL.
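The feedback model described in the abstract (a "left" or "right" hint that is wrong with probability $1-\pi$) can be simulated in a few lines. This is a sketch of the environment only, not of the TS-SPL scheme; the function name `spl_feedback` and the tie-breaking rule when the guess equals the optimum are assumptions.

```python
import random


def spl_feedback(guess: float, optimum: float, pi: float, rng: random.Random) -> str:
    """Return 'left' or 'right'; the hint is correct with probability pi.

    Assumption: if guess == optimum the true direction is arbitrarily taken as 'left'.
    """
    truth = "right" if optimum > guess else "left"
    if rng.random() < pi:
        return truth
    return "left" if truth == "right" else "right"


rng = random.Random(0)
# Informative environment (pi > 0.5): most hints point towards the optimum.
informative = [spl_feedback(0.2, 0.7, pi=0.8, rng=rng) for _ in range(1000)]
# Deceptive environment (pi < 0.5): most hints point away from the optimum.
deceptive = [spl_feedback(0.2, 0.7, pi=0.2, rng=rng) for _ in range(1000)]
print(informative.count("right") / 1000, deceptive.count("right") / 1000)
```

A learner that estimates $\pi$ alongside the optimum's location can, in principle, invert the hints when $\pi < 0.5$, which is the situation the abstract calls deceptive.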

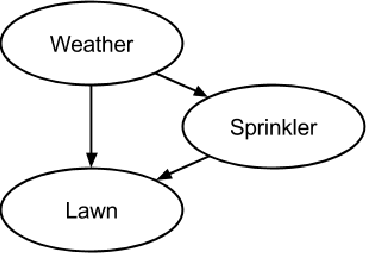

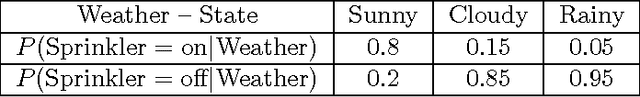

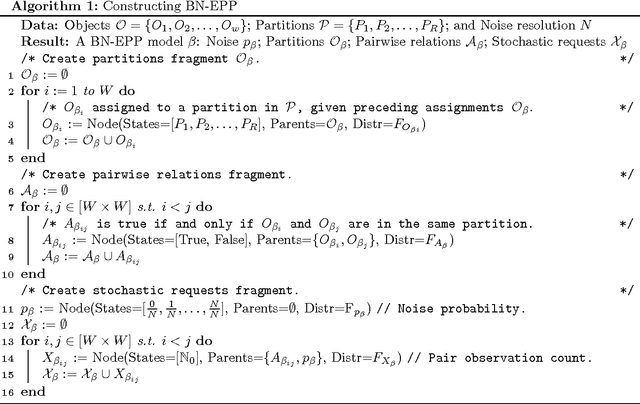

An Optimal Bayesian Network Based Solution Scheme for the Constrained Stochastic On-line Equi-Partitioning Problem

Jul 11, 2017

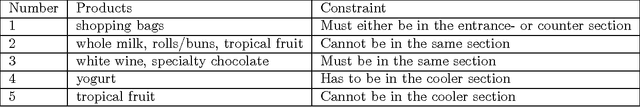

Abstract: A number of intriguing decision scenarios revolve around partitioning a collection of objects to optimize some application-specific objective function. This problem is generally referred to as the Object Partitioning Problem (OPP) and is known to be NP-hard. We here consider a particularly challenging version of OPP, namely, the Stochastic On-line Equi-Partitioning Problem (SO-EPP). In SO-EPP, the target partitioning is unknown and has to be inferred purely from observing an on-line sequence of object pairs. The paired objects belong to the same partition with probability $p$ and to different partitions with probability $1-p$, with $p$ also being unknown. As an additional complication, the partitions are required to be of equal cardinality. Previously, only sub-optimal solution strategies have been proposed for SO-EPP. In this paper, we propose the first optimal solution strategy. In brief, the scheme that we propose, BN-EPP, is founded on a Bayesian network representation of SO-EPP problems. Based on probabilistic reasoning, we are able not only to infer the underlying object partitioning with optimal accuracy, but also to simultaneously infer $p$, allowing us to accelerate learning as object pairs arrive. Furthermore, our scheme is the first to support arbitrary constraints on the partitioning (Constrained SO-EPP). Being optimal, BN-EPP provides superior performance compared to existing solution schemes. We additionally introduce Walk-BN-EPP, a novel WalkSAT inspired algorithm for solving large scale BN-EPP problems. Finally, we provide a BN-EPP based solution to the problem of order picking, a representative real-life application of BN-EPP.
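The observation model of SO-EPP stated in the abstract (a pair comes from the same partition with probability $p$, otherwise from different partitions) can be sketched as a pair generator. This simulates the input stream only and says nothing about how BN-EPP performs inference; the function name `sample_pair` and the uniform choice of partitions and objects are assumptions.

```python
import random
from typing import List, Tuple


def sample_pair(partitions: List[List[str]], p: float,
                rng: random.Random) -> Tuple[str, str]:
    """Draw one object pair: same partition with probability p, else different ones.

    Assumes at least two partitions, each holding at least two objects, and
    uniform sampling of partitions and objects (not specified in the abstract).
    """
    if rng.random() < p:
        part = rng.choice(partitions)
        a, b = rng.sample(part, 2)
    else:
        part_a, part_b = rng.sample(partitions, 2)
        a, b = rng.choice(part_a), rng.choice(part_b)
    return a, b


# Hidden target partitioning with equal cardinality, as SO-EPP requires.
hidden = [["a", "b", "c"], ["d", "e", "f"]]
rng = random.Random(0)
stream = [sample_pair(hidden, p=0.9, rng=rng) for _ in range(5)]
print(stream)
```

A solver sees only `stream` and must recover both `hidden` and `p` from it.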

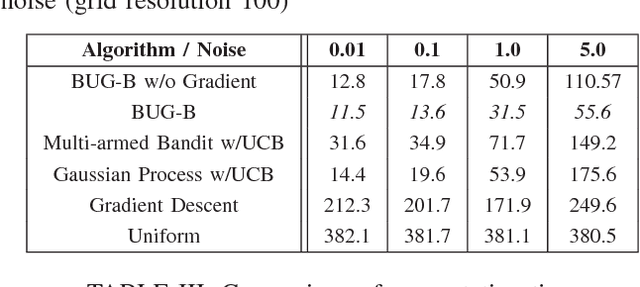

Bayesian Unification of Gradient and Bandit-based Learning for Accelerated Global Optimisation

May 28, 2017

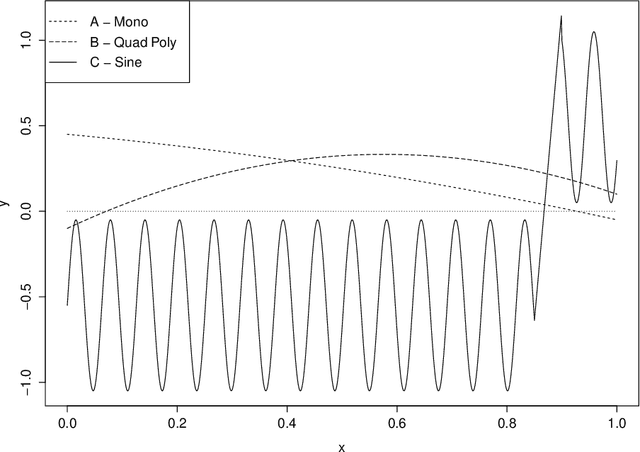

Abstract: Bandit based optimisation has a remarkable advantage over gradient based approaches due to its global perspective, which eliminates the danger of getting stuck at local optima. However, for continuous optimisation problems or problems with a large number of actions, bandit based approaches can be hindered by slow learning. Gradient based approaches, on the other hand, navigate quickly in high-dimensional continuous spaces through local optimisation, following the gradient in fine grained steps. Yet, apart from being susceptible to local optima, these schemes are less suited for online learning due to their reliance on extensive trial-and-error before the optimum can be identified. In this paper, we propose a Bayesian approach that unifies the above two paradigms in one single framework, with the aim of combining their advantages. At the heart of our approach we find a stochastic linear approximation of the function to be optimised, where both the gradient and values of the function are explicitly captured. This allows us to learn from both noisy function and gradient observations, and predict these properties across the action space to support optimisation. We further propose an accompanying bandit driven exploration scheme that uses Bayesian credible bounds to trade off exploration against exploitation. Our empirical results demonstrate that by unifying bandit and gradient based learning, one obtains consistently improved performance across a wide spectrum of problem environments. Furthermore, even when gradient feedback is unavailable, the flexibility of our model, including gradient prediction, still allows us to outperform competing approaches, although with a smaller margin. Due to the pervasiveness of bandit based optimisation, our scheme opens the way to improved performance both in meta-optimisation and in applications where gradient related information is readily available.
