Norman Ettrich

In-Context Learning for Seismic Data Processing

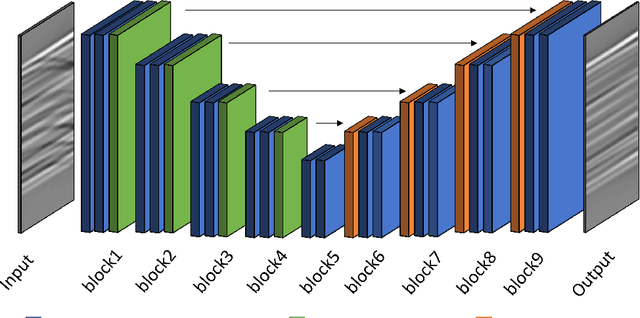

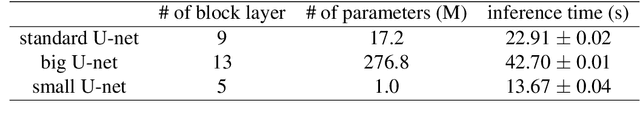

Dec 19, 2025

Abstract: Seismic processing transforms raw data into subsurface images essential for geophysical applications. Traditional methods face challenges such as noisy data and manual parameter tuning. Recently, deep learning approaches have offered alternative solutions to some of these problems. However, existing deep learning approaches still have two important limitations: spatially inconsistent results across neighboring seismic gathers and a lack of user control. We address these limitations by introducing ContextSeisNet, an in-context learning model, to seismic demultiple processing. Our approach conditions predictions on a support set of spatially related example pairs: neighboring common-depth point gathers from the same seismic line and their corresponding labels. This allows the model to learn task-specific processing behavior at inference time by observing how similar gathers should be processed, without any retraining. The method provides both flexibility through user-defined examples and improved lateral consistency across seismic lines. On synthetic data, ContextSeisNet outperforms a U-Net baseline quantitatively and demonstrates enhanced spatial coherence between neighboring gathers. On field data, our model achieves superior lateral consistency compared to both traditional Radon demultiple and the U-Net baseline. Relative to the U-Net, ContextSeisNet also delivers improved near-offset performance and more complete multiple removal. Notably, ContextSeisNet achieves comparable field data performance despite being trained on 90% less data, demonstrating substantial data efficiency. These results establish ContextSeisNet as a practical approach for spatially consistent seismic demultiple with potential applicability to other seismic processing tasks.
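The support-set idea described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names `select_support` and `build_context`, the dictionary layout, and the nearest-neighbor selection rule are all assumptions made for illustration. The sketch only shows how labeled neighboring common-depth point (CDP) gathers from the same line might be paired with a query gather before being passed to an in-context model.

```python
# Hypothetical sketch: assemble a support set of neighboring CDP gathers.
# Names and the nearest-neighbor rule are illustrative assumptions,
# not taken from the ContextSeisNet paper.

def select_support(query_cdp, labeled_cdps, k=2):
    """Return the k labeled CDP numbers closest to query_cdp along the line.

    Ties in distance are broken by the smaller CDP number.
    """
    return sorted(labeled_cdps, key=lambda c: (abs(c - query_cdp), c))[:k]


def build_context(query_cdp, gathers, labels, k=2):
    """Pair each selected support gather with its label and attach the query.

    gathers: dict mapping CDP number -> gather (any array-like object)
    labels:  dict mapping CDP number -> processed (e.g. demultipled) gather
    """
    support = select_support(query_cdp, sorted(labels), k)
    pairs = [(gathers[c], labels[c]) for c in support]
    return {"support_pairs": pairs, "query": gathers[query_cdp]}


# Toy usage with strings standing in for gather arrays.
gathers = {100: "g100", 105: "g105", 110: "g110", 150: "g150"}
labels = {100: "y100", 110: "y110", 150: "y150"}
context = build_context(105, gathers, labels, k=2)
```

In this toy setup the user controls the result by choosing which gathers receive labels; changing the labeled examples changes the context without touching any model weights, which mirrors the "no retraining" property the abstract describes.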

Foundation Models For Seismic Data Processing: An Extensive Review

Mar 31, 2025

Abstract: Seismic processing plays a crucial role in transforming raw data into high-quality subsurface images, pivotal for various geoscience applications. Despite its importance, traditional seismic processing techniques face challenges such as noisy and damaged data and the reliance on manual, time-consuming workflows. The emergence of deep learning approaches has introduced effective and user-friendly alternatives, yet many of these approaches rely on synthetic datasets and specialized neural networks. Recently, foundation models have gained traction in the seismic domain due to their success in natural imaging. This paper investigates the application of foundation models in seismic processing on three tasks: demultiple, interpolation, and denoising. It evaluates the impact of different model characteristics, such as pre-training technique and neural network architecture, on performance and efficiency. Rather than proposing a single seismic foundation model, this paper critically examines various natural image foundation models and suggests some promising candidates for future exploration.

Deep Diffusion Models for Seismic Processing

Jul 21, 2022

Abstract: Seismic data processing involves techniques to deal with undesired effects that occur during acquisition and pre-processing. These effects mainly comprise coherent artefacts such as multiples, non-coherent signals such as electrical noise, and loss of signal information at the receivers that leads to incomplete traces. In the past years, there has been a remarkable increase in machine-learning-based solutions that address these issues. In particular, deep-learning practitioners have usually relied on heavily fine-tuned, customized discriminative algorithms. Although these methods can provide solid results, they seem to lack semantic understanding of the provided data. Motivated by this limitation, in this work we employ a generative solution, as it can explicitly model complex data distributions and hence lead to a better decision-making process. In particular, we introduce diffusion models for three seismic applications: demultiple, denoising, and interpolation. To that end, we run experiments on synthetic and on real data, and we compare the diffusion performance with standardized algorithms. We believe that our pioneering study not only demonstrates the capability of diffusion models, but also opens the door to future research on integrating generative models into seismic workflows.
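As a rough illustration of what a diffusion model operates on, the sketch below applies the forward (noising) step of a standard denoising diffusion formulation to a single toy trace. The abstract does not state which diffusion formulation or noise schedule the paper uses, so the closed form x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * eps, the schedule values, and the function names `alpha_bars` and `noise_trace` are assumptions for illustration only.

```python
import math
import random

# Hypothetical sketch of the forward noising step in a standard
# denoising diffusion setup; not taken from the paper itself.

def alpha_bars(betas):
    """Cumulative products of (1 - beta_t) for a given noise schedule."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def noise_trace(trace, alpha_bar, rng):
    """Sample x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * eps."""
    signal_scale = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    return [signal_scale * x + noise_scale * rng.gauss(0.0, 1.0) for x in trace]


# Toy usage: a short "trace" noised at the last step of a 10-step linear schedule.
betas = [0.01 + 0.02 * t for t in range(10)]   # assumed schedule values
schedule = alpha_bars(betas)
rng = random.Random(0)
noisy = noise_trace([0.0, 1.0, -0.5, 0.25], schedule[-1], rng)
```

A generative model trained to reverse this process learns to recover clean data from noisy data step by step; how that reverse process is conditioned for demultiple, denoising, or interpolation is specific to the paper and is not shown here.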

Dissecting U-net for Seismic Application: An In-Depth Study on Deep Learning Multiple Removal

Jun 24, 2022

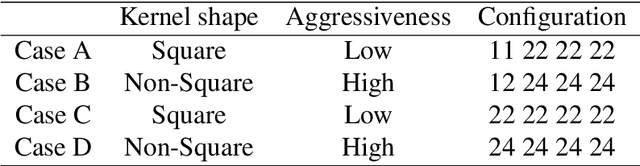

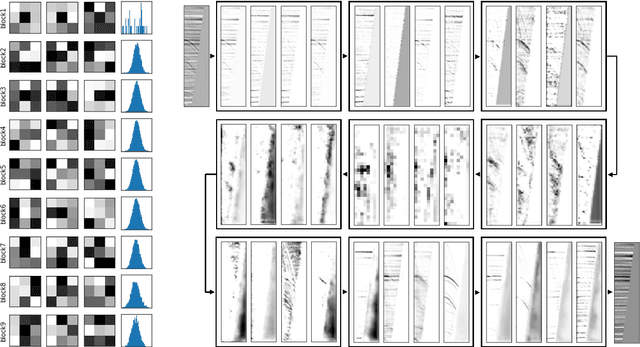

Abstract: Seismic processing often requires suppressing multiples that appear when collecting data. To tackle these artifacts, practitioners usually rely on Radon transform-based algorithms as post-migration gather conditioning. However, such traditional approaches are both time-consuming and parameter-dependent, making them fairly complex. In this work, we present a deep learning-based alternative that provides competitive results while being simpler to use, thereby democratizing its applicability. We observe excellent performance of our network when inferring complex field data, despite it being trained solely on synthetic data. Furthermore, extensive experiments show that our proposal can preserve the inherent characteristics of the data, avoiding undesired over-smoothed results, while removing the multiples. Finally, we conduct an in-depth analysis of the model, in which we relate the effects of the main hyperparameters to physical events. To the best of our knowledge, this study pioneers the unboxing of neural networks for the demultiple process, helping the user gain insight into the inner workings of the network.
