Nikolas Papadopoulos

Hephaestus Minicubes: A Global, Multi-Modal Dataset for Volcanic Unrest Monitoring

May 23, 2025

Abstract: Ground deformation is regarded in volcanology as a key precursor signal preceding volcanic eruptions. Satellite-based Interferometric Synthetic Aperture Radar (InSAR) enables consistent, global-scale deformation tracking; however, deep learning methods remain largely unexplored in this domain, mainly due to the lack of a curated machine learning dataset. In this work, we build on the existing Hephaestus dataset, and introduce Hephaestus Minicubes, a global collection of 38 spatiotemporal datacubes offering high resolution, multi-source and multi-temporal information, covering 44 of the world's most active volcanoes over a 7-year period. Each spatiotemporal datacube integrates InSAR products, topographic data, as well as atmospheric variables which are known to introduce signal delays that can mimic ground deformation in InSAR imagery. Furthermore, we provide expert annotations detailing the type, intensity and spatial extent of deformation events, along with rich text descriptions of the observed scenes. Finally, we present a comprehensive benchmark, demonstrating Hephaestus Minicubes' ability to support volcanic unrest monitoring as a multi-modal, multi-temporal classification and semantic segmentation task, establishing strong baselines with state-of-the-art architectures. This work aims to advance machine learning research in volcanic monitoring, contributing to the growing integration of data-driven methods within Earth science applications.

Fixating on Attention: Integrating Human Eye Tracking into Vision Transformers

Aug 26, 2023

Abstract: Modern transformer-based models designed for computer vision have outperformed humans across a spectrum of visual tasks. However, critical tasks, such as medical image interpretation or autonomous driving, still require reliance on human judgments. This work demonstrates how human visual input, specifically fixations collected from an eye-tracking device, can be integrated into transformer models to improve accuracy across multiple driving situations and datasets. First, we establish the significance of fixation regions in left-right driving decisions, as observed in both human subjects and a Vision Transformer (ViT). By comparing the similarity between human fixation maps and ViT attention weights, we reveal the dynamics of overlap across individual heads and layers. This overlap is exploited for model pruning without compromising accuracy. Thereafter, we incorporate information from the driving scene with fixation data, employing a "joint space-fixation" (JSF) attention setup. Lastly, we propose a "fixation-attention intersection" (FAX) loss to train the ViT model to attend to the same regions that humans fixated on. We find that ViT performance improves in both accuracy and the number of training epochs required when using JSF and FAX. These results hold significant implications for human-guided artificial intelligence.
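As a rough illustration of comparing a human fixation map with per-head attention maps, the sketch below ranks heads by their overlap with a fixation map. The abstract does not state which similarity measure is used, so cosine similarity is an assumption here, and the function names `cosine_similarity` and `rank_heads_by_overlap` are hypothetical, as is the representation of maps as nested lists.

```python
import math


def cosine_similarity(map_a, map_b):
    """Cosine similarity between two equally sized 2D maps (lists of rows).

    Assumption: cosine similarity stands in for whatever overlap
    measure the paper actually uses.
    """
    flat_a = [v for row in map_a for v in row]
    flat_b = [v for row in map_b for v in row]
    if len(flat_a) != len(flat_b):
        raise ValueError("maps must have the same number of cells")
    dot = sum(x * y for x, y in zip(flat_a, flat_b))
    norm_a = math.sqrt(sum(x * x for x in flat_a))
    norm_b = math.sqrt(sum(y * y for y in flat_b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero map overlaps with nothing
    return dot / (norm_a * norm_b)


def rank_heads_by_overlap(fixation_map, head_attention_maps):
    """Return (head_name, similarity) pairs, highest overlap first.

    head_attention_maps: dict mapping a hypothetical head name to its
    attention map, already resized to the fixation map's grid.
    """
    scores = {
        name: cosine_similarity(fixation_map, attn)
        for name, attn in head_attention_maps.items()
    }
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


# Toy 2x2 example: head "h0" attends exactly where the human fixated.
fixation = [[0, 1], [0, 0]]
heads = {"h0": [[0, 1], [0, 0]], "h1": [[1, 0], [0, 0]]}
ranked = rank_heads_by_overlap(fixation, heads)
```

A ranking of this kind is one plausible input to a pruning decision, for example dropping the heads with the lowest overlap, though the pruning criterion in the paper may differ.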

Machine Learning Method for Functional Assessment of Retinal Models

Feb 05, 2022

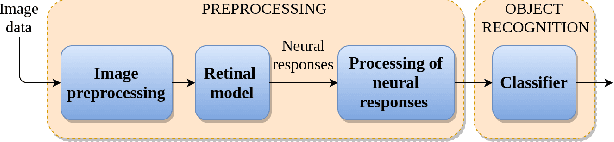

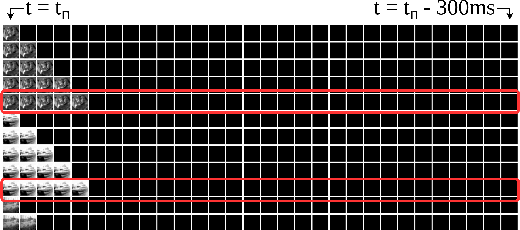

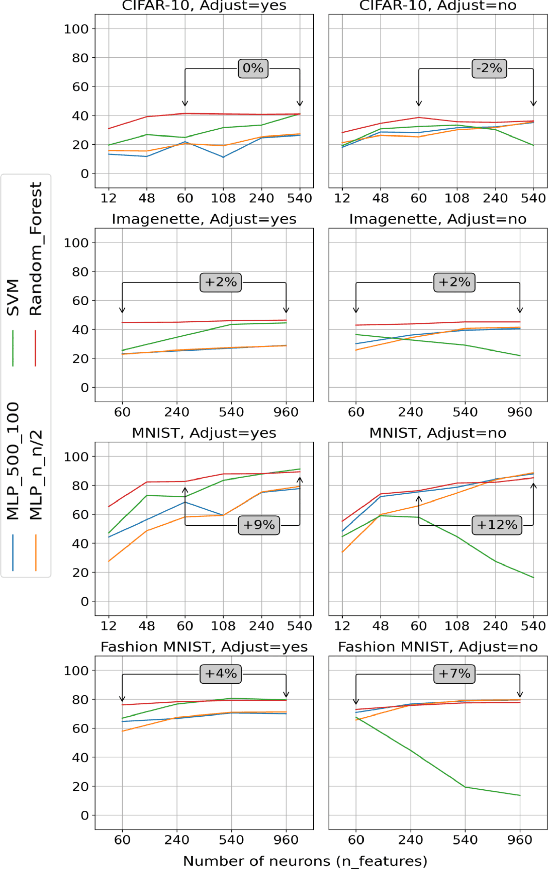

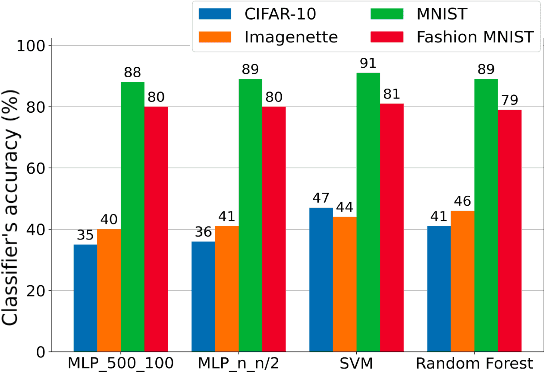

Abstract: Challenges in the field of retinal prostheses motivate the development of retinal models that accurately simulate Retinal Ganglion Cell (RGC) responses. The goal of retinal prostheses is to enable blind individuals to solve complex, real-life visual tasks. In this paper, we introduce the functional assessment (FA) of retinal models, which describes the concept of evaluating the performance of retinal models on visual understanding tasks. We present a machine learning method for FA: we feed traditional machine learning classifiers with RGC responses generated by retinal models, to solve object and digit recognition tasks (CIFAR-10, MNIST, Fashion MNIST, Imagenette). We examined critical FA aspects, including how the performance of FA depends on the task, how to optimally feed RGC responses to the classifiers and how the number of output neurons correlates with the model's accuracy. To increase the number of output neurons, we split the input images and fed the resulting pieces to the retinal model, and we found that image splitting does not significantly improve the model's accuracy. We also show that differences in the structure of datasets result in largely divergent performance of the retinal model (MNIST and Fashion MNIST exceeded 80% accuracy, while CIFAR-10 and Imagenette achieved ~40%). Furthermore, retinal models which perform better in standard evaluation, i.e. more accurately predict RGC responses, perform better in FA as well. However, unlike standard evaluation, FA results can be straightforwardly interpreted in the context of comparing the quality of visual perception.
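The FA pipeline described above (images, then simulated RGC responses, then a traditional classifier, then task accuracy) can be sketched as follows. Everything here is illustrative: `toy_retinal_model` is a hypothetical stand-in and not a real retinal model, and the nearest-centroid classifier is an assumed choice, since the abstract does not name the classifiers used.

```python
def toy_retinal_model(image):
    """Hypothetical stand-in for a retinal model.

    Emits one 'RGC response' per pixel: the rectified difference
    between the pixel value and the image mean.
    """
    flat = [v for row in image for v in row]
    mean = sum(flat) / len(flat)
    return [max(0.0, v - mean) for v in flat]


def fit_centroids(responses, labels):
    """Nearest-centroid training: average the responses of each class."""
    sums, counts = {}, {}
    for resp, label in zip(responses, labels):
        if label not in sums:
            sums[label] = [0.0] * len(resp)
            counts[label] = 0
        sums[label] = [s + v for s, v in zip(sums[label], resp)]
        counts[label] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def predict(centroids, response):
    """Return the label whose centroid is closest in squared distance."""
    def sq_dist(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, response))
    return min(centroids, key=lambda y: sq_dist(centroids[y]))


def functional_assessment(model, train, test):
    """Accuracy of a classifier trained on the model's responses."""
    train_resp = [model(img) for img, _ in train]
    centroids = fit_centroids(train_resp, [y for _, y in train])
    correct = sum(predict(centroids, model(img)) == y for img, y in test)
    return correct / len(test)


# Toy 2x2 "images" with two classes (bright top-left vs bright bottom-right).
train = [([[1, 0], [0, 0]], "a"), ([[0, 0], [0, 1]], "b")]
test = [([[0.9, 0], [0, 0.1]], "a"), ([[0, 0.1], [0, 0.8]], "b")]
accuracy = functional_assessment(toy_retinal_model, train, test)
```

Because the score is the accuracy on the downstream task, swapping in a different retinal model for `toy_retinal_model` while keeping the classifier and data fixed yields directly comparable numbers, which is the interpretability property the abstract attributes to FA.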
