Nicolas Ewen

Inheritance Between Feedforward and Convolutional Networks via Model Projection

Feb 05, 2026

Abstract: Techniques for feedforward networks (FFNs) and convolutional networks (CNNs) are frequently reused across families, but the relationship between the underlying model classes is rarely made explicit. We introduce a unified node-level formalization with tensor-valued activations and show that generalized feedforward networks form a strict subset of generalized convolutional networks. Motivated by the mismatch in per-input parameterization between the two families, we propose model projection, a parameter-efficient transfer learning method for CNNs that freezes pretrained per-input-channel filters and learns a single scalar gate for each (output channel, input channel) contribution. Projection keeps all convolutional layers adaptable to downstream tasks while substantially reducing the number of trained parameters in convolutional layers. We prove that projected nodes take the generalized FFN form, enabling projected CNNs to inherit feedforward techniques that do not rely on homogeneous layer inputs. Experiments across multiple ImageNet-pretrained backbones and several downstream image classification datasets show that model projection is a strong transfer learning baseline under simple training recipes.
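The gating idea in this abstract can be illustrated with a minimal pure-Python sketch. It is one reading of the abstract, not the paper's implementation: the function names (`conv_weight_count`, `projected_gate_count`, `projected_conv`), the "valid" cross-correlation, and the omission of bias terms are all assumptions made for illustration. Each pretrained filter stays fixed, and the only trainable quantity is one scalar per (output channel, input channel) pair that scales that filter's contribution.

```python
def conv_weight_count(c_out, c_in, k):
    """Weights in a standard k x k convolution layer (bias ignored)."""
    return c_out * c_in * k * k


def projected_gate_count(c_out, c_in):
    """Trainable scalars when each (output, input) channel pair gets one gate."""
    return c_out * c_in


def correlate2d_valid(x, w):
    """'Valid' 2D cross-correlation of one input channel with one filter."""
    kh, kw = len(w), len(w[0])
    out_h, out_w = len(x) - kh + 1, len(x[0]) - kw + 1
    return [
        [
            sum(x[i + a][j + b] * w[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def projected_conv(x, frozen_filters, gates):
    """Hypothetical gated convolution.

    x:              [c_in][H][W] input activations
    frozen_filters: [c_out][c_in][k][k] pretrained weights, never updated
    gates:          [c_out][c_in] scalars, the only trainable parameters
    """
    outputs = []
    for o, filters_o in enumerate(frozen_filters):
        acc = None
        for c, w in enumerate(filters_o):
            contrib = correlate2d_valid(x[c], w)
            g = gates[o][c]
            if acc is None:
                acc = [[g * v for v in row] for row in contrib]
            else:
                acc = [
                    [a + g * v for a, v in zip(row_a, row_c)]
                    for row_a, row_c in zip(acc, contrib)
                ]
        outputs.append(acc)
    return outputs


# One input channel, one output channel, a 2x2 frozen filter, and a gate of 2.0.
x = [[[1.0, 2.0], [3.0, 4.0]]]
frozen = [[[[1.0, 0.0], [0.0, 1.0]]]]
y = projected_conv(x, frozen, gates=[[2.0]])
```

Under these assumptions, a 3x3 layer with 64 input and 64 output channels has 64 * 64 * 3 * 3 frozen weights but only 64 * 64 trainable gates, a ninefold reduction in trained parameters for that layer. Setting every gate to 1 recovers the original pretrained convolution.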

Online unsupervised Learning for domain shift in COVID-19 CT scan datasets

Jul 31, 2021

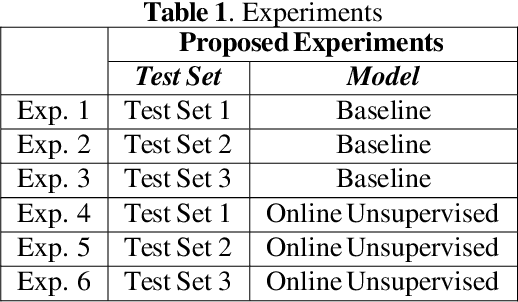

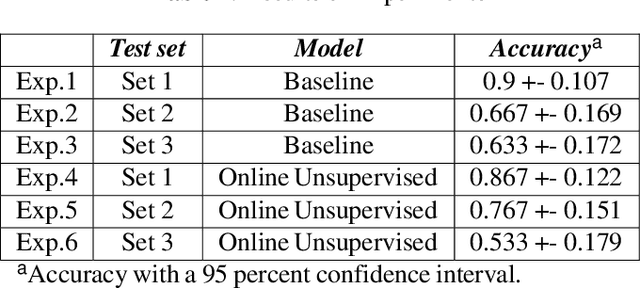

Abstract: Neural networks often require large amounts of expert-annotated data to train. When changes are made in the process of medical imaging, trained networks may not perform as well, and obtaining large amounts of expert annotations for each change in the imaging process can be time-consuming and expensive. Online unsupervised learning is a method that has been proposed to deal with situations where there is a domain shift in incoming data and a lack of annotations. The aim of this study is to see whether online unsupervised learning can help COVID-19 CT scan classification models adjust to slight domain shifts when there are no annotations available for the new data. A total of six experiments are performed using three test datasets with differing amounts of domain shift. These experiments compare the performance of the online unsupervised learning strategy to a baseline and compare how the strategy performs on different domain shifts. Code for online unsupervised learning can be found at this link: https://github.com/Mewtwo/online-unsupervised-learning

Targeted Self Supervision for Classification on a Small COVID-19 CT Scan Dataset

Nov 20, 2020

Abstract: Traditionally, convolutional neural networks need large amounts of human-labelled data to train. Self supervision has been proposed as a method of dealing with small amounts of labelled data. The aim of this study is to determine whether self supervision can increase classification performance on a small COVID-19 CT scan dataset. This study also aims to determine whether the proposed self supervision strategy, targeted self supervision, is a viable option for a COVID-19 imaging dataset. A total of 10 experiments are run comparing the classification performance of the proposed method of self supervision with different amounts of data. The experiments run with the proposed self supervision strategy perform significantly better than their non-self supervised counterparts. We observe an increase of almost 8% in accuracy with full self supervision compared to no self supervision. The results suggest that self supervision can improve classification performance on a small COVID-19 CT scan dataset. Code for targeted self supervision can be found at this link: https://github.com/Mewtwo/Targeted-Self-Supervision/tree/main/COVID-CT
