Ngoc Huy Nguyen

DIPP: Discriminative Impact Point Predictor for Catching Diverse In-Flight Objects

Sep 18, 2025

Abstract:In this study, we address the problem of in-flight object catching using a quadruped robot with a basket. Our objective is to accurately predict the impact point, defined as the object's landing position. This task poses two key challenges: the absence of public datasets capturing diverse objects under unsteady aerodynamics, which are essential for training reliable predictors; and the difficulty of accurate early-stage impact point prediction when trajectories appear similar across objects. To overcome these issues, we construct a real-world dataset of 8,000 trajectories from 20 objects, providing a foundation for advancing in-flight object catching under complex aerodynamics. We then propose the Discriminative Impact Point Predictor (DIPP), consisting of two modules: (i) a Discriminative Feature Embedding (DFE) that separates trajectories by dynamics to enable early-stage discrimination and generalization, and (ii) an Impact Point Predictor (IPP) that estimates the impact point from these features. Two IPP variants are implemented: an Neural Acceleration Estimator (NAE)-based method that predicts trajectories and derives the impact point, and a Direct Point Estimator (DPE)-based method that directly outputs it. Experimental results show that our dataset is more diverse and complex than existing dataset, and that our method outperforms baselines on both 15 seen and 5 unseen objects. Furthermore, we show that improved early-stage prediction enhances catching success in simulation and demonstrate the effectiveness of our approach through real-world experiments. The demonstration is available at https://sites.google.com/view/robot-catching-2025.

A clinical validation of VinDr-CXR, an AI system for detecting abnormal chest radiographs

Apr 07, 2021

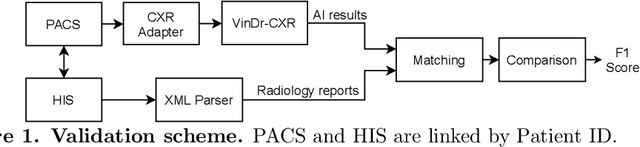

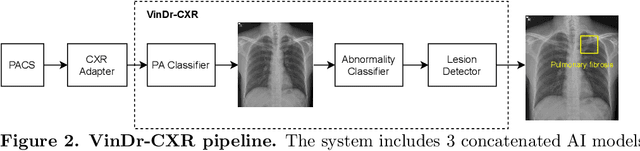

Abstract:Computer-Aided Diagnosis (CAD) systems for chest radiographs using artificial intelligence (AI) have recently shown a great potential as a second opinion for radiologists. The performances of such systems, however, were mostly evaluated on a fixed dataset in a retrospective manner and, thus, far from the real performances in clinical practice. In this work, we demonstrate a mechanism for validating an AI-based system for detecting abnormalities on X-ray scans, VinDr-CXR, at the Phu Tho General Hospital - a provincial hospital in the North of Vietnam. The AI system was directly integrated into the Picture Archiving and Communication System (PACS) of the hospital after being trained on a fixed annotated dataset from other sources. The performance of the system was prospectively measured by matching and comparing the AI results with the radiology reports of 6,285 chest X-ray examinations extracted from the Hospital Information System (HIS) over the last two months of 2020. The normal/abnormal status of a radiology report was determined by a set of rules and served as the ground truth. Our system achieves an F1 score - the harmonic average of the recall and the precision - of 0.653 (95% CI 0.635, 0.671) for detecting any abnormalities on chest X-rays. Despite a significant drop from the in-lab performance, this result establishes a high level of confidence in applying such a system in real-life situations.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge