Muhammad Zeeshan Baig

AAGATE: A NIST AI RMF-Aligned Governance Platform for Agentic AI

Oct 29, 2025

Abstract: This paper introduces the Agentic AI Governance Assurance & Trust Engine (AAGATE), a Kubernetes-native control plane designed to address the unique security and governance challenges posed by autonomous, language-model-driven agents in production. Recognizing the limitations of traditional Application Security (AppSec) tooling for improvisational, machine-speed systems, AAGATE operationalizes the NIST AI Risk Management Framework (AI RMF). It integrates specialized security frameworks for each RMF function: the Agentic AI Threat Modeling MAESTRO framework for Map, a hybrid of OWASP's AIVSS and SEI's SSVC for Measure, and the Cloud Security Alliance's Agentic AI Red Teaming Guide for Manage. By incorporating a zero-trust service mesh, an explainable policy engine, behavioral analytics, and decentralized accountability hooks, AAGATE provides a continuous, verifiable governance solution for agentic AI, enabling safe, accountable, and scalable deployment. The framework is further extended with DIRF for digital identity rights, LPCI defenses for logic-layer injection, and QSAF monitors for cognitive degradation, ensuring governance spans systemic, adversarial, and ethical risks.

Fortifying the Agentic Web: A Unified Zero-Trust Architecture Against Logic-layer Threats

Aug 17, 2025

Abstract: This paper presents a Unified Security Architecture that fortifies the Agentic Web through a Zero-Trust IAM framework. This architecture is built on a foundation of rich, verifiable agent identities using Decentralized Identifiers (DIDs) and Verifiable Credentials (VCs), with discovery managed by a protocol-agnostic Agent Name Service (ANS). Security is operationalized through a multi-layered Trust Fabric which introduces significant innovations, including Trust-Adaptive Runtime Environments (TARE), Causal Chain Auditing, and Dynamic Identity with Behavioral Attestation. By explicitly linking the LPCI threat to these enhanced architectural countermeasures within a formal security model, we propose a comprehensive and forward-looking blueprint for a secure, resilient, and trustworthy agentic ecosystem. Our formal analysis demonstrates that the proposed architecture provides provable security guarantees against LPCI attacks with bounded probability of success.

Artificial Intelligence and Machine Learning in 5G Network Security: Opportunities, advantages, and future research trends

Jul 09, 2020

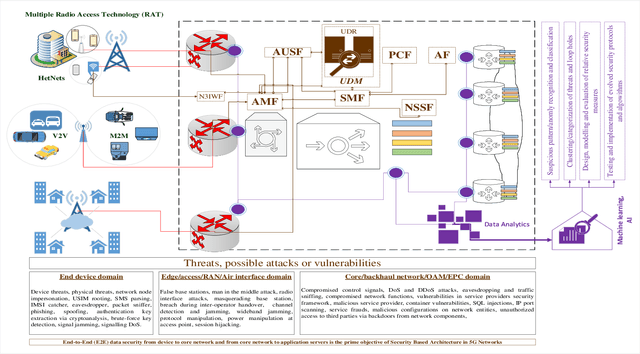

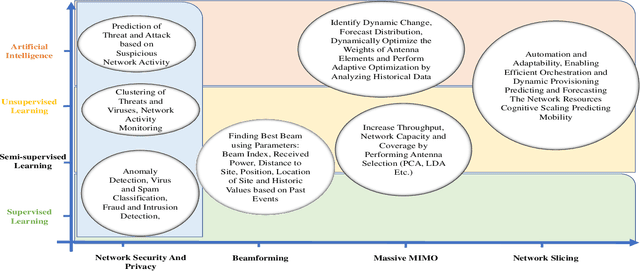

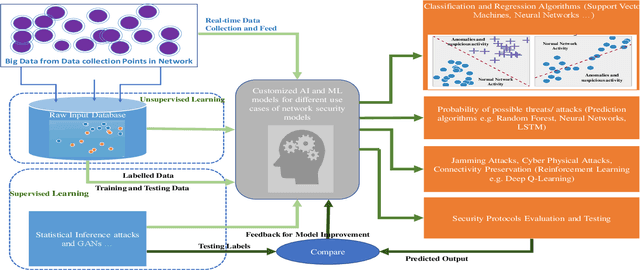

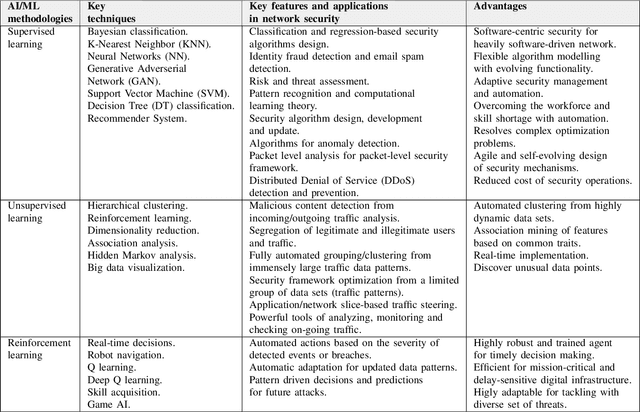

Abstract: Recent technological and architectural advancements in 5G networks have proven their worth as deployment has started around the world. The key performance-elevating factors, from the access to the core network, are the softwarization, cloudification, and virtualization of key enabling network functions. Along with this rapid evolution come risks, threats, and vulnerabilities for those who plan to exploit the system. Therefore, ensuring foolproof end-to-end (E2E) security becomes a vital concern. Artificial intelligence (AI) and machine learning (ML) can play a vital role in the design, modelling, and automation of efficient security protocols against a diverse and wide range of threats. AI and ML have already proven their effectiveness in different fields for classification, identification, and automation with higher accuracy. As the primary selling point of 5G networks has been higher data rates and speed, it will be difficult to tackle a wide range of threats from different points using typical/traditional protective measures. Therefore, AI and ML can play a central role in protecting highly data-driven softwarized and virtualized network components. This article presents AI- and ML-driven applications for 5G network security, their implications, and possible research directions. Also, an overview of key data collection points in the 5G architecture for threat classification and anomaly detection is discussed.
