Moreno Pintore

Computable Lipschitz Bounds for Deep Neural Networks

Oct 28, 2024

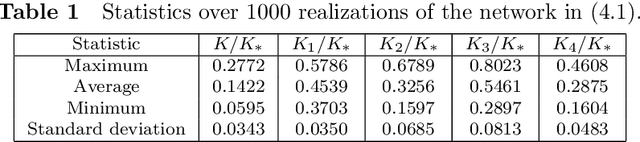

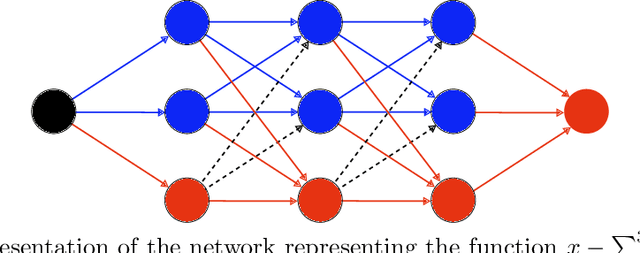

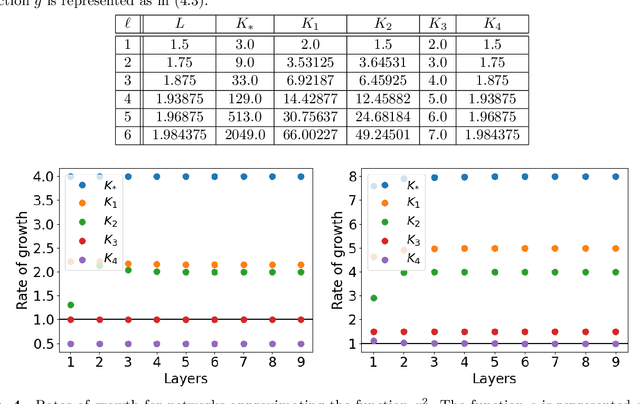

Abstract: Deriving sharp and computable upper bounds of the Lipschitz constant of deep neural networks is crucial to formally guarantee the robustness of neural-network-based models. We analyse three existing upper bounds written for the $l^2$ norm. We highlight the importance of working with the $l^1$ and $l^\infty$ norms and we propose two novel bounds for both feed-forward fully-connected neural networks and convolutional neural networks. We treat the technical difficulties related to convolutional neural networks with two different methods, called explicit and implicit. Several numerical tests empirically confirm the theoretical results, help to quantify the relationship between the presented bounds and establish the better accuracy of the new bounds. Four numerical tests are studied: two in which the output is derived from an analytical closed form, one with random matrices, and one with convolutional neural networks trained on the MNIST dataset. We observe that one of our bounds is optimal in the sense that it is exact for the first test with the simplest analytical form, and it is better than the other bounds in the remaining tests.
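For orientation, the following is a minimal sketch of the classical baseline that such bounds refine: for a feed-forward network with 1-Lipschitz activations such as ReLU, the product of the induced norms of the weight matrices is an upper bound of the Lipschitz constant. This is not one of the bounds proposed in the paper; the function names, layer sizes and random weights are assumptions chosen only for illustration.

```python
import numpy as np

def naive_lipschitz_bound(weights, p=2):
    """Product of induced p-norms of the weight matrices (p in {1, 2, inf}).

    For 2-D arrays, np.linalg.norm gives the max column sum (p=1),
    the largest singular value (p=2), or the max row sum (p=inf).
    """
    bound = 1.0
    for W in weights:
        bound *= np.linalg.norm(W, ord=p)
    return bound

def relu_net(x, weights):
    """Bias-free ReLU network; the last layer is linear (illustrative only)."""
    for W in weights[:-1]:
        x = np.maximum(W @ x, 0.0)
    return weights[-1] @ x

# Hypothetical random weights, not taken from the paper.
rng = np.random.default_rng(0)
weights = [rng.standard_normal((8, 4)),
           rng.standard_normal((8, 8)),
           rng.standard_normal((2, 8))]

bounds = {p: naive_lipschitz_bound(weights, p) for p in (1, 2, np.inf)}

# Empirical lower estimate of the Lipschitz constant from random input pairs.
empirical = {p: 0.0 for p in bounds}
for _ in range(200):
    x, y = rng.standard_normal(4), rng.standard_normal(4)
    diff_out = relu_net(x, weights) - relu_net(y, weights)
    for p in bounds:
        ratio = np.linalg.norm(diff_out, ord=p) / np.linalg.norm(x - y, ord=p)
        empirical[p] = max(empirical[p], ratio)

for p in bounds:
    print(f"p={p}: empirical >= {empirical[p]:.3f}, naive bound = {bounds[p]:.3f}")
```

The gap between the sampled ratios and the product bound is what sharper bounds aim to reduce.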

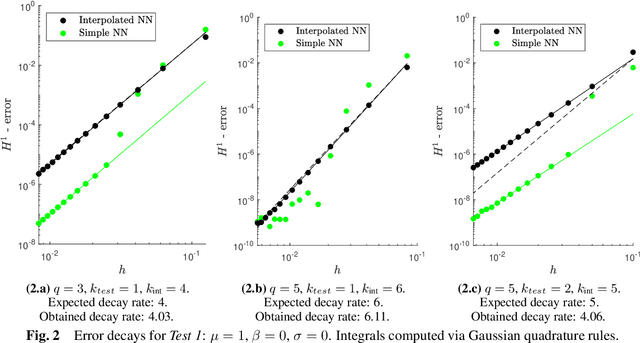

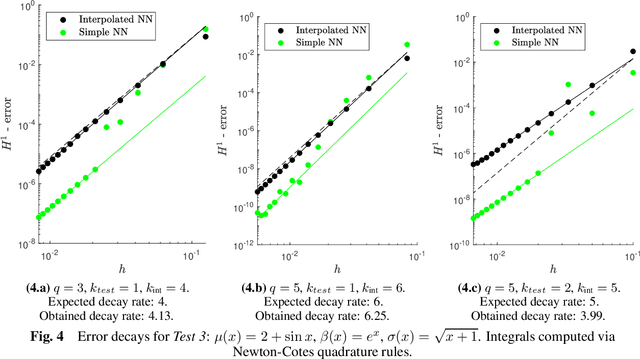

Variational Physics Informed Neural Networks: the role of quadratures and test functions

Sep 05, 2021

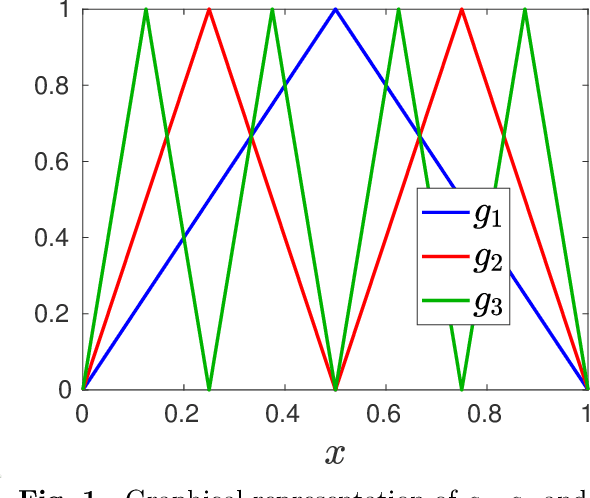

Abstract: In this work we analyze how Gaussian or Newton-Cotes quadrature rules of different precisions and piecewise polynomial test functions of different degrees affect the convergence rate of Variational Physics Informed Neural Networks (VPINN) with respect to mesh refinement, while solving elliptic boundary-value problems. Using a Petrov-Galerkin framework relying on an inf-sup condition, we derive an a priori error estimate in the energy norm between the exact solution and a suitable high-order piecewise interpolant of a computed neural network. Numerical experiments confirm the theoretical predictions, and also indicate that the error decay follows the same behavior when the neural network is not interpolated. Our results suggest, somewhat counterintuitively, that for smooth solutions the best strategy to achieve a high decay rate of the error consists in choosing test functions of the lowest polynomial degree, while using quadrature formulas of suitably high precision.
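As a small illustration of what the precision of a quadrature rule means here, the sketch below uses NumPy's Gauss-Legendre nodes: an n-point rule integrates polynomials of degree up to 2n-1 exactly on [-1, 1]. It does not reproduce the VPINN method; the helper name and the test integrand are assumptions made for the example.

```python
import numpy as np

def gauss_legendre(f, n):
    """Approximate the integral of f over [-1, 1] with an n-point Gauss-Legendre rule."""
    nodes, weights = np.polynomial.legendre.leggauss(n)
    return float(np.sum(weights * f(nodes)))

def quartic(x):
    """Test integrand x**4, whose exact integral over [-1, 1] is 2/5."""
    return x ** 4

exact = 2.0 / 5.0
low = gauss_legendre(quartic, 2)   # precision 3: not exact for degree 4
high = gauss_legendre(quartic, 3)  # precision 5: exact for degree 4

print(f"2-point rule error: {abs(low - exact):.3e}")
print(f"3-point rule error: {abs(high - exact):.3e}")
```

The 2-point rule has nodes at ±1/√3 with unit weights, so it returns 2/9 rather than 2/5, while the 3-point rule recovers the integral up to rounding.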
