Moi Hoon Yap

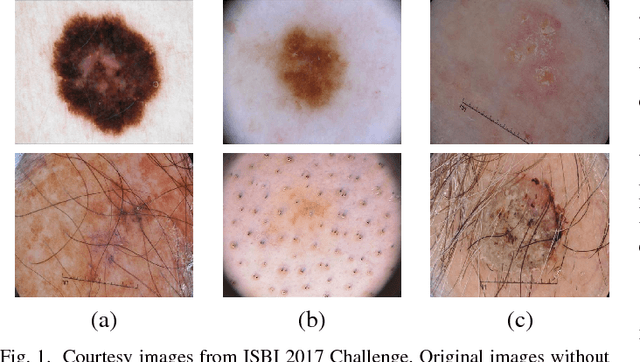

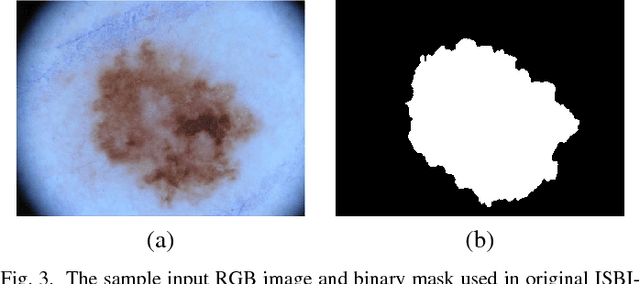

Region of Interest Detection in Dermoscopic Images for Natural Data-augmentation

Jul 27, 2018

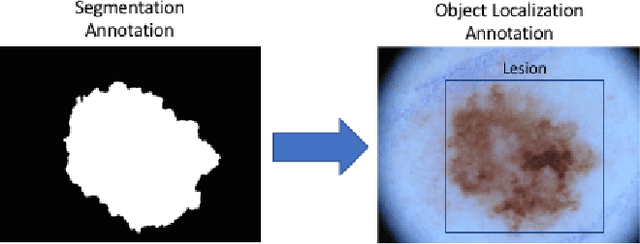

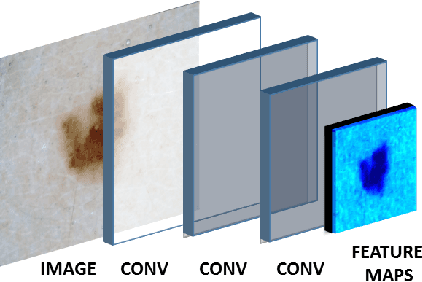

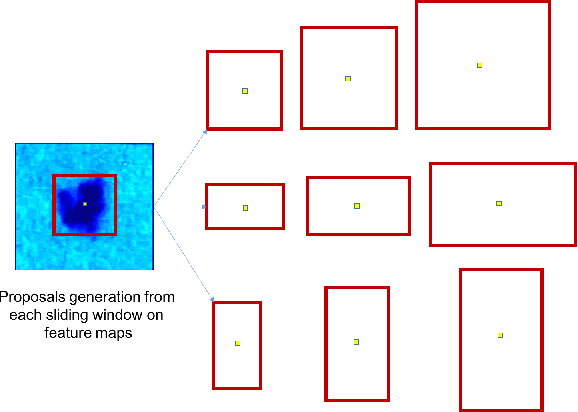

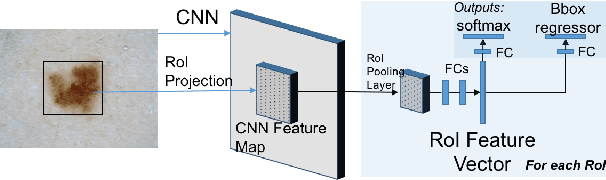

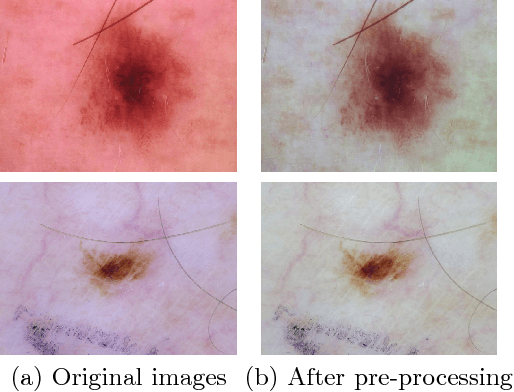

Abstract: With the rapid growth of medical imaging research, there is great interest in the automated detection of skin lesions with computer algorithms. State-of-the-art skin lesion datasets are often accompanied by a very limited amount of ground truth labeling, as labeling is laborious and expensive. Region of interest (ROI) detection is vital to locate the lesion accurately and must be robust to the subtle features of different skin lesion types. In this work, we propose the use of two object localization meta-architectures for end-to-end ROI skin lesion detection in dermoscopic images. We trained Faster-RCNN-InceptionV2 and SSD-InceptionV2 on the ISBI-2017 training dataset and evaluated their performance on the ISBI-2017 testing set and the PH2 and HAM10000 datasets. Since there was no earlier work on ROI detection for skin lesions with CNNs, we compare the performance of the skin localization methods with the state-of-the-art segmentation method. The localization methods proved superior to the segmentation method in ROI detection on skin lesion datasets. In addition, based on the detected ROI, an automated natural data-augmentation method is proposed. To demonstrate the potential of our work, we developed a real-time mobile application for automated skin lesion detection. The code and mobile application will be made available for further research purposes.
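To make the comparison between a localization output (a box) and a segmentation output (a mask) concrete, here is a minimal sketch of how a mask can be reduced to an ROI box and scored against a reference box. The abstract does not name the evaluation metric, so the use of intersection over union (IoU) here is an assumption, and the helper names `mask_to_box` and `box_iou` are hypothetical.

```python
from typing import List, Optional, Tuple

# (x_min, y_min, x_max, y_max) in inclusive pixel coordinates (assumed convention)
Box = Tuple[int, int, int, int]


def mask_to_box(mask: List[List[int]]) -> Optional[Box]:
    """Return the tightest box around all non-zero pixels, or None for an empty mask."""
    rows = [y for y, row in enumerate(mask) if any(row)]
    cols = [x for row in mask for x, value in enumerate(row) if value]
    if not rows:
        return None
    return (min(cols), min(rows), max(cols), max(rows))


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two inclusive pixel boxes."""
    # Overlap width and height; negative values mean the boxes do not overlap.
    overlap_w = min(a[2], b[2]) - max(a[0], b[0]) + 1
    overlap_h = min(a[3], b[3]) - max(a[1], b[1]) + 1
    inter = max(overlap_w, 0) * max(overlap_h, 0)
    area_a = (a[2] - a[0] + 1) * (a[3] - a[1] + 1)
    area_b = (b[2] - b[0] + 1) * (b[3] - b[1] + 1)
    return inter / (area_a + area_b - inter)
```

With helpers like these, a segmentation method and a detector can be scored on the same footing: the predicted mask is converted to a box first, and both boxes are then compared with the ground truth box.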

Multi-Class Lesion Diagnosis with Pixel-wise Classification Network

Jul 24, 2018

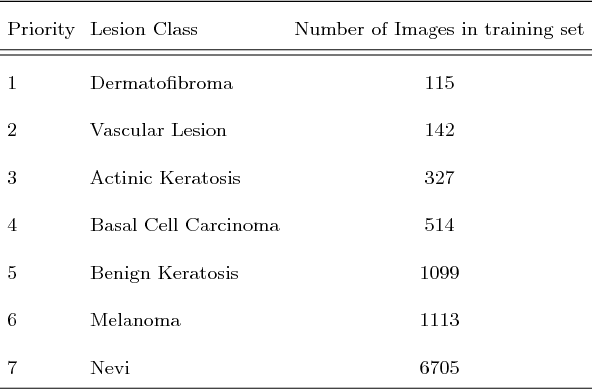

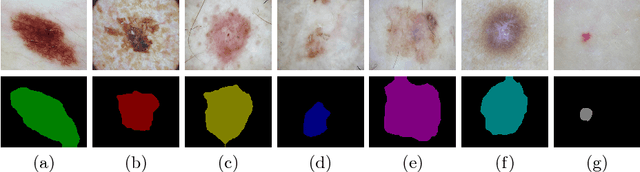

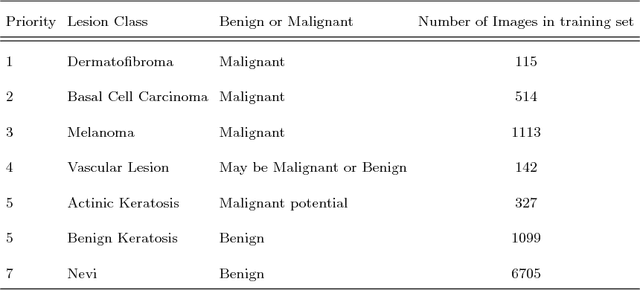

Abstract: Diagnosis of skin lesions is a very challenging task due to high inter-class similarities and intra-class variations in terms of color, size, site and appearance among different skin lesions. With the emergence of computer vision, especially deep learning algorithms, lesion diagnosis is made possible using these algorithms trained on dermoscopic images. Usually, deep classification networks are used for lesion diagnosis to determine different types of skin lesions. In this work, we used a pixel-wise classification network to provide lesion diagnosis rather than a classification network. We propose to use DeeplabV3+ for multi-class lesion diagnosis in dermoscopic images for Task 3 of the ISIC Challenge 2018. We used various post-processing methods with DeeplabV3+ to determine the lesion diagnosis in this challenge and submitted the test results.

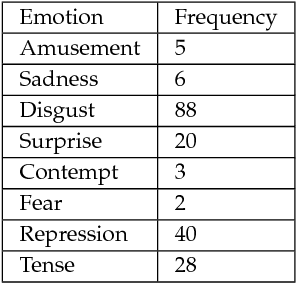

A Review on Facial Micro-Expressions Analysis: Datasets, Features and Metrics

May 07, 2018

Abstract: Facial micro-expressions are very brief, spontaneous facial expressions that appear on the face of humans when they either deliberately or unconsciously conceal an emotion. Micro-expressions have a shorter duration than macro-expressions, which makes them more challenging for both humans and machines. Over the past ten years, automatic micro-expression recognition has attracted increasing attention from researchers in psychology, computer science, security, neuroscience and other related disciplines. The aim of this paper is to provide insights into automatic micro-expression analysis and recommendations for future research. Many datasets have been released over the last decade, facilitating the rapid growth of this field. However, comparison across different datasets is difficult due to inconsistency in experimental protocols, features used and evaluation methods. To address these issues, we review the datasets, features and performance metrics deployed in the literature. Relevant challenges such as the spatial temporal settings during data collection, emotional classes versus objective classes in data labelling, face regions in data analysis, standardisation of metrics and the requirements for real-world implementation are discussed. We conclude by proposing some promising future directions for advancing micro-expression research.

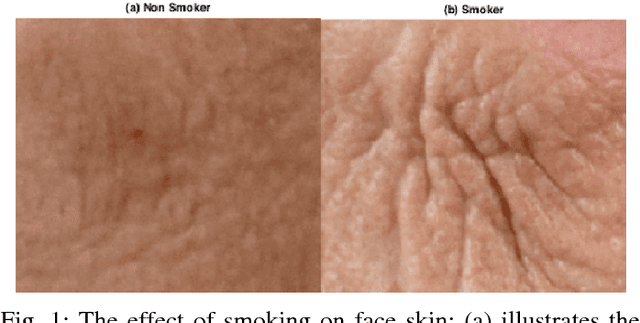

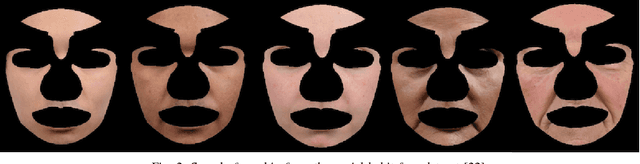

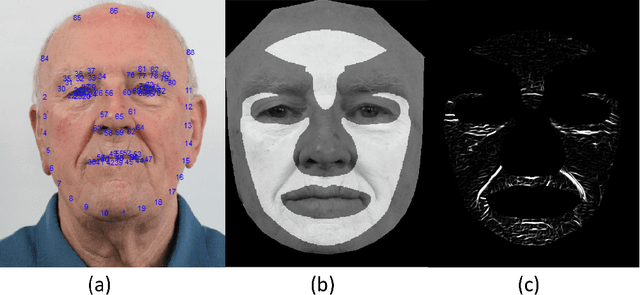

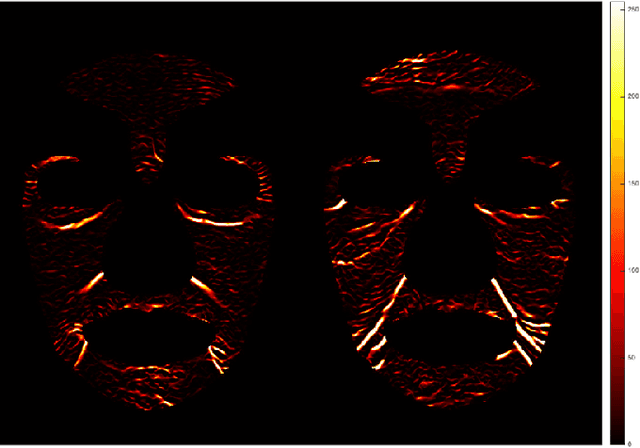

Automated Assessment of Facial Wrinkling: a case study on the effect of smoking

Dec 31, 2017

Abstract: Facial wrinkles are one of the most prominent biological changes that accompany the natural aging process. However, some external factors contribute to premature wrinkle development, such as sun exposure and smoking. Clinical studies have shown that heavy smoking causes premature wrinkle development. However, there is no computerised system that can automatically assess facial wrinkles on the whole face. This study investigates the effect of smoking on facial wrinkling using a social habit face dataset and an automated computer vision algorithm. The wrinkle pattern, represented as intensities in the range 0-255, was first extracted using a modified Hybrid Hessian Filter. The face was divided into ten predefined regions, and the wrinkles in each region were extracted. Statistical analysis was then performed to determine which regions are mainly affected by smoking. The results showed that the density of wrinkles for smokers in two regions around the mouth was significantly higher than for non-smokers, at a p-value of 0.05. Results for the other regions are inconclusive due to the lack of a large-scale dataset. Finally, the wrinkles were visually compared between smoker and non-smoker faces by generating a generic 3D face model.

* 6 pages, 8 figures, Accepted in 2017 IEEE SMC International Conference

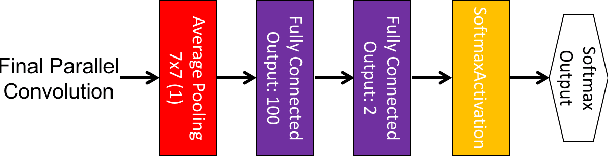

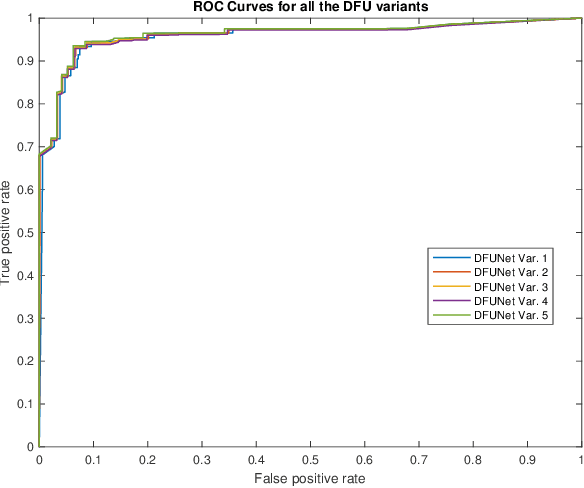

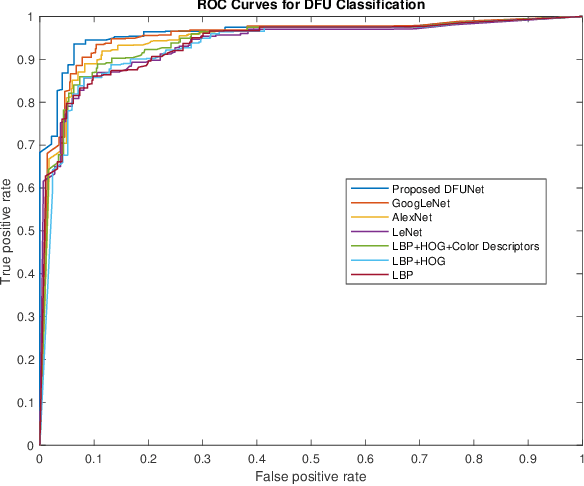

DFUNet: Convolutional Neural Networks for Diabetic Foot Ulcer Classification

Dec 10, 2017

Abstract: Globally, in 2016, one out of eleven adults suffered from Diabetes Mellitus. Diabetic Foot Ulcers (DFU) are a major complication of this disease, which if not managed properly can lead to amputation. Current clinical approaches to DFU treatment rely on patient and clinician vigilance, which has significant limitations such as the high cost involved in the diagnosis, treatment and lengthy care of the DFU. We collected an extensive dataset of foot images that contain DFUs from different patients. In this paper, we propose the use of traditional computer vision features for detecting foot ulcers among diabetic patients, which represents a cost-effective, remote and convenient healthcare solution. Furthermore, we used Convolutional Neural Networks (CNNs) for the first time in DFU classification. We propose a novel convolutional neural network architecture, DFUNet, with better feature extraction to identify the feature differences between healthy skin and the DFU. Using 10-fold cross-validation, DFUNet achieved an AUC score of 0.962. This outperformed both the machine learning and deep learning classifiers we tested. Here we present the development of a novel and highly sensitive DFUNet for objectively detecting the presence of DFUs. This novel approach has the potential to deliver a paradigm shift in diabetic foot care.
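For readers unfamiliar with the AUC score reported above, the sketch below computes it from binary labels and classifier scores using the rank interpretation: the probability that a randomly chosen positive example scores higher than a randomly chosen negative one. This is a generic illustration, not the authors' evaluation code; the function name `roc_auc` and the label convention (1 for DFU, 0 for healthy skin) are assumptions.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Area under the ROC curve via pairwise comparison; tied scores count as half a win."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))
```

The pairwise loop is quadratic in the number of examples, which is fine for illustration; a sort-based version would be preferable for large test sets.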

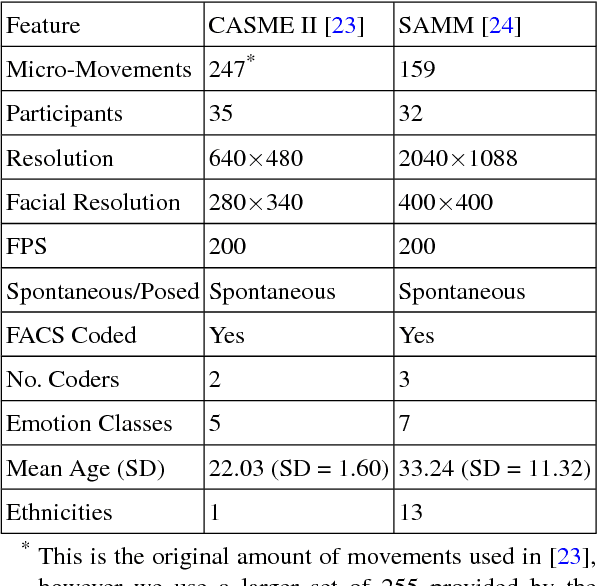

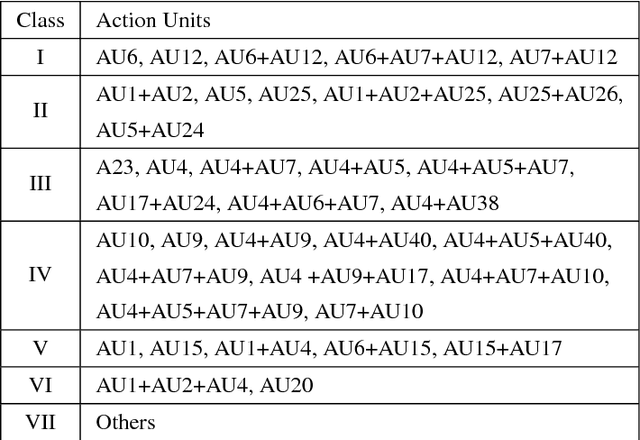

Objective Classes for Micro-Facial Expression Recognition

Dec 03, 2017

Abstract: Micro-expressions are brief spontaneous facial expressions that appear on a face when a person conceals an emotion, making them different to normal facial expressions in subtlety and duration. Currently, emotion classes within the CASME II dataset are based on Action Units and self-reports, creating conflicts during machine learning training. We will show that classifying expressions using Action Units, instead of predicted emotion, removes the potential bias of human reporting. The proposed classes are tested using LBP-TOP, HOOF and HOG 3D feature descriptors. The experiments are evaluated on two benchmark FACS coded datasets: CASME II and SAMM. The best result achieves 86.35% accuracy when classifying the proposed 5 classes on CASME II using HOG 3D, outperforming the result of the state-of-the-art 5-class emotional-based classification in CASME II. Results indicate that classification based on Action Units provides an objective method to improve micro-expression recognition.

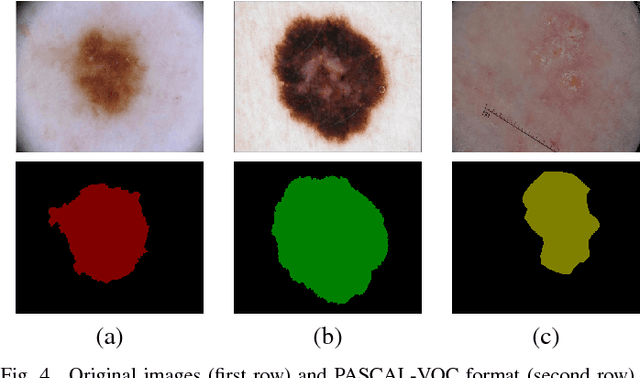

Multi-class Semantic Segmentation of Skin Lesions via Fully Convolutional Networks

Nov 28, 2017

Abstract: Early detection of skin cancer, particularly melanoma, is crucial to enable advanced treatment. Due to the rapid growth of skin cancers, there is a growing need for computerized analysis of skin lesions. This analysis includes detection, classification, and segmentation. Three common types of skin lesions are benign nevi, melanoma, and seborrhoeic keratoses, which have huge intra-class variations in terms of color, size, site and appearance for each class and high inter-class visual similarities in dermoscopic images. The majority of current research focuses on melanoma segmentation, but it is also very important to segment the seborrhoeic keratoses and benign nevi lesions, as these regions potentially indicate the pre-cancer stage. We propose multi-class semantic segmentation for these three classes on the publicly available ISBI-2017 challenge dataset, which consists of 2750 dermoscopic images. We propose an end-to-end solution using fully convolutional networks (FCNs) for multi-class semantic segmentation, which will automatically segment the melanoma, keratoses and benign lesions. To overcome the issue of data deficiency, we propose a transfer learning approach which uses both partial transfer learning and full transfer learning to train FCNs for multi-class semantic segmentation of skin lesions. The results are presented as Dice Similarity Coefficient (Dice) scores to compare the performance of the deep learning segmentation methods on the dataset with 5-fold cross-validation. The results showed that the two-tier level transfer learning FCN-8s achieved the overall best result, with a Dice score of 0.785 in the benign category, 0.653 in melanoma segmentation, and 0.557 in seborrhoeic keratoses.
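The per-class Dice scores quoted above follow the standard definition $\mathrm{Dice} = 2|P \cap T| / (|P| + |T|)$, where $P$ and $T$ are the predicted and ground truth pixel sets for one class. The following is a minimal sketch of that computation on integer label maps; the function name `dice_per_class` and the class numbering are hypothetical, and how the authors aggregate scores over images and folds is not stated in the abstract.

```python
from typing import Dict, List, Optional


def dice_per_class(
    pred: List[List[int]], truth: List[List[int]], num_classes: int
) -> Dict[int, Optional[float]]:
    """Dice Similarity Coefficient for each class label in two same-sized label maps."""
    scores: Dict[int, Optional[float]] = {}
    for cls in range(num_classes):
        pred_count = truth_count = overlap = 0
        for pred_row, truth_row in zip(pred, truth):
            for p, t in zip(pred_row, truth_row):
                pred_count += p == cls
                truth_count += t == cls
                overlap += p == cls and t == cls
        total = pred_count + truth_count
        # Dice is undefined when the class is absent from both maps.
        scores[cls] = 2 * overlap / total if total else None
    return scores
```

Returning `None` for an absent class is one possible design choice; it keeps such cases out of any later average instead of silently counting them as 0 or 1.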

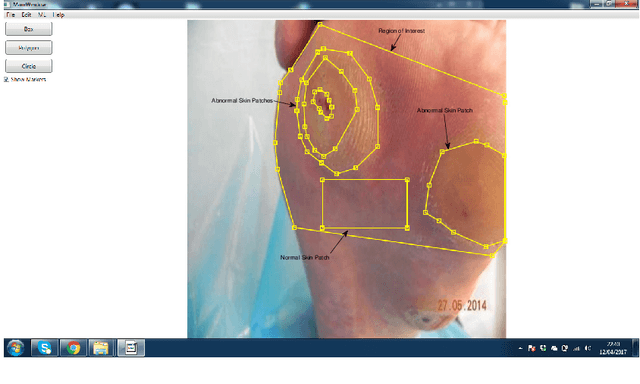

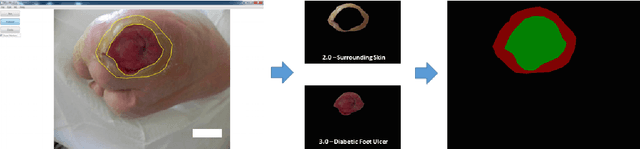

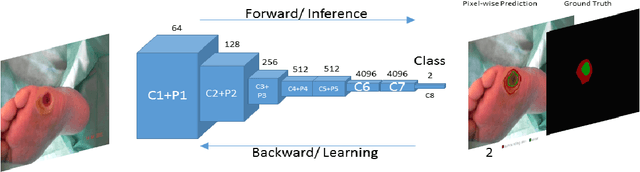

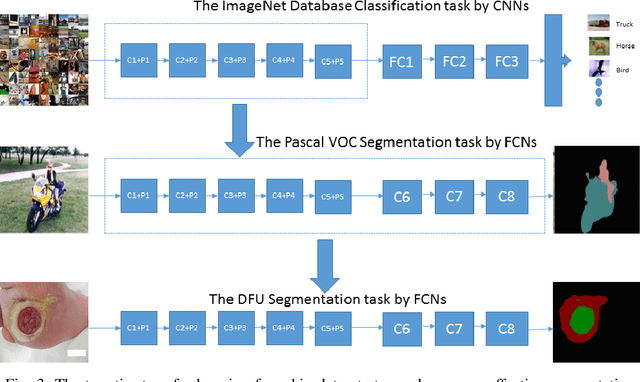

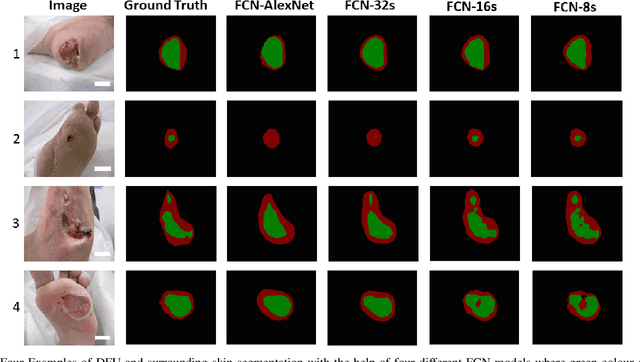

Fully Convolutional Networks for Diabetic Foot Ulcer Segmentation

Aug 06, 2017

Abstract: Diabetic Foot Ulcer (DFU) is a major complication of Diabetes, which if not managed properly can lead to amputation. DFU can appear anywhere on the foot and can vary in size, colour, and contrast depending on various pathologies. Current clinical approaches to DFU treatment rely on patient and clinician vigilance, which has significant limitations such as the high cost involved in the diagnosis, treatment and lengthy care of the DFU. We introduce a dataset of 705 foot images. We provide the ground truth of the ulcer region and the surrounding skin, which is an important indicator for clinicians to assess the progress of the ulcer. Then, we propose two-tier transfer learning from bigger datasets to train Fully Convolutional Networks (FCNs) to automatically segment the ulcer and surrounding skin. Using 5-fold cross-validation, the proposed two-tier transfer learning FCN models achieve a Dice Similarity Coefficient of 0.794 ($\pm$0.104) for the ulcer region, 0.851 ($\pm$0.148) for the surrounding skin region, and 0.899 ($\pm$0.072) for the combination of both regions. This demonstrates the potential of FCNs in DFU segmentation, which can be further improved with a larger dataset.
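As an illustration of the 5-fold cross-validation protocol mentioned above, the sketch below partitions image indices into folds so that every image is used for testing exactly once. The abstract does not describe how the split was made, so the seeded shuffle and the function name `k_fold_indices` are assumptions.

```python
import random
from typing import Iterator, List, Tuple


def k_fold_indices(
    n_items: int, k: int, seed: int = 0
) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, test_indices) for each of k folds over n_items samples."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)  # fixed seed keeps the split reproducible
    base, extra = divmod(n_items, k)
    start = 0
    for fold in range(k):
        # The first `extra` folds take one additional item when n_items is not divisible by k.
        size = base + (1 if fold < extra else 0)
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        start += size
        yield train, test
```

For the 705 images and 5 folds stated in the abstract, each fold would hold 141 test images and 564 training images. Note that a medical dataset may call for splitting by patient instead of by image, which this sketch does not do.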

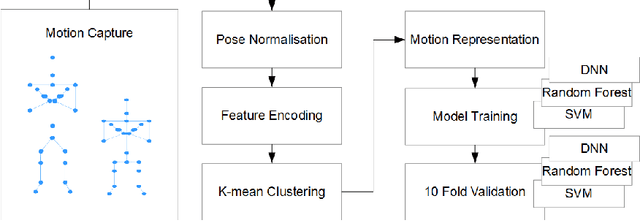

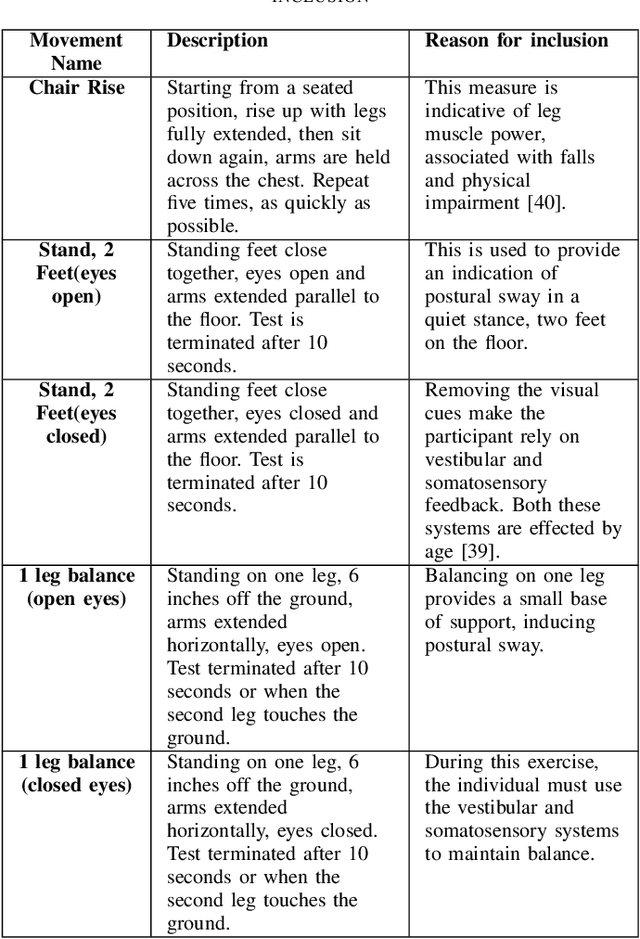

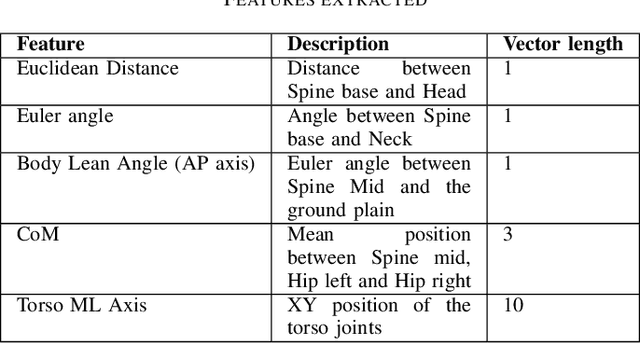

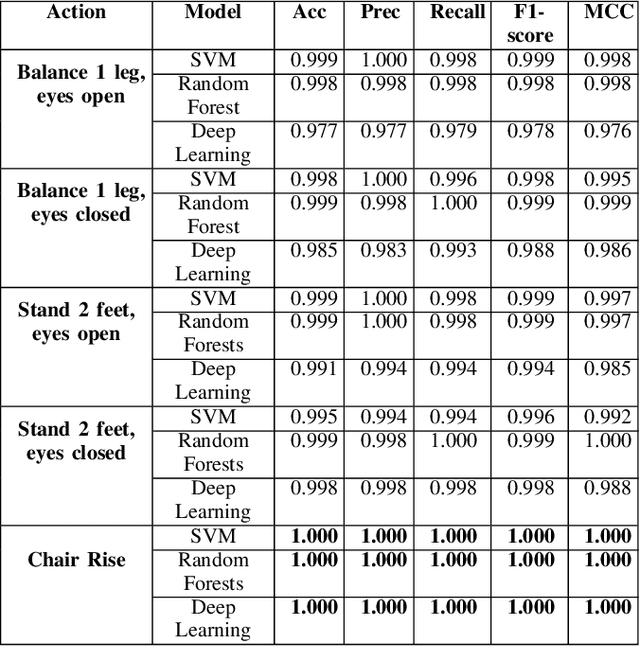

A Comparative Study of the Clinical use of Motion Analysis from Kinect Skeleton Data

Jul 31, 2017

Abstract: The analysis of human motion as a clinical tool can bring many benefits, such as the early detection of disease and the monitoring of recovery, in turn helping people to lead independent lives. However, it is currently underused. Developments in depth cameras, such as Kinect, have opened up the use of motion analysis in settings such as GP surgeries, care homes and private homes. To provide an insight into the use of Kinect in the healthcare domain, we present a review of the current state of the art. We then propose a method that can represent human motions from time-series data of arbitrary length as a single vector. Finally, we demonstrate the utility of this method by extracting a set of clinically significant features and using them to detect the age-related changes in the motions of a set of 54 individuals with a high degree of certainty (F1-score between 0.9 and 1.0), indicating its potential application in the detection of a range of age-related motion impairments.

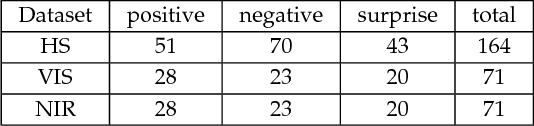

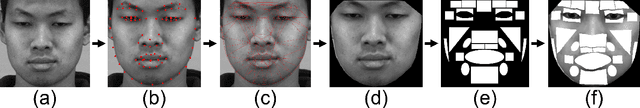

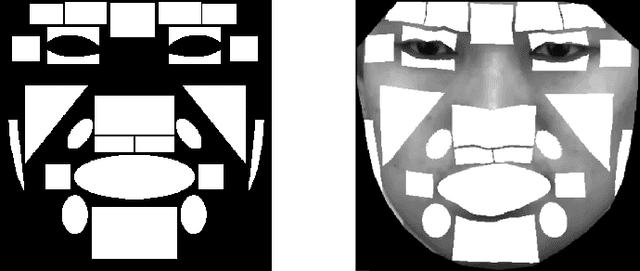

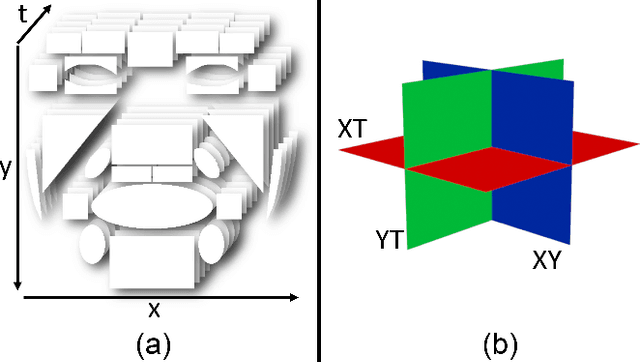

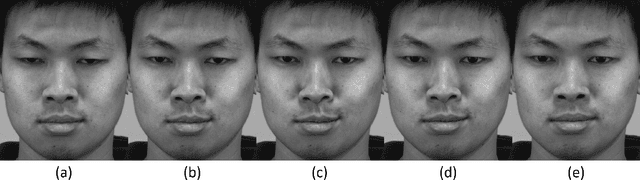

Objective Micro-Facial Movement Detection Using FACS-Based Regions and Baseline Evaluation

Dec 15, 2016

Abstract: Micro-facial expressions are regarded as an important human behavioural event that can highlight emotional deception. Spotting these movements is difficult for humans and machines; however, research into using computer vision to detect subtle facial expressions is growing in popularity. This paper proposes an individualised baseline micro-movement detection method using a 3D Histogram of Oriented Gradients (3D HOG) temporal difference method. We define a face template consisting of 26 regions based on the Facial Action Coding System (FACS). We extract the temporal features of each region using 3D HOG. Then, we use Chi-square distance to find subtle facial motion in the local regions. Finally, an automatic peak detector is used to detect micro-movements above the newly proposed adaptive baseline threshold. The performance is validated on two FACS coded datasets: SAMM and CASME II. This objective method focuses on the movement of the 26 face regions. When compared with the ground truth, the best results were AUCs of 0.7512 and 0.7261 on SAMM and CASME II, respectively. The results show that 3D HOG outperformed the state-of-the-art feature representations for micro-movement detection: Local Binary Patterns in Three Orthogonal Planes and Histograms of Oriented Optical Flow.
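The last two steps of the pipeline described above (a Chi-square distance between feature histograms, followed by peak detection above a threshold) can be sketched as follows. This is an illustration under assumptions, not the authors' implementation: the Chi-square variant without the factor of 1/2 is one of several in use, the threshold is passed in as a plain number because the abstract does not define the adaptive baseline, and the names `chi_square_distance` and `peaks_above` are hypothetical.

```python
from typing import List, Sequence


def chi_square_distance(h1: Sequence[float], h2: Sequence[float]) -> float:
    """Chi-square distance sum((a - b)^2 / (a + b)) between two histograms of equal length."""
    if len(h1) != len(h2):
        raise ValueError("histograms must have the same number of bins")
    # Bins that are empty in both histograms contribute nothing and are skipped.
    return sum((a - b) ** 2 / (a + b) for a, b in zip(h1, h2) if a + b > 0)


def peaks_above(signal: Sequence[float], threshold: float) -> List[int]:
    """Indices of local maxima in `signal` whose value exceeds `threshold`."""
    return [
        i
        for i in range(1, len(signal) - 1)
        if signal[i] > threshold
        and signal[i] > signal[i - 1]
        and signal[i] >= signal[i + 1]
    ]
```

In such a setup, `signal` would hold one distance value per frame for a given face region, and each returned index would mark a candidate micro-movement in that region.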
