Mohamed El-Habrouk

MonoLite3D: Lightweight 3D Object Properties Estimation

Mar 04, 2025

Abstract:Reliable perception of the environment plays a crucial role in enabling efficient self-driving vehicles. The perception system must therefore acquire comprehensive 3D data about the surrounding objects, including their dimensions, spatial location and orientation, within a specific time constraint. Deep learning has gained significant popularity in perception systems, enabling the conversion of image features captured by a camera into meaningful semantic information. This research paper introduces the MonoLite3D network, a lightweight, embedded-device-friendly deep learning methodology designed for hardware environments with limited resources. The MonoLite3D network estimates multiple properties of 3D objects, encompassing their dimensions and spatial orientation, solely from monocular images. This makes it well suited for deployment on devices with limited computational capabilities. The experimental results validate the accuracy and efficiency of the proposed approach on the orientation benchmark of the KITTI dataset. It achieves a score of 82.27% on the moderate class and 69.81% on the hard class, while still meeting the real-time requirements.

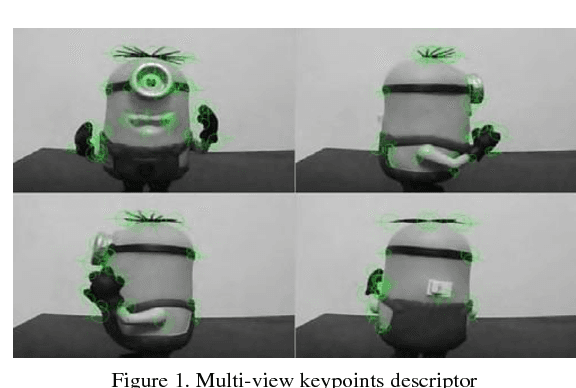

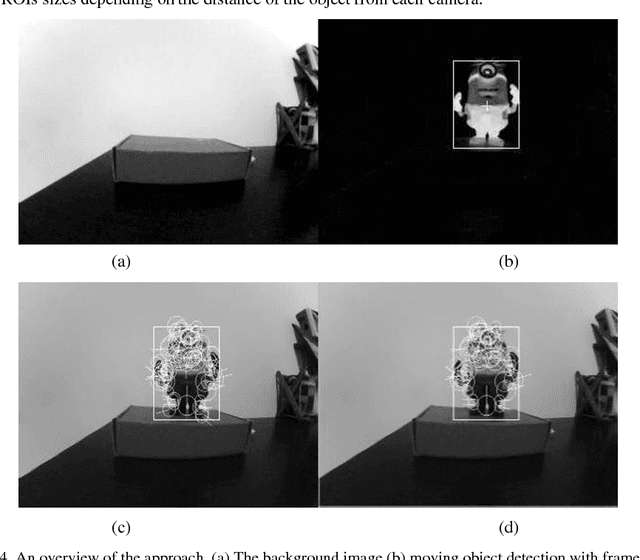

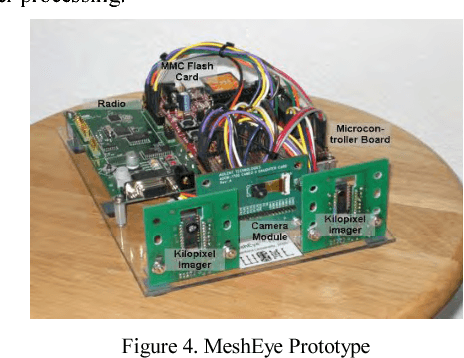

Keypoint-based object tracking and localization using networks of low-power embedded smart cameras

Nov 09, 2017

Abstract:Object tracking and localization is a complex task that typically requires processing power beyond the capabilities of low-power embedded cameras. This paper presents a new approach to real-time object tracking and localization using a multi-view binary keypoint descriptor. The proposed approach offers a compromise between processing power, accuracy and networking bandwidth, and has been tested using multiple distributed low-power smart cameras. Additionally, multiple optimization techniques are presented to improve the performance of the keypoint descriptor on low-power embedded systems.

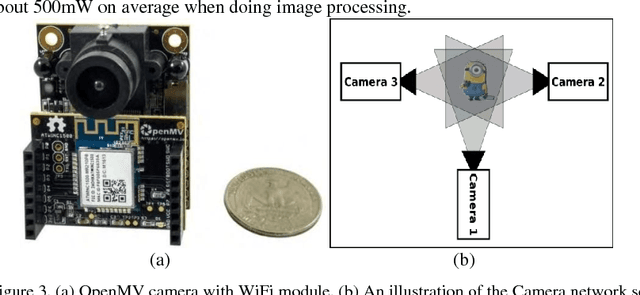

OpenMV: A Python powered, extensible machine vision camera

Nov 01, 2017

Abstract:Advances in semiconductor manufacturing processes and large scale integration keep pushing demanding applications further away from centralized processing, and closer to the edges of the network (i.e. Edge Computing). It has become possible to perform complex in-network image processing using low-power embedded smart cameras, enabling a multitude of new collaborative image processing applications. This paper introduces OpenMV, a new low-power smart camera that lends itself naturally to wireless sensor networks and machine vision applications. The uniqueness of this platform lies in running an embedded Python3 interpreter, allowing its peripherals and machine vision library to be scripted in Python. In addition, its hardware is extensible via modules that augment the platform with new capabilities, such as thermal imaging and networking modules.
