Min Seok Kim

A Practical Approach for Building Production-Grade Conversational Agents with Workflow Graphs

May 29, 2025

Abstract: The advancement of Large Language Models (LLMs) has led to significant improvements in various service domains, including search, recommendation, and chatbot applications. However, applying state-of-the-art (SOTA) research in industrial settings is challenging, because an agent must maintain flexible conversational abilities while strictly complying with service-specific constraints. These two requirements can conflict because of the probabilistic nature of LLMs. In this paper, we present our approach to this challenge and detail the strategies we employed to overcome the inherent limitations of LLMs in real-world applications. We conduct a practical case study of a conversational agent designed for the e-commerce domain, describing our implementation workflow and optimizations. Our findings provide insights into bridging the gap between academic research and real-world application, and introduce a framework for developing scalable, controllable, and reliable AI-driven agents.

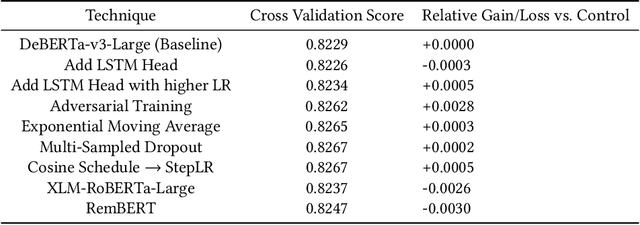

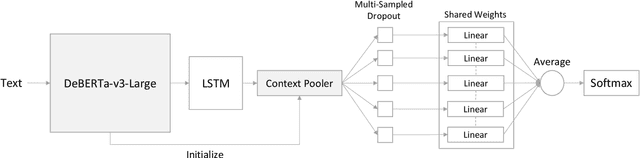

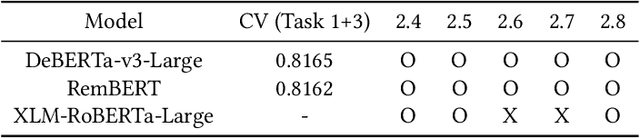

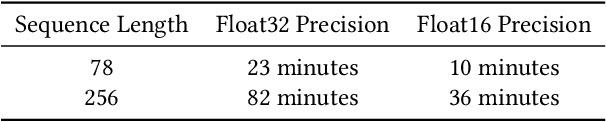

Predicting Query-Item Relationship using Adversarial Training and Robust Modeling Techniques

Aug 23, 2022

Abstract: We present an effective way to predict the relationship between search queries and items. We combine pre-trained transformer and LSTM models, and increase model robustness using adversarial training, exponential moving average, multi-sampled dropout, and diversity-based ensembling, to tackle the extremely difficult problem of making predictions for queries not seen before. All of our strategies focus on increasing the robustness of deep learning models and are applicable to any task where deep learning models are used. Applying our strategies, we achieved 10th place in the KDD Cup 2022 Product Substitution Classification task.
