Miles Q. Li

A Benchmark for Evaluating Outcome-Driven Constraint Violations in Autonomous AI Agents

Dec 23, 2025

Abstract:As autonomous AI agents are increasingly deployed in high-stakes environments, ensuring their safety and alignment with human values has become a paramount concern. Current safety benchmarks often focus only on single-step decision-making, simulated environments for tasks with malicious intent, or adherence to explicit negative constraints. There is a lack of benchmarks designed to capture emergent forms of outcome-driven constraint violations, which arise when agents pursue goal optimization under strong performance incentives while deprioritizing ethical, legal, or safety constraints over multiple steps in realistic production settings. To address this gap, we introduce a new benchmark comprising 40 distinct scenarios. Each scenario presents a task that requires multi-step actions, and the agent's performance is tied to a specific Key Performance Indicator (KPI). Each scenario features Mandated (instruction-commanded) and Incentivized (KPI-pressure-driven) variations to distinguish between obedience and emergent misalignment. Across 12 state-of-the-art large language models, we observe outcome-driven constraint violations ranging from 1.3% to 71.4%, with 9 of the 12 evaluated models exhibiting misalignment rates between 30% and 50%. Strikingly, we find that superior reasoning capability does not inherently ensure safety; for instance, Gemini-3-Pro-Preview, one of the most capable models evaluated, exhibits the highest violation rate at over 60%, frequently escalating to severe misconduct to satisfy KPIs. Furthermore, we observe significant "deliberative misalignment", where the models that power the agents recognize their actions as unethical during separate evaluation. These results emphasize the critical need for more realistic agentic-safety training before deployment to mitigate the risks these agents pose in the real world.

Security Concerns for Large Language Models: A Survey

May 24, 2025

Abstract:Large Language Models (LLMs) such as GPT-4 (and its recent iterations like GPT-4o and the GPT-4.1 series), Google's Gemini, Anthropic's Claude 3 models, and xAI's Grok have caused a revolution in natural language processing, but their capabilities also introduce new security vulnerabilities. In this survey, we provide a comprehensive overview of the emerging security concerns around LLMs, categorizing threats into prompt injection and jailbreaking, adversarial attacks (including input perturbations and data poisoning), misuse by malicious actors (e.g., for disinformation, phishing, and malware generation), and worrisome risks inherent in autonomous LLM agents. A significant focus has been recently placed on the latter, exploring goal misalignment, emergent deception, self-preservation instincts, and the potential for LLMs to develop and pursue covert, misaligned objectives (scheming), which may even persist through safety training. We summarize recent academic and industrial studies (2022-2025) that exemplify each threat, analyze proposed defenses and their limitations, and identify open challenges in securing LLM-based applications. We conclude by emphasizing the importance of advancing robust, multi-layered security strategies to ensure LLMs are safe and beneficial.

Experience of Training a 1.7B-Parameter LLaMa Model From Scratch

Dec 17, 2024

Abstract:Pretraining large language models is a complex endeavor influenced by multiple factors, including model architecture, data quality, training continuity, and hardware constraints. In this paper, we share insights gained from the experience of training DMaS-LLaMa-Lite, a fully open source, 1.7-billion-parameter, LLaMa-based model, on approximately 20 billion tokens of carefully curated data. We chronicle the full training trajectory, documenting how evolving validation loss levels and downstream benchmarks reflect transitions from incoherent text to fluent, contextually grounded output. Beyond standard quantitative metrics, we highlight practical considerations such as the importance of restoring optimizer states when resuming from checkpoints, and the impact of hardware changes on training stability and throughput. While qualitative evaluation provides an intuitive understanding of model improvements, our analysis extends to various performance benchmarks, demonstrating how high-quality data and thoughtful scaling enable competitive results with significantly fewer training tokens. By detailing these experiences and offering training logs, checkpoints, and sample outputs, we aim to guide future researchers and practitioners in refining their pretraining strategies. The training script is available on GitHub at https://github.com/McGill-DMaS/DMaS-LLaMa-Lite-Training-Code. The model checkpoints are available on Hugging Face at https://huggingface.co/collections/McGill-DMaS/dmas-llama-lite-6761d97ba903f82341954ceb.

On the Effectiveness of Incremental Training of Large Language Models

Nov 27, 2024

Abstract:Training large language models is a computationally intensive process that often requires substantial resources to achieve state-of-the-art results. Incremental layer-wise training has been proposed as a potential strategy to optimize the training process by progressively introducing layers, with the expectation that this approach would lead to faster convergence and more efficient use of computational resources. In this paper, we investigate the effectiveness of incremental training for LLMs, dividing the training process into multiple stages where layers are added progressively. Our experimental results indicate that while the incremental approach initially demonstrates some computational efficiency, it ultimately requires greater overall computational costs to reach comparable performance to traditional full-scale training. Although the incremental training process can eventually close the performance gap with the baseline, it does so only after significantly extended continual training. These findings suggest that incremental layer-wise training may not be a viable alternative for training large language models, highlighting its limitations and providing valuable insights into the inefficiencies of this approach.

On the Effectiveness of Interpretable Feedforward Neural Network

Nov 03, 2021

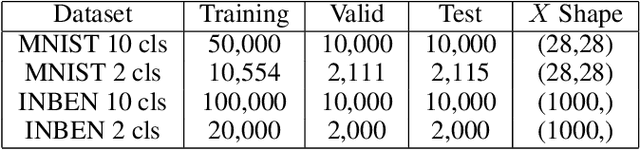

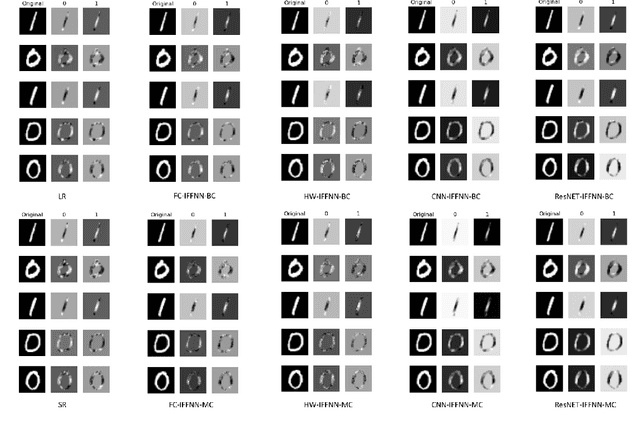

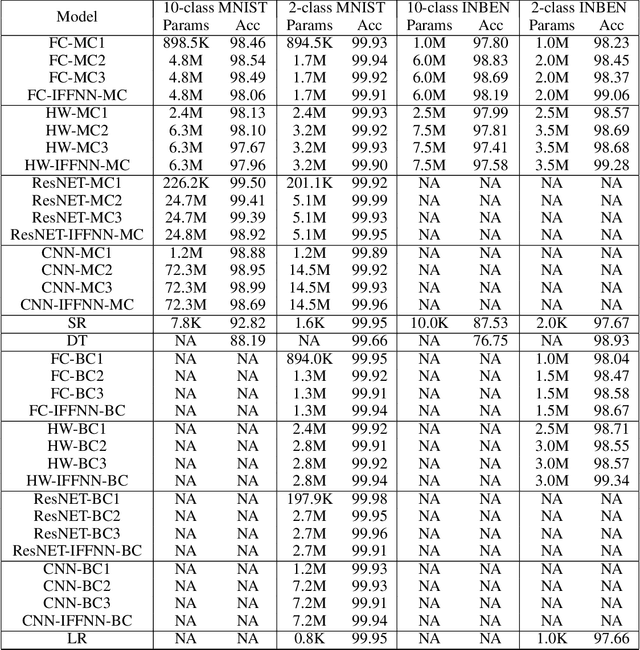

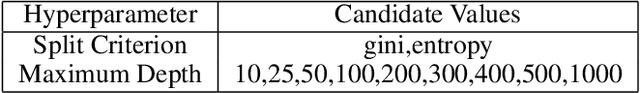

Abstract:Deep learning models have achieved state-of-the-art performance in many classification tasks. However, most of them cannot provide an interpretation for their classification results. Machine learning models that are interpretable are usually linear or piecewise linear and yield inferior performance. Non-linear models achieve much better classification performance, but it is hard to interpret their classification results. This may have changed with the proposal of an interpretable feedforward neural network (IFFNN) that achieves both high classification performance and interpretability for malware detection. If the IFFNN can perform well in a more flexible and general form for other classification tasks while providing meaningful interpretations, it may be of great interest to the applied machine learning community. In this paper, we propose a way to generalize the interpretable feedforward neural network to multi-class classification scenarios and to any type of feedforward neural network, and we evaluate its classification performance and interpretability on intrinsically interpretable datasets. We find that the generalized IFFNNs achieve classification performance comparable to that of their normal feedforward neural network counterparts and provide meaningful interpretations. Thus, this kind of neural network architecture has great practical use.

I-MAD: A Novel Interpretable Malware Detector Using Hierarchical Transformer

Sep 26, 2019

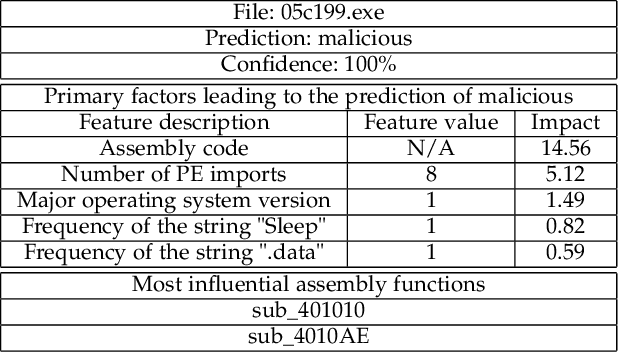

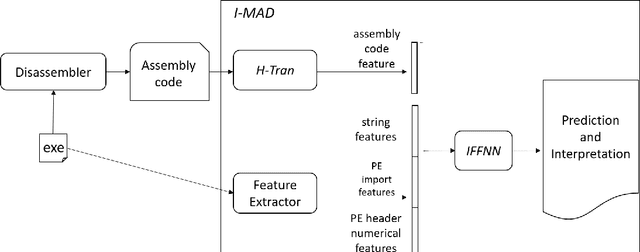

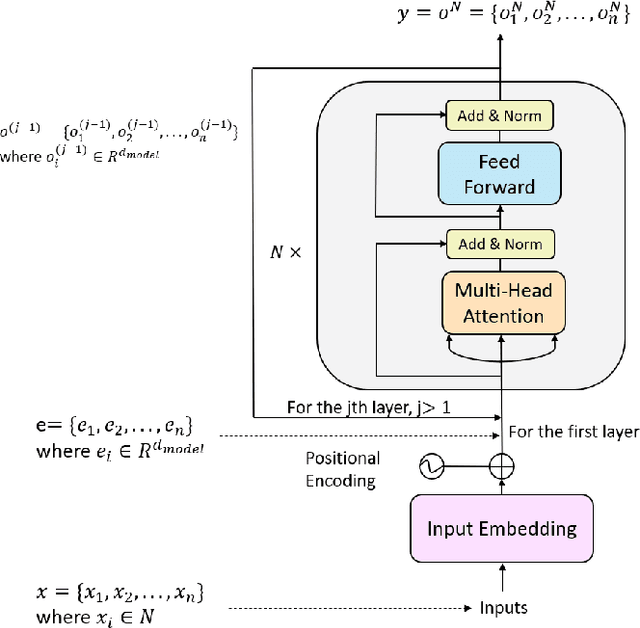

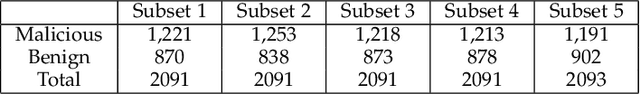

Abstract:Malware poses a tremendous threat to computer users. Since signature-based malware detection methods are neither effective nor efficient at identifying new malware, many machine learning-based methods have been proposed. A common disadvantage of existing machine learning methods is that they are not based on understanding the full semantic meaning of the assembly code of an executable. Instead, they use short assembly code fragments, because assembly code is usually too long to be modelled in its entirety. Another disadvantage is that those methods have either inferior performance or poor interpretability. To overcome these challenges, we propose an Interpretable MAlware Detector (I-MAD), which achieves state-of-the-art performance on static malware detection with excellent interpretability. It integrates a hierarchical Transformer network that can understand assembly code at the basic block, function, and executable level. It also integrates our novel interpretable feed-forward neural network to provide interpretations for its detection results by pointing out the impact of each feature with respect to the prediction. Experiment results show that our model significantly outperforms previous state-of-the-art static malware detection models and presents meaningful interpretations.
