Michalis Vazirgiannis

Ecole Polytechnique, AUEB

Neural Architecture Search with Multimodal Fusion Methods for Diagnosing Dementia

Feb 12, 2023

Abstract: Alzheimer's dementia (AD) affects memory, thinking, and language, deteriorating a person's life. An early diagnosis is very important, as it enables the person to receive medical help and ensures quality of life. Therefore, leveraging spontaneous speech in conjunction with machine learning methods for recognizing AD patients has emerged as a hot topic. Most previous works employ Convolutional Neural Networks (CNNs) to process the input signal. However, finding a CNN architecture is a time-consuming process and requires domain expertise. Moreover, researchers either introduce early and late fusion approaches for fusing different modalities or concatenate the representations of the different modalities during training; thus, the inter-modal interactions are not captured. To tackle these limitations, we first exploit a Neural Architecture Search (NAS) method to automatically find a high-performing CNN architecture. Next, we exploit several fusion methods, including Multimodal Factorized Bilinear Pooling and Tucker Decomposition, to combine the speech and text modalities. To the best of our knowledge, there is no prior work exploiting a NAS approach and these fusion methods in the task of dementia detection from spontaneous speech. We perform extensive experiments on the ADReSS Challenge dataset and show the effectiveness of our approach over state-of-the-art methods.
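To make the fusion step more concrete, here is a minimal sketch of Multimodal Factorized Bilinear (MFB) pooling in its commonly described form: both modality vectors are projected, multiplied element-wise, and sum-pooled over windows of size `k`, followed by power and L2 normalisation. The function names, the plain-list matrices `U` and `V`, and the toy dimensions are assumptions made for illustration; the abstract does not specify the paper's actual configuration.

```python
import math

def matvec(matrix, vector):
    """Multiply a row-major matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def mfb_pool(x, y, U, V, k):
    """Fuse x and y into a vector of length len(U) // k.

    U has shape (o*k, len(x)) and V has shape (o*k, len(y)); both are
    hypothetical projection weights that would normally be learned.
    """
    projected_x = matvec(U, x)
    projected_y = matvec(V, y)
    # Element-wise product captures multiplicative inter-modal interactions.
    joint = [a * b for a, b in zip(projected_x, projected_y)]
    # Sum-pool over non-overlapping windows of size k.
    return [sum(joint[i:i + k]) for i in range(0, len(joint), k)]

def power_l2_normalise(z, eps=1e-12):
    """Signed square root followed by L2 normalisation."""
    signed_sqrt = [math.copysign(math.sqrt(abs(v)), v) for v in z]
    norm = math.sqrt(sum(v * v for v in signed_sqrt))
    return [v / (norm + eps) for v in signed_sqrt]

# Toy example: a 2-dim "speech" vector and a 1-dim "text" vector, o = 2, k = 2.
speech = [1.0, 2.0]
text = [3.0]
U = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [1.0, -1.0]]
V = [[1.0], [1.0], [2.0], [0.0]]
fused = power_l2_normalise(mfb_pool(speech, text, U, V, k=2))
```

Unlike plain concatenation, every output coordinate here depends on products of speech and text features, which is the kind of inter-modal interaction the abstract says concatenation misses.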

Explainable Multilayer Graph Neural Network for Cancer Gene Prediction

Jan 20, 2023

Abstract: The identification of cancer genes is a critical, yet challenging problem in cancer genomics research. Recently, several computational methods have been developed to address this issue, including deep neural networks. However, these methods fail to exploit the multilayered gene-gene interactions and provide little to no explanation for their predictions. Results: In this study, we propose an Explainable Multilayer Graph Neural Network (EMGNN) approach to identify cancer genes, by leveraging multiple gene-gene interaction networks and multi-omics data. Compared to conventional graph learning methods, EMGNN learned complementary information in multiple graphs to accurately predict cancer genes. Our method consistently outperforms existing approaches while providing valuable biological insights into its predictions. We further release our novel cancer gene predictions and connect them with known cancer patterns, aiming to accelerate the progress of cancer research.

New Frontiers in Graph Autoencoders: Joint Community Detection and Link Prediction

Nov 16, 2022

Abstract: Graph autoencoders (GAE) and variational graph autoencoders (VGAE) emerged as powerful methods for link prediction (LP). Their performance is less impressive on community detection (CD), where they are often outperformed by simpler alternatives such as the Louvain method. It is still unclear to what extent one can improve CD with GAE and VGAE, especially in the absence of node features. It is moreover uncertain whether one could do so while simultaneously preserving good performance on LP in a multi-task setting. In this workshop paper, summarizing results from our journal publication (Salha-Galvan et al. 2022), we show that jointly addressing these two tasks with high accuracy is possible. For this purpose, we introduce a community-preserving message passing scheme, doping our GAE and VGAE encoders by considering both the initial graph and Louvain-based prior communities when computing embedding spaces. Inspired by modularity-based clustering, we further propose novel training and optimization strategies specifically designed for joint LP and CD. We demonstrate the empirical effectiveness of our approach, referred to as Modularity-Aware GAE and VGAE, on various real-world graphs.
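As background for the modularity-based ideas mentioned above, the following sketch computes the standard Newman modularity Q of a given partition, which is the quantity the Louvain method greedily maximises. It is not the paper's training objective; the function name and the toy graph are assumptions for illustration only.

```python
from collections import defaultdict

def modularity(edges, community):
    """Newman modularity of an undirected, unweighted graph without self-loops.

    edges: list of (u, v) pairs, each undirected edge listed once.
    community: dict mapping every node to a community label.
    """
    m = len(edges)
    if m == 0:
        return 0.0
    intra = defaultdict(int)    # number of edges inside each community
    degree = defaultdict(int)   # sum of node degrees in each community
    for u, v in edges:
        cu, cv = community[u], community[v]
        degree[cu] += 1
        degree[cv] += 1
        if cu == cv:
            intra[cu] += 1
    # Q = sum over communities of (intra-edge fraction - expected fraction).
    return sum(intra[c] / m - (degree[c] / (2 * m)) ** 2 for c in degree)

# Two triangles joined by a single bridge edge (2, 3).
edges = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5), (2, 3)]
two_groups = {0: "a", 1: "a", 2: "a", 3: "b", 4: "b", 5: "b"}
one_group = {node: "all" for node in range(6)}
q_split = modularity(edges, two_groups)
q_merged = modularity(edges, one_group)
```

Splitting the graph at the bridge yields a higher Q than placing all nodes in one community, which matches the intuition that modularity rewards densely connected groups.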

Improving Graph Neural Networks at Scale: Combining Approximate PageRank and CoreRank

Nov 08, 2022

Abstract: Graph Neural Networks (GNNs) have achieved great success in many learning tasks performed on graph structures. Nonetheless, to propagate information, GNNs rely on a message passing scheme, which can become prohibitively expensive when working with industrial-scale graphs. Inspired by the PPRGo model, we propose the CorePPR model, a scalable solution that utilises a learnable convex combination of the approximate personalised PageRank and the CoreRank to diffuse multi-hop neighbourhood information in GNNs. Additionally, we incorporate a dynamic mechanism to select the most influential neighbours for a particular node, which reduces training time while preserving the performance of the model. Overall, we demonstrate that CorePPR outperforms PPRGo, especially on large graphs, where selecting the most influential nodes is particularly relevant for scalability. Our code is publicly available at: https://github.com/arielramos97/CorePPR.
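The idea of a learnable convex combination followed by neighbour selection can be sketched as follows. This is an illustration under stated assumptions, not the released CorePPR code: the sigmoid parameterisation of the mixing weight, the sum-to-one normalisation, the fixed `k`, and all function names are hypothetical, and the input scores are assumed to be precomputed.

```python
import math

def normalise(scores):
    """Rescale non-negative scores so they sum to 1 (assumed preprocessing)."""
    total = sum(scores.values())
    return {v: s / total for v, s in scores.items()} if total > 0 else dict(scores)

def convex_combine(ppr, corerank, theta):
    """Mix two score dicts with weight alpha = sigmoid(theta) in (0, 1).

    theta is an unconstrained scalar that a training loop could update;
    passing it through a sigmoid keeps the combination convex.
    """
    alpha = 1.0 / (1.0 + math.exp(-theta))
    nodes = set(ppr) | set(corerank)
    return {v: alpha * ppr.get(v, 0.0) + (1.0 - alpha) * corerank.get(v, 0.0)
            for v in nodes}

def top_k(scores, k):
    """Keep the k highest-scoring neighbours (ties broken by node name)."""
    return sorted(scores, key=lambda v: (-scores[v], v))[:k]

# Hypothetical precomputed scores for the neighbourhood of one target node.
ppr_scores = normalise({"a": 0.6, "b": 0.4})
core_scores = normalise({"b": 1.0, "c": 1.0})
mixed = convex_combine(ppr_scores, core_scores, theta=0.0)  # alpha = 0.5
selected = top_k(mixed, k=2)
```

Because both inputs sum to 1 and the weights are `alpha` and `1 - alpha`, the mixed scores also sum to 1, so they can be used directly as diffusion weights over the selected neighbours.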

Weisfeiler and Leman go Hyperbolic: Learning Distance Preserving Node Representations

Nov 04, 2022

Abstract: In recent years, graph neural networks (GNNs) have emerged as a promising tool for solving machine learning problems on graphs. Most GNNs are members of the family of message passing neural networks (MPNNs). There is a close connection between these models and the Weisfeiler-Leman (WL) test of isomorphism, an algorithm that can successfully test isomorphism for a broad class of graphs. Recently, much research has focused on measuring the expressive power of GNNs. For instance, it has been shown that standard MPNNs are at most as powerful as WL in terms of distinguishing non-isomorphic graphs. However, these studies have largely ignored the distances between the representations of nodes/graphs, which are of paramount importance for learning tasks. In this paper, we define a distance function between nodes which is based on the hierarchy produced by the WL algorithm, and propose a model that learns representations which preserve those distances between nodes. Since the emerging hierarchy corresponds to a tree, to learn these representations, we capitalize on recent advances in the field of hyperbolic neural networks. We empirically evaluate the proposed model on standard node and graph classification datasets where it achieves competitive performance with state-of-the-art models.

Questioning the Validity of Summarization Datasets and Improving Their Factual Consistency

Oct 31, 2022

Abstract: The topic of summarization evaluation has recently attracted a surge of attention due to the rapid development of abstractive summarization systems. However, the formulation of the task is rather ambiguous: neither the linguistic nor the natural language processing community has succeeded in giving a mutually agreed-upon definition. Due to this lack of a well-defined formulation, a large number of popular abstractive summarization datasets are constructed in a manner that neither guarantees validity nor meets one of the most essential criteria of summarization: factual consistency. In this paper, we address this issue by combining state-of-the-art factual consistency models to identify the problematic instances present in popular summarization datasets. We release SummFC, a filtered summarization dataset with improved factual consistency, and demonstrate that models trained on this dataset achieve improved performance in nearly all quality aspects. We argue that our dataset should become a valid benchmark for developing and evaluating summarization systems.

DATScore: Evaluating Translation with Data Augmented Translations

Oct 12, 2022

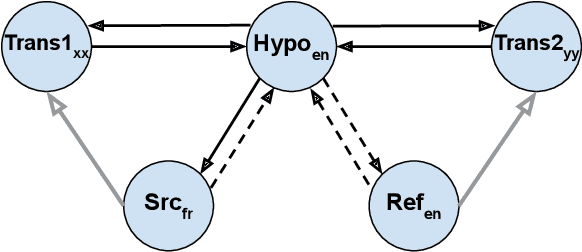

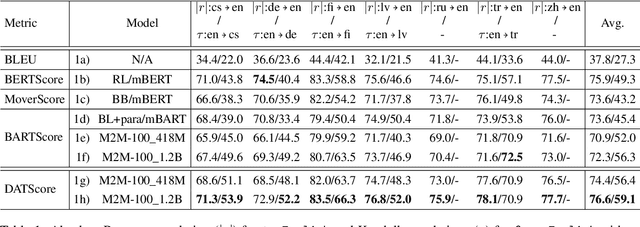

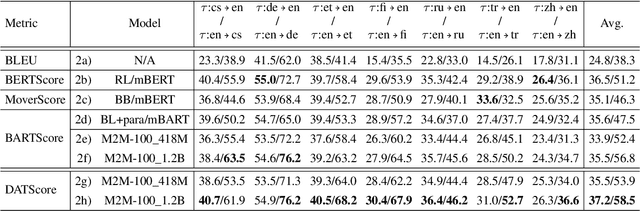

Abstract: The rapid development of large pretrained language models has revolutionized not only the field of Natural Language Generation (NLG) but also its evaluation. Inspired by the recent work on BARTScore, a metric leveraging the BART language model to evaluate the quality of generated text from various aspects, we introduce DATScore. DATScore uses data augmentation techniques to improve the evaluation of machine translation. Our main finding is that introducing data augmented translations of the source and reference texts is greatly helpful in evaluating the quality of the generated translation. We also propose two novel score averaging and term weighting strategies to improve the original score computing process of BARTScore. Experimental results on WMT show that DATScore correlates better with human meta-evaluations than the other recent state-of-the-art metrics, especially for low-resource languages. Ablation studies demonstrate the value added by our new scoring strategies. Moreover, we report in our extended experiments the performance of DATScore on 3 NLG tasks other than translation.

Word Sense Induction with Hierarchical Clustering and Mutual Information Maximization

Oct 11, 2022

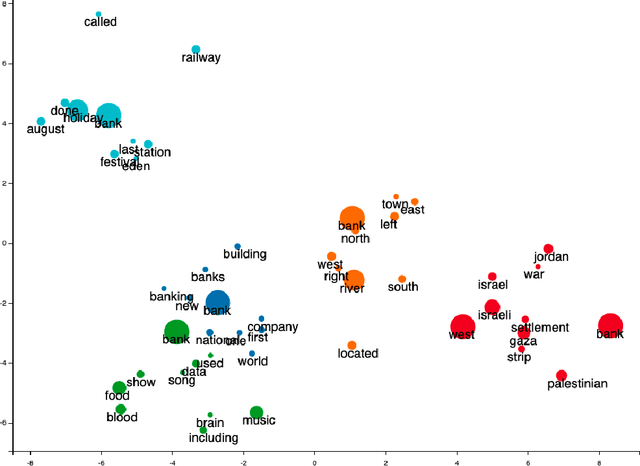

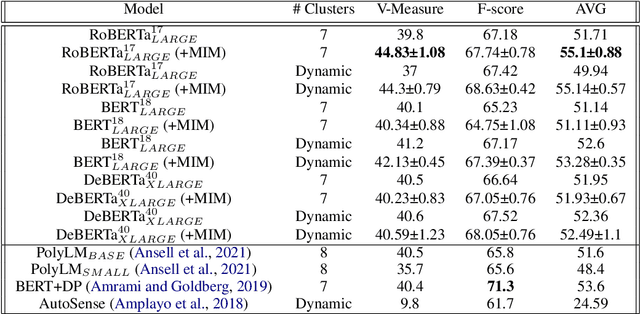

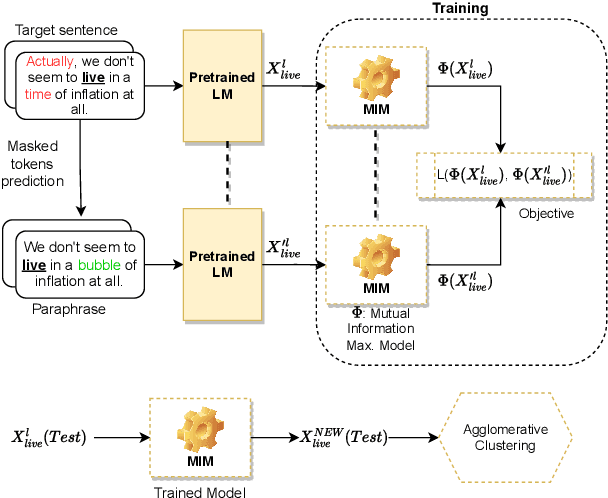

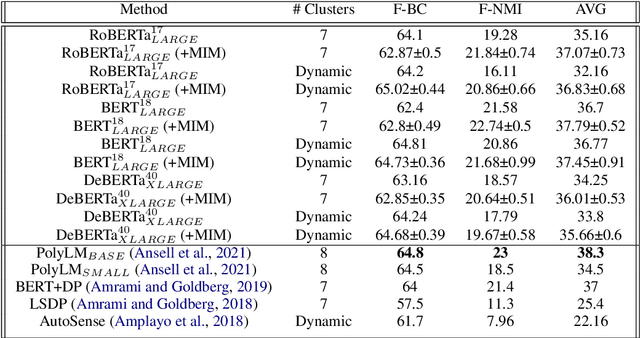

Abstract: Word sense induction (WSI) is a difficult problem in natural language processing that involves the unsupervised automatic detection of a word's senses (i.e. meanings). Recent work achieves significant results on the WSI task by pre-training a language model that can exclusively disambiguate word senses, whereas others employ previously pre-trained language models in conjunction with additional strategies to induce senses. In this paper, we propose a novel unsupervised method based on hierarchical clustering and invariant information clustering (IIC). The IIC is used to train a small model to optimize the mutual information between two vector representations of a target word occurring in a pair of synthetic paraphrases. This model is later used in inference mode to extract a higher quality vector representation to be used in the hierarchical clustering. We evaluate our method on two WSI tasks and in two distinct clustering configurations (fixed and dynamic number of clusters). We empirically demonstrate that, in certain cases, our approach outperforms prior WSI state-of-the-art methods, while in others, it achieves competitive performance.
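For readers unfamiliar with invariant information clustering, the sketch below computes the mutual information that IIC maximises, using the usual formulation: the soft cluster assignments of paired inputs are averaged into a joint distribution, the joint is symmetrised, and the mutual information of that matrix is evaluated. The function name, the plain-list inputs, and the toy assignments are assumptions for illustration; how the paper's small model produces these assignments is not specified in the abstract.

```python
import math

def iic_mutual_information(probs_a, probs_b, eps=1e-12):
    """Mutual information between paired soft cluster assignments.

    probs_a[i] and probs_b[i] are probability vectors over the same k
    clusters for the two views of pair i (e.g. a word in two paraphrases).
    """
    n = len(probs_a)
    k = len(probs_a[0])
    # Joint distribution: average outer product over all pairs.
    joint = [[0.0] * k for _ in range(k)]
    for pa, pb in zip(probs_a, probs_b):
        for i in range(k):
            for j in range(k):
                joint[i][j] += pa[i] * pb[j] / n
    # Symmetrise, since the order of the two views is arbitrary.
    joint = [[(joint[i][j] + joint[j][i]) / 2.0 for j in range(k)] for i in range(k)]
    row = [sum(joint[i]) for i in range(k)]
    col = [sum(joint[i][j] for i in range(k)) for j in range(k)]
    mi = 0.0
    for i in range(k):
        for j in range(k):
            p = joint[i][j]
            if p > eps:  # skip zero cells, whose contribution is 0
                mi += p * math.log(p / (row[i] * col[j]))
    return mi

# Confident, consistent, balanced assignments give the maximum log(k).
consistent = [[1.0, 0.0], [0.0, 1.0]]
mi_consistent = iic_mutual_information(consistent, consistent)
# Uninformative assignments give zero mutual information.
uniform = [[0.5, 0.5], [0.5, 0.5]]
mi_uniform = iic_mutual_information(uniform, uniform)
```

Maximising this quantity pushes the model toward assignments that agree across the two views of a pair while keeping the clusters balanced, which is why degenerate single-cluster solutions score zero.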

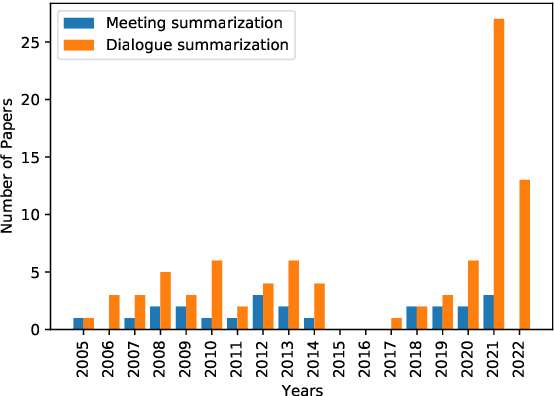

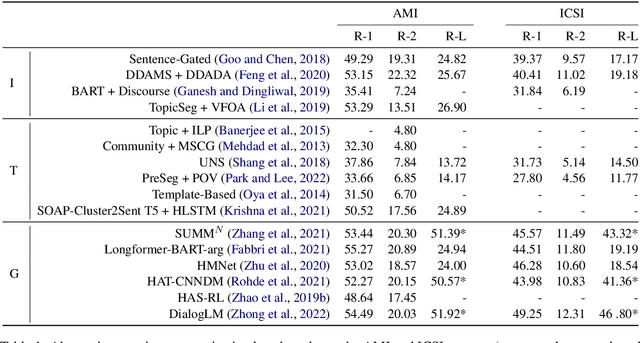

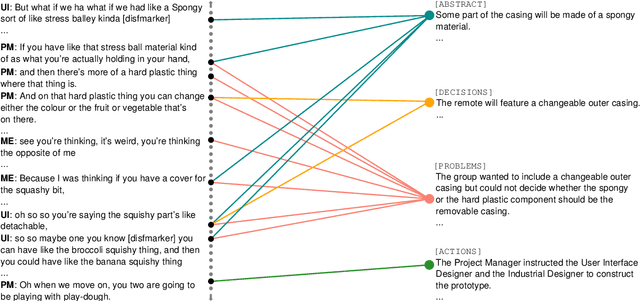

Abstractive Meeting Summarization: A Survey

Aug 08, 2022

Abstract: Recent advances in deep learning, and especially the invention of encoder-decoder architectures, have significantly improved the performance of abstractive summarization systems. While the majority of research has focused on written documents, we have observed an increasing interest in the summarization of dialogues and multi-party conversations over the past few years. A system that could reliably transform the audio or transcript of a human conversation into an abridged version that homes in on the most important points of the discussion would be valuable in a wide variety of real-world contexts, from business meetings to medical consultations to customer service calls. This paper focuses on abstractive summarization for multi-party meetings, providing a survey of the challenges, datasets and systems relevant to this task and a discussion of promising directions for future study.

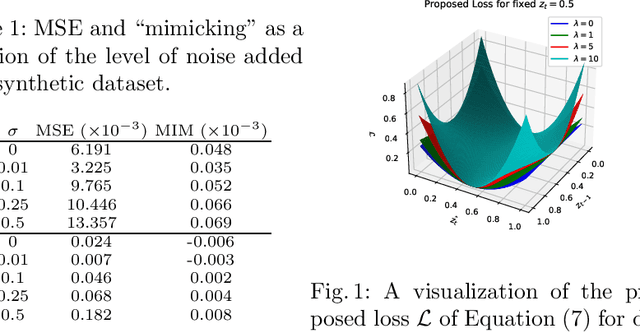

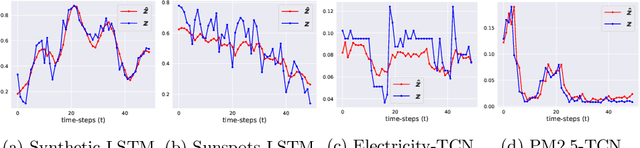

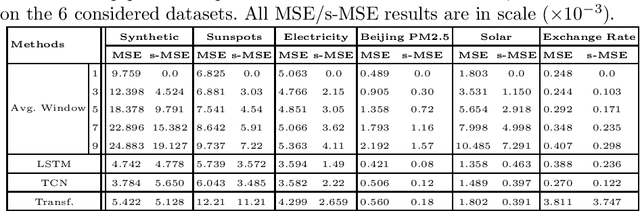

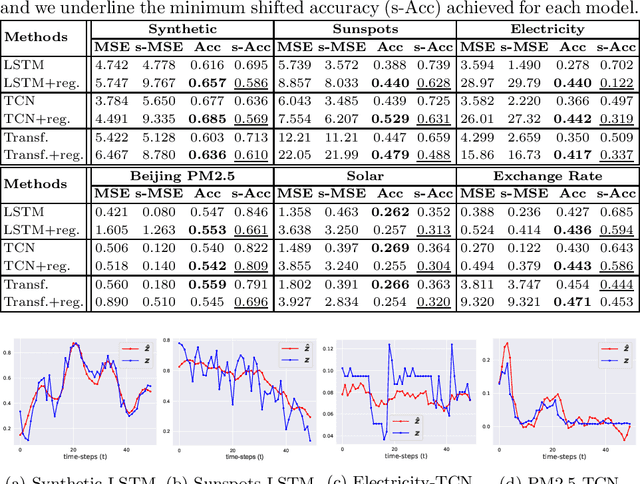

Time Series Forecasting Models Copy the Past: How to Mitigate

Jul 27, 2022

Abstract: Time series forecasting is at the core of important application domains, posing significant challenges to machine learning algorithms. Recently, neural network architectures have been widely applied to the problem of time series forecasting. Most of these models are trained by minimizing a loss function that measures the deviation of predictions from the real values. Typical loss functions include mean squared error (MSE) and mean absolute error (MAE). In the presence of noise and uncertainty, neural network models tend to replicate the last observed value of the time series, thus limiting their applicability to real-world data. In this paper, we provide a formal definition of the above problem and we also give some examples of forecasts where the problem is observed. We also propose a regularization term penalizing the replication of previously seen values. We evaluate the proposed regularization term both on synthetic and real-world datasets. Our results indicate that the regularization term mitigates to some extent the aforementioned problem and gives rise to more robust models.
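The general shape of such a regularised objective can be sketched as a standard error term plus a penalty that grows when a forecast sits close to the last observed value. The abstract does not give the paper's formula, so the Gaussian-shaped penalty, the `lam` and `scale` parameters, and all function names below are hypothetical choices made purely for illustration.

```python
import math

def mse(preds, targets):
    """Mean squared error between forecasts and true values."""
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(preds)

def copy_penalty(preds, last_values, scale=1.0):
    """Hypothetical penalty in (0, 1]: equals 1 when a forecast exactly
    repeats the last observed value and decays as it moves away."""
    return sum(math.exp(-((p - l) / scale) ** 2)
               for p, l in zip(preds, last_values)) / len(preds)

def regularised_loss(preds, targets, last_values, lam=0.1, scale=1.0):
    """MSE plus lam times the copy penalty (lam trades accuracy vs. copying)."""
    return mse(preds, targets) + lam * copy_penalty(preds, last_values, scale)

# Two one-step forecasts for series whose last observed values were 1.0 and 2.0.
last_seen = [1.0, 2.0]
truth = [1.5, 2.5]
copying = regularised_loss([1.0, 2.0], truth, last_seen)       # repeats the past
informed = regularised_loss([1.4, 2.4], truth, last_seen)      # moves toward truth
```

One trade-off of a penalty of this kind is that it also discourages correct forecasts when the series genuinely stays flat, so the weight `lam` would need to be tuned on validation data.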
