Mauro Pezzè

Get on the Train or be Left on the Station: Using LLMs for Software Engineering Research

Jun 15, 2025

Abstract: The adoption of Large Language Models (LLMs) is not only transforming software engineering (SE) practice but is also poised to fundamentally disrupt how research is conducted in the field. While perspectives on this transformation range from viewing LLMs as mere productivity tools to considering them revolutionary forces, we argue that the SE research community must proactively engage with and shape the integration of LLMs into research practices, emphasizing human agency in this transformation. As LLMs rapidly become integral to SE research - both as tools that support investigations and as subjects of study - a human-centric perspective is essential. Ensuring human oversight and interpretability is necessary for upholding scientific rigor, fostering ethical responsibility, and driving advancements in the field. Drawing from discussions at the 2nd Copenhagen Symposium on Human-Centered AI in SE, this position paper employs McLuhan's Tetrad of Media Laws to analyze the impact of LLMs on SE research. Through this theoretical lens, we examine how LLMs enhance research capabilities through accelerated ideation and automated processes, make some traditional research practices obsolete, retrieve valuable aspects of historical research approaches, and risk reversal effects when taken to extremes. Our analysis reveals opportunities for innovation and potential pitfalls that require careful consideration. We conclude with a call to action for the SE research community to proactively harness the benefits of LLMs while developing frameworks and guidelines to mitigate their risks, to ensure continued rigor and impact of research in an AI-augmented future.

On Introducing Automatic Test Case Generation in Practice: A Success Story and Lessons Learned

Feb 28, 2021

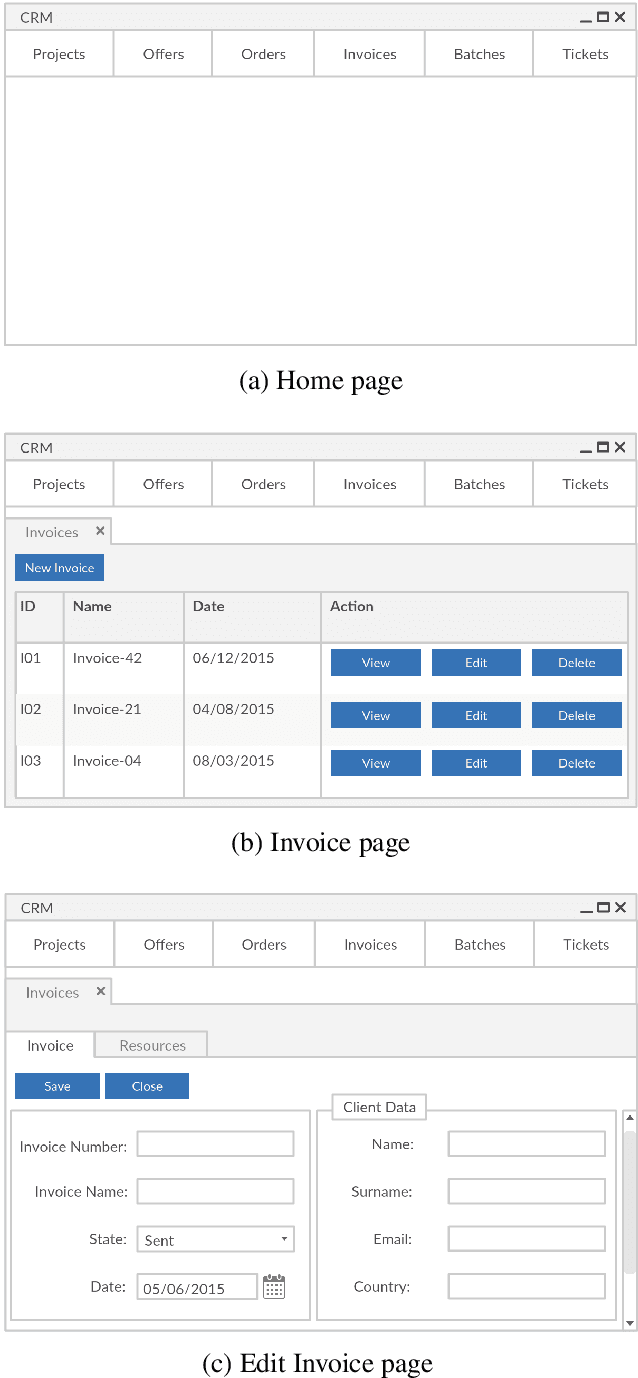

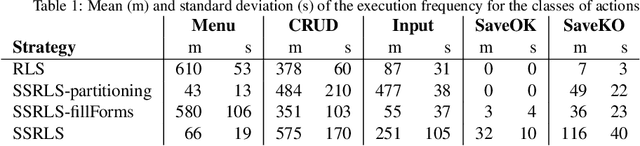

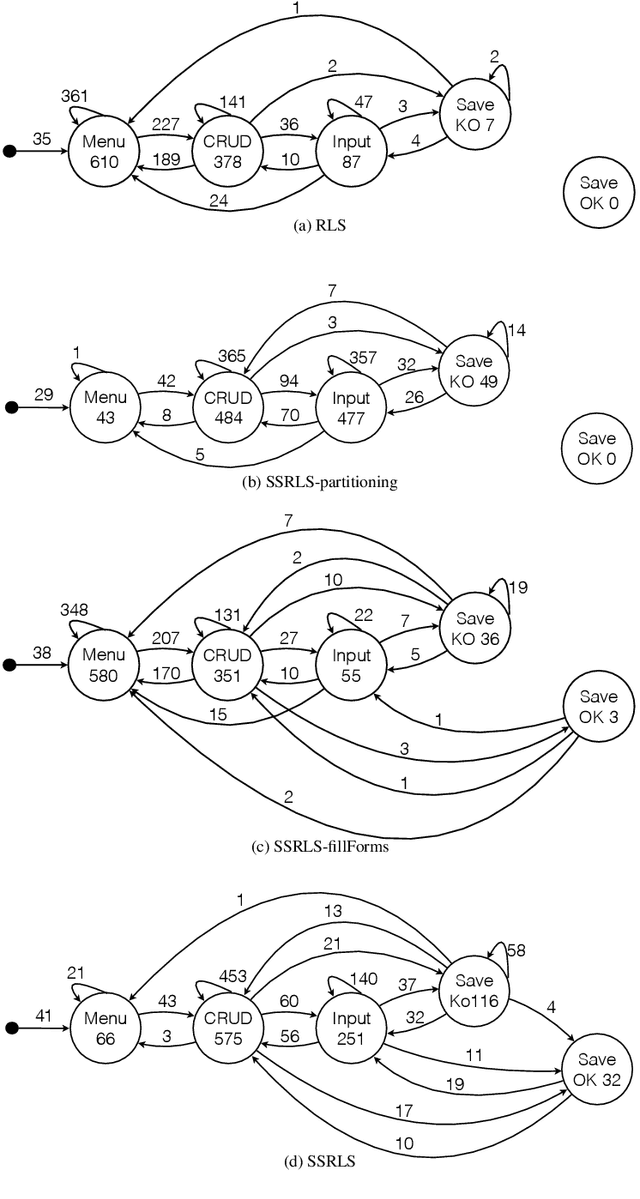

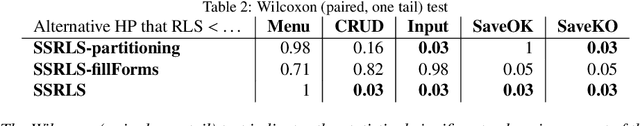

Abstract: The level and quality of automation dramatically affect software testing activities, determine the costs and effectiveness of the testing process, and largely impact the quality of the final product. While the costs and benefits of automating many testing activities in industrial practice (including managing the quality process, executing large test suites, and managing regression test suites) are well understood and documented, the benefits and obstacles of automatically generating system test suites in industrial practice are not yet well reported, despite recent progress in automated test case generation tools. Proprietary tools for automatically generating test cases are becoming common practice in large software organisations, and commercial tools are becoming available for some application domains and testing levels. However, generating system test cases in small and medium-size software companies is still largely a manual, inefficient and ad-hoc activity. This paper reports our experience in introducing techniques for automatically generating system test suites in a medium-size company. We describe the technical and organisational obstacles that we faced when introducing automatic test case generation in the development process of the company, and present the solutions that we successfully applied in that context. In particular, the paper discusses the problems of automating the generation of test cases by referring to a customised ERP application that the medium-size company developed for a third-party multinational company, and presents ABT2.0, the test case generator that we developed by tailoring ABT, a state-of-the-art research GUI test generator, to their industrial environment. This paper presents the new features of ABT2.0, and discusses how these new features address the issues that we faced.
