Martin Fleming

How Much Progress Has There Been in NVIDIA Datacenter GPUs?

Jan 27, 2026

Abstract: Graphics Processing Units (GPUs) are the state-of-the-art architecture for essential tasks, ranging from rendering 2D/3D graphics to accelerating workloads in supercomputing centers and, of course, Artificial Intelligence (AI). As GPUs continue improving to satisfy ever-increasing performance demands, analyzing past and current progress becomes paramount in determining future constraints on scientific research. This is particularly compelling in the AI domain, where rapid technological advancements and fierce global competition have led the United States to recently implement export control regulations limiting international access to advanced AI chips. For this reason, this paper studies technical progress in NVIDIA datacenter GPUs released from the mid-2000s until today. Specifically, we compile a comprehensive dataset of datacenter NVIDIA GPUs comprising several features, ranging from computational performance to release price. Then, we examine trends in main GPU features and estimate progress indicators for per-memory bandwidth, per-dollar, and per-watt increase rates. Our main results identify doubling times of 1.44 and 1.69 years for FP16 and FP32 operations (without accounting for sparsity benefits), while FP64 doubling times range from 2.06 to 3.79 years. Off-chip memory size and bandwidth grew at slower rates than computing performance, doubling every 3.32 to 3.53 years. The release prices of datacenter GPUs have roughly doubled every 5.1 years, while their power consumption has approximately doubled every 16 years. Finally, we quantify the potential implications of current U.S. export control regulations in terms of the potential performance gaps that would result if implementation were assumed to be complete and successful. We find that recently proposed changes to export controls would shrink the potential performance gap from 23.6x to 3.54x.
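To make the doubling times quoted in the abstract easier to compare, the sketch below converts a doubling time into an equivalent annual growth factor and back. It assumes smooth exponential growth, which is a simplification and not necessarily how the paper fits its trends; the function names `annual_growth_factor` and `doubling_time` are hypothetical helpers introduced only for illustration.

```python
import math


def annual_growth_factor(doubling_time_years: float) -> float:
    """Yearly multiplier implied by a doubling time, assuming exponential growth."""
    return 2.0 ** (1.0 / doubling_time_years)


def doubling_time(annual_factor: float) -> float:
    """Inverse conversion: years needed to double at a given yearly multiplier."""
    return math.log(2.0) / math.log(annual_factor)


if __name__ == "__main__":
    # Doubling times (in years) as quoted in the abstract.
    quoted = {
        "FP16 operations": 1.44,
        "FP32 operations": 1.69,
        "release price": 5.1,
        "power consumption": 16.0,
    }
    for feature, years in quoted.items():
        factor = annual_growth_factor(years)
        print(f"{feature}: x{factor:.3f} per year")
```

Read this way, a shorter doubling time corresponds to a larger yearly multiplier, which is why compute throughput outpaces price and power consumption in the figures above.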

Learning Occupational Task-Shares Dynamics for the Future of Work

Jan 28, 2020

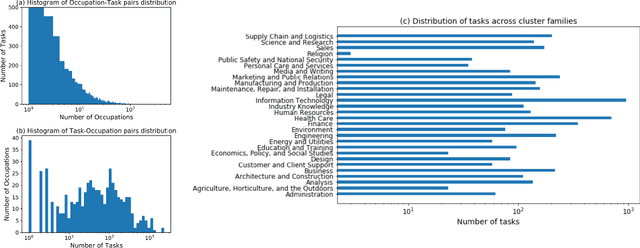

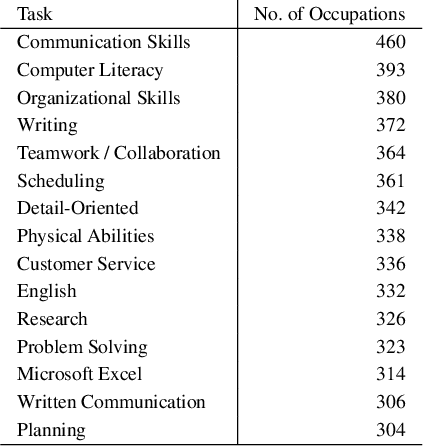

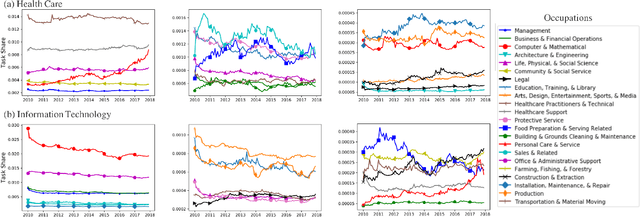

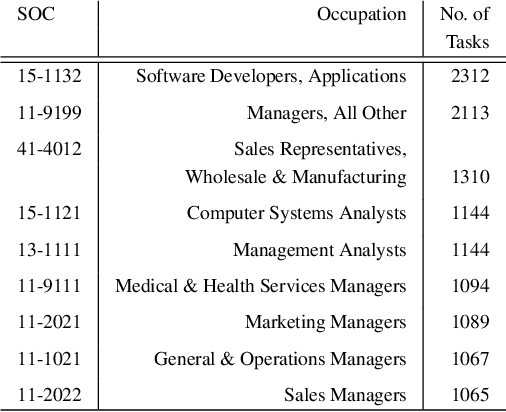

Abstract: The recent wave of AI and automation has been argued to differ from previous General Purpose Technologies (GPTs), in that it may lead to rapid change in occupations' underlying task requirements and persistent technological unemployment. In this paper, we apply a novel methodology of dynamic task shares to a large dataset of online job postings to explore how exactly occupational task demands have changed over the past decade of AI innovation, especially across high, mid and low wage occupations. Notably, big data and AI have risen significantly among high wage occupations since 2012 and 2016, respectively. We built an ARIMA model to predict future occupational task demands and showcase several relevant examples in Healthcare, Administration, and IT. Such task demands predictions across occupations will play a pivotal role in retraining the workforce of the future.
