Marta Maslej

A Fully Generative Motivational Interviewing Counsellor Chatbot for Moving Smokers Towards the Decision to Quit

May 26, 2025

Abstract: The conversational capabilities of Large Language Models (LLMs) suggest that they may be able to perform as automated talk therapists. It is crucial to know if these systems would be effective and adhere to known standards. We present a counsellor chatbot that focuses on motivating tobacco smokers to quit smoking. It uses a state-of-the-art LLM and a widely applied therapeutic approach called Motivational Interviewing (MI), and was evolved in collaboration with clinician-scientists with expertise in MI. We also describe and validate an automated assessment of both the chatbot's adherence to MI and client responses. The chatbot was tested on 106 participants, and their confidence that they could succeed in quitting smoking was measured before the conversation and one week later. Participants' confidence increased by an average of 1.7 on a 0-10 scale. The automated assessment of the chatbot showed adherence to MI standards in 98% of utterances, higher than human counsellors. The chatbot scored well on a participant-reported metric of perceived empathy but lower than typical human counsellors. Furthermore, participants' language indicated a good level of motivation to change, a key goal in MI. These results suggest that the automation of talk therapy with a modern LLM has promise.

Transfer Learning for Risk Classification of Social Media Posts: Model Evaluation Study

Jul 10, 2019

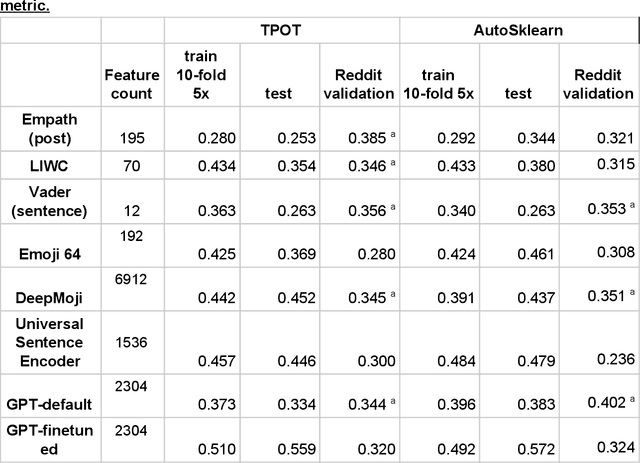

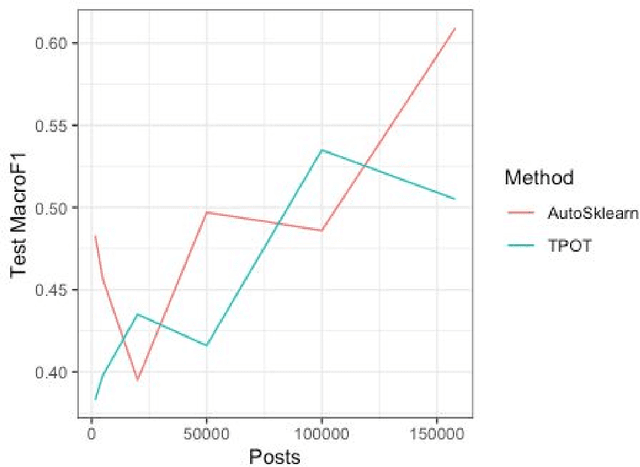

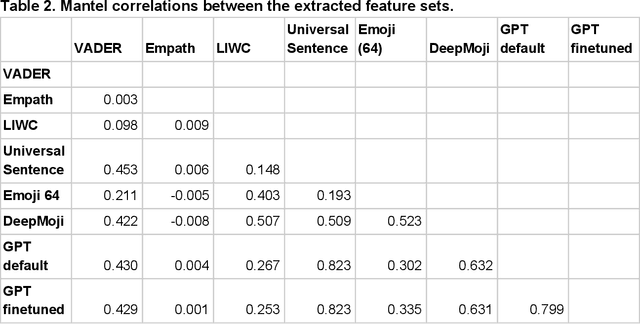

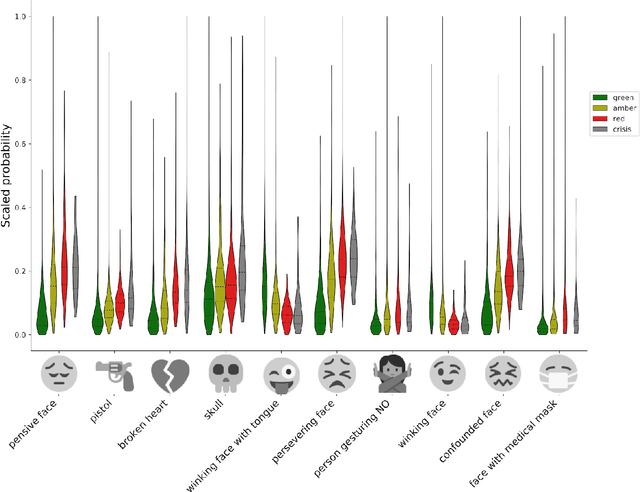

Abstract: Mental illness affects a significant portion of the worldwide population. Online mental health forums can provide a supportive environment for those afflicted and also generate a large amount of data which can be mined to predict mental health states using machine learning methods. We benchmark multiple methods of text feature representation for social media posts and compare their downstream use with automated machine learning (AutoML) tools to triage content for moderator attention. We used 1588 labeled posts from the CLPsych 2017 shared task collected from the Reachout.com forum (Milne et al., 2019). Posts were represented using lexicon-based tools, including VADER, Empath, and LIWC, and using pre-trained artificial neural network models, including DeepMoji, Universal Sentence Encoder, and GPT-1. We used TPOT and auto-sklearn as AutoML tools to generate classifiers to triage the posts. The top-performing system used features derived from the GPT-1 model, which was finetuned on over 150,000 unlabeled posts from Reachout.com. Our top system had a macro-averaged F1 score of 0.572, providing a new state-of-the-art result on the CLPsych 2017 task. This was achieved without additional information from meta-data or preceding posts. Error analyses revealed that this top system often misses expressions of hopelessness. We additionally present visualizations that aid understanding of the learned classifiers. We show that transfer learning is an effective strategy for predicting risk with relatively little labeled data. We note that finetuning of pretrained language models provides further gains when large amounts of unlabeled text are available.
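For readers unfamiliar with the metric reported above, the following is a minimal sketch of how a macro-averaged F1 score can be computed: F1 is calculated per class and the per-class scores are averaged without weighting, so rare classes count as much as common ones. The label names and the toy predictions are illustrative assumptions, not data from the study, and the set of labels the shared task averages over is not stated in the abstract.

```python
from collections import Counter


def macro_f1(y_true, y_pred, labels):
    """Unweighted mean of per-class F1 over the given labels."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted class gains a false positive
            fn[truth] += 1  # true class gains a false negative
    scores = []
    for label in labels:
        # F1 = 2*TP / (2*TP + FP + FN); defined as 0 when the class never appears
        denom = 2 * tp[label] + fp[label] + fn[label]
        scores.append(2 * tp[label] / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Hypothetical triage labels and predictions, for illustration only.
y_true = ["green", "green", "amber", "red", "crisis", "amber"]
y_pred = ["green", "amber", "amber", "red", "red", "amber"]
score = macro_f1(y_true, y_pred, ["green", "amber", "red", "crisis"])
print(round(score, 3))
```

Because the average is unweighted, the single missed "crisis" post in this toy example pulls the score down sharply, which is why the metric suits triage settings where the rarest class matters most.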
