Marta Guimaraes

Keep Calm and Avoid Harmful Content: Concept Alignment and Latent Manipulation Towards Safer Answers

Oct 14, 2025

Abstract: Large Language Models are susceptible to jailbreak attacks that bypass built-in safety guardrails (e.g., by tricking the model with adversarial prompts). We propose Concept Alignment and Concept Manipulation (CALM), an inference-time method that suppresses harmful concepts by modifying latent representations of the last layer of the model, without retraining. Leveraging the Concept Whitening (CW) technique from Computer Vision combined with orthogonal projection, CALM removes unwanted latent directions associated with harmful content while preserving model performance. Experiments show that CALM reduces harmful outputs and outperforms baseline methods in most metrics, offering a lightweight approach to AI safety with no additional training data or model fine-tuning, while incurring only a small computational overhead at inference.
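The orthogonal-projection step mentioned in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: it omits the whitening/alignment stage entirely, and the names `project_out`, `hidden_state`, and `harmful_direction` are hypothetical. It only shows how the component of a latent vector along a single unwanted direction can be removed.

```python
def project_out(hidden, direction):
    """Return `hidden` with its component along `direction` removed.

    Computes h - ((h . v) / (v . v)) * v, i.e. the orthogonal projection
    of h onto the subspace perpendicular to v.
    """
    norm_sq = sum(d * d for d in direction)
    if norm_sq == 0.0:
        # A zero direction carries no concept; leave the vector unchanged.
        return list(hidden)
    scale = sum(h * d for h, d in zip(hidden, direction)) / norm_sq
    return [h - scale * d for h, d in zip(hidden, direction)]


# Toy 3-dimensional latent vector and an assumed "harmful" direction.
hidden_state = [2.0, 1.0, -1.0]
harmful_direction = [1.0, 0.0, 0.0]

cleaned = project_out(hidden_state, harmful_direction)
print(cleaned)  # [0.0, 1.0, -1.0]
```

After the projection, the cleaned vector has zero dot product with the removed direction, while its other coordinates are untouched.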

Decision Support Models for Predicting and Explaining Airport Passenger Connectivity from Data

Nov 02, 2021

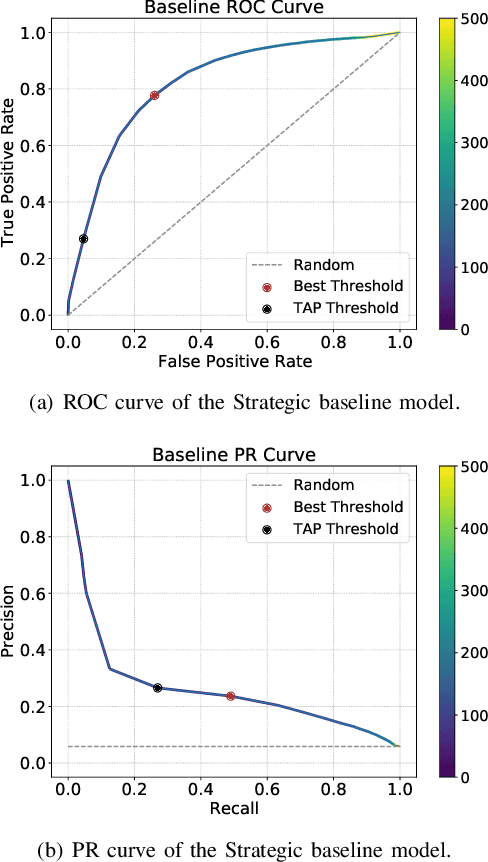

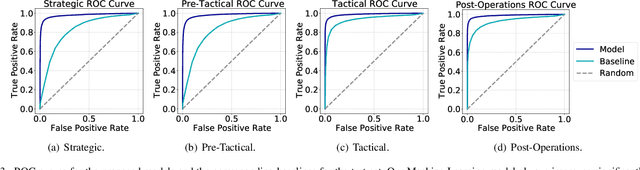

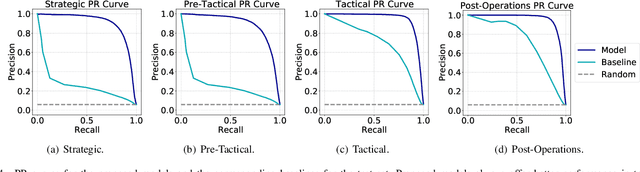

Abstract: Predicting whether passengers on a connecting flight will miss their connection is paramount for airline profitability. We present novel machine learning-based decision support models for the different stages of connecting-flight management, namely strategic, pre-tactical, tactical and post-operations. We predict missed flight connections at an airline's hub airport using historical data on flights and passengers, and analyse the factors that contribute additively to the predicted outcome for each decision horizon. Our data is high-dimensional, heterogeneous, imbalanced and noisy, and contains no information on passenger arrival/departure transit times. We employ probabilistic encoding of categorical classes, data balancing with Gaussian Mixture Models, and boosting. For all planning horizons, our models attain an area under the ROC curve (AUC) higher than 0.93. SHAP value explanations of our models indicate that scheduled/perceived connection times contribute the most to the prediction, followed by passenger age and whether border controls are required.
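For readers unfamiliar with the metric reported above, the following minimal sketch shows one way to compute the area under the ROC curve: as the fraction of (positive, negative) pairs in which the positive example receives the higher score, with ties counted as half. The function name `roc_auc` and the toy labels and scores are illustrative assumptions, not data or code from the paper.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of scores.

    labels: iterable of 0/1 (1 = missed connection in this toy example)
    scores: predicted scores, higher meaning "more likely positive"
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # tie counts as half
    return wins / (len(pos) * len(neg))


# Toy example: 2 positives, 3 negatives (made-up values).
toy_labels = [0, 0, 1, 0, 1]
toy_scores = [0.1, 0.4, 0.35, 0.8, 0.9]
auc = roc_auc(toy_labels, toy_scores)  # 4 of 6 pairs ranked correctly
```

The pairwise form is quadratic in the number of examples, so it is suitable only for illustration; on imbalanced data such as that described in the abstract, a rank-based implementation would be preferable.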
