Marcos Zampieri

Domain-specific MT for Low-resource Languages: The case of Bambara-French

Mar 31, 2021

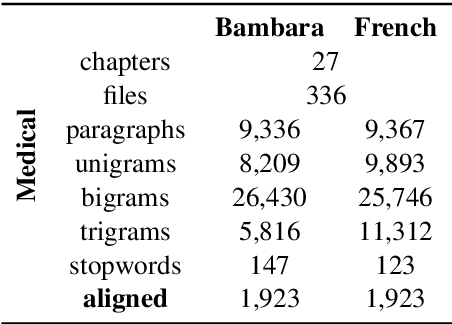

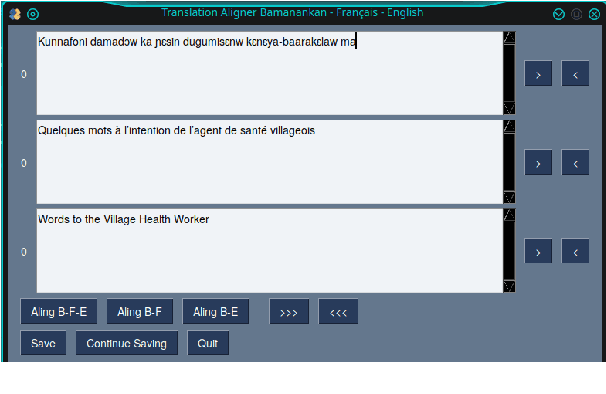

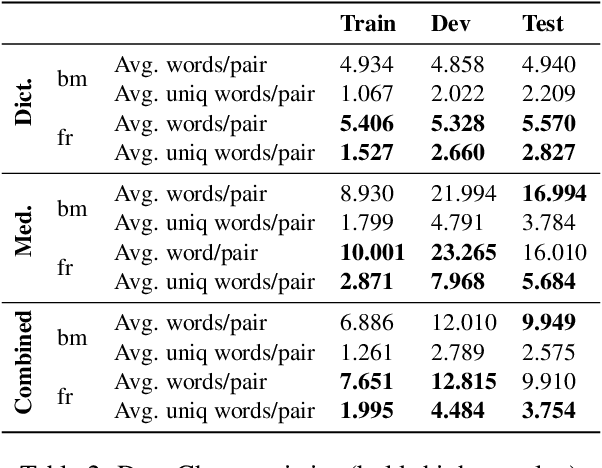

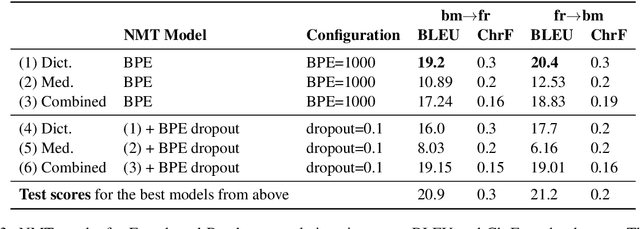

Abstract: Translating to and from low-resource languages is a challenge for machine translation (MT) systems due to a lack of parallel data. In this paper we address the issue of domain-specific MT for Bambara, an under-resourced Mande language spoken in Mali. We present the first domain-specific parallel dataset for MT of Bambara into and from French. We discuss challenges in working with small quantities of domain-specific data for a low-resource language and we present the results of machine learning experiments on this data.

Comparing Approaches to Dravidian Language Identification

Mar 09, 2021

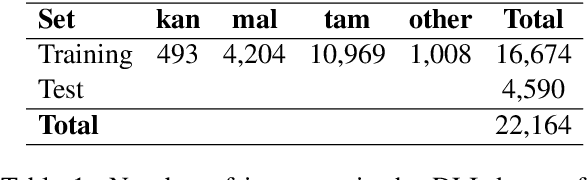

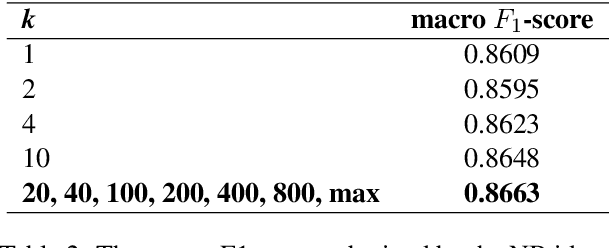

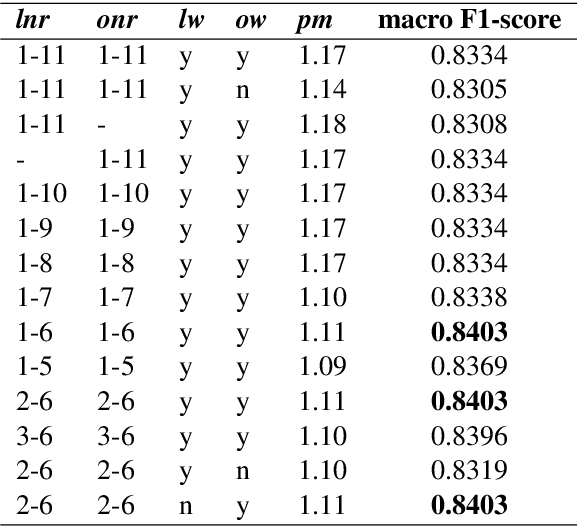

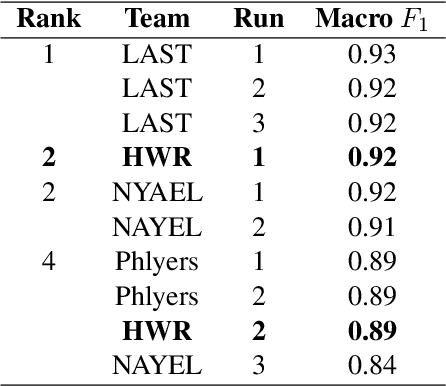

Abstract: This paper describes the submissions by team HWR to the Dravidian Language Identification (DLI) shared task organized at the VarDial 2021 workshop. The DLI training set includes 16,674 YouTube comments written in Roman script containing code-mixed text with English and one of the three South Dravidian languages: Kannada, Malayalam, and Tamil. We submitted results generated using two models: a Naive Bayes classifier with adaptive language models, which has been shown to obtain competitive performance in many language and dialect identification tasks, and a transformer-based model, which is widely regarded as the state of the art in a number of NLP tasks. Our first submission was sent in the closed submission track using only the training set provided by the shared task organisers, whereas the second submission is considered to be open as it used a pretrained model trained with external data. Our team attained shared second position in the shared task with the submission based on Naive Bayes. Our results reinforce the idea that deep learning methods are not as competitive in tasks related to language identification as they are in many other text classification tasks.
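The Naive Bayes approach mentioned in this abstract can be illustrated with a minimal sketch. It assumes a plain multinomial Naive Bayes over character n-grams with add-alpha smoothing; it does not implement the adaptive language models used in the submission, and the class name `NgramNaiveBayes`, the helper `char_ngrams`, the n-gram range, and the smoothing value are all hypothetical choices made for illustration.

```python
import math
from collections import Counter, defaultdict


def char_ngrams(text, n_min=1, n_max=3):
    """Return character n-grams of length n_min..n_max from padded, lowercased text."""
    text = f" {text.lower()} "
    return [text[i:i + n]
            for n in range(n_min, n_max + 1)
            for i in range(len(text) - n + 1)]


class NgramNaiveBayes:
    """Multinomial Naive Bayes over character n-grams (illustrative only)."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha                   # add-alpha smoothing constant (assumed)
        self.counts = defaultdict(Counter)   # label -> n-gram counts
        self.doc_counts = Counter()          # label -> number of training texts
        self.vocab = set()                   # all n-grams seen in training

    def fit(self, texts, labels):
        for text, label in zip(texts, labels):
            grams = char_ngrams(text)
            self.counts[label].update(grams)
            self.doc_counts[label] += 1
            self.vocab.update(grams)
        return self

    def predict(self, text):
        grams = char_ngrams(text)
        total_docs = sum(self.doc_counts.values())
        vocab_size = len(self.vocab)
        best_label, best_score = None, -math.inf
        for label, counter in self.counts.items():
            total = sum(counter.values())
            # Log prior plus the sum of smoothed log likelihoods of each n-gram.
            score = math.log(self.doc_counts[label] / total_docs)
            for gram in grams:
                score += math.log((counter[gram] + self.alpha)
                                  / (total + self.alpha * vocab_size))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Toy, invented strings and labels; not data from the shared task.
clf = NgramNaiveBayes().fit(["aaaa aaa", "aa aaaa", "bbbb bbb", "bb bbbb"],
                            ["x", "x", "y", "y"])
print(clf.predict("aaa"))
```

Character n-grams are a common feature choice for language identification because they capture spelling patterns without requiring tokenization, which is convenient for romanized, code-mixed text.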

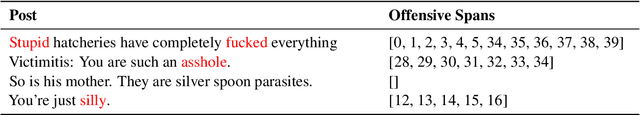

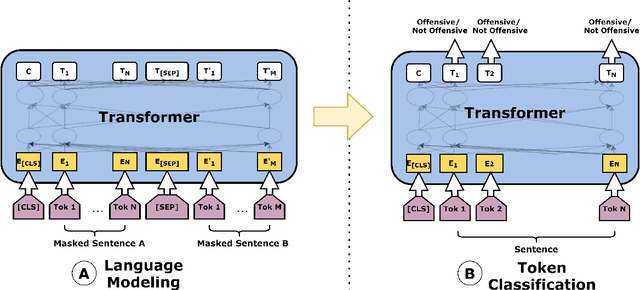

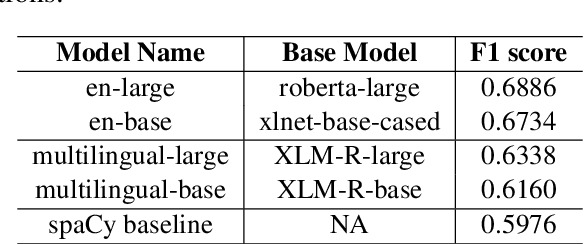

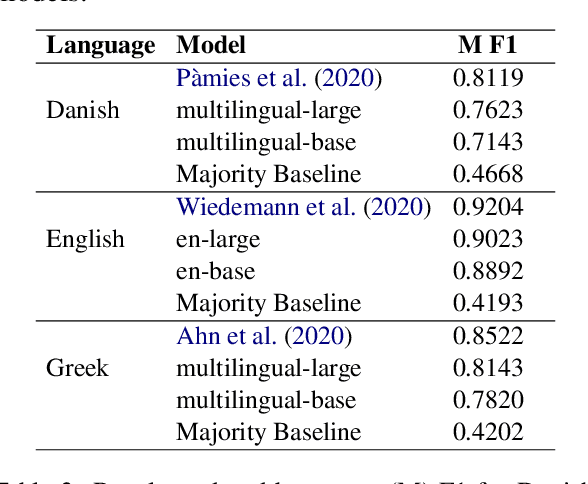

MUDES: Multilingual Detection of Offensive Spans

Feb 18, 2021

Abstract: Interest in offensive content identification in social media has grown substantially in recent years. Previous work has dealt mostly with post-level annotations. However, identifying offensive spans is useful in many ways. To help cope with this important challenge, we present MUDES, a multilingual system to detect offensive spans in texts. MUDES features pre-trained models, a Python API for developers, and a user-friendly web-based interface. A detailed description of MUDES' components is presented in this paper.

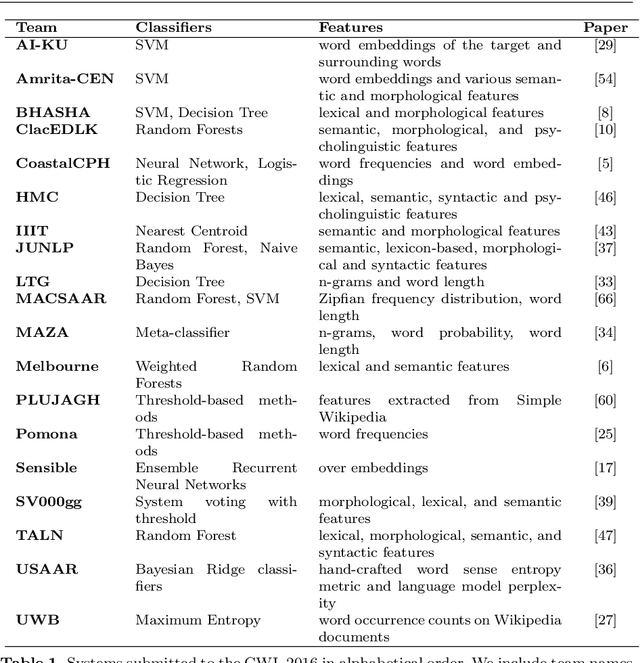

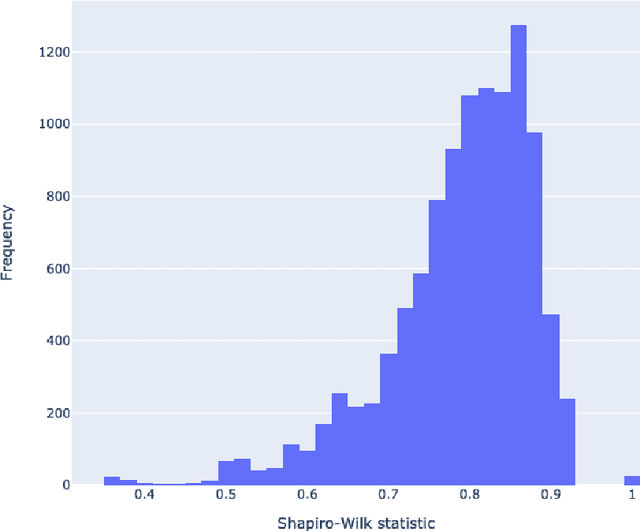

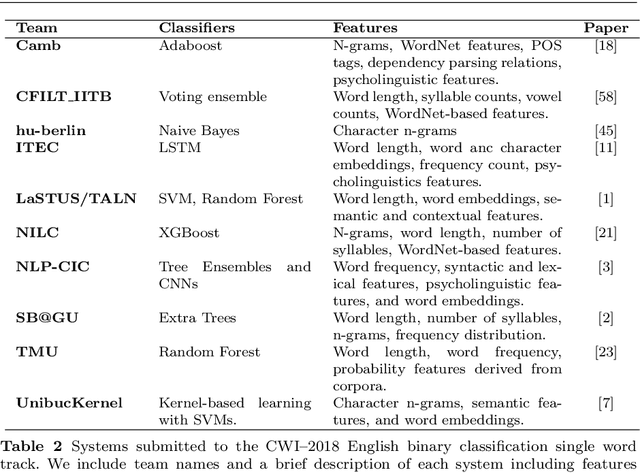

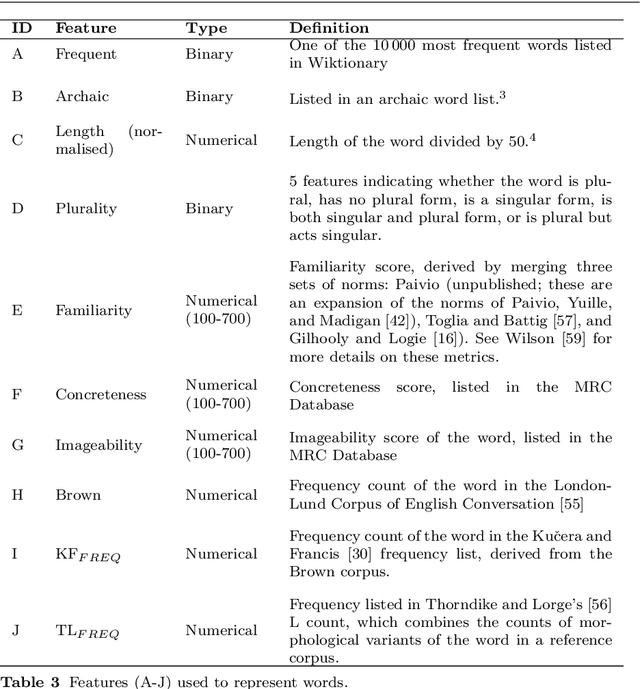

Predicting Lexical Complexity in English Texts

Feb 17, 2021

Abstract: The first step in most text simplification pipelines is to predict which words are considered complex for a given target population before carrying out lexical substitution. This task is commonly referred to as Complex Word Identification (CWI) and it is often modelled as a supervised classification problem. Training such systems requires annotated datasets in which words, and sometimes multi-word expressions, are labelled for complexity. In this paper we analyze previous work carried out on this task and investigate the properties of complex word identification datasets for English.

Neural Machine Translation for Extremely Low-Resource African Languages: A Case Study on Bambara

Nov 10, 2020

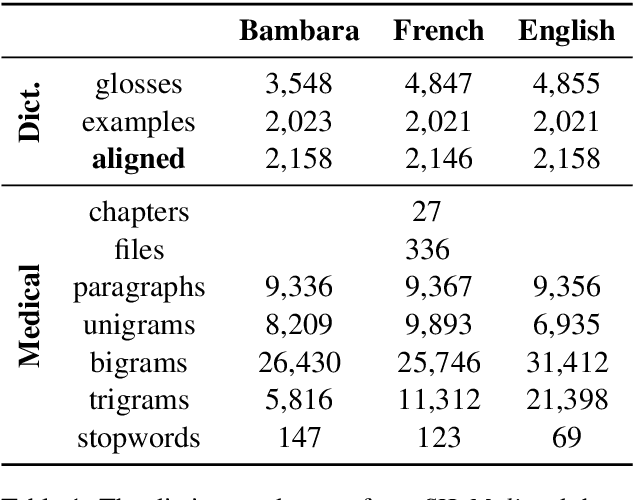

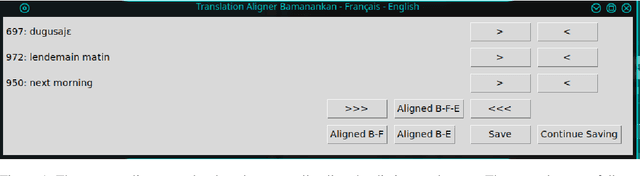

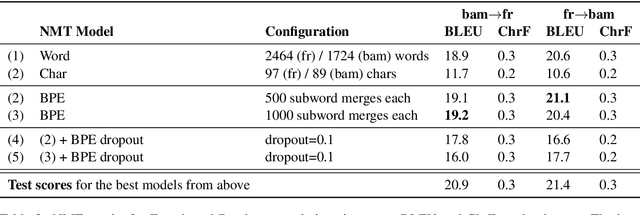

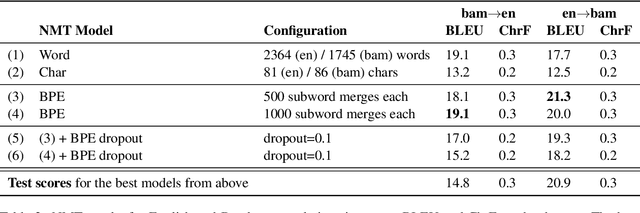

Abstract: Low-resource languages present unique challenges to (neural) machine translation. We discuss the case of Bambara, a Mande language for which training data is scarce and requires significant amounts of pre-processing. More than the linguistic situation of Bambara itself, the socio-cultural context within which Bambara speakers live poses challenges for automated processing of this language. In this paper, we present the first parallel data set for machine translation of Bambara into and from English and French and the first benchmark results on machine translation to and from Bambara. We discuss challenges in working with low-resource languages and propose strategies to cope with data scarcity in low-resource machine translation (MT).

WLV-RIT at HASOC-Dravidian-CodeMix-FIRE2020: Offensive Language Identification in Code-switched YouTube Comments

Nov 01, 2020

Abstract: This paper describes the WLV-RIT entry to the Hate Speech and Offensive Content Identification in Indo-European Languages (HASOC) shared task 2020. The HASOC 2020 organizers provided participants with annotated datasets containing code-mixed social media posts in Dravidian languages (Malayalam-English and Tamil-English). We participated in task 1: offensive comment identification in code-mixed Malayalam YouTube comments. In our methodology, we take advantage of available English data by applying cross-lingual contextual word embeddings and transfer learning to make predictions on Malayalam data. We further improve the results using various fine-tuning strategies. Our system achieved a weighted average F1 score of 0.89 on the test set and ranked 5th out of 12 participants.

Multilingual Offensive Language Identification with Cross-lingual Embeddings

Oct 11, 2020

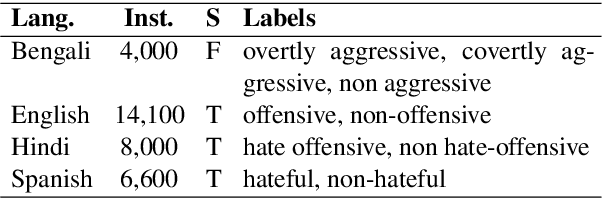

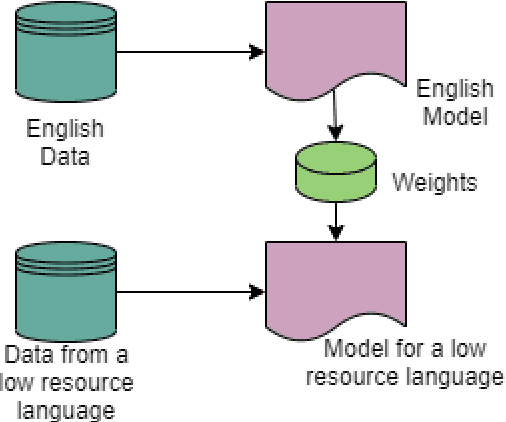

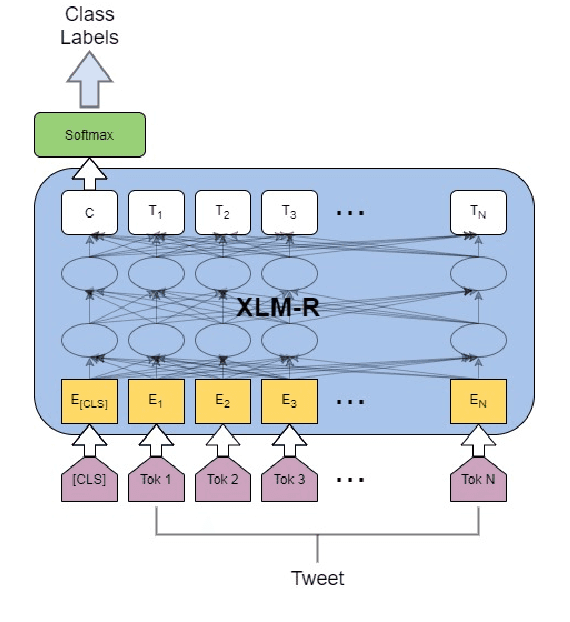

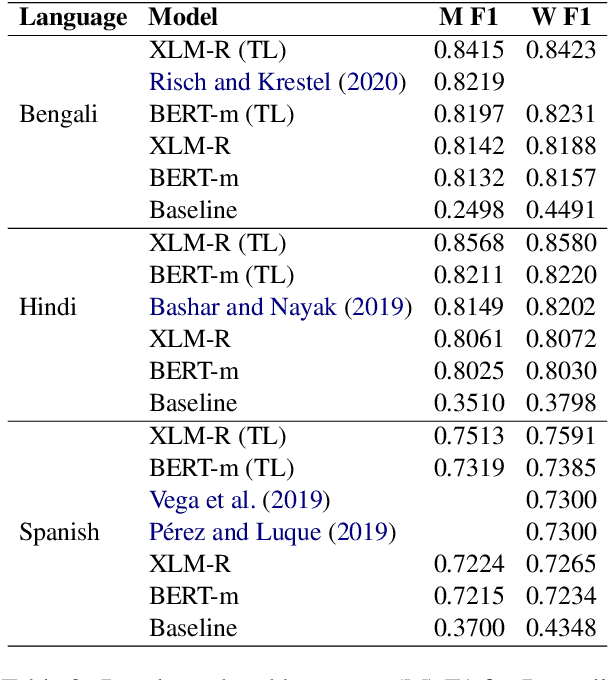

Abstract: Offensive content is pervasive in social media and a reason for concern to companies and government organizations. Several studies have recently been published investigating methods to detect the various forms of such content (e.g. hate speech, cyberbullying, and cyberaggression). The clear majority of these studies deal with English, partly because most available annotated datasets contain English data. In this paper, we take advantage of the available English data by applying cross-lingual contextual word embeddings and transfer learning to make predictions in languages with fewer resources. We project predictions on comparable data in Bengali, Hindi, and Spanish and we report results of 0.8415 F1 macro for Bengali, 0.8568 F1 macro for Hindi, and 0.7513 F1 macro for Spanish. Finally, we show that our approach compares favorably to the best systems submitted to recent shared tasks on these three languages, confirming the robustness of cross-lingual contextual embeddings and transfer learning for this task.
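The abstracts in this listing report both macro and weighted average F1. As a hedged illustration of how the two averages differ, the sketch below computes both from per-class F1 using only the standard library. The function names and the toy labels are invented for illustration and do not reproduce any evaluation script or data from the papers.

```python
from collections import Counter


def f1_per_class(y_true, y_pred):
    """Return a dict mapping each label to its F1 score."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = {}
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never occurs.
        scores[label] = 2 * tp / denom if denom else 0.0
    return scores


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1: every class counts equally."""
    scores = f1_per_class(y_true, y_pred)
    return sum(scores.values()) / len(scores)


def weighted_f1(y_true, y_pred):
    """Mean of per-class F1 weighted by each class's share of the gold labels."""
    scores = f1_per_class(y_true, y_pred)
    support = Counter(y_true)
    return sum(scores[label] * support[label] for label in scores) / len(y_true)


# Invented toy labels with an imbalanced class distribution.
gold = ["OFF", "OFF", "NOT", "NOT", "NOT", "NOT"]
pred = ["OFF", "NOT", "NOT", "NOT", "NOT", "NOT"]
print(f"macro F1:    {macro_f1(gold, pred):.4f}")
print(f"weighted F1: {weighted_f1(gold, pred):.4f}")
```

On imbalanced data such as offensive language datasets, macro F1 penalizes poor performance on the minority class more heavily than weighted F1, so the two figures are not directly comparable.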

SemEval-2020 Task 12: Multilingual Offensive Language Identification in Social Media (OffensEval 2020)

Jun 12, 2020

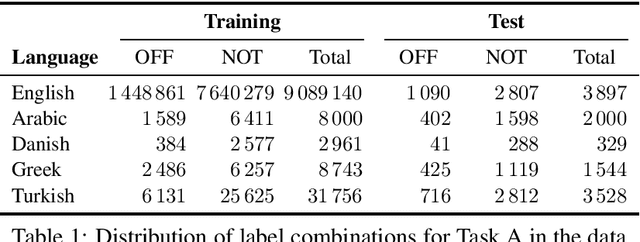

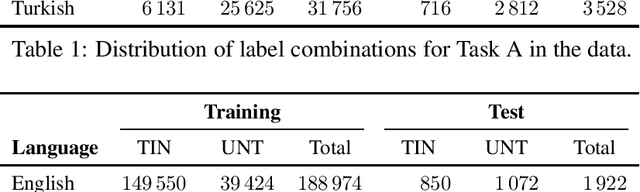

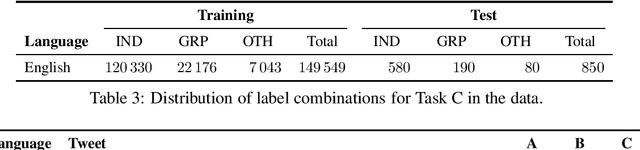

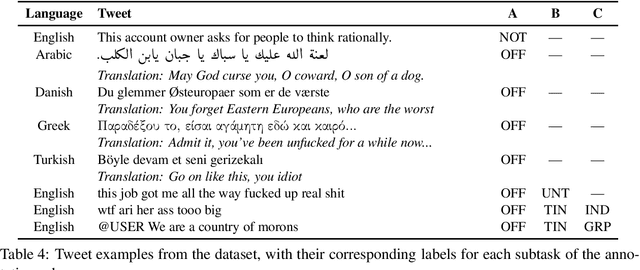

Abstract: We present the results and main findings of SemEval-2020 Task 12 on Multilingual Offensive Language Identification in Social Media (OffensEval 2020). The task involves three subtasks corresponding to the hierarchical taxonomy of the OLID schema (Zampieri et al., 2019a) from OffensEval 2019. The task featured five languages: English, Arabic, Danish, Greek, and Turkish for Subtask A. In addition, English also featured Subtasks B and C. OffensEval 2020 was one of the most popular tasks at SemEval-2020 attracting a large number of participants across all subtasks and also across all languages. A total of 528 teams signed up to participate in the task, 145 teams submitted systems during the evaluation period, and 70 submitted system description papers.

MaintNet: A Collaborative Open-Source Library for Predictive Maintenance Language Resources

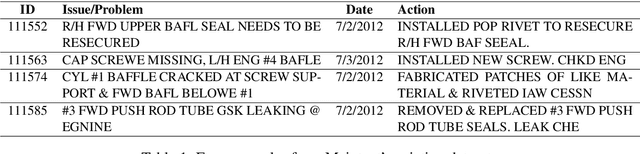

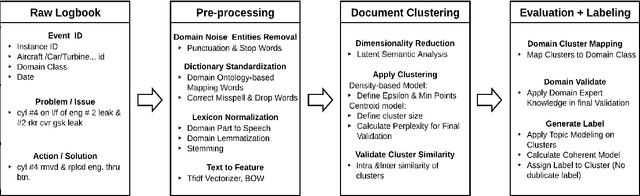

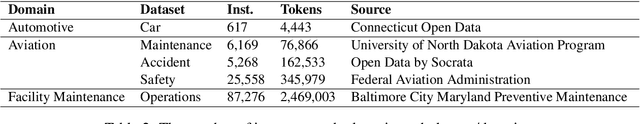

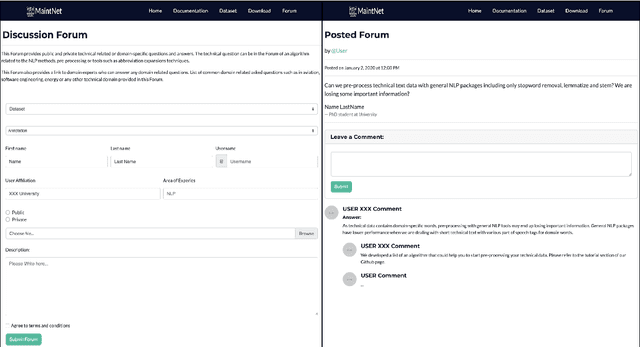

May 25, 2020

Abstract: Maintenance record logbooks are an emerging text type in NLP. They typically consist of free-text documents with many domain-specific technical terms and abbreviations, as well as non-standard spelling and grammar, which pose difficulties for NLP pipelines trained on standard corpora. Analyzing and annotating such documents is of particular importance in the development of predictive maintenance systems, which aim to provide operational efficiencies, prevent accidents and save lives. In order to facilitate and encourage research in this area, we have developed MaintNet, a collaborative open-source library of technical and domain-specific language datasets. MaintNet provides novel logbook data from the aviation, automotive, and facilities domains along with tools to aid in their (pre-)processing and clustering. Furthermore, it provides a way to encourage discussion on and sharing of new datasets and tools for logbook data analysis.

A Large-Scale Semi-Supervised Dataset for Offensive Language Identification

Apr 29, 2020

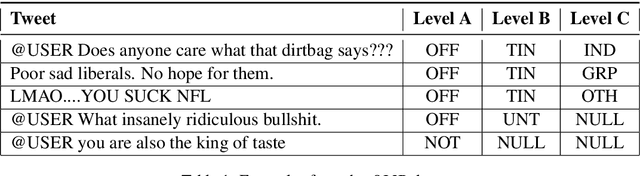

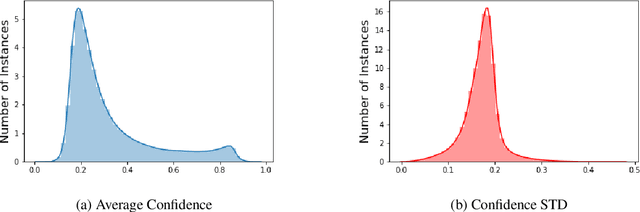

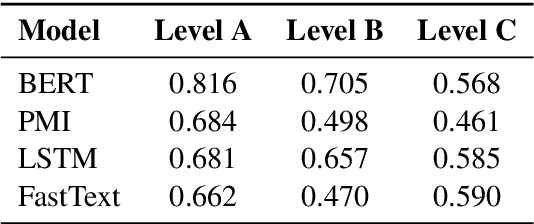

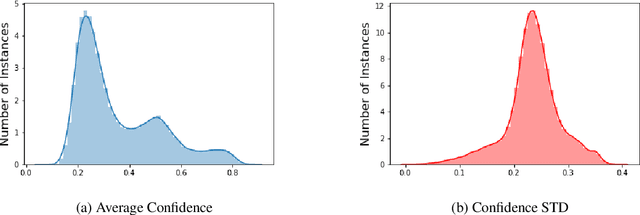

Abstract: The use of offensive language is a major problem in social media which has led to an abundance of research in detecting content such as hate speech, cyberbullying, and cyber-aggression. There have been several attempts to consolidate and categorize these efforts. Recently, the OLID dataset used at SemEval-2019 proposed a hierarchical three-level annotation taxonomy which addresses different types of offensive language as well as important information such as the target of such content. The categorization provides meaningful and important information for understanding offensive language. However, the OLID dataset is limited in size, especially for some of the low-level categories, which included only a few hundred instances, thus making it challenging to train robust deep learning models. Here, we address this limitation by creating the largest available dataset for this task, SOLID. SOLID contains over nine million English tweets labeled in a semi-supervised manner. We further demonstrate experimentally that using SOLID along with OLID yields improved performance on the OLID test set for two different models, especially for the lower levels of the taxonomy. Finally, we perform analysis of the models' performance on easy and hard examples of offensive language using data annotated in a semi-supervised way.
