Marco Ramilli

AI-GenBench: A New Ongoing Benchmark for AI-Generated Image Detection

Apr 29, 2025

Abstract: The rapid advancement of generative AI has revolutionized image creation, enabling high-quality synthesis from text prompts while raising critical challenges for media authenticity. We present Ai-GenBench, a novel benchmark designed to address the urgent need for robust detection of AI-generated images in real-world scenarios. Unlike existing solutions that evaluate models on static datasets, Ai-GenBench introduces a temporal evaluation framework where detection methods are incrementally trained on synthetic images, historically ordered by their generative models, to test their ability to generalize to new generative models, such as the transition from GANs to diffusion models. Our benchmark focuses on high-quality, diverse visual content and overcomes key limitations of current approaches, including arbitrary dataset splits, unfair comparisons, and excessive computational demands. Ai-GenBench provides a comprehensive dataset, a standardized evaluation protocol, and accessible tools for both researchers and non-experts (e.g., journalists, fact-checkers), ensuring reproducibility while maintaining practical training requirements. By establishing clear evaluation rules and controlled augmentation strategies, Ai-GenBench enables meaningful comparison of detection methods and scalable solutions. Code and data are publicly available to ensure reproducibility and to support the development of robust forensic detectors to keep pace with the rise of new synthetic generators.
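The temporal evaluation idea in the abstract can be illustrated with a minimal sketch. It is not the benchmark's actual code or protocol: the function `temporal_evaluation`, the `Sample` layout, the step names, and the toy detector are all hypothetical, and the sketch assumes the detector is scored on each new group of generators before it is trained on that group.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical sample layout: (image identifier, label), 1 = AI-generated, 0 = real.
Sample = Tuple[str, int]


def temporal_evaluation(
    steps: Sequence[Tuple[str, List[Sample]]],
    train_step: Callable[[List[Sample]], None],
    predict: Callable[[str], int],
) -> Dict[str, float]:
    """Walk through generator groups in chronological order.

    Before training on a step, score the detector on that step's samples,
    which come from generators it has not seen yet.
    """
    accuracy_on_unseen: Dict[str, float] = {}
    for index, (name, samples) in enumerate(steps):
        if index > 0:  # nothing has been learned before the first step
            correct = sum(predict(image) == label for image, label in samples)
            accuracy_on_unseen[name] = correct / len(samples)
        train_step(samples)
    return accuracy_on_unseen


# Toy detector (assumption for illustration only): it memorizes the generator
# tag in the identifier and flags an image as synthetic only if the tag is known.
seen_generators = set()


def toy_train(samples: List[Sample]) -> None:
    for image, label in samples:
        if label == 1:
            seen_generators.add(image.split(":")[0])


def toy_predict(image: str) -> int:
    return 1 if image.split(":")[0] in seen_generators else 0


steps = [
    ("gan_era", [("gan:1", 1), ("real:1", 0)]),
    ("diffusion_era", [("diffusion:1", 1), ("real:2", 0), ("gan:2", 1), ("diffusion:2", 1)]),
]
results = temporal_evaluation(steps, toy_train, toy_predict)
print(results)
```

The toy detector recognizes the older GAN images but misses every diffusion image, which is the kind of generalization gap a chronological split is meant to expose and a random split would hide.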

Classification of Web Phishing Kits for early detection by platform providers

Oct 15, 2022

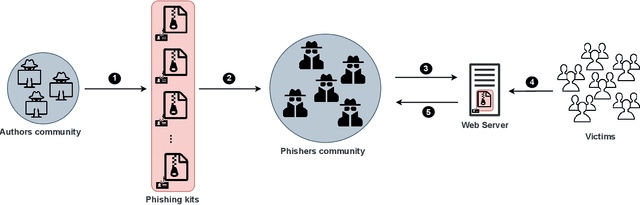

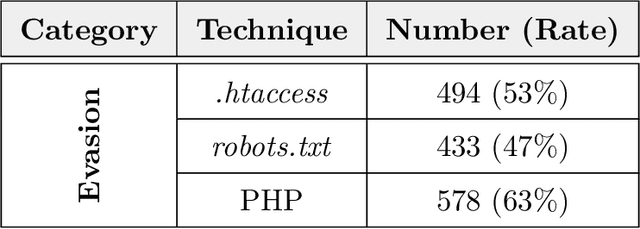

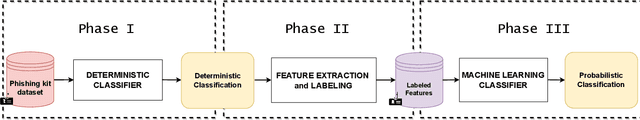

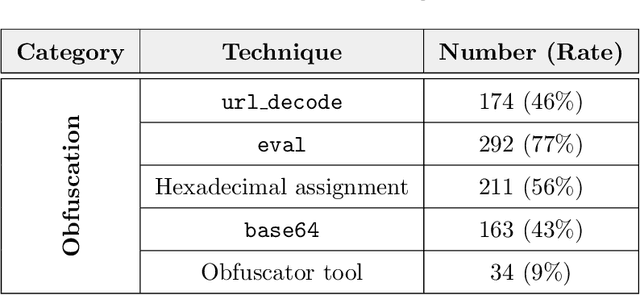

Abstract: Phishing kits are tools that underground experts provide to the community of criminal phishers to facilitate the construction of malicious Web sites. As these kits evolve in sophistication, providers of Web-based services need to keep pace with their growing complexity. We present an original classification of a corpus of over 2000 recent phishing kits according to the evasion and obfuscation functions they adopt. We carry out an initial deterministic analysis of the source code of the kits to extract the most discriminant features and information about their principal authors. We then extend this initial classification with supervised machine learning models. Thanks to the ground truth obtained in the first step, we can demonstrate whether, and which, machine learning models are able to suitably classify even kits that adopt novel evasion and obfuscation techniques unseen during the training phase. We compare different algorithms and evaluate their robustness in the realistic case in which only a small number of phishing kits are available for training. This paper represents an initial but important step to support Web service providers and analysts in improving early detection mechanisms and intelligence operations for the phishing kits that might be installed on their platforms.
