Convergence and sample complexity of gradient methods for the model-free linear quadratic regulator problem

Dec 26, 2019 · Hesameddin Mohammadi, Armin Zare, Mahdi Soltanolkotabi, Mihailo R. Jovanović

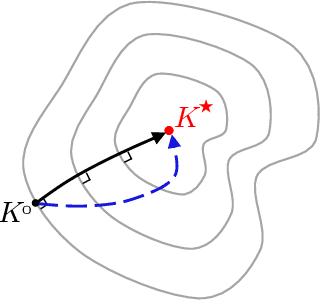

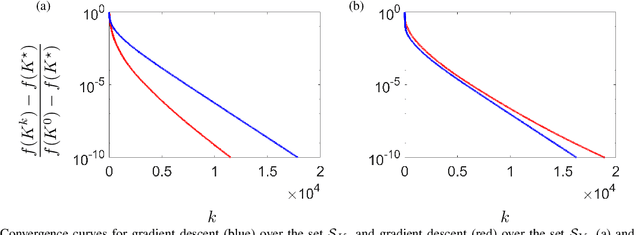

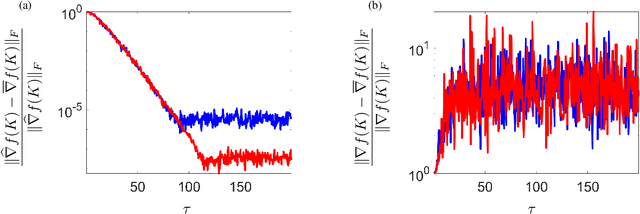

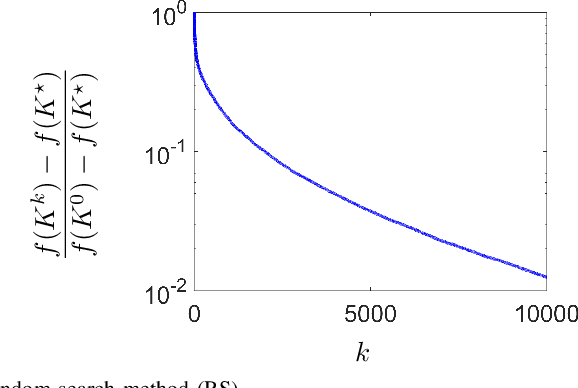

Model-free reinforcement learning attempts to find an optimal control action for an unknown dynamical system by directly searching over the parameter space of controllers. The convergence behavior and statistical properties of these approaches are often poorly understood because of the nonconvex nature of the underlying optimization problems as well as the lack of exact gradient computation. In this paper, we take a step towards demystifying the performance and efficiency of such methods by focusing on the standard infinite-horizon linear quadratic regulator problem for continuous-time systems with unknown state-space parameters. We establish exponential stability for the ordinary differential equation (ODE) that governs the gradient-flow dynamics over the set of stabilizing feedback gains and show that a similar result holds for the gradient descent method that arises from the forward Euler discretization of the corresponding ODE. We also provide theoretical bounds on the convergence rate and sample complexity of a random search method. Our results demonstrate that the required simulation time for achieving $\epsilon$-accuracy in a model-free setup and the total number of function evaluations both scale as $\log \, (1/\epsilon)$.
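As a rough illustration of the random search idea in this abstract, the sketch below tunes a single feedback gain for a scalar continuous-time system using only cost evaluations. The system constants, the closed-form `lqr_cost` (standing in for a simulated rollout), the step size, and the smoothing radius are all assumptions chosen for the example; they are not taken from the paper.

```python
import math
import random

# Hypothetical scalar system dx/dt = A*x + B*u with feedback u = -k*x and x(0) = 1.
A, B, Q, R = 1.0, 1.0, 1.0, 1.0

def lqr_cost(k):
    """Infinite-horizon cost of gain k; stands in for a simulated rollout."""
    if B * k <= A:
        return math.inf  # closed loop is unstable, so the cost diverges
    return (Q + R * k * k) / (2.0 * (B * k - A))

def random_search(k0, steps=200, lr=0.5, radius=1e-3, seed=0):
    """Descend on the gain using a two-point estimate built from cost values only."""
    rng = random.Random(seed)
    k = k0
    for _ in range(steps):
        u = rng.choice((-1.0, 1.0))  # random search direction (a sign in 1-D)
        slope = (lqr_cost(k + radius * u) - lqr_cost(k - radius * u)) / (2.0 * radius)
        k -= lr * slope * u          # step along the estimated gradient
    return k

# Riccati-based optimal gain, used here only to check the result.
k_opt = (A + math.sqrt(A * A + B * B * Q / R)) / B
k_hat = random_search(k0=4.0)
```

Starting from the stabilizing gain `k0 = 4.0`, the iterate stays inside the stabilizing set and approaches `k_opt` at a geometric rate once it is close, which is the scalar analogue of the behavior the abstract describes.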

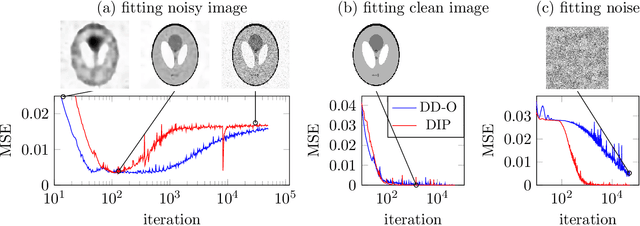

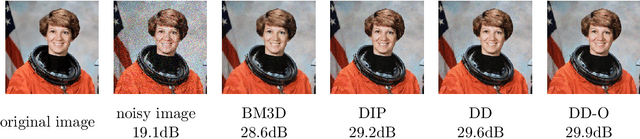

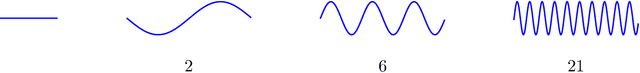

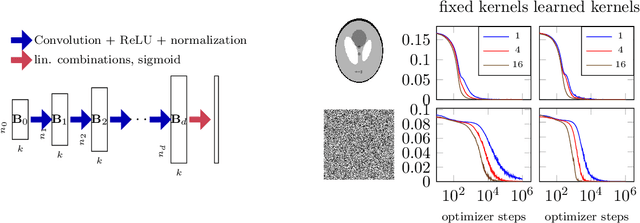

Denoising and Regularization via Exploiting the Structural Bias of Convolutional Generators

Oct 31, 2019 · Reinhard Heckel, Mahdi Soltanolkotabi

Convolutional Neural Networks (CNNs) have emerged as highly successful tools for image generation, recovery, and restoration. This success is often attributed to large amounts of training data. However, recent experimental findings challenge this view and instead suggest that a major contributing factor to this success is that convolutional networks impose strong prior assumptions about natural images. A surprising experiment that highlights this architectural bias towards natural images is that one can remove noise and corruptions from a natural image without using any training data, by simply fitting (via gradient descent) a randomly initialized, over-parameterized convolutional generator to the single corrupted image. While this over-parameterized network can fit the corrupted image perfectly, surprisingly after a few iterations of gradient descent one obtains the uncorrupted image. This intriguing phenomenon enables state-of-the-art CNN-based denoising and regularization of linear inverse problems such as compressive sensing. In this paper, we take a step towards demystifying this experimental phenomenon by attributing this effect to particular architectural choices of convolutional networks, namely convolutions with fixed interpolating filters. We then formally characterize the dynamics of fitting a two-layer convolutional generator to a noisy signal and prove that early-stopped gradient descent denoises/regularizes. This result relies on showing that convolutional generators fit the structured part of an image significantly faster than the corrupted portion.

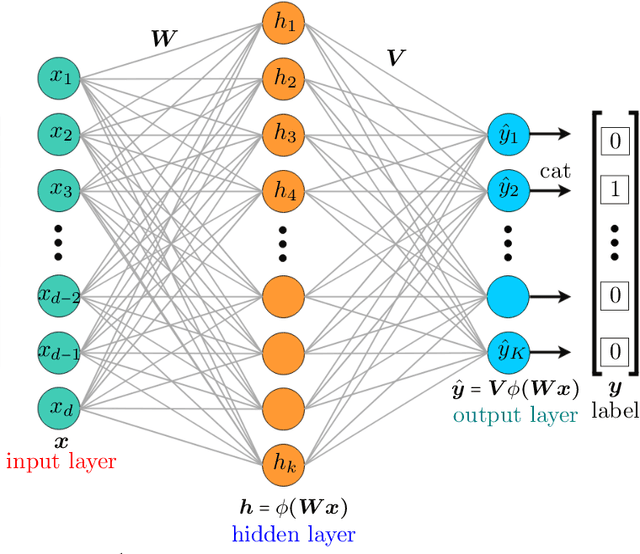

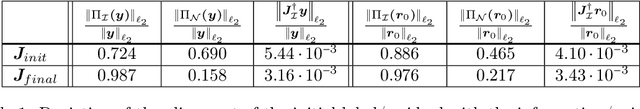

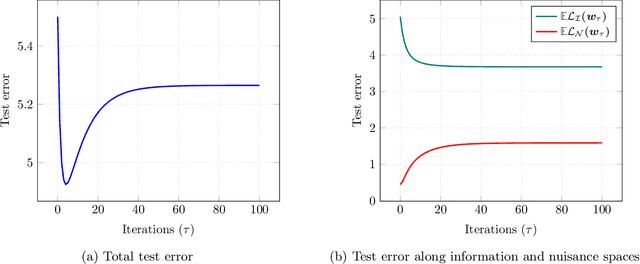

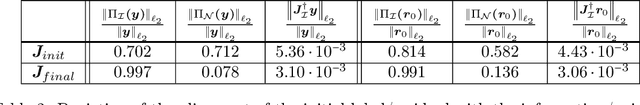

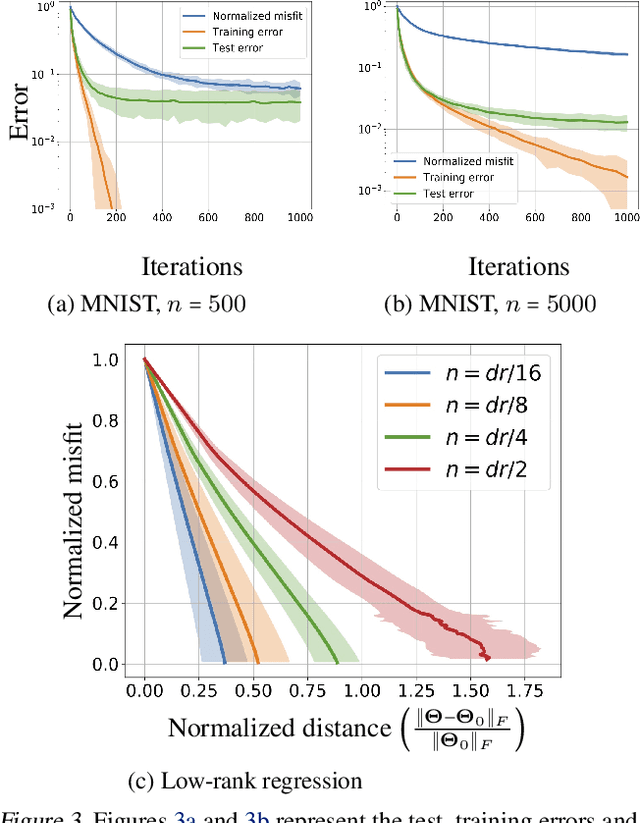

Generalization Guarantees for Neural Networks via Harnessing the Low-rank Structure of the Jacobian

Jul 04, 2019 · Samet Oymak, Zalan Fabian, Mingchen Li, Mahdi Soltanolkotabi

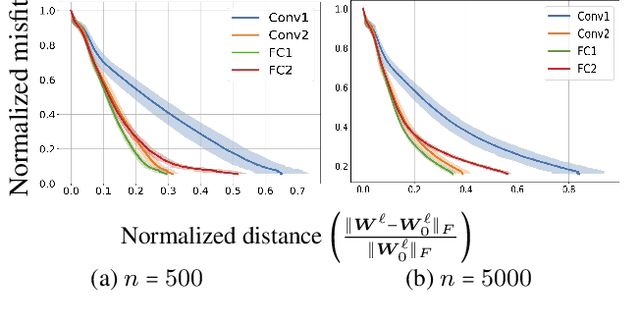

Modern neural network architectures often generalize well despite containing many more parameters than the size of the training dataset. This paper explores the generalization capabilities of neural networks trained via gradient descent. We develop a data-dependent optimization and generalization theory which leverages the low-rank structure of the Jacobian matrix associated with the network. Our results help demystify why training and generalization are easier on clean and structured datasets and harder on noisy and unstructured datasets, as well as how the network size affects the evolution of the train and test errors during training. Specifically, we use a control knob to split the Jacobian spectrum into "information" and "nuisance" spaces associated with the large and small singular values. We show that over the information space learning is fast and one can quickly train a model with zero training loss that can also generalize well. Over the nuisance space training is slower and early stopping can help with generalization at the expense of some bias. We also show that the overall generalization capability of the network is controlled by how well the label vector is aligned with the information space. A key feature of our results is that even constant-width neural nets can provably generalize for sufficiently nice datasets. We conduct various numerical experiments on deep networks that corroborate our theoretical findings and demonstrate that: (i) the Jacobian of typical neural networks exhibits low-rank structure with a few large singular values and many small ones, leading to a low-dimensional information space, (ii) over the information space learning is fast and most of the label vector falls on this space, and (iii) label noise falls on the nuisance space and impedes optimization/generalization.
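The information/nuisance split can be made concrete in a linearized setting. For least squares with a fixed Jacobian, one gradient step with learning rate `lr` shrinks the residual along a singular direction with singular value `sigma` by the factor `1 - lr * sigma**2`. The sketch below applies that fact to a made-up spectrum; the singular values, the `cutoff`, and the step count are assumptions for illustration, and the fixed-Jacobian model is a simplification of the setting studied in the paper.

```python
def residual_after(sigma, steps, lr):
    """Fraction of the residual left along a direction with singular value sigma."""
    return (1.0 - lr * sigma ** 2) ** steps

singular_values = [10.0, 8.0, 0.1, 0.05]  # hypothetical Jacobian spectrum
cutoff = 1.0                               # the "control knob" separating the two spaces
lr = 1.0 / max(singular_values) ** 2       # largest step that keeps every factor in [0, 1]

information = [s for s in singular_values if s >= cutoff]
nuisance = [s for s in singular_values if s < cutoff]

# Worst case over the information space and best case over the nuisance space.
info_left = max(abs(residual_after(s, 100, lr)) for s in information)
nuis_left = min(abs(residual_after(s, 100, lr)) for s in nuisance)
```

After 100 steps the residual over the information space is essentially gone, while almost all of the residual over the nuisance space remains. Stopping at that point therefore fits the part of the labels aligned with the large singular values and leaves the rest largely unfit.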

Gradient Descent with Early Stopping is Provably Robust to Label Noise for Overparameterized Neural Networks

Apr 07, 2019 · Mingchen Li, Mahdi Soltanolkotabi, Samet Oymak

Modern neural networks are typically trained in an over-parameterized regime where the parameters of the model far exceed the size of the training data. Due to over-parameterization these neural networks in principle have the capacity to (over)fit any set of labels including pure noise. Despite this high fitting capacity, somewhat paradoxically, neural network models trained via first-order methods continue to predict well on yet unseen test data. In this paper we take a step towards demystifying this phenomenon. In particular, we show that first-order methods such as gradient descent are provably robust to noise/corruption on a constant fraction of the labels despite over-parameterization under a rich dataset model. Specifically: i) First, we show that in the first few iterations, where the updates are still in the vicinity of the initialization, these algorithms only fit to the correct labels, essentially ignoring the noisy labels. ii) Secondly, we prove that to start to overfit to the noisy labels these algorithms must stray rather far from the initial model, which can only occur after many more iterations. Together, these show that gradient descent with early stopping is provably robust to label noise and shed light on the empirical robustness of deep networks as well as commonly adopted heuristics to prevent overfitting.

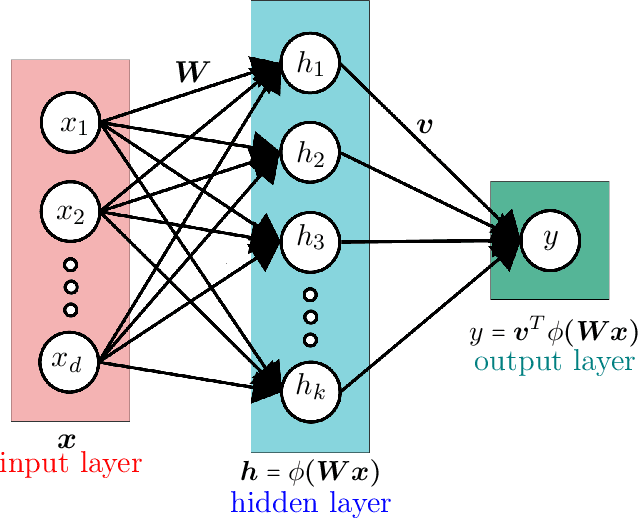

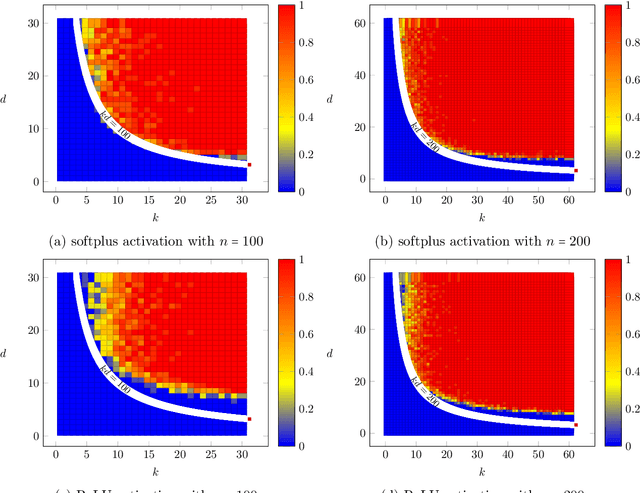

Towards moderate overparameterization: global convergence guarantees for training shallow neural networks

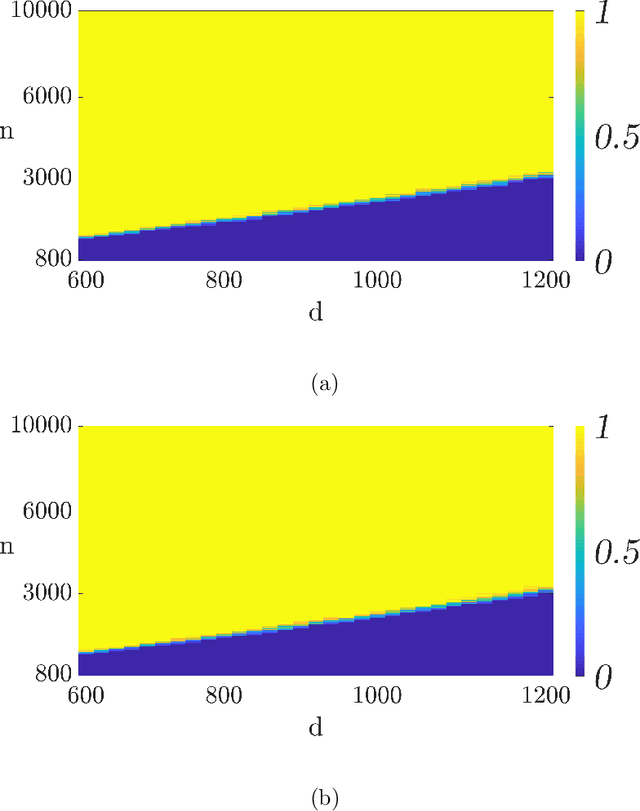

Feb 12, 2019 · Samet Oymak, Mahdi Soltanolkotabi

Many modern neural network architectures are trained in an overparameterized regime where the parameters of the model exceed the size of the training dataset. Sufficiently overparameterized neural network architectures in principle have the capacity to fit any set of labels including random noise. However, given the highly nonconvex nature of the training landscape it is not clear what level and kind of overparameterization is required for first-order methods to converge to a global optimum that perfectly interpolates any labels. A number of recent theoretical works have shown that for very wide neural networks, where the number of hidden units is polynomially large in the size of the training data, gradient descent starting from a random initialization does indeed converge to a global optimum. However, in practice much more moderate levels of overparameterization seem to be sufficient, and in many cases overparameterized models seem to perfectly interpolate the training data as soon as the number of parameters exceeds the size of the training data by a constant factor. Thus there is a huge gap between the existing theoretical literature and practical experiments. In this paper we take a step towards closing this gap. Focusing on shallow neural nets and smooth activations, we show that (stochastic) gradient descent when initialized at random converges at a geometric rate to a nearby global optimum as soon as the square root of the number of network parameters exceeds the size of the training data. Our results also benefit from a fast convergence rate and continue to hold for non-differentiable activations such as Rectified Linear Units (ReLUs).

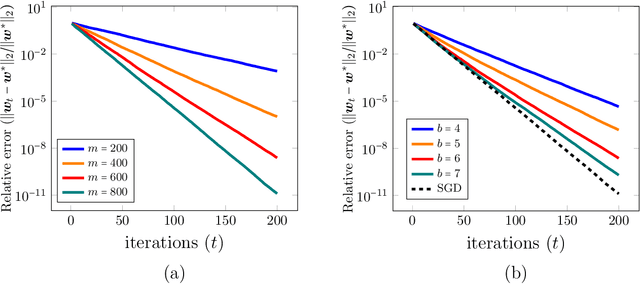

Fitting ReLUs via SGD and Quantized SGD

Jan 19, 2019 · Seyed Mohammadreza Mousavi Kalan, Mahdi Soltanolkotabi, A. Salman Avestimehr

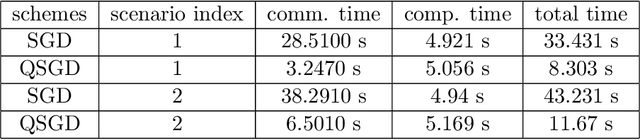

In this paper we focus on the problem of finding the optimal weights of the shallowest of neural networks consisting of a single Rectified Linear Unit (ReLU). These functions are of the form $\mathbf{x}\rightarrow \max(0,\langle\mathbf{w},\mathbf{x}\rangle)$ with $\mathbf{w}\in\mathbb{R}^d$ denoting the weight vector. We focus on a planted model where the inputs are chosen i.i.d. from a Gaussian distribution and the labels are generated according to a planted weight vector. We first show that mini-batch stochastic gradient descent, when suitably initialized, converges at a geometric rate to the planted model with a number of samples that is optimal up to numerical constants. Next we focus on a parallel implementation where in each iteration the mini-batch gradient is calculated in a distributed manner across multiple processors and then broadcast to a master or all other processors. To reduce the communication cost in this setting we utilize a Quantized Stochastic Gradient Scheme (QSGD) where the partial gradients are quantized. Perhaps unexpectedly, we show that QSGD maintains the fast convergence of SGD to a globally optimal model while significantly reducing the communication cost. We further corroborate our numerical findings via various experiments including distributed implementations over Amazon EC2.
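The planted ReLU setup and the quantized mini-batch update can be sketched in a few lines of standard-library Python. This is a toy version under stated assumptions: the moment-based initialization, the uniform stochastic quantizer, the simulated "workers", and every constant (`lr`, `levels`, batch sizes) are choices made for this example and may differ from what the paper uses.

```python
import math
import random

def relu(z):
    return z if z > 0.0 else 0.0

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def quantize(v, levels, rng):
    """Unbiased stochastic rounding of |v_i| / ||v|| onto `levels` uniform levels."""
    norm = math.sqrt(dot(v, v))
    if norm == 0.0:
        return list(v)
    out = []
    for vi in v:
        scaled = abs(vi) / norm * levels
        low = math.floor(scaled)
        # Round up with probability equal to the fractional part, so the mean is unchanged.
        level = low + (1 if rng.random() < scaled - low else 0)
        out.append(math.copysign(norm * level / levels, vi))
    return out

def partial_gradient(w, batch):
    """Average gradient of 0.5 * (relu(<w, x>) - y)^2 over one worker's samples."""
    g = [0.0] * len(w)
    for x, y in batch:
        z = dot(w, x)
        if z > 0.0:  # the ReLU is flat for z <= 0, so those samples contribute nothing
            for j, xj in enumerate(x):
                g[j] += (z - y) * xj / len(batch)
    return g

def fit_relu(w_star, steps=300, workers=4, per_worker=8, lr=0.5, levels=16, seed=0):
    rng = random.Random(seed)
    d = len(w_star)

    def sample():
        x = [rng.gauss(0.0, 1.0) for _ in range(d)]
        return x, relu(dot(w_star, x))

    # Assumed initialization: E[y * x] = w_star / 2 for Gaussian x, so 2 * mean(y * x) is a rough estimate.
    init = [sample() for _ in range(200)]
    w = [2.0 * sum(y * x[j] for x, y in init) / len(init) for j in range(d)]

    for _step in range(steps):
        grads = []
        for _worker in range(workers):
            batch = [sample() for _ in range(per_worker)]
            grads.append(quantize(partial_gradient(w, batch), levels, rng))
        avg = [sum(g[j] for g in grads) / workers for j in range(d)]
        w = [wj - lr * gj for wj, gj in zip(w, avg)]
    return w

w_star = [1.0, -2.0, 0.5, 0.0, 3.0]  # hypothetical planted weights
w_hat = fit_relu(w_star)
```

Because the labels are noiseless, every per-sample gradient vanishes at the planted weights, and the quantization error scales with the gradient norm. Both sources of randomness therefore fade as the iterate approaches `w_star`, which is why the quantized update can keep a geometric rate in this toy setting.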

Overparameterized Nonlinear Learning: Gradient Descent Takes the Shortest Path?

Dec 25, 2018 · Samet Oymak, Mahdi Soltanolkotabi

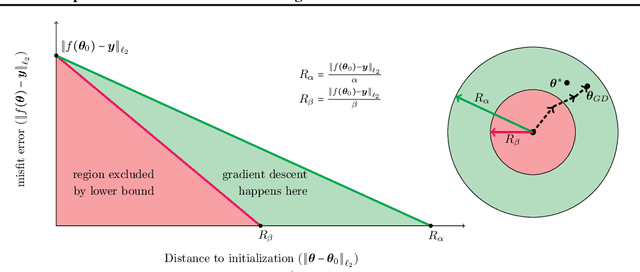

Many modern learning tasks involve fitting nonlinear models to data which are trained in an overparameterized regime where the parameters of the model exceed the size of the training dataset. Due to this overparameterization, the training loss may have infinitely many global minima and it is critical to understand the properties of the solutions found by first-order optimization schemes such as (stochastic) gradient descent starting from different initializations. In this paper we demonstrate that when the loss has certain properties over a minimally small neighborhood of the initial point, first-order methods such as (stochastic) gradient descent have a few intriguing properties: (1) the iterates converge at a geometric rate to a global optimum even when the loss is nonconvex, (2) among all global optima of the loss the iterates converge to one with a near minimal distance to the initial point, (3) the iterates take a near direct route from the initial point to this global optimum. As part of our proof technique, we introduce a new potential function which captures the precise tradeoff between the loss function and the distance to the initial point as the iterations progress. For Stochastic Gradient Descent (SGD), we develop novel martingale techniques that guarantee SGD never leaves a small neighborhood of the initialization, even with rather large learning rates. We demonstrate the utility of our general theory for a variety of problem domains spanning low-rank matrix recovery to neural network training. Underlying our analysis are novel insights that may have implications for training and generalization of more sophisticated learning problems including those involving deep neural network architectures.
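The "shortest path" behavior is easiest to see in the linear special case, where it holds exactly. With one equation and two unknowns there is a whole line of global minima, and gradient descent started at `w0` ends at the point on that line closest to `w0`. The numbers below are made up for illustration; the paper's results concern the harder nonlinear setting, where the corresponding statements are approximate.

```python
def gradient_descent(a, b, w0, lr=0.1, steps=100):
    """Minimize 0.5 * (<a, w> - b)^2 starting from w0."""
    w = list(w0)
    for _ in range(steps):
        residual = sum(ai * wi for ai, wi in zip(a, w)) - b
        w = [wi - lr * residual * ai for wi, ai in zip(w, a)]
    return w

def nearest_solution(a, b, w0):
    """Orthogonal projection of w0 onto the solution set {w : <a, w> = b}."""
    residual = b - sum(ai * wi for ai, wi in zip(a, w0))
    scale = residual / sum(ai * ai for ai in a)
    return [wi + scale * ai for wi, ai in zip(w0, a)]

a, b, w0 = [1.0, 2.0], 5.0, [3.0, -1.0]  # hypothetical underdetermined problem
w_gd = gradient_descent(a, b, w0)
w_proj = nearest_solution(a, b, w0)      # equals [3.8, 0.6] up to rounding
```

Every update moves along `a`, so the iterates travel in a straight line from `w0` to the projection, and the residual halves at each step with this step size. That mirrors properties (1) to (3) of the abstract in the simplest possible case.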

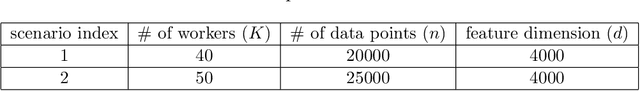

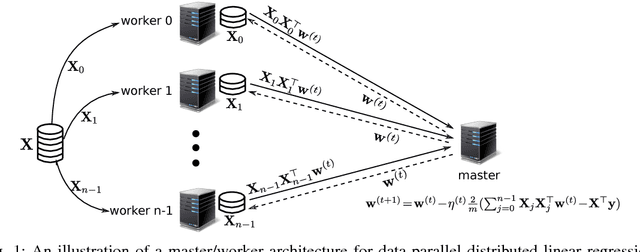

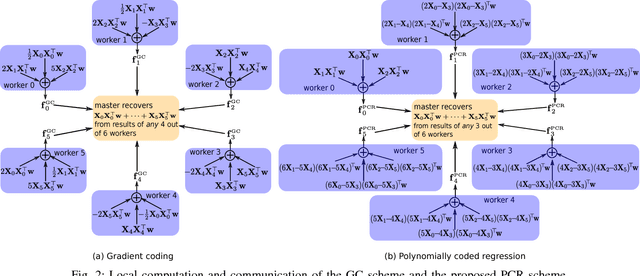

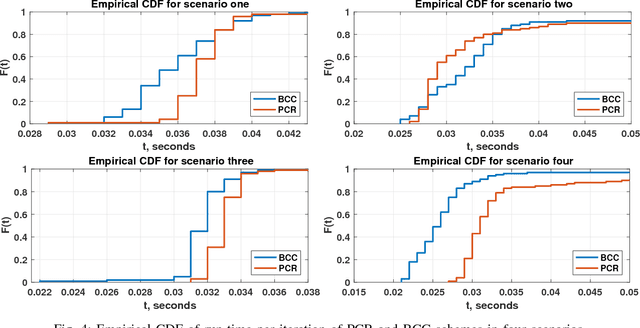

Polynomially Coded Regression: Optimal Straggler Mitigation via Data Encoding

May 24, 2018 · Songze Li, Seyed Mohammadreza Mousavi Kalan, Qian Yu, Mahdi Soltanolkotabi, A. Salman Avestimehr

We consider the problem of training a least-squares regression model on a large dataset using gradient descent. The computation is carried out on a distributed system consisting of a master node and multiple worker nodes. Such distributed systems are significantly slowed down due to the presence of slow-running machines (stragglers) as well as various communication bottlenecks. We propose "polynomially coded regression" (PCR) that substantially reduces the effect of stragglers and lessens the communication burden in such systems. The key idea of PCR is to encode the partial data stored at each worker, such that the computations at the workers can be viewed as evaluating a polynomial at distinct points. This allows the master to compute the final gradient by interpolating this polynomial. PCR significantly reduces the recovery threshold, defined as the number of workers the master has to wait for prior to computing the gradient. In particular, PCR requires a recovery threshold that scales inversely proportionally with the amount of computation/storage available at each worker. In comparison, state-of-the-art straggler-mitigation schemes require a much higher recovery threshold that only decreases linearly in the per worker computation/storage load. We prove that PCR's recovery threshold is near minimal and within a factor two of the best possible scheme. Our experiments over Amazon EC2 demonstrate that compared with state-of-the-art schemes, PCR improves the run-time by 1.50x ~ 2.36x with naturally occurring stragglers, and by as much as 2.58x ~ 4.29x with artificial stragglers.
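The key idea of encoding data so that each worker evaluates a polynomial can be sketched with exact rational arithmetic. In this simplified version, two data blocks are combined through Lagrange basis polynomials, each of five simulated workers holds one coded block and computes the product of its Gram matrix with `w`, and the master recovers the sum over the original blocks from any three results. The block contents, the evaluation points, and the helper names are assumptions made for this example; the encoding used in the paper may differ in its details.

```python
from fractions import Fraction

def matvec(A, v):
    return [sum(a * b for a, b in zip(row, v)) for row in A]

def gram_times(A, w):
    """Return A^T (A w), the quantity each worker computes on its block."""
    return matvec([list(col) for col in zip(*A)], matvec(A, w))

def lagrange_basis(points, j, z):
    """Value at z of the basis polynomial that is 1 at points[j] and 0 at the other points."""
    out = Fraction(1)
    for l, p in enumerate(points):
        if l != j:
            out *= Fraction(z - p, points[j] - p)
    return out

def encode(blocks, betas, alpha):
    """Coded block u(alpha) = sum_j blocks[j] * L_j(alpha), so that u(betas[j]) = blocks[j]."""
    rows, cols = len(blocks[0]), len(blocks[0][0])
    coded = [[Fraction(0)] * cols for _ in range(rows)]
    for j, X in enumerate(blocks):
        c = lagrange_basis(betas, j, alpha)
        for r in range(rows):
            for s in range(cols):
                coded[r][s] += c * X[r][s]
    return coded

def decode(results, betas):
    """Interpolate f(alpha) = u(alpha)^T u(alpha) w from the results and return sum_j f(betas[j])."""
    alphas = list(results)
    total = [Fraction(0)] * len(results[alphas[0]])
    for beta in betas:
        for i, alpha in enumerate(alphas):
            c = lagrange_basis(alphas, i, beta)
            for s in range(len(total)):
                total[s] += c * results[alpha][s]
    return total

blocks = [[[1, 2], [3, 4]], [[0, 1], [1, 0]]]  # two hypothetical data blocks X_1, X_2
w, betas, alphas = [1, -1], [0, 1], [2, 3, 4, 5, 6]

results = {a: gram_times(encode(blocks, betas, a), w) for a in alphas}
fastest = {a: results[a] for a in (2, 4, 6)}   # pretend only these three workers finished
coded_answer = decode(fastest, betas)
direct_answer = [sum(col) for col in zip(*(gram_times(X, w) for X in blocks))]
```

With two blocks the coded block is linear in `alpha`, so each worker's output is a degree-2 polynomial in `alpha` and three evaluations determine it. The master can therefore ignore the two slowest workers, which is the straggler tolerance the abstract refers to.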

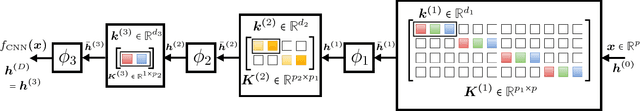

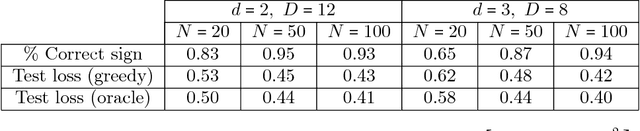

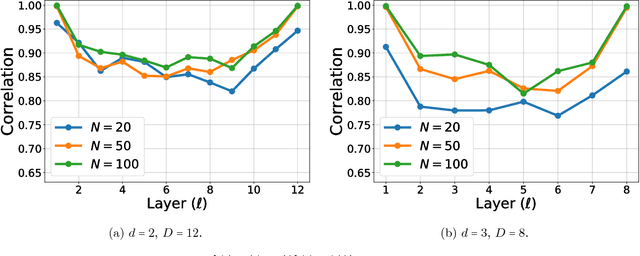

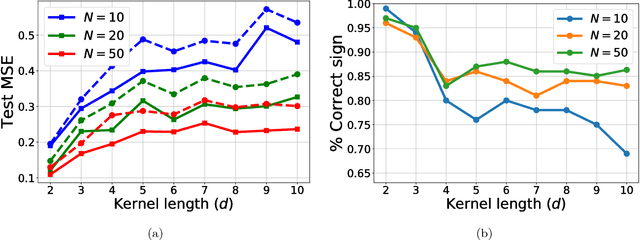

End-to-end Learning of a Convolutional Neural Network via Deep Tensor Decomposition

May 16, 2018 · Samet Oymak, Mahdi Soltanolkotabi

In this paper we study the problem of learning the weights of a deep convolutional neural network. We consider a network where convolutions are carried out over non-overlapping patches with a single kernel in each layer. We develop an algorithm for simultaneously learning all the kernels from the training data. Our approach, dubbed Deep Tensor Decomposition (DeepTD), is based on a rank-1 tensor decomposition. We theoretically investigate DeepTD under a realizable model for the training data where the inputs are chosen i.i.d. from a Gaussian distribution and the labels are generated according to planted convolutional kernels. We show that DeepTD is data-efficient and provably works as soon as the sample size exceeds the total number of convolutional weights in the network. We carry out a variety of numerical experiments to investigate the effectiveness of DeepTD and verify our theoretical findings.
