Maciej Bobowicz

on behalf of EUCanImage working group

Robust Multicentre Detection and Classification of Colorectal Liver Metastases on CT: Application of Foundation Models

Jan 12, 2026

Abstract: Colorectal liver metastases (CRLM) are a major cause of cancer-related mortality, and reliable detection on CT remains challenging in multi-centre settings. We developed a foundation model-based AI pipeline for patient-level classification and lesion-level detection of CRLM on contrast-enhanced CT, integrating uncertainty quantification and explainability. CT data from the EuCanImage consortium (n=2437) and an external TCIA cohort (n=197) were used. Among several pretrained models, UMedPT achieved the best performance and was fine-tuned with an MLP head for classification and an FCOS-based head for lesion detection. The classification model achieved an AUC of 0.90 and a sensitivity of 0.82 on the combined test set, with a sensitivity of 0.85 on the external cohort. Excluding the most uncertain 20 percent of cases improved AUC to 0.91 and balanced accuracy to 0.86. Decision curve analysis showed clinical benefit for threshold probabilities between 0.30 and 0.40. The detection model identified 69.1 percent of lesions overall, increasing from 30 percent to 98 percent across lesion size quartiles. Grad-CAM highlighted lesion-corresponding regions in high-confidence cases. These results demonstrate that foundation model-based pipelines can support robust and interpretable CRLM detection and classification across heterogeneous CT data.
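
The decision curve analysis mentioned in the abstract is usually based on net benefit at a chosen threshold probability. The following is a minimal sketch of that standard formula, not the authors' code; the function names and the toy labels and probabilities are hypothetical.

```python
import numpy as np

def net_benefit(y_true, y_prob, threshold):
    """Net benefit of acting on predictions at a given threshold probability.

    Standard decision-curve formula: TP/n - FP/n * pt / (1 - pt).
    """
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_prob, dtype=float) >= threshold
    n = y_true.size
    tp = np.sum(y_pred & y_true)    # true positives at this threshold
    fp = np.sum(y_pred & ~y_true)   # false positives at this threshold
    return tp / n - fp / n * threshold / (1.0 - threshold)

def net_benefit_treat_all(y_true, threshold):
    """Reference strategy: label every patient as positive."""
    prevalence = np.mean(np.asarray(y_true, dtype=bool))
    return prevalence - (1.0 - prevalence) * threshold / (1.0 - threshold)

# Toy data (hypothetical, for illustration only).
labels = [1, 1, 0, 0]
probs = [0.9, 0.6, 0.35, 0.1]
model_nb = net_benefit(labels, probs, threshold=0.30)
all_nb = net_benefit_treat_all(labels, threshold=0.30)
```

A model shows clinical benefit at a threshold when its net benefit exceeds both the treat-all reference and zero (the treat-none strategy).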

Federated nnU-Net for Privacy-Preserving Medical Image Segmentation

Mar 04, 2025

Abstract: The nnU-Net framework has played a crucial role in medical image segmentation and has become the gold standard in many applications targeting different diseases, organs, and modalities. However, so far it has been used primarily in a centralized approach where the data collected from hospitals are stored in one center and used to train the nnU-Net. This centralized approach has various limitations, such as leakage of sensitive patient information and violation of patient privacy. Federated learning is one approach to training a segmentation model in a decentralized manner that helps preserve patient privacy. In this paper, we propose FednnU-Net, a federated learning extension of nnU-Net. We introduce two novel federated learning methods to the nnU-Net framework - Federated Fingerprint Extraction (FFE) and Asymmetric Federated Averaging (AsymFedAvg) - and experimentally show their consistent performance for breast, cardiac and fetal segmentation using 6 datasets representing samples from 18 institutions. Additionally, to further promote research and deployment of decentralized training in privacy-constrained institutions, we make our plug-n-play framework public. The source code is available at https://github.com/faildeny/FednnUNet .
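
For orientation, the sketch below shows plain federated averaging (FedAvg), the baseline that federated methods of this kind typically build on. It is not FFE or AsymFedAvg, whose details are not given in the abstract; the function name and the toy client weights are hypothetical.

```python
import numpy as np

def federated_average(client_weights, client_sizes):
    """Average per-client parameters, weighted by local sample count.

    client_weights: list of dicts mapping parameter name -> np.ndarray
    client_sizes:   number of training samples held by each client
    """
    total = float(sum(client_sizes))
    averaged = {}
    for name in client_weights[0]:
        # Each client contributes in proportion to its share of the data.
        averaged[name] = sum(
            weights[name] * (size / total)
            for weights, size in zip(client_weights, client_sizes)
        )
    return averaged

# Toy example (hypothetical): two institutions with 1 and 3 samples.
clients = [
    {"conv.weight": np.array([1.0, 2.0])},
    {"conv.weight": np.array([3.0, 4.0])},
]
global_weights = federated_average(clients, client_sizes=[1, 3])
```

Only model parameters are exchanged in this scheme; the images stay at each institution.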

MAMA-MIA: A Large-Scale Multi-Center Breast Cancer DCE-MRI Benchmark Dataset with Expert Segmentations

Jun 19, 2024

Abstract: Current research in breast cancer Magnetic Resonance Imaging (MRI), especially with Artificial Intelligence (AI), faces challenges due to the lack of expert segmentations. To address this, we introduce the MAMA-MIA dataset, comprising 1506 multi-center dynamic contrast-enhanced MRI cases with expert segmentations of primary tumors and non-mass enhancement areas. These cases were sourced from four publicly available collections in The Cancer Imaging Archive (TCIA). Initially, we trained a deep learning model to automatically segment the cases, generating preliminary segmentations that significantly reduced expert segmentation time. Sixteen experts, averaging 9 years of experience in breast cancer, then corrected these segmentations, resulting in the final expert segmentations. Additionally, two radiologists conducted a visual inspection of the automatic segmentations to support future quality control studies. Alongside the expert segmentations, we provide 49 harmonized demographic and clinical variables and the pretrained weights of the well-known nnUNet architecture trained using the DCE-MRI full-images and expert segmentations. This dataset aims to accelerate the development and benchmarking of deep learning models and foster innovation in breast cancer diagnostics and treatment planning.

High-resolution synthesis of high-density breast mammograms: Application to improved fairness in deep learning based mass detection

Sep 20, 2022

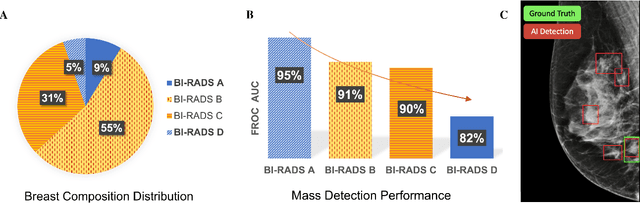

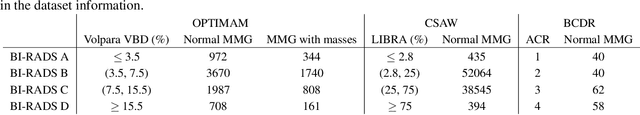

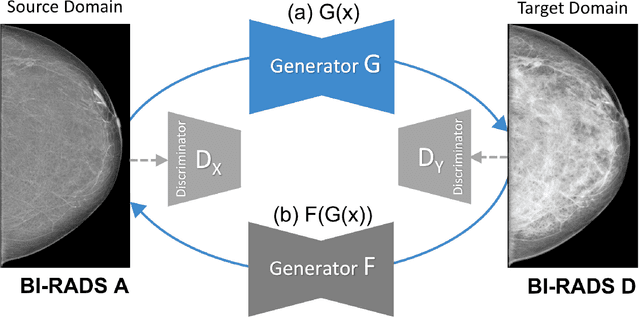

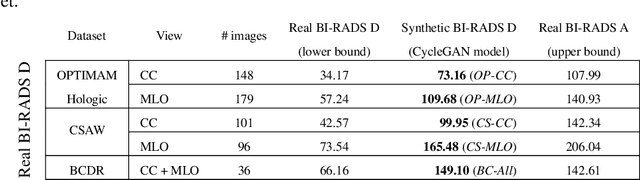

Abstract: Computer-aided detection systems based on deep learning have shown good performance in breast cancer detection. However, detection performance is poorer in high-density breasts, since dense tissue can mask or even simulate masses. As a result, the sensitivity of mammography for breast cancer detection can be reduced by more than 20% in dense breasts. Additionally, extremely dense breasts are associated with an increased risk of cancer compared to low-density breasts. This study aims to improve mass detection performance in high-density breasts by using synthetic high-density full-field digital mammograms (FFDM) as data augmentation when training a breast mass detection model. To this end, a total of five cycle-consistent GAN (CycleGAN) models were trained on three FFDM datasets for low-to-high-density image translation in high-resolution mammograms. The training images were split by BI-RADS breast density category, with BI-RADS A being almost entirely fatty and BI-RADS D extremely dense. Our results showed that the proposed data augmentation technique improved the sensitivity and precision of mass detection in high-density breasts by 2% and 6% in two different test sets and was useful as a domain adaptation technique. In addition, the clinical realism of the synthetic images was evaluated in a reader study involving two expert radiologists and one surgical oncologist.
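
The cycle-consistency term that gives CycleGAN its name can be sketched as follows. This is a generic illustration of the standard L1 cycle loss, not the authors' training code; the two toy "generators" are hypothetical stand-ins for the low-to-high and high-to-low density translation networks.

```python
import numpy as np

def cycle_consistency_loss(x_low, y_high, g_low_to_high, f_high_to_low):
    """L1 cycle loss: translating to the other domain and back should
    recover the original image in both directions."""
    forward = np.mean(np.abs(f_high_to_low(g_low_to_high(x_low)) - x_low))
    backward = np.mean(np.abs(g_low_to_high(f_high_to_low(y_high)) - y_high))
    return forward + backward

# Toy images and generators (hypothetical, for illustration only).
x_low = np.ones((2, 2))     # stands in for a low-density mammogram
y_high = np.zeros((2, 2))   # stands in for a high-density mammogram

# Perfectly invertible pair: the round trip reproduces the input.
loss_consistent = cycle_consistency_loss(
    x_low, y_high, lambda a: a + 1.0, lambda a: a - 1.0
)

# Inconsistent pair: each round trip is off by 1 per pixel.
loss_inconsistent = cycle_consistency_loss(
    x_low, y_high, lambda a: a, lambda a: a + 1.0
)
```

In a full CycleGAN this term is added to the adversarial losses of the two discriminators, which are omitted here.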
