Lifang Yang

AUV-Fusion: Cross-Modal Adversarial Fusion of User Interactions and Visual Perturbations Against VARS

Jul 30, 2025

Abstract: Modern Visual-Aware Recommender Systems (VARS) exploit the integration of user interaction data and visual features to deliver personalized recommendations with high precision. However, their robustness against adversarial attacks remains largely underexplored, posing significant risks to system reliability and security. Existing attack strategies suffer from notable limitations: shilling attacks are costly and detectable, and visual-only perturbations often fail to align with user preferences. To address these challenges, we propose AUV-Fusion, a cross-modal adversarial attack framework that adopts high-order user preference modeling and cross-modal adversary generation. Specifically, we obtain robust user embeddings through multi-hop user-item interactions and transform them via an MLP into semantically aligned perturbations. These perturbations are injected into the latent space of a pre-trained VAE within a diffusion model. By integrating genuine user interaction data with visually plausible perturbations, AUV-Fusion eliminates the need to inject fake user profiles and mitigates the insufficient user preference extraction inherent in traditional visual-only attacks. Comprehensive evaluations on diverse VARS architectures and real-world datasets demonstrate that AUV-Fusion significantly enhances the exposure of target (cold-start) items compared to conventional baseline methods. Moreover, AUV-Fusion maintains exceptional stealth under rigorous scrutiny.
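The pipeline described in the abstract (multi-hop user-item propagation, then an MLP that maps a user embedding to a latent perturbation) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the abstract does not give the propagation rule, the MLP architecture, or how the perturbation is bounded. The symmetric degree normalization (in the style of LightGCN), the two-layer MLP, the `tanh` bound, and every name below (`multi_hop_user_embeddings`, `mlp_perturbation`, `eps`) are assumptions made for illustration.

```python
import numpy as np

def multi_hop_user_embeddings(interactions, user_emb, item_emb, hops=2):
    """Average user embeddings over `hops` rounds of user-item propagation.

    Assumption: symmetric degree normalization; the abstract only says
    "multi-hop user-item interactions".
    """
    R = np.asarray(interactions, dtype=float)             # (n_users, n_items)
    du = np.maximum(R.sum(axis=1, keepdims=True), 1.0)    # user degrees
    di = np.maximum(R.sum(axis=0, keepdims=True), 1.0)    # item degrees
    R_norm = R / np.sqrt(du) / np.sqrt(di)
    u, i = user_emb, item_emb
    layers = [u]
    for _ in range(hops):
        # Each hop passes messages users <- items and items <- users.
        u, i = R_norm @ i, R_norm.T @ u
        layers.append(u)
    return np.mean(layers, axis=0)

def mlp_perturbation(user_vec, W1, b1, W2, b2, eps=0.1):
    """Hypothetical two-layer MLP mapping a user vector to a bounded
    perturbation with the same shape as a (flattened) latent code."""
    h = np.maximum(user_vec @ W1 + b1, 0.0)               # ReLU hidden layer
    return eps * np.tanh(h @ W2 + b2)                     # each entry in [-eps, eps]
```

Under these assumptions, a perturbed latent would be formed as `z_adv = z + delta`, where `z` stands for the VAE encoding of the target item's image and `delta` is the output of `mlp_perturbation`; decoding `z_adv` would then yield the perturbed image.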

Resampling detection of recompressed images via dual-stream convolutional neural network

Jan 15, 2019

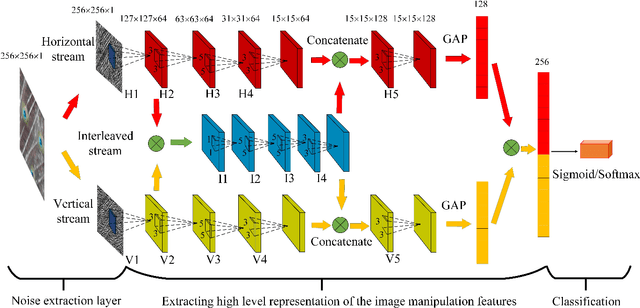

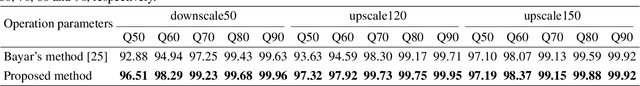

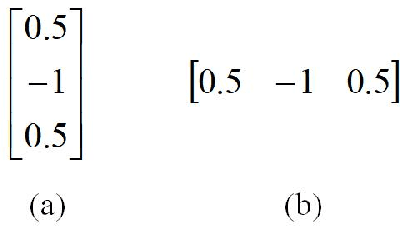

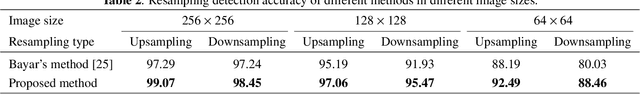

Abstract: Resampling detection plays an important role in identifying image tampering, such as image splicing. It remains difficult in recompressed images, which are produced by resampling a primary JPEG image and then JPEG-compressing it again. Although low-quality primary compression makes detection easier, the task remains challenging because imaging devices widely use medium- or high-quality compression. In this paper, we propose a novel deep learning approach to learn resampling features directly from the recompressed images. To this end, a noise extraction layer based on low-order high-pass filters maps the image into the noise residual domain, where manipulation traces are easier to extract. A dual-stream convolutional neural network (CNN) is presented to capture the resampling traces along different directions, where the horizontal and vertical streams are interleaved and concatenated. Lastly, the learned features are fed into a Sigmoid or Softmax layer, which serves as a binary or multi-class classifier for blind detection or parameter estimation of resampling operations, respectively. Extensive experimental results demonstrate that our proposed method can detect resampling effectively in recompressed images and outperforms the state-of-the-art detectors.
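As a rough illustration of the noise extraction step, the sketch below computes horizontal and vertical noise residuals with a first-order difference filter. The abstract says only "low-order high pass filters", so the specific `[-1, 1]` kernel and the function name `noise_residuals` are assumptions, not the paper's layer definition.

```python
import numpy as np

def noise_residuals(img):
    """Return (horizontal, vertical) first-order difference residuals.

    Assumption: a [-1, 1] kernel stands in for the paper's unspecified
    low-order high-pass filters.
    """
    x = np.asarray(img, dtype=np.float64)
    horiz = x[:, 1:] - x[:, :-1]   # differences between neighboring columns
    vert = x[1:, :] - x[:-1, :]    # differences between neighboring rows
    return horiz, vert
```

In a dual-stream design of the kind described, each residual map would feed its own convolutional stream. Smooth image content is largely cancelled by the differencing, so what remains is dominated by noise and by the periodic interpolation artifacts that resampling leaves behind.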
