Li Weng

Semantic Signatures for Large-scale Visual Localization

May 07, 2020

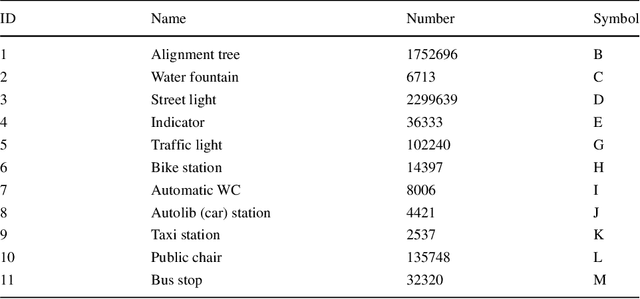

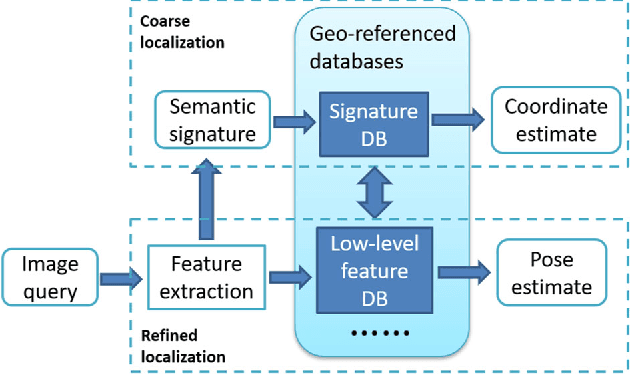

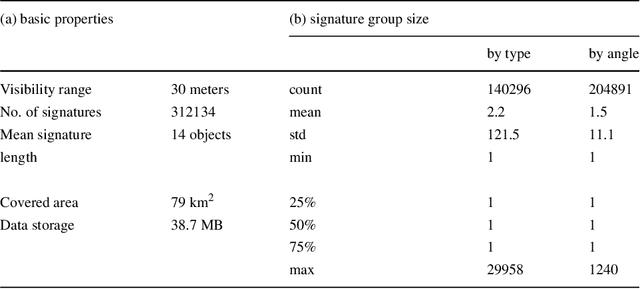

Abstract: Visual localization is a useful alternative to standard localization techniques. It works by utilizing cameras. In a typical scenario, features are extracted from captured images and compared with geo-referenced databases. Location information is then inferred from the matching results. Conventional schemes mainly use low-level visual features. These approaches offer good accuracy but suffer from scalability issues. To assist localization in large urban areas, this work explores a different path by utilizing high-level semantic information. It is found that object information in a street view can facilitate localization. A novel descriptor scheme called "semantic signature" is proposed to summarize this information. A semantic signature consists of the type and angle information of the objects visible at a spatial location. Several metrics and protocols are proposed for signature comparison and retrieval. They illustrate different trade-offs between accuracy and complexity. Extensive simulation results confirm the potential of the proposed scheme in large-scale applications. This paper is an extended version of a conference paper in CBMI'18. A more efficient retrieval protocol is presented with additional experimental results.

* 12 pages, 22 figures, submitted to Multimedia Tools and Applications

Random VLAD based Deep Hashing for Efficient Image Retrieval

Feb 06, 2020

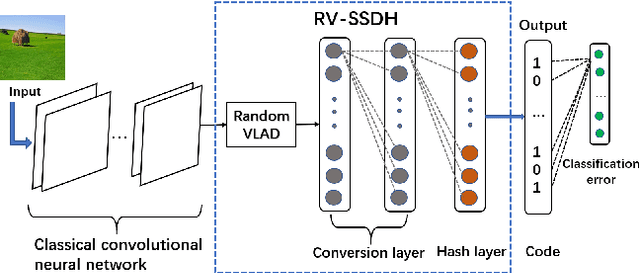

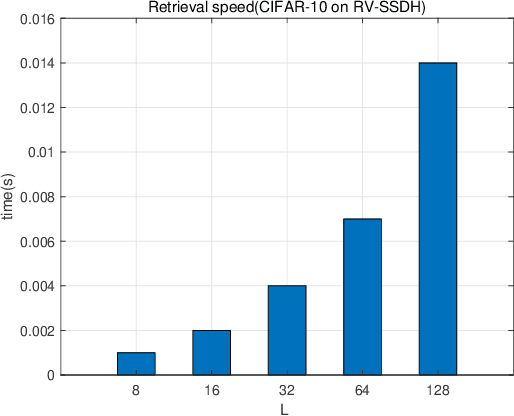

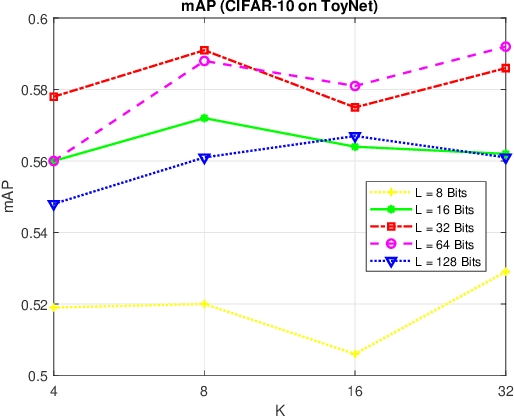

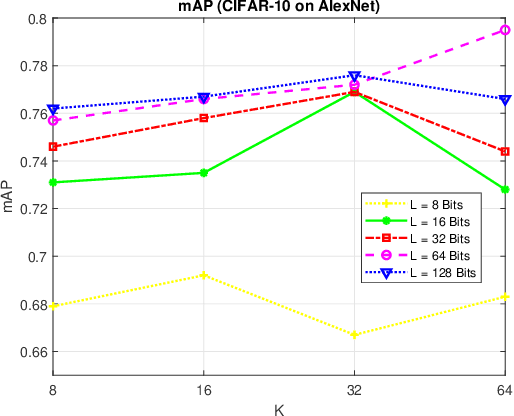

Abstract: Image hash algorithms generate compact binary representations that can be quickly matched by Hamming distance, and have thus become an efficient solution for large-scale image retrieval. This paper proposes RV-SSDH, a deep image hash algorithm that incorporates the classical VLAD (vector of locally aggregated descriptors) architecture into neural networks. Specifically, a novel neural network component is formed by coupling a random VLAD layer with a latent hash layer through a transform layer. This component can be combined with convolutional layers to realize a hash algorithm. We implement RV-SSDH as a point-wise algorithm that can be efficiently trained by minimizing classification error and quantization loss. Comprehensive experiments show that this new architecture significantly outperforms baselines such as NetVLAD and SSDH, and offers a cost-effective trade-off among state-of-the-art methods. In addition, the proposed random VLAD layer achieves satisfactory accuracy with low complexity, and thus shows promise as an alternative to NetVLAD.
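As an illustration of the Hamming-distance matching mentioned in the abstract above, the following is a minimal sketch of how packed binary codes might be compared and ranked. It is not the RV-SSDH implementation: the function names `pack_codes` and `hamming_distances`, the 8-bit code length, and the toy data are all assumptions made for this example.

```python
import numpy as np


def pack_codes(bits: np.ndarray) -> np.ndarray:
    """Pack an (n, n_bits) array of 0/1 values into uint8 bytes (hypothetical helper)."""
    return np.packbits(bits.astype(np.uint8), axis=1)


def hamming_distances(query: np.ndarray, database: np.ndarray) -> np.ndarray:
    """Hamming distance between one packed code and every packed database code."""
    # XOR leaves a 1 wherever the two codes disagree.
    diff = np.bitwise_xor(database, query)
    # Unpack the bytes back to bits and count the disagreements per row.
    return np.unpackbits(diff, axis=1).sum(axis=1)


# Toy 8-bit codes (assumed data, for illustration only).
db_bits = np.array([
    [0, 0, 0, 0, 1, 1, 1, 1],
    [1, 1, 1, 1, 0, 0, 0, 0],
    [0, 0, 0, 0, 1, 1, 1, 0],
])
query_bits = np.array([[0, 0, 0, 0, 1, 1, 1, 1]])

db = pack_codes(db_bits)
query = pack_codes(query_bits)[0]

dists = hamming_distances(query, db)
# A stable sort keeps database order among equally distant codes.
ranking = np.argsort(dists, kind="stable")
```

Because the comparison reduces to an XOR followed by a bit count, the cost per database entry stays small even for long codes, which is the property that makes binary hashes attractive for large-scale retrieval.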
